Visual Sign Language Translation Based on a Skeleton-Aware Neural Network


Abstract: Background

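Sign language is expressed through human movements of the arms, hands, and fingers, and it is the main means of communication for hearing-impaired people. To build a communication bridge with hearing-impaired people, sign language recognition and sign language translation have attracted increasing attention. Sign language recognition is further divided into isolated sign language recognition and continuous sign language recognition: isolated sign language recognition aims to recognize an individual sign as the corresponding word, while continuous sign language recognition aims to recognize continuous signing as the corresponding sequence of sign words. Because the grammatical rules of sign language differ from those of natural language, the output of continuous sign language recognition does not conform to natural-language grammar. Sign language translation, in contrast, aims to translate continuous signing into natural-language text whose output follows natural-language grammar. Existing sign language recognition and translation work usually focuses on extracting local-region or full-frame features from sign language video frames while ignoring skeleton features. Since skeleton information reflects human poses and movements, it can provide important cues for distinguishing signs, and skeleton features can therefore be used to improve the performance of sign language models.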

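Objective: The goal of this work is to use skeleton features to enhance visual feature extraction in sign language recognition and translation models, thereby improving the performance of these models.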

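Method: We propose a skeleton-based network for sign language recognition and translation. First, to obtain skeleton information, we design a self-contained network branch for skeleton extraction. To let skeleton features effectively guide the model in extracting sign-related features, we concatenate the skeleton channel and the RGB channels of each frame for feature extraction. To distinguish the importance of different video clips, we construct a skeleton-based graph convolutional network that assigns a different weight to each clip. Finally, we provide both an end-to-end sign language translation model and a two-stage sign language translation model. In addition, we provide a sign language translation solution for smartphones that deploys the proposed model on smartphones to facilitate communication between hearing-impaired people and hearing people.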

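Results: We conducted experiments on three public datasets. The results show that the proposed model further improves sign language translation performance and outperforms existing sign language models. In addition, we conducted case studies in real scenarios, and the results show that our model is highly robust in real-world settings.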

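Conclusion: The proposed model improves sign language recognition and translation performance by exploiting the skeleton modality. Moreover, visualization analysis shows that the skeleton-based sign language recognition and translation model focuses more effectively on sign-related features, and that the skeleton-based graph convolutional network effectively distinguishes the importance of each video clip and thus attends to the dynamic characteristics of gestures.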

Abstract: Sign languages are mainly expressed by human actions, such as arm, hand, and finger motions. Thus, a skeleton, which reflects human pose information, can provide an important cue for distinguishing signs (i.e., human actions) and can be used for sign language translation (SLT), which aims to translate sign language into spoken language. However, recent neural networks typically focus on extracting local-area or full-frame features while ignoring informative skeleton features. Therefore, this paper proposes a novel skeleton-aware neural network, SANet, for vision-based SLT. Specifically, to introduce the skeleton modality, we design a self-contained branch for skeleton extraction. To efficiently guide feature extraction from videos with skeletons, we concatenate the skeleton channel and RGB channels of each frame for feature extraction. To distinguish the importance of clips (i.e., segmented short videos), we construct a skeleton-based graph convolutional network (GCN) for feature scaling, i.e., giving an importance weight to each clip. Finally, to generate spoken language from features, we provide an end-to-end method and a two-stage method for SLT. In addition, based on SANet, we provide an SLT solution on smartphones to facilitate communication between hearing-impaired people and hearing people. Extensive experiments on three public datasets and case studies in real scenarios demonstrate the effectiveness of our method, which outperforms existing methods.


Keywords:

- human action recognition

- sign language translation

- skeleton

- neural network


1. Introduction

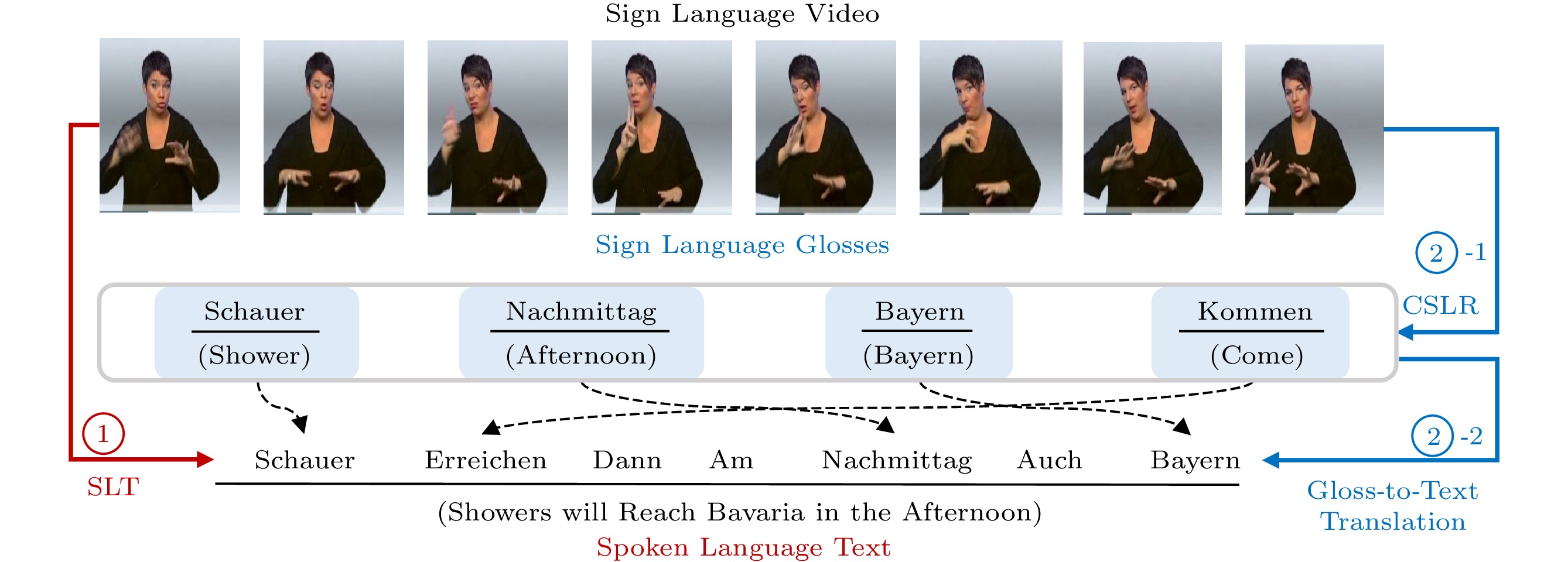

Sign languages are visual languages that hearing-impaired people use to communicate. To bridge the communication gap between deaf and hearing people, many efforts in vision-based sign language understanding have emerged, and existing work can mainly be categorized into sign language recognition (SLR)[1–3] and sign language translation (SLT)[4–8]. Early work on sign language usually focused on SLR, including isolated SLR (ISLR) and continuous SLR (CSLR). ISLR[9] aims at recognizing an isolated sign (i.e., a human gesture) as a gloss, which is similar to the gesture recognition task[10, 11]. CSLR[2, 3, 12, 13] recognizes continuous signs as the corresponding gloss sequence, which is a different and more difficult task than gesture recognition. Here, a “gloss” denotes a sign written as the word with the closest meaning in natural language[14]. However, because sign language and spoken language follow different grammatical rules, the recognized gloss sequence may not be grammatically correct, which hinders the understanding of sign language. Compared with SLR, the objective of SLT is to produce spoken language, i.e., translated sentences that satisfy the grammatical rules and linguistic characteristics of spoken language. As shown in Fig.1, SLT can be achieved in an end-to-end way, i.e., by directly translating the sign language in a video into spoken language. Alternatively, SLT can be decomposed into two stages, i.e., CSLR, which outputs a gloss sequence by processing the video, and gloss-to-text translation[4, 15], which generates spoken language from the gloss sequence.

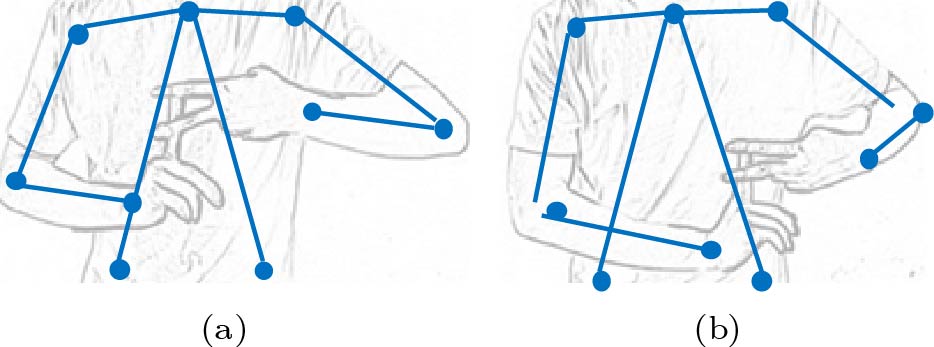

Regarding SLT, existing methods are dedicated to extracting discriminative sign-related features, including full-frame[4, 5, 16] or local-area[15, 17, 18] features, while skeleton information has not been fully studied. We notice that skeleton information, which reflects the spatial structure of human poses, can be used to distinguish signs with different human poses (e.g., different relative positions of hands and arms), especially signs that use the same hand gesture at different positions to represent different meanings, as shown in Fig.2. Given this observation, it is worthwhile to introduce skeleton information into SLT to improve performance.

Only a few studies use skeleton information in SLT[8, 17, 19], and they often suffer from the following problems. First, they require an external device[19] or extra offline preprocessing[8, 17] to obtain skeletons, which hinders end-to-end SLT from videos. Second, they use videos and skeletons as two independent data sources, i.e., they do not fuse them at the initial stage of feature extraction, so the skeletons may not efficiently guide video-based feature extraction. Third, they treat each clip (i.e., a short segmented video) equally, neglecting the different importance of meaningful (e.g., sign-related) clips and meaningless (e.g., end-state) clips. Among these problems, the third one exists not only in skeleton-assisted SLT but also in SLT models in general.

Driven by the above three issues, we design a skeleton-aware neural network, SANet, for SLT. First, we design a self-contained branch for skeleton extraction that does not need external algorithms or tools. Second, to better fuse video-based features with skeleton features, we concatenate the skeleton channel and RGB channels of each frame, so that the skeleton can guide feature extraction from images/videos. Third, to distinguish the importance of clips, we construct a skeleton-based graph convolutional network (GCN) for feature scaling, i.e., giving an importance weight to each clip. Specifically, SANet consists of four components: FrmSke, ClipRep, ClipScl, and LangGen. FrmSke extracts the skeleton from each frame and the frame-level features of a clip with convolutions and deconvolutions. ClipRep enhances the clip representation by adding the skeleton channel. ClipScl scales/weights the clip representation with the skeleton-based GCN. Finally, LangGen generates spoken language either end-to-end with a decoder or in two stages with a gloss-to-text translation sub-network. In addition, a joint optimization strategy is designed for model training to improve SLT performance.

This paper extends our conference paper[20], in which we introduced the skeleton modality to enhance the network's ability to extract sign-related features. The main changes in this paper are as follows. We modify the LangGen module to predict both gloss sequences and spoken language, and propose a novel gloss-to-text translation model that translates the recognized gloss sequences into spoken language. We also design a system that allows users to translate sign language directly on smartphones. The major contributions of this paper are summarized as follows.

● We propose a skeleton-aware neural network (SANet) for SLT, where a self-contained branch is designed for skeleton extraction and a joint training strategy is designed to optimize skeleton extraction and sign language translation jointly.

● We concatenate the extracted skeleton channel and RGB channels at the source data level, which highlights human pose-related features and enhances the clip representation.

● We construct skeleton-based graphs and use a graph convolutional network to scale the clip representation, i.e., to weight the importance of each clip. This highlights meaningful clips while suppressing meaningless ones.

● We conduct extensive experiments on two large-scale public SLT datasets. The experimental results demonstrate that SANet outperforms existing methods. In addition, we design a system that allows users to translate sign language directly on smartphones, and we perform a case study applying SANet in real scenarios to demonstrate its usability.

2. Related Work

In this section, we mainly review the related work on vision-based SLR and SLT, where SLR can be further classified into ISLR and CSLR. Besides, we further analyze the differences between the existing SLT work and our work, especially on skeleton extraction and usage in SLT.

Isolated Sign Language Recognition (ISLR). The objective of ISLR is to recognize one sign as a word or expression[9, 21, 22]. Early work usually adopted traditional hand-crafted visual features, such as the Grassmann Covariance Matrix[23] and the Histogram of Oriented Gradients[24], and utilized the Hidden Markov Model[25] to analyze the gesture sequence of a sign (i.e., a human action) for recognition. However, hand-crafted visual features often lead to poor performance and are limited to SLR with a small number of classes. Recently, deep learning based methods have been introduced for ISLR. These methods employ 2D CNNs[21], 3D CNNs[22], and LSTMs[21] to automatically extract features from videos[26], Kinect sensor data[27], or the moving trajectories of skeleton joints[9], and often achieve better performance.

Continuous Sign Language Recognition (CSLR). The objective of CSLR is to recognize a sign sequence as the corresponding gloss sequence[1, 3]. An intuitive approach is to decompose CSLR into ISLR, where temporal segmentation and the Hidden Markov Model[1] are often adopted. However, temporal segmentation is non-trivial, since transition actions in sign language are diverse and hard to detect[28], and inaccurate temporal segmentation may introduce errors into SLR. Thus, CSLR is more challenging than ISLR. To solve this issue, recent deep learning based methods[2, 28] apply sequence-to-sequence models to CSLR. Moreover, the connectionist temporal classification (CTC) loss function[3, 29] is often adopted for CSLR, but it requires the source and target sequences to have the same order. Since the order of signs in sign language and the order of words in spoken language can differ[4], the gloss sequences obtained by CSLR may not satisfy the grammatical rules of spoken language. However, by applying an appropriate translation model to a recognized gloss sequence, it is possible to generate spoken language from the gloss sequence for SLT.

Sign Language Translation (SLT). The objective of SLT is to translate sign language into spoken language. Initially, SLT was decomposed into two stages[30], i.e., CSLR and gloss-to-text translation. Two-stage methods need both gloss annotations and sentence annotations, and can be optimized in two stages. However, annotating glosses requires specialists and is labor-intensive. Recently, inspired by natural language translation, sequence-to-sequence frameworks[6, 8, 18] and transformers[7, 15, 31] have been adopted to realize end-to-end SLT. To represent sign languages, existing methods focus on extracting full-frame[4, 5, 16] or local-area features[17, 18] from videos. Only a few studies have paid attention to skeleton information (i.e., human pose) in sign languages, although the skeleton is a good indicator of different signs. Specifically, Guo et al.[19] collected skeletons with a depth camera and proposed a hierarchical deep recurrent fusion network for feature extraction. Camgoz et al.[17] extracted 2D pose information using the OpenPose library in an offline stage. Kan et al.[8] extracted human skeletons with two algorithms, i.e., HRNet[32] and OpenPose[33], and introduced a hierarchical spatio-temporal graph neural network for feature extraction. These methods usually rely on an external device or offline preprocessing for skeleton extraction, which may hinder end-to-end SLT from videos. Besides, they feed RGB videos and skeletons into a neural network in parallel for feature extraction and give each clip the same importance weight, which may limit the quality of the feature representation. Unlike the above work, we extract skeletons with a self-contained branch instead of external algorithms. Moreover, we fuse videos and skeletons at the source data level by concatenating skeleton and RGB channels, and construct a skeleton-based GCN to weigh the importance of each clip.

3. Proposed Approach

When given a sign language video $X=(f_1, f_2, \dots, f_u)$ with $u$ frames, the objective of our model is to learn the conditional probability $p(Y|X)$ of generating a spoken language sentence $Y=(w_1, w_2, \dots, w_v)$ with $v$ words. Besides, when gloss annotations are provided, the objective of our model is further to predict the corresponding gloss sequence $G=(g_1, g_2, \dots, g_\tau)$ with $\tau$ glosses and then predict the spoken language sentence $Y$ based on $G$.

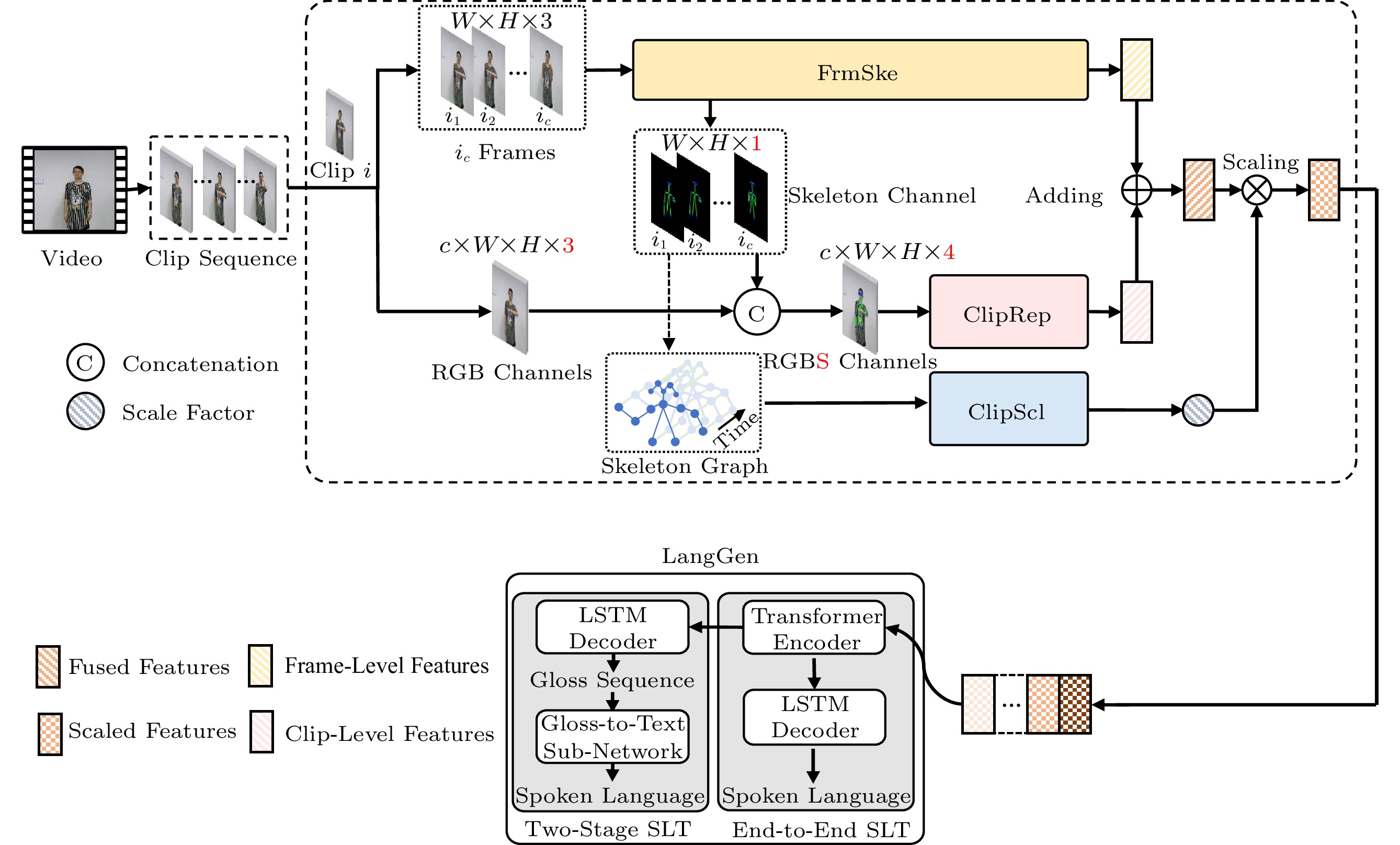

To achieve the goal of SLT, we propose Skeleton-Aware neural Network (SANet), which consists of FrmSke, ClipRep, ClipScl, and LangGen modules. As shown in Fig.3, a sign language video is first segmented into consecutive equal-length clips with 50% overlap. For each clip, we first use FrmSke to extract skeletons from each frame and get frame-level features. Then, we concatenate the skeleton channel and RGB channels of each frame in the clip, and adopt ClipRep to extract clip-level features, where clip-level features are further added with frame-level features to get fused features of a clip. Meanwhile, we utilize the skeletons in a clip to construct a spatial-temporal graph and adopt ClipScl to calculate the scale factor, which will be multiplied with the fused features to get the scaled feature representation of a clip. Finally, the scaled features of all clips are sent to LangGen for generating spoken language in an end-to-end way or a two-stage way.
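The clip segmentation step can be sketched as follows. This is a minimal illustration, assuming a clip length of 16 frames (the clip length mentioned for Fig.6) and a stride of half the clip length to obtain the 50% overlap; the function name `segment_into_clips` is hypothetical, and the text does not say how trailing frames that cannot fill a whole clip are handled, so this sketch simply drops them.

```python
def segment_into_clips(frames, clip_len=16):
    """Split a frame sequence into equal-length clips with 50% overlap.

    Consecutive clips start clip_len // 2 frames apart, so every clip shares
    half of its frames with the next one. Trailing frames that cannot fill a
    whole clip are dropped (an assumption of this sketch).
    """
    if clip_len < 2 or clip_len % 2:
        raise ValueError("clip_len must be an even number >= 2")
    stride = clip_len // 2  # 50% overlap between neighbouring clips
    return [frames[s:s + clip_len]
            for s in range(0, len(frames) - clip_len + 1, stride)]


# Usage: 40 frame indices -> clips starting at frames 0, 8, 16, 24
clips = segment_into_clips(list(range(40)), clip_len=16)
print(len(clips), [c[0] for c in clips])  # 4 [0, 8, 16, 24]
```

Each frame except those at the very beginning and end of the video thus appears in two clips, which gives ClipScl a smooth sequence of overlapping windows to weight.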

3.1 Frame-Level Skeleton Extraction

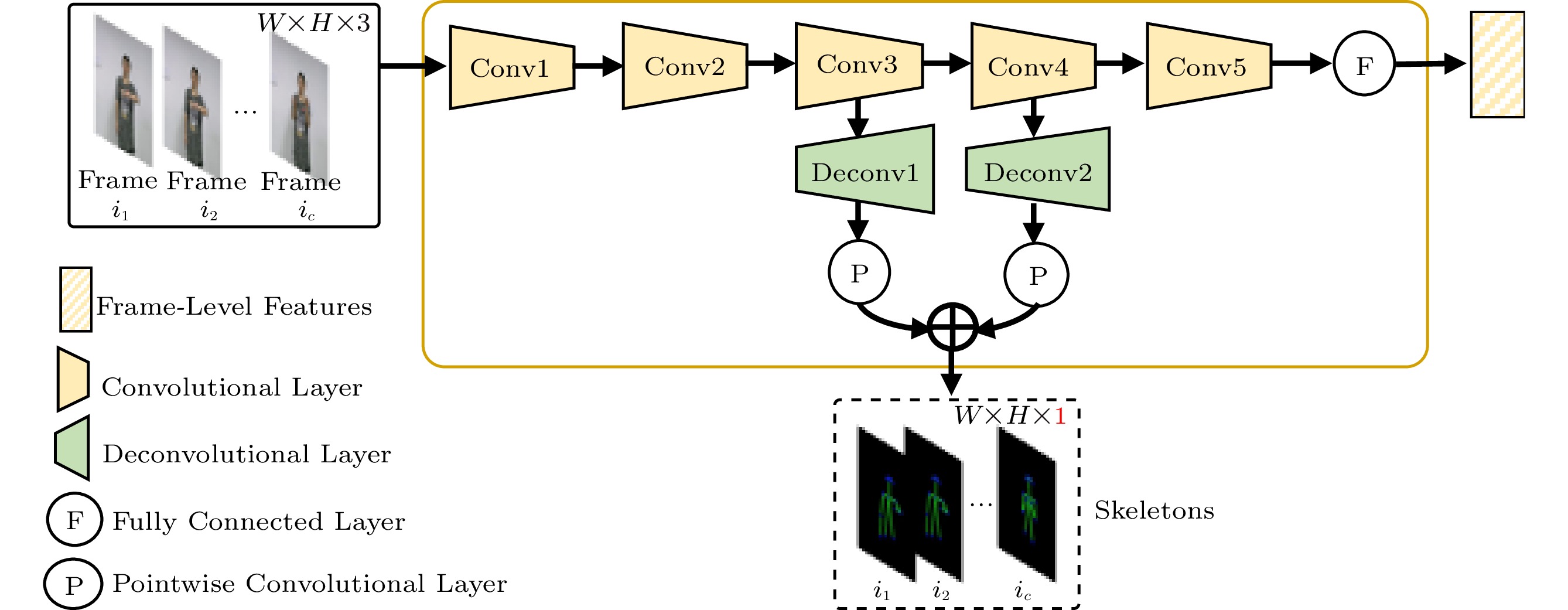

A frame is the minimal unit of a sign language video which contains the spatial structure of human gestures and detailed information on face, hands, fingers, etc. Therefore, we split the clip into frames and propose a FrmSke module to extract skeleton and frame-level features from each frame. As shown in Fig.4, the backbone network in FrmSke is a compressed variant of the VGG model[34], where the number of channels in convolutional layers is reduced to one fourth of that in the original VGG, to reduce memory usage and make the model work on our platform.

Figure 4. FrmSke extracts skeletons from each image and frame-level features of each clip[20].

Skeleton Extraction. To obtain the human skeleton from a frame, we design two parallel deconvolutional layers that upsample the low-resolution representations produced by the Conv3 and Conv4 layers, as shown in Fig.4. Specifically, the Deconv1 layer adopts one $3\times3$ deconvolution with stride 2 for $2\times$ upsampling, and the Deconv2 layer adopts two consecutive $3\times3$ deconvolutions with stride 2 for $4\times$ upsampling. Then, we add two pointwise convolution layers and an element-wise sum after the Deconv1 and Deconv2 layers to generate $K$ heatmaps, where each heatmap $\boldsymbol{M}^{H}_{k}$, $k\in[1, K]$, contains one keypoint of the skeleton (the position with the highest heat value). Here, $K$ is set to 14, corresponding to the nose, neck, both eyes, both ears, both shoulders, both elbows, both wrists, and both hips. After that, we generate the skeleton channel (i.e., a 2D matrix) $\boldsymbol{f}_{i}^{S}$ of each frame $f_i$ by adding the corresponding elements of the $K$ heatmaps.
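The post-processing after the heatmap head can be sketched in pure Python: each heatmap yields one keypoint at its highest-valued position, and the skeleton channel is the element-wise sum of all heatmaps. This is a minimal sketch under stated assumptions: heatmaps are nested lists of shape $h \times w$, the function names are hypothetical, the toy example uses $K = 2$ instead of 14, and the deconvolution layers that produce the heatmaps are not shown.

```python
def heatmap_keypoints(heatmaps):
    """Return the (row, col) position of the highest value in each heatmap."""
    keypoints = []
    for hm in heatmaps:
        positions = [(r, c) for r in range(len(hm)) for c in range(len(hm[0]))]
        keypoints.append(max(positions, key=lambda rc: hm[rc[0]][rc[1]]))
    return keypoints


def skeleton_channel(heatmaps):
    """Element-wise sum of the K heatmaps -> one 2D skeleton channel f^S."""
    h, w = len(heatmaps[0]), len(heatmaps[0][0])
    channel = [[0.0] * w for _ in range(h)]
    for hm in heatmaps:
        for r in range(h):
            for c in range(w):
                channel[r][c] += hm[r][c]
    return channel


# Usage with two toy 3x3 heatmaps (K = 2 instead of 14)
hm1 = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
hm2 = [[0, 0, 0.5], [0, 0, 0], [0, 0, 0]]
print(heatmap_keypoints([hm1, hm2]))  # [(1, 1), (0, 2)]
print(skeleton_channel([hm1, hm2]))   # [[0.0, 0.0, 0.5], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
```

Summing rather than taking the per-pixel maximum keeps every keypoint's full heat distribution in the single channel that is later concatenated with RGB.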

Frame-Level Clip Representation. As shown in Fig.4, to obtain sign-language-related features in a frame, consecutive convolutions are first used to extract features such as hand shapes and facial expressions from each frame in a clip. Then, these features (i.e., feature maps) are concatenated, flattened, and sent to a fully connected layer to obtain a feature vector $\boldsymbol{F}_{m}$ of the clip with $N_m = 4\,096$ elements.

3.2 Channel Extended Clip Representation

Considering an action may last for several frames, a clip (i.e., a segmented short video) with several consecutive frames can capture the short action (i.e., continuous/dynamic gestures) in sign languages. Thus, we design the ClipRep module to track the dynamic changes of human poses and extract the clip representation. As shown in Fig.5, we extend the channels of each frame by concatenating the skeleton channel and the original RGB channels, and then adopt Pseudo 3D Residual Networks (P3D)[35] as the backbone network to extract clip-level features.

Figure 5. ClipRep extracts clip-level features, where each frame of the clip is extended to four channels by concatenating the skeleton channel and RGB channels[20].

Channel Extension with Skeleton. In a clip $C = \{f_i, \dots, f_{i+c-1}\}$ with $c$ frames, each frame $f_i = [f_{i}^{R}, f_{i}^{G}, f_{i}^{B}]$ originally consists of RGB channels, where $[\cdot]$ denotes the concatenation operation. In SANet, we extend the channels of each frame by concatenating the skeleton channel $\boldsymbol{f}_{i}^{S}$ as the fourth channel. Then, we get four channels (i.e., RGBS channels) $f_i^{e} = [f_{i}^{R}, f_{i}^{G}, f_{i}^{B}, f_{i}^{S}]$ for each frame, as shown in Fig.5. After that, the clip with RGBS frames $C^{e} = \{f_i^{e}, \dots, f_{i+c-1}^{e}\}$ is used for clip-level feature extraction.
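The channel extension amounts to appending one plane per frame. A minimal sketch, assuming each frame is stored channel-first as a list of 2D planes and that the skeleton channel has already been brought to the frame resolution (the text does not state how resolutions are matched); the helper names are hypothetical.

```python
def extend_with_skeleton(frame_rgb, skeleton):
    """Concatenate f^S after the R, G, B channels -> [f^R, f^G, f^B, f^S]."""
    if len(frame_rgb) != 3:
        raise ValueError("expected three channels (R, G, B)")
    h, w = len(frame_rgb[0]), len(frame_rgb[0][0])
    if len(skeleton) != h or any(len(row) != w for row in skeleton):
        raise ValueError("skeleton channel must match the frame resolution")
    return list(frame_rgb) + [skeleton]


def extend_clip(clip_rgb, skeletons):
    """Build C^e by extending every frame of a clip with its own skeleton channel."""
    if len(clip_rgb) != len(skeletons):
        raise ValueError("need exactly one skeleton channel per frame")
    return [extend_with_skeleton(f, s) for f, s in zip(clip_rgb, skeletons)]


# Usage: a toy clip of two 2x2 frames
plane = [[1, 2], [3, 4]]
frame = [plane, plane, plane]      # R, G, B (toy values)
skel = [[0, 1], [0, 0]]
clip_e = extend_clip([frame, frame], [skel, skel])
print(len(clip_e), len(clip_e[0]))  # 2 4
```

Because the skeleton enters as an ordinary input channel, the first 3D convolution of ClipRep only needs four input channels instead of three; the rest of the backbone is unchanged.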

Enhanced Clip Representation. Based on clip $C^{e}$ with extended RGBS frames, we introduce the P3D block[35] to extract clip features. The P3D block[35] decomposes a traditional 3D convolution into one (2D) spatial filter ($1\times3\times3$), one (1D) temporal filter ($3\times1\times1$), and two pointwise filters ($1\times1\times1$), aiming to reduce computation and enable a deeper network for better feature extraction. Combining these filters in different ways yields different modules (i.e., P3D-A, P3D-B, and P3D-C)[35]. In ClipRep, each clip $C^{e}$ is processed by a 3D convolution and P3D blocks, and then residual units, an average pooling layer, and a fully connected layer are adopted to obtain the feature vector $\boldsymbol{F}_c$ with $N_c = 4\,096$ elements, as shown in Fig.5.
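To make the computational motivation of the decomposition concrete, the following sketch counts the weights of a full $3\times3\times3$ convolution versus a $1\times3\times3$ spatial filter followed by a $3\times1\times1$ temporal filter in a serial arrangement. The channel width of 64 is an arbitrary illustrative value, biases are ignored, and the pointwise filters are omitted, so the numbers describe only the spatial-temporal factorization, not the exact P3D blocks used in ClipRep.

```python
def conv3d_weights(c_in, c_out, kt, kh, kw):
    """Weights of a 3D convolution with a kt x kh x kw kernel (bias ignored)."""
    return c_in * c_out * kt * kh * kw


def spatial_temporal_weights(c_in, c_mid, c_out):
    """A 1x3x3 spatial filter followed by a 3x1x1 temporal filter (bias ignored)."""
    spatial = conv3d_weights(c_in, c_mid, 1, 3, 3)
    temporal = conv3d_weights(c_mid, c_out, 3, 1, 1)
    return spatial + temporal


full = conv3d_weights(64, 64, 3, 3, 3)
decomposed = spatial_temporal_weights(64, 64, 64)
print(full, decomposed, round(decomposed / full, 3))  # 110592 49152 0.444
```

With equal widths, the factorized pair needs $(9 + 3)/27 \approx 44\%$ of the weights of the full kernel, which is what allows a deeper network at a similar cost.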

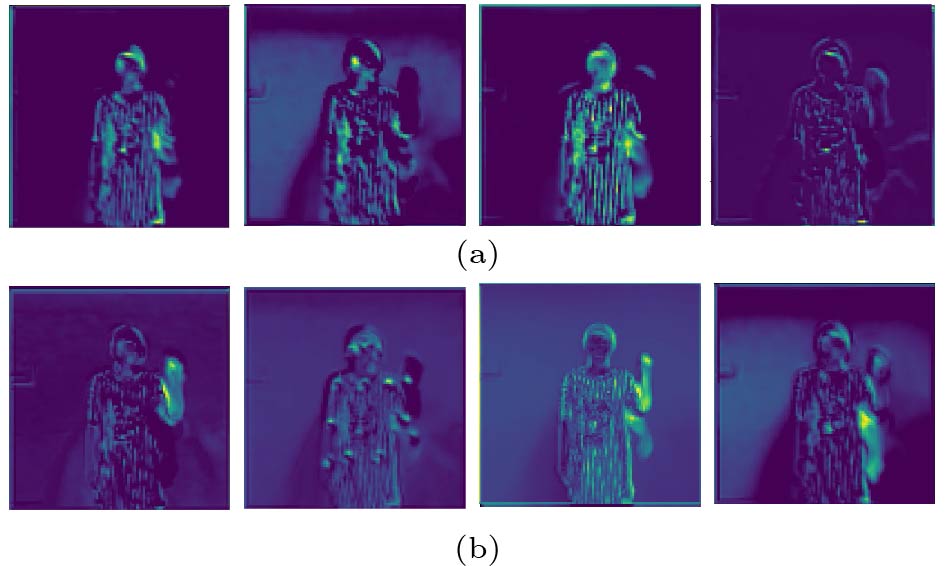

To verify whether the added skeleton channel can guide the network to focus on sign-related features, we make a comparison on the intermediate feature map (i.e., after the first P3D block) in ClipRep without or with the skeleton channel. As shown in Fig.6 (b), the areas with brighter colors indicate that the added skeleton can highlight the features related to signs, e.g., gesture changes and human poses, thus enhancing the clip representation.

Figure 6. Intermediate feature maps after the first P3D block (a) without or (b) with the skeleton channel. In each case, we show four examples selected from 16 frames in a clip[20].

3.3 Skeleton-Aware Clip Scaling

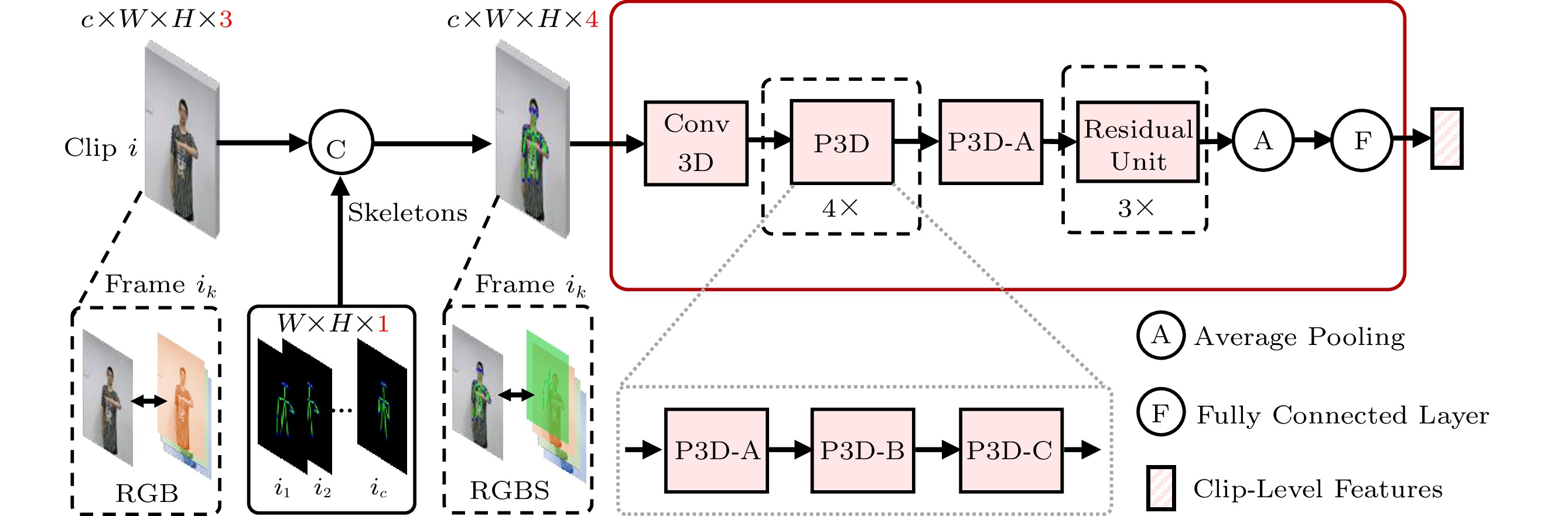

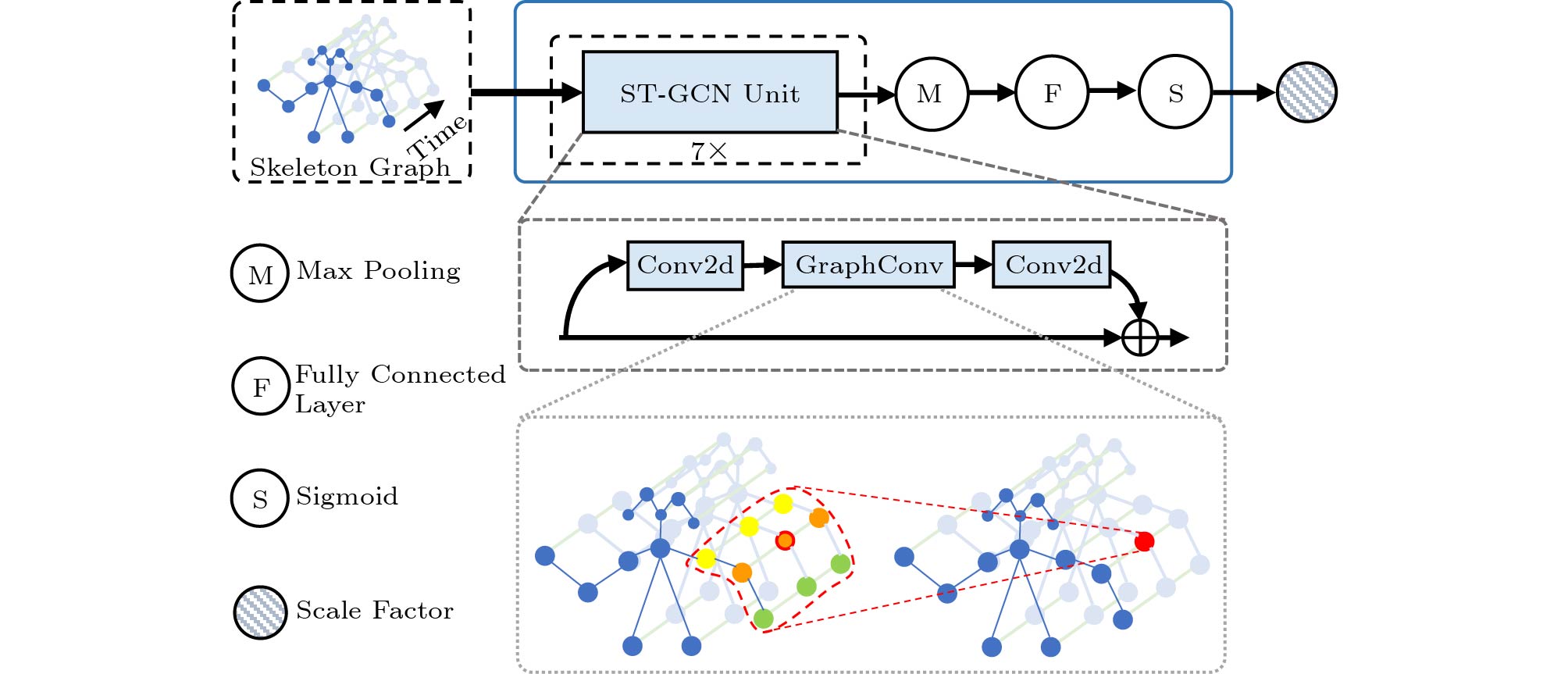

Considering that a human action can correspond to a meaningful sign, a transition action, an end state, etc., there exist redundant or less important clips in sign language videos. That is to say, not all clips are equally important for SLT. To track the human actions in a clip and weight each clip, we design the ClipScl module, which first constructs a skeleton-based graph and applies a graph convolutional network (GCN) to generate a scale factor, and then scales/weights the feature vector of each clip with the scale factor.

Skeleton-Based GCN. For a clip $C$ with $c$ frames, the set of keypoint nodes in the skeletons is denoted as $V$, and the set of edges (i.e., connections) between nodes is denoted as $E$. Given $V$ and $E$, we construct a skeleton-based graph $G = (V, E)$. Specifically, the keypoints of the $i$-th skeleton in a clip are $V_i = (\upsilon_{i_1}, \upsilon_{i_2}, \dots, \upsilon_{i_K})$, $i\in[1, c]$. Here, $\upsilon_{i_j}$, $j\in[1, K]$, denotes the $j$-th keypoint/node of the $i$-th skeleton, and $K = 14$ is the number of keypoints in a skeleton. The node set is then $V = \{\upsilon_{i_j} \mid i\in[1, c], j\in[1, K]\}$. The edge set $E = E_a \cup E_e$ consists of the intra-skeleton edge set $E_a = \{\upsilon_{i_p}\upsilon_{i_q} \mid (p, q)\in S\}$, where $S$ is the set of naturally connected body joints in a skeleton, and the inter-skeleton edge set $E_e = \{\upsilon_{i_p}\upsilon_{j_p} \mid i, j\in[1, c], |i-j| = 1\}$ (i.e., edges between the corresponding nodes of two adjacent skeletons), as shown in Fig.7. In the constructed skeleton-based graph, the initial feature vector $\boldsymbol{\upsilon}^{f}$ of each node is its coordinate vector $(x, y)$ in the frame.
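The graph construction can be sketched as below, with nodes indexed by (frame $i$, keypoint $j$) using 0-based indices. The text does not list the set $S$ of naturally connected joints, so `BODY_EDGES` is a hypothetical choice over the 14 keypoints in the order given in Section 3.1; only the structure (intra-skeleton edges from $S$, inter-skeleton edges between the same keypoint in adjacent frames) follows the description. Each undirected edge is listed once.

```python
K = 14
# Hypothetical keypoint order: 0 nose, 1 neck, 2/3 eyes, 4/5 ears,
# 6/7 shoulders, 8/9 elbows, 10/11 wrists, 12/13 hips (left/right).
BODY_EDGES = [
    (0, 1), (0, 2), (0, 3), (2, 4), (3, 5),  # head
    (1, 6), (1, 7), (6, 8), (7, 9),          # shoulders, upper arms
    (8, 10), (9, 11), (1, 12), (1, 13),      # forearms, torso
]


def build_skeleton_graph(num_frames, k=K, body_edges=BODY_EDGES):
    """Return (nodes, E_a, E_e) for a clip containing num_frames skeletons."""
    nodes = [(i, j) for i in range(num_frames) for j in range(k)]
    # E_a: naturally connected joints within each skeleton
    intra = [((i, p), (i, q)) for i in range(num_frames) for p, q in body_edges]
    # E_e: the same keypoint p in adjacent skeletons (|i - j| = 1)
    inter = [((i, p), (i + 1, p)) for i in range(num_frames - 1) for p in range(k)]
    return nodes, intra, inter


nodes, e_a, e_e = build_skeleton_graph(16)
print(len(nodes), len(e_a), len(e_e))  # 224 208 210
```

For a 16-frame clip the graph has $16 \times 14$ nodes, $16 \times 13$ intra-skeleton edges for this 13-edge body tree, and $15 \times 14$ temporal edges.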

Figure 7. ClipScl constructs a skeleton-based graph and uses GCN to calculate the scale factor, which is used for weighting the importance of each clip[20].

With the skeleton-based graph, we adopt the graph convolutional network (GCN), which has received widespread attention in human action recognition, to calculate the scale factor (i.e., the importance weight) of a clip. Specifically, ClipScl consists of seven spatial-temporal graph convolution (ST-GCN) units, in which the number of channels is reduced to one quarter of that in the original ST-GCN to lower the memory requirement of the model, as shown in Fig.7. Then, we adopt max pooling and a fully connected layer to obtain a feature vector, which is passed to a sigmoid function to produce the scale factor $s_f$, i.e., a value in $(0, 1)$, as follows:

$$s_f = \mathrm{Sigmoid}\big(\mathrm{ST\text{-}GCN}^{+}(\boldsymbol{V}^{f}, E)\big),$$

where $\boldsymbol{V}^{f}$ is the feature set of the nodes in $V$, $\mathrm{ST\text{-}GCN}^{+}(\cdot)$ denotes the combination of seven ST-GCN units, a max pooling layer, and a fully connected layer, and $\mathrm{Sigmoid}(\cdot)$ denotes the sigmoid function.
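The data flow of this formula (graph convolution, max pooling over nodes, a fully connected layer, and a sigmoid) can be sketched in pure Python. This is a deliberately simplified stand-in, not ClipScl itself: the seven ST-GCN units are replaced by a single graph convolution with the common symmetric normalization $D^{-1/2}(A+I)D^{-1/2}$ (a substitution that ignores ST-GCN's partitioning and temporal kernels), and all weights are toy values rather than learned parameters.

```python
import math


def normalized_adjacency(num_nodes, edges):
    """Symmetrically normalized adjacency D^-1/2 (A + I) D^-1/2 of an undirected graph."""
    a = [[1.0 if r == c else 0.0 for c in range(num_nodes)] for r in range(num_nodes)]
    for u, v in edges:
        a[u][v] = a[v][u] = 1.0
    deg = [sum(row) for row in a]
    return [[a[r][c] / math.sqrt(deg[r] * deg[c]) for c in range(num_nodes)]
            for r in range(num_nodes)]


def graph_conv(a_hat, feats, weight):
    """One graph convolution layer: ReLU(A_hat X W)."""
    d_out = len(weight[0])
    xw = [[sum(x[k] * weight[k][o] for k in range(len(x))) for o in range(d_out)]
          for x in feats]
    return [[max(0.0, sum(a_hat[r][c] * xw[c][o] for c in range(len(xw))))
             for o in range(d_out)] for r in range(len(a_hat))]


def clip_scale_factor(a_hat, coords, weight, fc_w, fc_b):
    """Graph conv -> max pooling over nodes -> fully connected -> sigmoid."""
    h = graph_conv(a_hat, coords, weight)
    pooled = [max(node[o] for node in h) for o in range(len(h[0]))]
    logit = sum(w * p for w, p in zip(fc_w, pooled)) + fc_b
    return 1.0 / (1.0 + math.exp(-logit))


# Usage: three nodes in a chain with (x, y) coordinates and toy weights
a_hat = normalized_adjacency(3, [(0, 1), (1, 2)])
coords = [[0.2, 0.5], [0.4, 0.5], [0.6, 0.3]]
s_f = clip_scale_factor(a_hat, coords, [[1.0, 0.0], [0.0, 1.0]], [0.5, -0.5], 0.0)
print(0.0 < s_f < 1.0)  # True
```

Max pooling over nodes makes the output independent of how many nodes the clip graph has, so a single scalar per clip comes out regardless of clip length.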

Fused Feature Scaling. At this point, we have obtained the frame-level feature vector $\boldsymbol{F}_m$, the clip-level feature vector $\boldsymbol{F}_c$, and the scale factor $s_f$ for each clip $C$. To fuse features of different levels and assign an importance weight to each clip, we first fuse $\boldsymbol{F}_m$ and $\boldsymbol{F}_c$ by element-wise addition $\oplus$. Then, we scale the fused feature vector by multiplication $\otimes$ with $s_f$ to get the scaled feature vector $\boldsymbol{F}_f$:

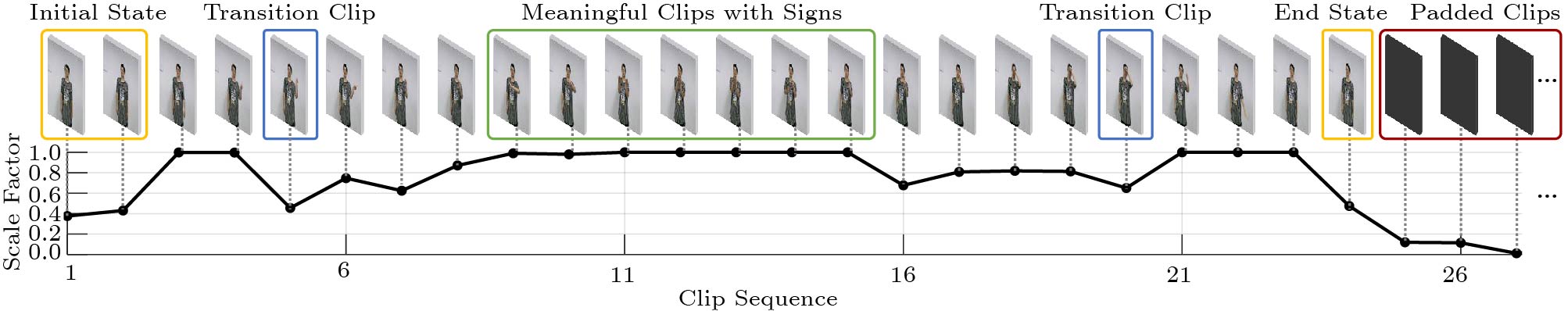

$$\boldsymbol{F}_f = (\boldsymbol{F}_m \oplus \boldsymbol{F}_c) \otimes s_f.$$

To verify whether ClipScl can learn the scale factor of each clip, we visualize the calculated scale factor $s_f$ for each clip[20]. As shown in Fig.8, clips with meaningful signs (i.e., clips in the green rectangle) have larger $s_f$, while clips without signs or containing transition actions have smaller $s_f$. This indicates that the designed ClipScl module can efficiently track the dynamic changes of human poses with skeletons and distinguish the importance of different clips (i.e., ClipScl can highlight meaningful clips while suppressing meaningless ones).

Figure 8. Visualization of the scale factor for each clip[20]. Meaningful clips with signs have larger scale factors, while meaningless clips have smaller scale factors.
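The fused feature scaling step is a single element-wise expression. A minimal sketch with toy 2-element vectors in place of the 4096-element $\boldsymbol{F}_m$ and $\boldsymbol{F}_c$; the function name is hypothetical.

```python
def fuse_and_scale(f_m, f_c, s_f):
    """F_f = (F_m + F_c) * s_f: element-wise sum, then scaling by the clip's factor."""
    if len(f_m) != len(f_c):
        raise ValueError("F_m and F_c must have the same length")
    return [(m + c) * s_f for m, c in zip(f_m, f_c)]


# Usage with toy 2-element vectors (SANet uses 4096 elements)
print(fuse_and_scale([1.0, 2.0], [3.0, -1.0], 0.5))  # [2.0, 0.5]
print(fuse_and_scale([1.0, 2.0], [3.0, -1.0], 0.1))  # a low-importance clip is damped
```

Since $s_f$ multiplies the whole vector, a clip with a small scale factor contributes a proportionally smaller input to the transformer encoder, while its direction in feature space is preserved.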

4. Spoken Language Generation

With scaled feature vectors, we propose LangGen which provides two ways to generate the final spoken language, i.e., an end-to-end method for translation and a two-stage method for translation.

4.1 End-to-End Translation

When gloss annotations are unavailable, our model directly translates the sign language in a video into spoken language in an end-to-end way. That is, the end-to-end model relies on gloss annotations in neither the training nor the inference stage. As shown in Fig.3, for the scaled feature sequence $\boldsymbol{F} = \{\boldsymbol{F}_f^{t}\}_{t = 1}^{l}$, where $l$ is the number of clips, we first adopt a three-layer transformer[36] to learn the temporal relationships between clips, and then adopt an LSTM decoder with an attention mechanism to generate the spoken language.

Transformer Encoder. With the scaled feature sequence $\boldsymbol{F}$, we adopt a three-layer transformer to extract temporal features and generate hidden states $\boldsymbol{h}$ as follows:

$$\boldsymbol{h} = \mathrm{Encoder}(\boldsymbol{F}),$$

where $\mathrm{Encoder}(\cdot)$ denotes the three-layer transformer encoder.

LSTM Decoder. With the generated hidden states $\boldsymbol{h}$, we use one LSTM layer as the decoder to decode words step by step. Specifically, the decoder outputs the prediction probability $p(\hat{y}_{t,j})$, i.e., the probability that the predicted word $\hat{y}_{t}$ at the $t$-th time step is the $j$-th word in the vocabulary. The decoder starts with the token “[BOS]”, which indicates the beginning of a sequence, and stops when it predicts the token “[EOS]”, which indicates the end of the prediction.
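The [BOS]/[EOS] decoding loop can be sketched independently of the network. The text does not say whether greedy or beam search is used, so this sketch assumes greedy decoding; `step_fn` is a hypothetical stand-in for one LSTM-with-attention step that returns a probability for every vocabulary entry, and `max_steps` is an assumed safety cap. The scripted step function and the German toy words exist only to make the loop runnable.

```python
BOS, EOS = "[BOS]", "[EOS]"


def greedy_decode(step_fn, init_state, vocab, max_steps=30):
    """Greedy decoding from [BOS] until [EOS] is predicted or max_steps is reached.

    step_fn(prev_token, state) -> (probs, new_state) stands in for one decoder
    step; probs[j] is the probability that the next token is vocab[j].
    """
    tokens, prev, state = [], BOS, init_state
    for _ in range(max_steps):
        probs, state = step_fn(prev, state)
        j = max(range(len(vocab)), key=probs.__getitem__)  # most probable entry
        prev = vocab[j]
        if prev == EOS:
            break
        tokens.append(prev)
    return tokens


# Usage with a scripted toy "decoder" (hypothetical, for illustration only)
vocab = ["morgen", "regen", "sonne", EOS]
script = ["regen", "morgen", EOS]


def scripted_step(prev, t):
    # put all probability mass on the scripted token for step t
    return [1.0 if w == script[t] else 0.0 for w in vocab], t + 1


print(greedy_decode(scripted_step, 0, vocab))  # ['regen', 'morgen']
```

The same loop applies to the gloss decoder in the two-stage setting, with the gloss vocabulary in place of the word vocabulary.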

4.2 Two-Stage Translation

When gloss annotations are provided, we first predict the gloss sequence and adopt a transformer-based sub-network to generate the spoken language sentences based on the recognized gloss sequence.

Gloss Generation. After the three-layer transformer, we adopt another LSTM decoder to generate the gloss sequence, as shown in Fig.3. Similar to end-to-end translation, the decoder outputs the prediction probability $p(\hat{g}_{t,j})$ that the predicted gloss at the $t$-th time step is the $j$-th gloss in the gloss vocabulary, starting with “[BOS]” and ending with “[EOS]”.

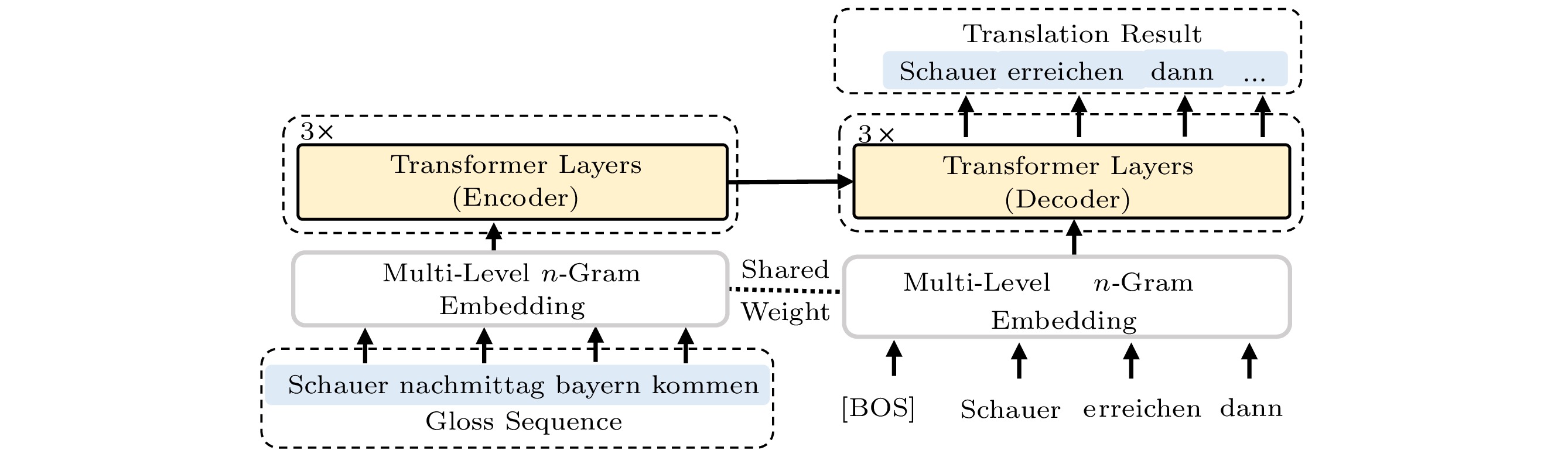

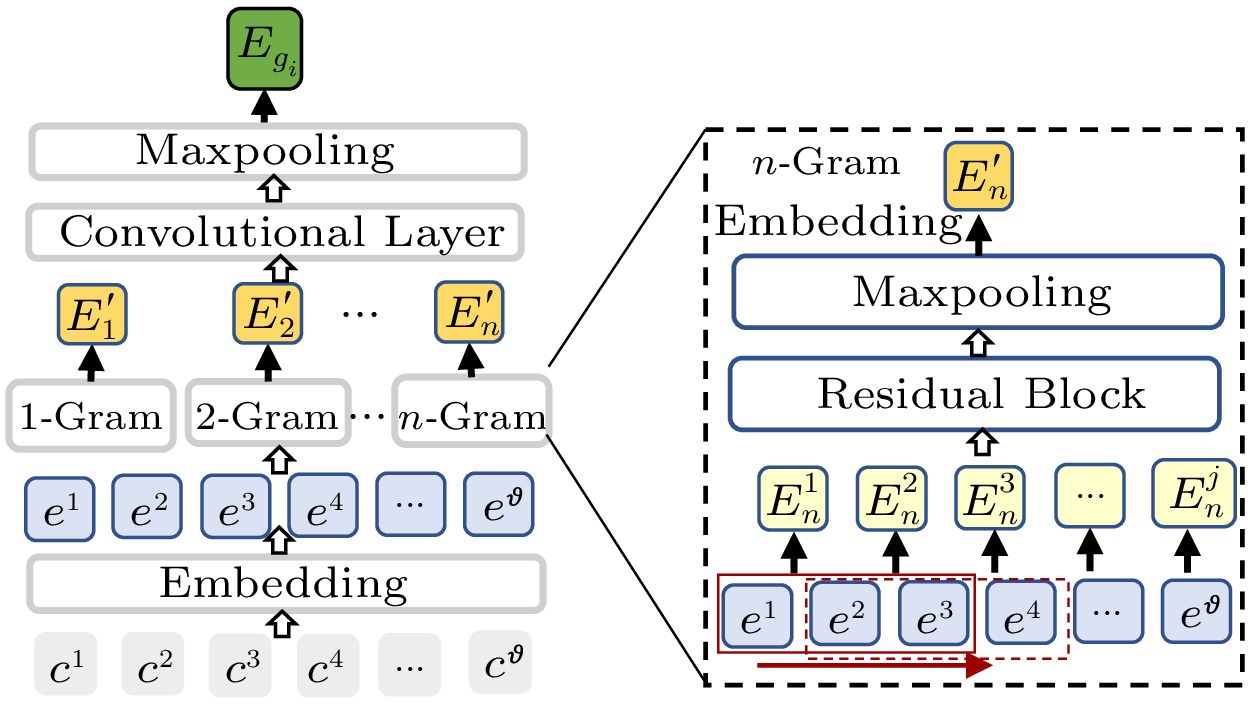

Gloss-to-Text Translation. After obtaining the gloss sequence, we propose a transformer-based sub-network to generate the final spoken language. Notably, when the gloss sequence is translated into spoken language, words in the gloss sequence can appear in the translated sentence in different forms. Therefore, to improve gloss-to-text translation performance, we propose a multi-level $n$-gram embedding module with shared weights, which aims to exploit the word-level similarity between the gloss sequence and the spoken language. As shown in Fig.9, our gloss-to-text sub-network consists of the multi-level $n$-gram embedding module and a transformer network with three encoder layers and three decoder layers.

Gloss/Word Representation with Multi-Level $n$-Gram Embedding. To get the representation of a gloss, we propose multi-level $n$-gram embedding, where an $n$-gram embedding is the representation of $n$ consecutive characters. For a gloss $g_i = \{c^{j}\}_{j = 1}^{\vartheta}$ with $\vartheta$ characters, we first get the initial embedding $e^{j}$ of each character $c^{j}$. Second, as shown in the red rectangle at the bottom right of Fig.10, we slide a window of size $n \in [1, N]$ with stride 1 to get a set of $n$-gram embeddings $\boldsymbol{E}_{n} = \{\boldsymbol{E}_{n}^{j}\}_{j = 1}^{\vartheta-n+1}$, where $\boldsymbol{E}_{n}^{j} = (e^{j}, \dots, e^{j+n-1})$. Third, a residual block[37] $\mathrm{res\_block}(\cdot)$ and a max pooling layer $\mathrm{Maxpool}(\cdot)$ are adopted to get the final $n$-gram embedding $\boldsymbol{E}_{n}^{'}$ of the gloss as follows:

$$\begin{aligned}
\boldsymbol{E}_{n}^{'} &= \mathrm{Maxpool}\big(\{\mathrm{res\_block}(\boldsymbol{E}_{n}^{j})\}_{j = 1}^{\vartheta-n+1}\big) \\
&= \mathrm{Maxpool}\big(\{W_s\boldsymbol{E}_{n}^{j} + W_2\,\sigma(W_1\boldsymbol{E}_{n}^{j})\}_{j = 1}^{\vartheta-n+1}\big),
\end{aligned}$$

where $\sigma(\cdot)$ is the ReLU function, and $W_s$, $W_1$, and $W_2$ are the 1D convolution weights of the residual block.

Combining all $n$-gram embeddings ($n\in[1, N]$), i.e., the 1-gram, 2-gram, $\dots$, $N$-gram embeddings, yields the multi-level $n$-gram embedding $\{\boldsymbol{E}_n^{'}\}_{n = 1}^{N}$ of gloss $g_i$. As shown on the left side of Fig.10, to fuse the multi-level $n$-gram embedding into the final representation $\boldsymbol{E}_{g_i}$ of gloss $g_i$, a 1D convolution layer $W_e$ and a max pooling layer are adopted as follows:

$$\boldsymbol{E}_{g_i} = \mathrm{Maxpool}\big(\{W_e \boldsymbol{E}_n^{'}\}_{n = 1}^{N}\big).$$

Similarly, as shown on the right side of Fig.9, we obtain the multi-level $n$-gram embedding of each spoken-language word during decoding, since the encoder and the decoder of the gloss-to-text sub-network share the same multi-level $n$-gram embedding module.
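The two equations above can be sketched end to end in pure Python. Assumptions of this sketch: applying a kernel-size-$n$ 1D convolution to one width-$n$ window is written as a matrix acting on the flattened window; $W_e$ is a plain matrix; levels with $n$ larger than the gloss length are skipped (the text does not say how such levels are handled); and all weights are toy values, not trained parameters. Function names are hypothetical.

```python
def matvec(m, v):
    """Matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def res_block(window, w_s, w_1, w_2):
    """W_s x + W_2 ReLU(W_1 x), where x is the flattened window of n character embeddings."""
    x = [val for emb in window for val in emb]
    hidden = [max(0.0, h) for h in matvec(w_1, x)]
    return [a + b for a, b in zip(matvec(w_s, x), matvec(w_2, hidden))]


def elementwise_max(vectors):
    """Max pooling across a list of equal-length vectors."""
    return [max(col) for col in zip(*vectors)]


def ngram_embedding(char_embs, n, weights):
    """E'_n: width-n window with stride 1, res_block per window, max over positions."""
    windows = [char_embs[j:j + n] for j in range(len(char_embs) - n + 1)]
    return elementwise_max([res_block(win, *weights) for win in windows])


def gloss_embedding(char_embs, level_weights, w_e):
    """E_g: apply W_e to each E'_n (n = 1..N) and max-pool across levels.

    Levels with n larger than the gloss length are skipped (an assumption).
    """
    levels = [ngram_embedding(char_embs, n, level_weights[n - 1])
              for n in range(1, len(level_weights) + 1) if n <= len(char_embs)]
    return elementwise_max([matvec(w_e, e) for e in levels])


# Usage: three characters with 2-dimensional embeddings and N = 2 (toy weights)
chars = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros_2x2 = [[0.0, 0.0], [0.0, 0.0]]
level_1 = (identity, zeros_2x2, zeros_2x2)  # W_s, W_1, W_2 for n = 1
level_2 = ([[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]],  # W_s averages the two characters
           [[0.0] * 4, [0.0] * 4], zeros_2x2)
print(ngram_embedding(chars, 2, level_2))                    # [1.5, 1.5]
print(gloss_embedding(chars, [level_1, level_2], identity))  # [3.0, 2.0]
```

Because every level is max-pooled to a fixed size before fusion, glosses and words of any length map to vectors of the same dimension, which is what lets the encoder and decoder share the module.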

Text Generation with Transformer. After obtaining the multi-level $n$-gram embeddings $\{\boldsymbol{E}_{g_i}\}_{i = 1}^{\nu}$ of $\nu$ glosses, the transformer encoder takes them as input and generates $\nu$ hidden vectors $\{\boldsymbol{h}_i\}_{i = 1}^{\nu}$, and the decoder uses the hidden vectors and the previously predicted words $\{w_i\}_{i = 1}^{t-1}$ to predict the word $w_t$ at the $t$-th step as follows:

$$\begin{aligned}
&\{\boldsymbol{h}_i\}_{i = 1}^{\nu} = \mathrm{Encoder}\big(\{\boldsymbol{E}_{g_i}\}_{i = 1}^{\nu}\big), \\
&\boldsymbol{O}_t = \mathrm{Decoder}\big(\{\boldsymbol{h}_i\}_{i = 1}^{\nu}, \{\boldsymbol{E}_{w_i}\}_{i = 1}^{t-1}\big), \\
&w_t = \mathrm{softmax}(W \boldsymbol{O}_t + b),
\end{aligned}$$

where $\boldsymbol{E}_{w_i}$ is the multi-level $n$-gram embedding of the predicted word $w_i$, $\mathrm{softmax}(\cdot)$ denotes the softmax function, and $W$ and $b$ are the weight and the bias of the fully connected layer, respectively.

4.3 Joint Loss Optimization

In SANet, we need to regress the heatmaps for skeleton generation and to predict the output sequences, so the loss function $L$ consists of the skeleton extraction loss $L_{\mathrm{ske}}$, the SLT loss $L_{\mathrm{slt}}(y, \hat{y})$, the SLR loss $L_{\mathrm{slr}}(g, \hat{g})$, and the gloss-to-text generation loss $L_{\mathrm{g2t}}(y, \hat{y})$.

The skeleton extraction loss $L_{\mathrm{ske}}$ is calculated as follows:

$$L_{\mathrm{ske}} = \frac{1}{K}\sum_{k=1}^{K}\sum_{i=1}^{h}\sum_{j=1}^{w} \big(M^{H}_{k,i,j}-M^{G}_{k,i,j}\big)^{2},$$

where $\boldsymbol{M}^{H} \in \mathbb{R}^{K\times h\times w}$ and $\boldsymbol{M}^{G} \in \mathbb{R}^{K\times h\times w}$ denote the predicted heatmaps and the ground-truth heatmaps, respectively. Here, $K$, $h$, and $w$ are the number of keypoints, the height, and the width of a heatmap, respectively. Each ground-truth heatmap contains a heated area generated by applying a 2D Gaussian function with a 1-pixel standard deviation[32] centered on the keypoint estimated by OpenPose[33].

When gloss annotations are unavailable, we adopt end-to-end translation, and the cross-entropy loss $L_{\mathrm{slt}}(y, \hat{y})$ is calculated as follows:

$$L_{\mathrm{slt}}(y, \hat{y}) = -\sum_{t = 1}^{T_y} \sum_{j = 1}^{V_y} y_{t,j}\log\big(p(\hat{y}_{t,j})\big),$$

where $\hat{y}$ is the predicted word sequence, $y$ is the ground-truth word sequence (i.e., the labels), $T_{y}$ is the maximum number of time steps in the decoder, and $V_y$ is the number of words in the vocabulary. $y_{t,j}$ is an indicator: when the ground-truth word at the $t$-th time step is the $j$-th word in the vocabulary, $y_{t,j} = 1$; otherwise, $y_{t,j} = 0$. $p(\hat{y}_{t,j})$ is the probability that the predicted word $\hat{y}_{t}$ at the $t$-th time step is the $j$-th word in the vocabulary.

When gloss annotations are available, we adopt two-stage translation: we first predict the gloss sequences and then train our gloss-to-text sub-network. The gloss prediction loss L_{\rm{slr}}(g, \, \hat{g}) and the gloss-to-text translation loss L_{\rm{g2t}}(y, \,\hat{y}) are both cross-entropy losses of the same form as L_{\rm{slt}}(y, \,\hat{y}) . They are calculated as follows:

L_{\rm{slr}}(g, \,\hat{g}) = -\sum\limits_{t = 1}^{T_g} \sum\limits_{j = 1}^{V_g}g_{t,\, j} {\rm{log}}(p(\hat{g}_{t,\, j})), L_{\rm{g2t}}(y,\, \hat{y}) = -\sum\limits_{t = 1}^{T_y} \sum\limits_{j = 1}^{V_y}y_{t, \, j} {\rm{log}}(p(\hat{y}_{t, \, j})), where \hat{g} is the predicted gloss sequence, g is the ground-truth gloss sequence, and T_g and V_g are the maximum number of gloss time steps and the size of the gloss vocabulary, defined analogously to T_y and V_y . In addition, since the gloss-to-text sub-network may not be fully trained with the limited gloss-sentence pairs provided by the dataset, we apply data augmentation by adding the predicted gloss sequences to the training corpus to enlarge the set of training samples.
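A minimal sketch of this corpus augmentation follows. It assumes that each predicted gloss sequence is paired with the reference sentence of the same video, and that exact duplicate pairs are skipped. Both are assumptions, because the text only says that predicted glosses are added to the corpus. The function name and the German example pairs are hypothetical.

```python
from typing import List, Sequence, Tuple

# (gloss sequence, spoken-language sentence)
Pair = Tuple[Tuple[str, ...], str]

def augment_g2t_corpus(gt_pairs: Sequence[Pair], predicted: Sequence[Sequence[str]]) -> List[Pair]:
    """Return the ground-truth pairs plus (predicted glosses, reference sentence) pairs.

    predicted[i] is the recognizer output for the video behind gt_pairs[i].
    Pairs that already occur in the corpus are not added again (assumption).
    """
    corpus = list(gt_pairs)
    seen = set(corpus)
    for (_, sentence), glosses in zip(gt_pairs, predicted):
        pair = (tuple(glosses), sentence)
        if pair not in seen:
            corpus.append(pair)
            seen.add(pair)
    return corpus

gt = [(("MORGEN", "REGEN"), "morgen regnet es"), (("SONNE",), "die sonne scheint")]
pred = [["MORGEN", "REGEN"], ["SONNE", "WARM"]]
corpus = augment_g2t_corpus(gt, pred)
print(len(corpus))  # 3: the first prediction equals its ground truth and is skipped
```

Pairing imperfect predicted glosses with correct sentences exposes the gloss-to-text sub-network to the kind of recognition errors it will see at inference time.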

Based on L_{\rm{ske}} , L_{\rm{slt}} , L_{\rm{slr}} , and L_{\rm{g2t}} , we calculate the joint loss L as follows:

L = L_{{\rm{ske}}} + (1-\alpha) L_{{\rm{slt}}} + \alpha (L_{{\rm{slr}}}+ L_{{\rm{g2t}}}), where \alpha is a hyper-parameter that is set to 1 when gloss annotations are provided and to 0 otherwise. In other words, L_{\rm{slt}} and L_{\rm{slr}} + L_{{\rm{g2t}}} are never used together: exactly one of them is active in a given setting.
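The following sketch shows how \alpha acts as a switch between the two training modes. Plain floats stand in for the loss tensors, and the function name is hypothetical.

```python
def joint_loss(l_ske: float, l_slt: float, l_slr: float, l_g2t: float, has_gloss: bool) -> float:
    """L = L_ske + (1 - alpha) * L_slt + alpha * (L_slr + L_g2t).

    alpha is 1 when gloss annotations are provided (two-stage training)
    and 0 otherwise (end-to-end training).
    """
    alpha = 1 if has_gloss else 0
    return l_ske + (1 - alpha) * l_slt + alpha * (l_slr + l_g2t)

print(joint_loss(0.5, 2.0, 1.5, 1.0, has_gloss=False))  # 2.5 = L_ske + L_slt
print(joint_loss(0.5, 2.0, 1.5, 1.0, has_gloss=True))   # 3.0 = L_ske + L_slr + L_g2t
```

Since \alpha is binary, a real training loop could skip computing the inactive branch entirely instead of multiplying it by zero.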

5. Experiments

5.1 Datasets

We evaluate our model on three widely used public datasets. The first is the CSL dataset[28], which contains 25k labeled videos of 100 Chinese sentences performed by 50 signers. We split it into 17k, 2k, and 6k samples for training, validation, and testing, respectively. In this paper, the CSL dataset is used for the SLT task only. The second is the German sign language dataset RWTH-PHOENIX-Weather 2014T[4], which contains 8257 weather forecast samples from nine signers. The PHOENIX14T corpus has two levels of annotation: sign gloss annotations with a vocabulary of 1066 different signs for CSLR, and German translation annotations with a vocabulary of 2877 different words for SLT. It is officially split into 7096, 519, and 642 samples for training, validation, and testing, respectively. The third is RWTH-PHOENIX-Weather 2014[38], which contains 5672, 540, and 629 samples from nine signers for training, validation, and testing, respectively, with a vocabulary of 1295 glosses; it is used for the CSLR task only.

5.2 Experimental Setting

In this subsection, we describe the detailed settings of SANet, including data preprocessing, module parameters, model training, and model implementation. For data preprocessing, we first resize each frame of a video to 200\times 200 pixels and apply Gaussian-noise augmentation. Then, we split the video into clips with a sliding window whose size c is 16 and whose stride s is 8. Since sign language videos vary in length, during training we set the maximum video length to 300 frames for the CSL dataset and 200 frames for the PHOENIX14T dataset. For the LangGen module, the dimensions of the transformer layers are both set to 1024, and N (i.e., the maximum n) of the multi-level n -gram embedding is set to 4. For model training, the batch size is 64, and the Adam optimizer is used with an initial learning rate of 0.001, which is decreased by a factor of 0.5 every epoch. SANet is implemented with PyTorch 1.6 and trained for 100 epochs on four NVIDIA Tesla V100 GPUs. As performance metrics, we use the Word Error Rate (WER) for CSLR, and the ROUGE-L F1-Score[39] and BLEU-1, 2, 3, 4[40] for SLT; these metrics are commonly used to measure recognition and translation quality in existing work[3, 4, 41]. For a fair comparison, all evaluation scripts for WER, ROUGE-L, and BLEU-1, 2, 3, 4 are those provided with the existing datasets[4, 38].
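The sliding-window segmentation can be sketched as follows, with c = 16 and s = 8. The text does not say how trailing frames that do not fill a whole window are handled, so this sketch simply drops them. Truncating to the maximum length before splitting, and turning a very short video into one short clip, are also assumptions. The function name is hypothetical.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")

def split_into_clips(frames: Sequence[Frame], c: int = 16, s: int = 8, max_frames: int = 300) -> List[Sequence[Frame]]:
    """Cut a video into overlapping clips of c frames, starting a new clip every s frames.

    Assumptions: the video is first truncated to max_frames; a video with at
    most c frames becomes a single clip; trailing frames that do not fill a
    whole window are dropped.
    """
    frames = frames[:max_frames]
    if len(frames) <= c:
        return [frames]
    return [frames[start:start + c] for start in range(0, len(frames) - c + 1, s)]

csl_clips = split_into_clips(list(range(300)), max_frames=300)  # CSL maximum length
phx_clips = split_into_clips(list(range(200)), max_frames=200)  # PHOENIX14T maximum length
print(len(csl_clips), len(phx_clips))  # 36 24
print(csl_clips[-1][0], csl_clips[-1][-1])  # 280 295
```

With a stride of half the window, every frame except those at the very ends appears in two consecutive clips. For a 300-frame CSL video, frames 296 to 299 are left over under the drop-the-remainder assumption.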

5.3 Model Performance

To verify the effectiveness of the proposed SANet, we perform an ablation study, a gloss prediction analysis, an inference time analysis, and a qualitative analysis. In the rest of the paper, we highlight the best performance in bold for convenience.

5.3.1 Ablation Study

To estimate the contributions of the designed components, we perform an ablation study on the CSL and PHOENIX14T datasets. The components fall into three groups. The skeleton-related components are all skeletons, the skeleton channels, and the skeleton-based GCN. The feature-related components are frame-level features and clip-level features. The language-related components are the two-stage method (including multi-level n -gram embeddings, weight sharing, and data augmentation) and the end-to-end method. Here, “w/o” means without, “ske” skeleton, “chl” channel, “gph” graph, “frm” frame, and “fea” feature. “All skeletons” denotes all skeleton-related parts together, i.e., the branch that generates skeletons and the parts that use them (the skeleton channels and the skeleton-based GCN). We use “SANet-E2E” for the end-to-end method (gloss annotations are needed in neither training nor inference) and “SANet-G2T” for the two-stage method (gloss annotations are needed for training). In each experiment, we remove only one type of component and list the resulting performance in Table 1 and Table 2.

Table 1. SLT Performance in Ablation Study on the CSL Dataset

| Model | Time (s) | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|---|
| w/o all-ske | 0.467 | 0.951 | 0.953 | 0.939 | 0.927 | 0.916 |
| w/o ske-chl | 0.489 | 0.956 | 0.958 | 0.946 | 0.935 | 0.924 |
| w/o ske-gph | 0.472 | 0.966 | 0.967 | 0.957 | 0.947 | 0.939 |
| w/o frm-fea | 0.480 | 0.952 | 0.954 | 0.941 | 0.928 | 0.916 |
| w/o clip-fea | 0.242 | 0.928 | 0.921 | 0.898 | 0.882 | 0.879 |
| SANet-E2E | 0.499 | 0.996 | 0.995 | 0.994 | 0.992 | 0.990 |

Table 2. SLT Performance in Ablation Study on the PHOENIX14T Dataset

| Model | Time (s) | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|---|
| w/o all-ske | 0.358 | 0.510 | 0.532 | 0.381 | 0.290 | 0.222 |
| w/o ske-chl | 0.370 | 0.520 | 0.539 | 0.403 | 0.302 | 0.231 |
| w/o ske-gph | 0.371 | 0.513 | 0.531 | 0.394 | 0.291 | 0.225 |
| w/o frm-fea | 0.376 | 0.518 | 0.547 | 0.396 | 0.294 | 0.228 |
| w/o clip-fea | 0.214 | 0.516 | 0.541 | 0.390 | 0.291 | 0.220 |
| SANet-E2E | 0.385 | 0.548 | 0.573 | 0.424 | 0.322 | 0.248 |
| SANet-G2T | 0.395 | 0.555 | 0.579 | 0.436 | 0.336 | 0.254 |

1) Skeleton-Related Components. According to Table 1 and Table 2, removing all skeletons, the skeleton channels, or the skeleton-based GCN lowers the ROUGE score of SANet-E2E by 4.5, 4.0, and 3.0 percentage points on the CSL dataset and by 3.8, 2.8, and 3.5 percentage points on the PHOENIX14T dataset, respectively. This indicates that the proposed self-contained branch extracts skeletons effectively, the skeleton channel guides the network toward sign-related features, and the skeleton-based GCN enhances the feature representation of each clip.

2) Feature-Related Components. Removing frame-level features or clip-level features lowers the ROUGE score by 4.4 and 6.8 percentage points on the CSL dataset and by 3.0 and 3.2 percentage points on the PHOENIX14T dataset, respectively. Either removal has a non-negligible impact on SLT performance, because both frame-level and clip-level features carry useful sign-related information. Instead of extracting features from frames or clips alone, SANet fuses the frame-level and clip-level features of each clip. In addition, it goes a step beyond existing SLT work by introducing skeletons to enhance the feature representation of clips, which further improves SLT performance.

3) Language-Related Components. First, we evaluate the contributions of the multi-level n -gram embedding, weight sharing, and data augmentation used in two-stage translation. In Table 3, “ME” denotes the multi-level n -gram embedding, “WS” weight sharing, and “DA” data augmentation. Relative to SANet-G2T, removing the multi-level n -gram embedding lowers the ROUGE score by 1.9 percentage points, and removing weight sharing lowers it by 1.5 points. Removing data augmentation, i.e., training the gloss-to-text sub-network only on the ground-truth gloss-text pairs, lowers it by 2.0 points. Each component of the two-stage method therefore contributes to the final performance. We also explore how to choose N (i.e., the maximum n ) in the multi-level n -gram embedding. As shown in Table 4, SLT performance first increases and then decreases as N grows, and it peaks at N = 4 ; we therefore set N to 4 in this paper. These results indicate that the gloss-to-text sub-network achieves good SLT performance by making full use of gloss annotations. Second, we analyze the two translation methods. According to Table 3, the end-to-end method SANet-E2E already reaches 54.8% ROUGE. When gloss annotations are available, the two-stage method SANet-G2T raises ROUGE by a further 0.7 percentage points (to 55.5%), which indicates that two-stage translation benefits from the inherent correlation between the gloss sequence and the spoken language.
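The drops quoted in items 1) to 3) are absolute ROUGE differences in percentage points. They are measured relative to SANet-E2E for Tables 1 and 2, and relative to SANet-G2T for Table 3. The short script below re-derives them from the table values to make that reference point explicit. It uses no data beyond the tables.

```python
from typing import Dict

# ROUGE scores copied from Table 1 (CSL), Table 2 (PHOENIX14T) and Table 3.
CSL = {"SANet-E2E": 0.996, "w/o all-ske": 0.951, "w/o ske-chl": 0.956,
       "w/o ske-gph": 0.966, "w/o frm-fea": 0.952, "w/o clip-fea": 0.928}
PHOENIX14T = {"SANet-E2E": 0.548, "w/o all-ske": 0.510, "w/o ske-chl": 0.520,
              "w/o ske-gph": 0.513, "w/o frm-fea": 0.518, "w/o clip-fea": 0.516}
G2T = {"SANet-G2T": 0.555, "w/o ME": 0.536, "w/o WS": 0.540, "w/o DA": 0.535}

def drops_in_points(scores: Dict[str, float], reference: str) -> Dict[str, float]:
    """ROUGE drop of every other variant relative to `reference`, in percentage points."""
    base = scores[reference]
    return {name: round((base - s) * 100, 1) for name, s in scores.items() if name != reference}

print(drops_in_points(CSL, "SANet-E2E"))
# {'w/o all-ske': 4.5, 'w/o ske-chl': 4.0, 'w/o ske-gph': 3.0, 'w/o frm-fea': 4.4, 'w/o clip-fea': 6.8}
print(drops_in_points(G2T, "SANet-G2T"))
# {'w/o ME': 1.9, 'w/o WS': 1.5, 'w/o DA': 2.0}
```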

Table 3. SLT Performance in Ablation Study on Gloss-to-Text Translation

| Model | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|
| w/o ME | 0.536 | 0.558 | 0.413 | 0.317 | 0.241 |
| w/o WS | 0.540 | 0.571 | 0.423 | 0.321 | 0.246 |
| w/o DA | 0.535 | 0.556 | 0.415 | 0.316 | 0.242 |
| SANet-E2E | 0.548 | 0.573 | 0.424 | 0.322 | 0.248 |
| SANet-G2T | 0.555 | 0.579 | 0.436 | 0.336 | 0.254 |

Table 4. SLT Performance in Ablation Study on Different N of the Multi-Level n-Gram Embeddings

| N | ROUGE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|---|
| 1 | 0.538 | 0.567 | 0.410 | 0.311 | 0.239 |
| 2 | 0.540 | 0.569 | 0.417 | 0.314 | 0.242 |
| 3 | 0.551 | 0.570 | 0.430 | 0.321 | 0.248 |
| 4 | 0.555 | 0.579 | 0.436 | 0.336 | 0.254 |
| 5 | 0.554 | 0.578 | 0.434 | 0.334 | 0.253 |
| 6 | 0.540 | 0.571 | 0.431 | 0.320 | 0.246 |

5.3.2 Efficiency of Gloss Sequence Prediction

To evaluate whether SANet predicts gloss sequences accurately, we report gloss recognition performance on both the PHOENIX14T and PHOENIX14 datasets. As shown in Table 5, “del/ins” denotes the deletion and insertion rates, i.e., the numbers of deletion and insertion operations in the minimum edit sequence between a hypothesized sentence and the ground truth, normalized by the reference length. On the PHOENIX14T dataset, the existing model STMC[3] combines features from multiple cues (i.e., the full frame, hands, face, and skeleton) and thus performs well on the “DEV” set, with 19.6% WER. Our model mainly exploits skeleton information through a carefully designed network and achieves performance comparable to STMC[3] on the “DEV” set. On the “TEST” set, SANet outperforms all existing methods with 20.2% WER. As shown in Table 6, on the PHOENIX14 dataset the best existing method, C2SLR[45], achieves 20.5% and 20.4% WER on the “DEV” and “TEST” sets, respectively. SANet improves on this with 20.0% and 20.1% WER, outperforming all existing methods. This indicates that SANet predicts gloss sequences accurately enough to support the two-stage method.
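WER and its deletion/insertion components come from a minimum-edit (Levenshtein) alignment. A minimal plain-Python sketch follows. It uses the usual convention of WER tools such as sclite: a deletion is a reference gloss missing from the hypothesis, and an insertion is an extra hypothesis gloss. When several alignments have the same cost, the tie is broken by tuple order (fewer substitutions first), so the S/D/I split may differ from the official scripts in such cases. Multiplying the counts by 100 / len(ref) gives the percentages reported in Tables 5 and 6.

```python
from typing import Sequence, Tuple

def wer_with_ops(ref: Sequence[str], hyp: Sequence[str]) -> Tuple[float, int, int, int]:
    """Return (WER, substitutions, deletions, insertions); WER = (S + D + I) / len(ref)."""
    if not ref:
        raise ValueError("reference must contain at least one word")
    n, m = len(ref), len(hyp)
    # dp[i][j] = (edits, S, D, I) for the best alignment of ref[:i] with hyp[:j]
    dp = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = (i, 0, i, 0)  # every reference word deleted
    for j in range(1, m + 1):
        dp[0][j] = (j, 0, 0, j)  # every hypothesis word inserted
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if ref[i - 1] == hyp[j - 1]:
                dp[i][j] = dp[i - 1][j - 1]  # match: no extra cost
                continue
            c, s, d, ins = dp[i - 1][j - 1]
            sub = (c + 1, s + 1, d, ins)
            c, s, d, ins = dp[i - 1][j]
            dele = (c + 1, s, d + 1, ins)
            c, s, d, ins = dp[i][j - 1]
            insr = (c + 1, s, d, ins + 1)
            dp[i][j] = min(sub, dele, insr)  # lowest cost first, then fewest S, D, I
    cost, s, d, ins = dp[n][m]
    return cost / n, s, d, ins

print(wer_with_ops("A B C D".split(), "A C D E".split()))  # (0.5, 0, 1, 1)
```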

Table 6. Comparison of CSLR Performance on the PHOENIX14 Dataset (%)

| Model | DEV WER | DEV del/ins | TEST WER | TEST del/ins |
|---|---|---|---|---|
| Align-iOpt[2] | 37.1 | 12.6/2.6 | 36.7 | 13.0/2.5 |
| DPD+TEM[46] | 35.6 | 9.5/3.2 | 34.5 | 9.3/3.1 |
| SFL[42] | 24.9 | 10.3/4.1 | 25.3 | 10.4/3.6 |
| SBD-DL[47] | 28.6 | 9.9/5.6 | 28.6 | 8.9/5.1 |
| FCN[43] | 23.7 | –/– | 23.9 | –/– |
| CMA[48] | 21.3 | 7.3/2.7 | 21.9 | 7.3/2.4 |
| VAC[49] | 21.2 | 7.9/2.5 | 22.3 | 8.4/2.6 |
| STMC[3] | 21.1 | 7.7/3.4 | 20.7 | 7.4/2.6 |
| SMKD[44] | 20.8 | 6.8/2.5 | 21.0 | 6.3/2.3 |
| C2SLR[45] | 20.5 | –/– | 20.4 | –/– |
| SANet | 20.0 | 5.4/4.5 | 20.1 | 5.3/5.1 |

5.3.3 Inference Time Analysis

Table 1 and Table 2 also report the inference time of SLT when one type of designed component is removed. We measure the inference time on a single GPU for a 300-frame sign language video from the CSL dataset and a 200-frame video from the PHOENIX14T dataset, and we report the average over 100 runs. With all components, the average inference time is 0.499 seconds on the CSL dataset (SANet-E2E) and 0.395 seconds on the PHOENIX14T dataset (SANet-G2T). Removing any designed component reduces the inference time. Clip-level feature extraction accounts for most of the cost, because 3D convolutions are computationally expensive: removing it cuts the inference time from 0.499 s to 0.242 s on CSL and from 0.385 s to 0.214 s on PHOENIX14T. By contrast, the skeleton-related components add only about 0.03 s (0.499 s vs. 0.467 s on CSL; 0.385 s vs. 0.358 s on PHOENIX14T). The two-stage language generation adds 0.010 s over the end-to-end method on PHOENIX14T (0.395 s vs. 0.385 s).
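The measurement protocol (averaging the latency of repeated runs) can be sketched with a small timing harness. The untimed warm-up calls and all names here are assumptions, and `dummy_inference` is a hypothetical stand-in for one forward pass. If `run` launches CUDA work in PyTorch, it should call `torch.cuda.synchronize()` before returning, because CUDA kernels execute asynchronously and would otherwise be timed only up to their launch.

```python
import statistics
import time
from typing import Any, Callable

def average_latency(run: Callable[[], Any], runs: int = 100, warmup: int = 3) -> float:
    """Mean wall-clock time in seconds of `run()` over `runs` timed calls.

    A few untimed warm-up calls come first, so that one-off costs (lazy
    initialisation, caches) do not inflate the average.
    """
    for _ in range(warmup):
        run()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        run()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)

# Hypothetical workload standing in for one forward pass of the model.
def dummy_inference() -> int:
    return sum(i * i for i in range(10_000))

print(f"{average_latency(dummy_inference):.6f} s")
```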

5.3.4 Qualitative Analysis

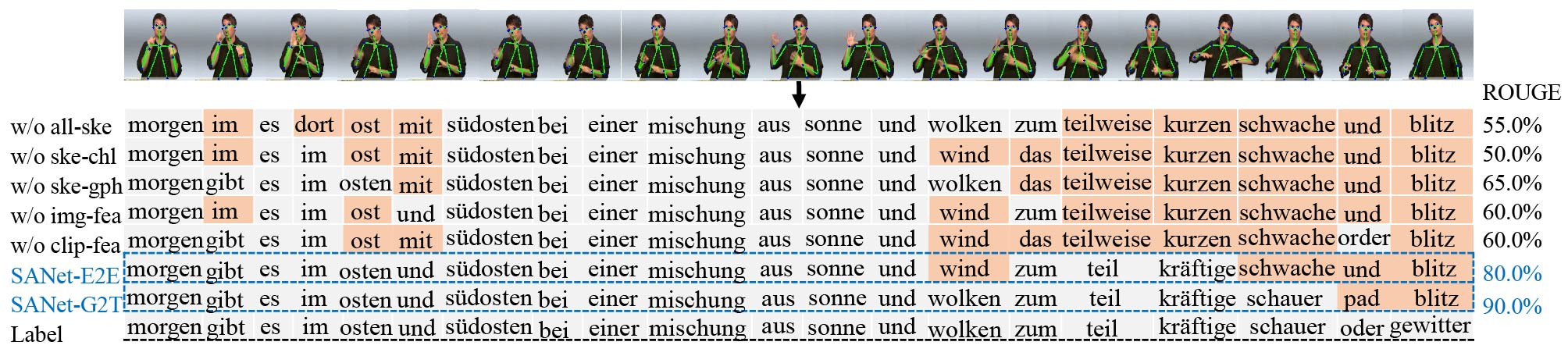

As shown in Fig.11, we provide a qualitative analysis of SLT on a sign language video sample from the PHOENIX14T test set. The proposed SANet-E2E and SANet-G2T achieve the best performance, while removing any designed component will lead to more errors (marked with orange color). It demonstrates that the proposed framework and all the designed components contribute to higher performance for SLT.

Figure 11. Qualitative analysis of different components used for SLT on examples from the PHOENIX14T testing set[20]. The word order in the sign language and that in the spoken language may not be temporally consistent.

5.4 Comparisons

5.4.1 Evaluation on the CSL Dataset

We compare our model with existing methods under two settings.

Split 1: Signer-Independent Test. We use the sign language videos performed by 40 signers as the training set and those performed by the remaining 10 signers as the testing set. The training and testing sets contain the same sentences but have no overlapping signers.

Split 2: Unseen-Sentences Test. We use the videos of 94 sentences as the training set and the videos of the remaining six sentences as the testing set. The training and testing sets share the same signers and vocabulary, but their sentences do not overlap.

As shown in Table 7, on split 1 SANet-E2E achieves 99.6%, 99.4%, 99.3%, 99.2%, and 99.0% on ROUGE, BLEU-1, BLEU-2, BLEU-3, and BLEU-4, respectively. These are the best ROUGE, BLEU-2, and BLEU-3 scores among all methods. SANet-E2E ties HRF-Fusion[19] on BLEU-4 and trails MSeqGraph[51] by 0.1 points on BLEU-1. On split 2, the ROUGE score drops by 31.5 percentage points, because translating unseen sentences (i.e., sentences whose words all appear in the training set although the sentences themselves do not) is much more challenging. Even so, SANet-E2E outperforms all existing methods, achieving 68.1%, 69.7%, 41.1%, 26.8%, and 18.1% on ROUGE, BLEU-1, BLEU-2, BLEU-3, and BLEU-4, respectively. Compared with HLSTM-attn[5], SANet-E2E improves ROUGE by 17.8 percentage points. Compared with MSeqGraph[51], the strongest existing method on split 2, it improves ROUGE by 11.5 points.
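ROUGE-L, the main metric in these comparisons, scores a translation by the longest common subsequence (LCS) it shares with the reference. The following minimal sentence-level sketch uses the plain F1 (β = 1) and whitespace tokens. The official evaluation scripts[4, 38] may differ in details such as the precision/recall weighting, corpus-level aggregation, and tokenization, so its numbers are not directly comparable to Table 7. The function names and the example sentences are hypothetical.

```python
from typing import Sequence

def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]

def rouge_l_f1(reference: Sequence[str], hypothesis: Sequence[str]) -> float:
    """Sentence-level ROUGE-L F1 (beta = 1) from LCS-based precision and recall."""
    lcs = lcs_length(reference, hypothesis)
    if lcs == 0:
        return 0.0
    precision = lcs / len(hypothesis)
    recall = lcs / len(reference)
    return 2 * precision * recall / (precision + recall)

ref = "am tag wird es regnen".split()
hyp = "am tag regnen es".split()
print(round(rouge_l_f1(ref, hyp), 4))  # 0.6667 (LCS = 3, P = 3/4, R = 3/5)
```

Because LCS rewards in-order matches without requiring them to be contiguous, ROUGE-L tolerates inserted words but penalizes reordering. This is why the reordered "regnen es" above counts only one of its two words.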

Table 7. Comparisons of SLT Performance on the CSL Dataset Under the Signer-Independent Test and the Unseen-Sentences Test

| Model | Split 1 ROUGE | Split 1 BLEU-1 | Split 1 BLEU-2 | Split 1 BLEU-3 | Split 1 BLEU-4 | Split 2 ROUGE | Split 2 BLEU-1 | Split 2 BLEU-2 | Split 2 BLEU-3 | Split 2 BLEU-4 |
|---|---|---|---|---|---|---|---|---|---|---|
| S2VT[50] | 0.904 | 0.902 | 0.886 | 0.879 | 0.874 | 0.461 | 0.466 | 0.258 | 0.135 | – |
| S2VT(3-layer)[50] | 0.911 | 0.911 | 0.896 | 0.889 | 0.884 | 0.465 | 0.475 | 0.265 | 0.145 | – |
| HLSTM[5] | 0.944 | 0.942 | 0.932 | 0.927 | 0.922 | 0.481 | 0.487 | 0.315 | 0.195 | – |
| HLSTM-attn[5] | 0.951 | 0.948 | 0.938 | 0.933 | 0.928 | 0.503 | 0.508 | 0.330 | 0.207 | – |
| HRF-Fusion[19] | 0.994 | 0.993 | 0.992 | 0.991 | 0.990 | 0.449 | 0.450 | 0.238 | 0.127 | – |
| MSeqGraph[51] | 0.995 | 0.995 | – | – | – | 0.566 | 0.531 | – | – | – |
| SANet-E2E | 0.996 | 0.994 | 0.993 | 0.992 | 0.990 | 0.681 | 0.697 | 0.411 | 0.268 | 0.181 |

5.4.2 Evaluation on the PHOENIX14T Dataset

As shown in Table 8, we compare our model with existing methods on both the validation set (“DEV”) and the testing set (“TEST”). Existing methods achieve relatively low scores on this dataset. For example, the best end-to-end baseline, BN-TIN-Transf[52], reaches 50.3% ROUGE and 24.5% BLEU-4 on the “DEV” set. This may be due to the high diversity, the large vocabulary, and the limited number of training samples of the PHOENIX14T dataset. SANet-G2T further improves SLT performance, achieving 55.7% ROUGE and 24.7% BLEU-4 on the “DEV” set and 55.5% ROUGE and 25.4% BLEU-4 on the “TEST” set. Its ROUGE, BLEU-1, BLEU-2, and BLEU-3 scores are the highest among all methods on both sets, and its BLEU-4 scores match those of STMC-Transformer[15].

Table 8. Comparison of SLT Performance on the RWTH-PHOENIX-Weather 2014T Dataset

| Method | Model | DEV ROUGE | DEV BLEU-1 | DEV BLEU-2 | DEV BLEU-3 | DEV BLEU-4 | TEST ROUGE | TEST BLEU-1 | TEST BLEU-2 | TEST BLEU-3 | TEST BLEU-4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| End-to-End | TSPNet[16] | – | – | – | – | – | 0.349 | 0.361 | 0.231 | 0.169 | 0.134 |
| End-to-End | H+M+P[17] | 0.459 | – | – | – | 0.195 | 0.436 | – | – | – | 0.183 |
| End-to-End | Sign2(Gloss+Text)[41] | – | 0.473 | 0.344 | 0.271 | 0.224 | – | 0.466 | 0.337 | 0.262 | 0.213 |
| End-to-End | STMC-T[18] | 0.482 | 0.476 | 0.364 | 0.292 | 0.241 | 0.467 | 0.469 | 0.361 | 0.287 | 0.237 |
| End-to-End | HST-GNN[8] | – | 0.461 | 0.334 | 0.275 | 0.226 | – | 0.452 | 0.347 | 0.271 | 0.223 |
| End-to-End | SimulSLT[7] | 0.492 | 0.478 | 0.353 | 0.279 | 0.229 | 0.492 | 0.482 | 0.356 | 0.280 | 0.231 |
| End-to-End | BN-TIN-Transf[52] | 0.503 | 0.511 | 0.379 | 0.298 | 0.245 | 0.495 | 0.508 | 0.378 | 0.297 | 0.243 |
| End-to-End | SANet-E2E | 0.542 | 0.566 | 0.415 | 0.312 | 0.235 | 0.548 | 0.573 | 0.424 | 0.322 | 0.248 |
| Two-Stage | S2G→G2T[4] | 0.438 | 0.411 | 0.291 | 0.221 | 0.179 | 0.435 | 0.415 | 0.295 | 0.222 | 0.178 |
| Two-Stage | S2G2T[4] | 0.441 | 0.429 | 0.303 | 0.230 | 0.184 | 0.438 | 0.433 | 0.304 | 0.228 | 0.181 |
| Two-Stage | Sign2Gloss→Gloss2Text[41] | – | 0.478 | 0.347 | 0.269 | 0.218 | – | 0.477 | 0.344 | 0.266 | 0.216 |
| Two-Stage | Sign2Gloss2Text[41] | – | 0.477 | 0.348 | 0.271 | 0.221 | – | 0.485 | 0.354 | 0.276 | 0.225 |
| Two-Stage | STMC-Transformer[15] | 0.487 | 0.503 | 0.376 | 0.298 | 0.247 | 0.488 | 0.506 | 0.384 | 0.306 | 0.254 |
| Two-Stage | SANet-G2T | 0.557 | 0.574 | 0.422 | 0.327 | 0.247 | 0.555 | 0.579 | 0.436 | 0.336 | 0.254 |

6. User Study

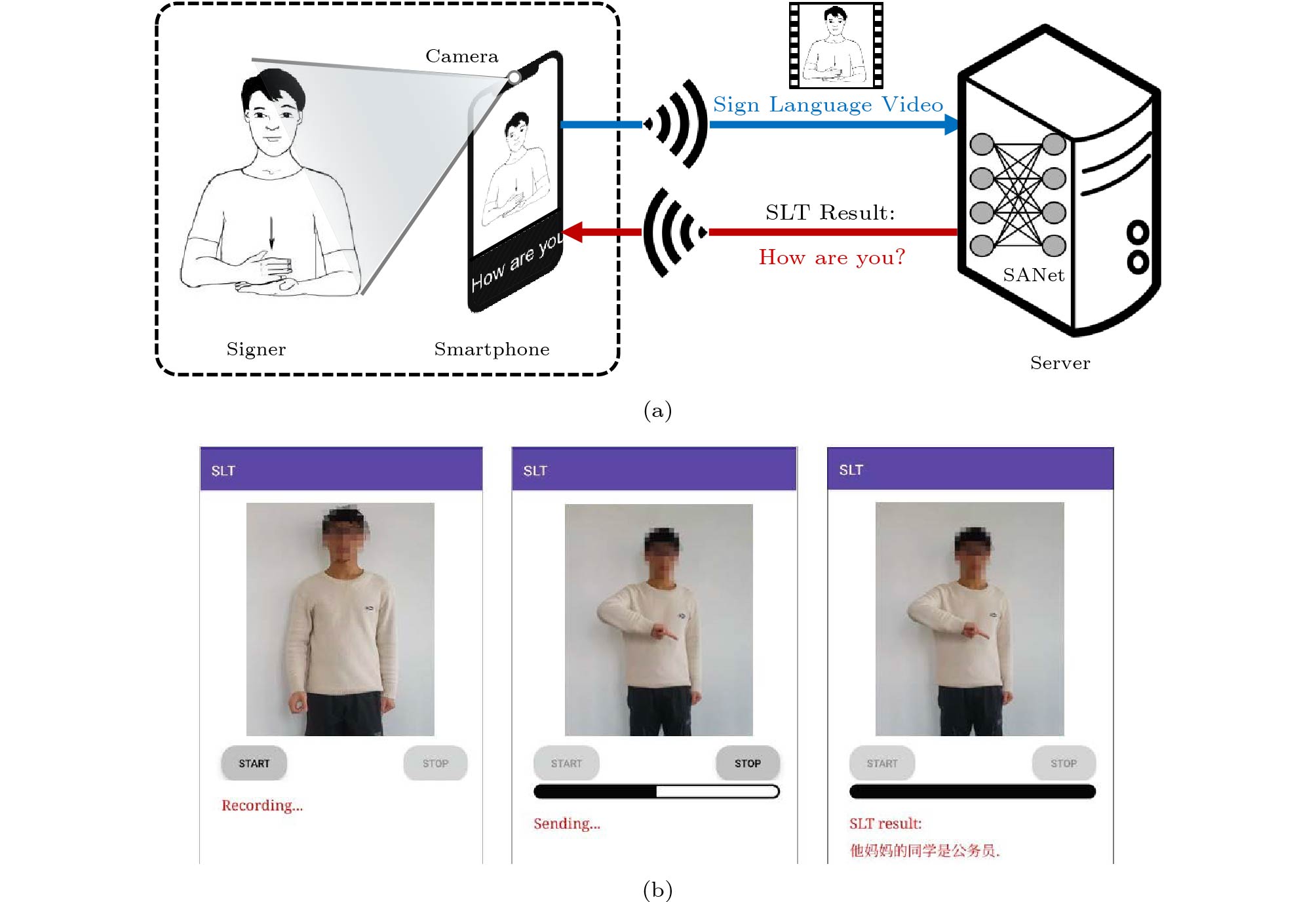

6.1 Application System

To facilitate instant communication for deaf people, we deploy SANet on a Samsung S9 smartphone running Android 10.0, which is connected to a GPU server configured with V100 GPUs. As shown in Fig.12(a), the user uploads the sign language video captured by the phone to the server, which runs SANet and returns the translation result to the user. Fig.12(b) shows the main interface of the application. First, the user opens the smartphone camera and starts recording the sign language video (left of Fig.12(b)). Then, the user stops recording and sends the video to the server over WiFi (middle of Fig.12(b)). When the server, on which the trained SANet model is deployed, receives the video, it processes the video and sends the SLT result (i.e., the spoken language sentence) back to the smartphone (right of Fig.12(b)). To reduce the transmission overhead, the smartphone preprocesses the video locally by resizing each frame to 200\times200 pixels, so that the user receives the SLT result with little delay. To verify the real-time performance of the system, we measure the processing time for sentences of different lengths in real-world scenarios. Table 9 lists these times. “Sen-Len” is the sentence length (i.e., the number of words) of a sign language video, “Tra-Time” is the time taken to transmit the video, and “Pro-Time” is the time taken to run SANet on the server. “SLT Time” is their sum, i.e., the time from the user pressing the “STOP” button to the smartphone displaying the SLT result. Although the SLT time grows with sentence length, it stays below 0.9 s (652 ms for five words and 875 ms for 10 words). Most of the growth comes from transmission (282 ms to 492 ms) rather than processing (370 ms to 383 ms). This indicates that SLT on the smartphone is feasible anytime and anywhere.
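The text does not say how the phone resizes frames. As a hedged illustration, the sketch below resizes a frame by nearest-neighbour sampling in plain Python; a real Android client would more likely use a platform image API. It also estimates the per-frame pixel reduction, taking the 2224\times1080 recording resolution from Table 10 as an example input. Whether the application captures video at that resolution is an assumption. Mapping to 200\times200 does not preserve the aspect ratio.

```python
from typing import List, Sequence, TypeVar

Pixel = TypeVar("Pixel")

def resize_nearest(frame: Sequence[Sequence[Pixel]], out_h: int = 200, out_w: int = 200) -> List[List[Pixel]]:
    """Nearest-neighbour resize of a frame stored as a list of pixel rows."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]

# Pixels per frame before and after resizing, for a 2224 x 1080 input.
ratio = (2224 * 1080) / (200 * 200)
print(round(ratio, 1))  # 60.0, i.e. about 60 times fewer pixels to transmit per frame
```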

Table 9. SLT Time

| Sen-Len | Tra-Time (ms) | Pro-Time (ms) | SLT Time (ms) |
|---|---|---|---|
| 5 words | 282 | 370 | 652 |
| 6 words | 329 | 372 | 701 |
| 7 words | 368 | 374 | 742 |
| 8 words | 412 | 380 | 792 |
| 9 words | 461 | 381 | 842 |
| 10 words | 492 | 383 | 875 |

6.2 Performance in Real Scenarios

To verify the effectiveness and usability of SANet, we recruit five volunteers (college students) to test it in real scenarios. We choose 10 sentences from the CSL dataset as test examples, and each volunteer performs each sentence 10 times. Details of the recorded videos are given in Table 10. Before recording a sign language video, the signer has five minutes to learn the sentence and become familiar with it. (Note: the SANet used here is SANet-E2E, since the CSL dataset has no gloss-level annotations.)

Table 10. Statistics on Recorded CSL Videos

| Parameter | Value |
|---|---|
| RGB resolution (pixel) | 2224\times1080 |
| Vocabulary size (word) | 48 |
| Average words | 7 |
| Number of sentences | 10 |
| Total instances | 500 |
| Number of signers | 5 |
| Video duration (s) | 5–16 |
| FPS | 30 |
| Recording device | Samsung Galaxy S9 |
| Sentence length (word) | 6–10 |

6.2.1 Performance of Different Signers

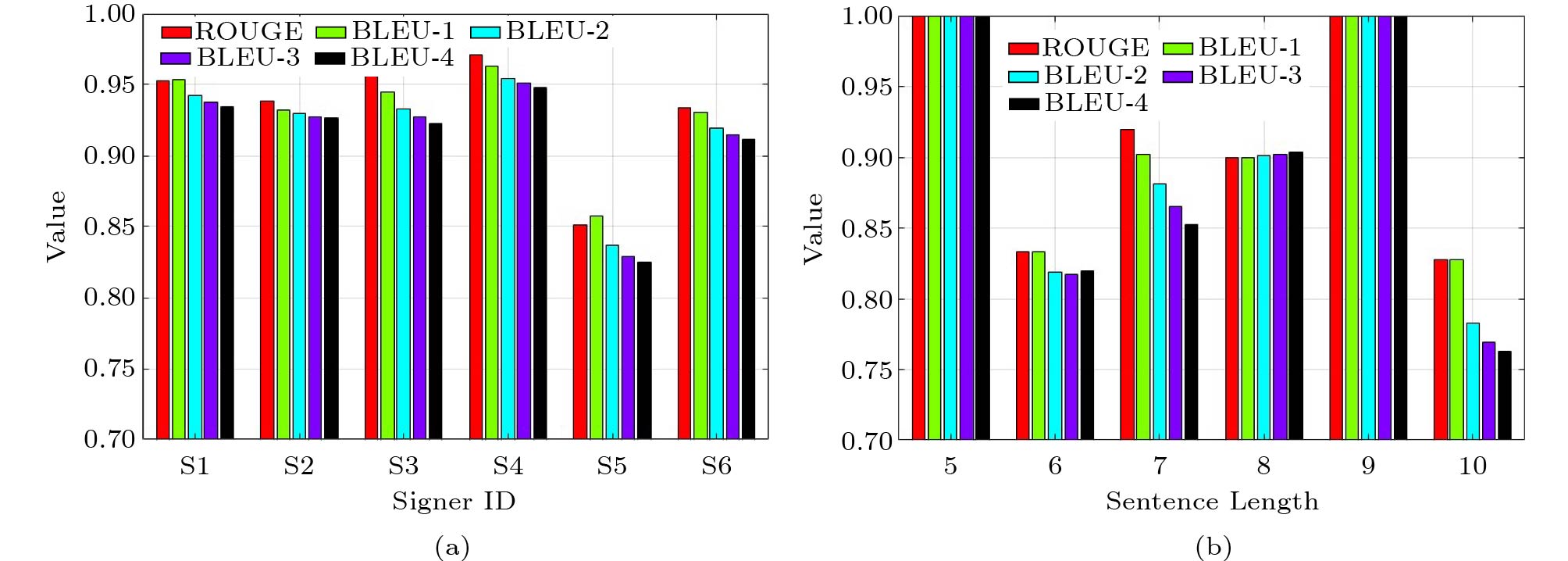

Fig.13(a) shows the performance of different signers. The ROUGE score exceeds 93% for Signers 1–4 and averages 93.4% over all signers (“ALL”). Compared with its performance on the CSL “TEST” set in Table 1 (99.6%), SANet loses only 6.2 percentage points of ROUGE in real scenarios, which validates the robustness of our model. The lowest performance is obtained by Signer 5, who signed in a more amateur and impatient manner, yet even this reaches 85.1% ROUGE. This indicates that our model handles sign language performed by different signers well.

6.2.2 Performance of Different Sentence Lengths

We select sentences with lengths ranging from 5 to 10 words to observe how sentence length affects the performance of SANet. As shown in Fig.13(b), the highest ROUGE scores, 99.0% and 100%, are obtained for sentences with five and nine words. The lowest, 83.3% and 82.8%, are obtained for sentences with six and 10 words. Intuitively, one would expect performance to degrade as sentences grow longer; in our experiments, however, performance does not decrease monotonically with sentence length. A likely reason is that performance in real scenarios depends mainly on the difficulty of the gestures, where we define the difficulty of a gesture as the time beginners need to learn it. The six-word and 10-word sentences contain more complex gestures than the other sentences, so their performance is considerably lower.

7. Discussion

Particularity of the CSL Dataset. The CSL dataset is special in that the word order of its sign language is temporally consistent with that of the spoken language, so it can be used for the SLR task[3, 28] as well as the SLT task[5, 19]. In this paper, we treat the CSL dataset as an SLT benchmark and compare only with SLT methods.

Translation Methods. In SLT, both end-to-end methods and two-stage methods can achieve promising results and have their own advantages. In our paper, we provide both the end-to-end method and the two-stage method. When gloss annotations are unavailable, the end-to-end method is adopted. When gloss annotations are available, the two-stage method is adopted.

SLT Application. In this paper, we provide a possible SLT solution for smartphones, in which the phone sends sign language data to a server and receives the translated result. We adopt this mobile-server mode instead of deploying the SLT model on the smartphone because real-time video processing is computationally intensive, and current smartphones can hardly run large deep learning models for this task. However, as smartphone hardware improves (e.g., inference accelerators are coming to mobile devices, including phones), it may become possible to run the SLT model directly on mobile devices to benefit the deaf community.

8. Conclusions

In this paper, we proposed a skeleton-aware neural network (SANet) for SLT, which introduces skeletons to enhance the feature representation of sign language. Specifically, we first used a self-contained branch to extract the skeleton from each frame. We then enhanced the feature representation of each clip in two ways: by concatenating the skeleton channel with the RGB channels, and by scaling the clip feature vector (i.e., weighting its importance) with a skeleton-based GCN. The experimental results on three public datasets demonstrated that introducing skeletons significantly improves the performance of sign language recognition and translation, and they also demonstrated the effectiveness of SANet.

-

Figure 4. FrmSke extracts skeletons from each image and frame-level features of each clip[20].

Figure 5. ClipRep extracts clip-level features, where each frame of the clip is extended to four channels by concatenating the skeleton channels and RGB channels[20].

Figure 6. Intermediate feature maps after the first P3D block (a) without or (b) with the skeleton channel. In each case, we show four examples selected from 16 frames in a clip[20].

Figure 7. ClipScl constructs a skeleton-based graph and uses GCN to calculate the scale factor, which is used for weighting the importance of each clip[20].

Figure 8. Visualization of the scale factor for each clip[20]. Clips containing meaningful signs have larger scale factors, while clips without meaningful signs have smaller scale factors.


Table 5. Comparison of CSLR Performance on the PHOENIX14T Dataset (%)

-

[1] Koller O, Zargaran S, Ney H. Re-sign: Re-aligned end-to-end sequence modelling with deep recurrent CNN-HMMs. In Proc. the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Jul. 2017, pp.3416–3424. DOI: 10.1109/CVPR.2017.364.

[2] Pu J, Zhou W, Li H. Iterative alignment network for continuous sign language recognition. In Proc. the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2019, pp.4160–4169. DOI: 10.1109/CVPR.2019.00429.

[3] Zhou H, Zhou W, Zhou Y, Li H. Spatial-temporal multi-cue network for continuous sign language recognition. In Proc. the 34th AAAI Conference on Artificial Intelligence, Feb. 2020, pp.13009–13016. DOI: 10.1609/aaai.v34i07.7001.

[4] Camgoz N C, Hadfield S, Koller O, Ney H, Bowden R. Neural sign language translation. In Proc. the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2018, pp.7784–7793. DOI: 10.1109/CVPR.2018.00812.

[5] Guo D, Zhou W, Li H, Wang M. Hierarchical LSTM for sign language translation. In Proc. the 32nd AAAI Conference on Artificial Intelligence, Feb. 2018. DOI: 10.5555/3504035.3504873.

[6] Orbay A, Akarun L. Neural sign language translation by learning tokenization. In Proc. the 15th IEEE International Conference on Automatic Face and Gesture Recognition, Nov. 2020, pp.222–228. DOI: 10.1109/FG47880.2020.00002.

[7] Yin A, Zhao Z, Liu J, Jin W, Zhang M, Zeng X, He X. SimulSLT: End-to-end simultaneous sign language translation. In Proc. the 29th ACM International Conference on Multimedia, Oct. 2021, pp.4118–4127. DOI: 10.1145/3474085.3475544.

[8] Kan J, Hu K, Hagenbuchner M, Tsoi A C, Bennamoun M, Wang Z. Sign language translation with hierarchical spatio-temporal graph neural network. In Proc. the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision, Jan. 2022, pp.2131–2140. DOI: 10.1109/WACV51458.2022.00219.

[9] Liu T, Zhou W, Li H. Sign language recognition with long short-term memory. In Proc. the 2016 IEEE International Conference on Image Processing, Sept. 2016, pp.2871–2875. DOI: 10.1109/ICIP.2016.7532884.

[10] Murakami K, Taguchi H. Gesture recognition using recurrent neural networks. In Proc. the SIGCHI Conference on Human Factors in Computing Systems, Apr. 1991, pp.237–242. DOI: 10.1145/108844.108900.

[11] Zhou B, Li Y, Wan J. Regional attention with architecture-rebuilt 3D network for RGB-D gesture recognition. In Proc. the 35th AAAI Conference on Artificial Intelligence, Feb. 2021, pp.3563–3571. DOI: 10.1609/aaai.v35i4.16471.

[12] Cui R, Liu H, Zhang C. Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. In Proc. the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Jul. 2017, pp.1610–1618. DOI: 10.1109/CVPR.2017.175.

[13] Camgoz N C, Hadfield S, Koller O, Bowden R. SubUNets: End-to-end hand shape and continuous sign language recognition. In Proc. the 2017 IEEE International Conference on Computer Vision, Oct. 2017, pp.3075–3084. DOI: 10.1109/ICCV.2017.332.

[14] Cui R, Liu H, Zhang C. A deep neural framework for continuous sign language recognition by iterative training. IEEE Trans. Multimedia, 2019, 21(7):1880–1891. DOI: 10.1109/TMM.2018.2889563.

[15] Yin K, Read J. Better sign language translation with STMC-transformer. In Proc. the 28th International Conference on Computational Linguistics, Dec. 2020, pp.5975–5989. DOI: 10.18653/v1/2020.coling-main.525.

[16] Li D, Xu C, Yu X, Zhang K, Swift B, Suominen H, Li H. TSPNet: Hierarchical feature learning via temporal semantic pyramid for sign language translation. In Proc. the 34th International Conference on Neural Information Processing Systems, Dec. 2020, Article No. 1009. DOI: 10.5555/3495724.3496733.

[17] Camgoz N C, Koller O, Hadfield S, Bowden R. Multi-channel transformers for multi-articulatory sign language translation. In Proc. the European Conference on Computer Vision, Aug. 2020, pp.301–319. DOI: 10.1007/978-3-030-66823-5_18.

[18] Zhou H, Zhou W, Zhou Y, Li H. Spatial-temporal multi-cue network for sign language recognition and translation. IEEE Trans. Multimedia, 2022, 24: 768–779. DOI: 10.1109/TMM.2021.3059098.

[19] Guo D, Zhou W, Li A, Li H, Wang M. Hierarchical recurrent deep fusion using adaptive clip summarization for sign language translation. IEEE Trans. Image Processing, 2020, 29: 1575–1590. DOI: 10.1109/TIP.2019.2941267.

[20] Gan S, Yin Y, Jiang Z, Xie L, Lu S. Skeleton-aware neural sign language translation. In Proc. the 29th ACM International Conference on Multimedia, Oct. 2021, pp.4353–4361. DOI: 10.1145/3474085.3475577.

[21] Bantupalli K, Xie Y. American sign language recognition using deep learning and computer vision. In Proc. the 2018 IEEE International Conference on Big Data, Dec. 2018, pp.4896–4899. DOI: 10.1109/BigData.2018.8622141.

[22] Huang J, Zhou W, Li H, Li W. Attention-based 3D-CNNs for large-vocabulary sign language recognition. IEEE Trans. Circuits and Systems for Video Technology, 2019, 29(9):2822–2832. DOI: 10.1109/TCSVT.2018.2870740.

[23] Wang H, Chai X, Hong X, Zhao G, Chen X. Isolated sign language recognition with Grassmann covariance matrices. ACM Trans. Accessible Computing (TACCESS), 2016, 8(4): Article No. 14. DOI: 10.1145/2897735.

[24] Jangyodsuk P, Conly C, Athitsos V. Sign language recognition using dynamic time warping and hand shape distance based on histogram of oriented gradient features. In Proc. the 7th International Conference on Pervasive Technologies Related to Assistive Environments, May 2014, Article No. 50. DOI: 10.1145/2674396.2674421.

[25] Guo D, Zhou W, Wang M, Li H. Sign language recognition based on adaptive HMMs with data augmentation. In Proc. the 2016 IEEE International Conference on Image Processing, Sept. 2016, pp.2876–2880. DOI: 10.1109/ICIP.2016.7532885.

[26] Huang J, Zhou W, Li H, Li W. Sign language recognition using 3D convolutional neural networks. In Proc. the 2015 IEEE International Conference on Multimedia and Expo, Jun. 29–Jul. 3, 2015. DOI: 10.1109/ICME.2015.7177428.

[27] Tang A, Lu K, Wang Y, Huang J, Li H. A real-time hand posture recognition system using deep neural networks. ACM Trans. Intelligent Systems and Technology (TIST), 2015, 6(2): Article No. 21. DOI: 10.1145/2735952.

[28] Huang J, Zhou W, Zhang Q, Li H, Li W. Video-based sign language recognition without temporal segmentation. In Proc. the 32nd AAAI Conference on Artificial Intelligence, Feb. 2018, Article No. 275. DOI: 10.5555/3504035.3504310.

[29] Koller O, Camgoz N C, Ney H, Bowden R. Weakly supervised learning with multi-stream CNN-LSTM-HMMs to discover sequential parallelism in sign language videos. IEEE Trans. Pattern Analysis and Machine Intelligence, 2020, 42(9):2306–2320. DOI: 10.1109/TPAMI.2019.2911077.

[30] Chai X, Li G, Lin Y, Xu Z, Tang Y, Chen X, Zhou M. Sign language recognition and translation with Kinect. In Proc. the IEEE International Conference on Automatic Face and Gesture Recognition, Apr. 2013, Article No. 4.

[31] Gan S, Yin Y, Jiang Z, Xia K, Xie L, Lu S. Contrastive learning for sign language recognition and translation. In Proc. the 32nd International Joint Conference on Artificial Intelligence, Aug. 2023, pp.763–772. DOI: 10.24963/ijcai.2023/85.

[32] Sun K, Xiao B, Liu D, Wang J. Deep high-resolution representation learning for human pose estimation. In Proc. the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2019, pp.5686–5696. DOI: 10.1109/CVPR.2019.00584.

[33] Cao Z, Simon T, Wei S E, Sheikh Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proc. the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Jul. 2017, pp.1302–1310. DOI: 10.1109/CVPR.2017.143.

[34] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In Proc. the 3rd International Conference on Learning Representations, May 2015.

[35] Qiu Z, Yao T, Mei T. Learning spatio-temporal representation with pseudo-3D residual networks. In Proc. the 2017 IEEE International Conference on Computer Vision, Oct. 2017, pp.5534–5542. DOI: 10.1109/ICCV.2017.590.

[36] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, Kaiser Ł, Polosukhin I. Attention is all you need. In Proc. the 31st International Conference on Neural Information Processing Systems, Dec. 2017, pp.6000–6010. DOI: 10.5555/3295222.3295349.

[37] He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In Proc. the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2016, pp.770–778. DOI: 10.1109/CVPR.2016.90.

[38] Koller O, Forster J, Ney H. Continuous sign language recognition: Towards large vocabulary statistical recognition systems handling multiple signers. Computer Vision and Image Understanding, 2015, 141: 108–125. DOI: 10.1016/j.cviu.2015.09.013.

[39] Lin C Y. ROUGE: A package for automatic evaluation of summaries. In Proc. the ACL-04 Workshop on Text Summarization Branches Out, Jul. 2004, pp.74–81.

[40] Papineni K, Roukos S, Ward T, Zhu W J. BLEU: A method for automatic evaluation of machine translation. In Proc. the 40th Annual Meeting on Association for Computational Linguistics, Jul. 2002, pp.311–318. DOI: 10.3115/1073083.1073135.

[41] Camgöz N C, Koller O, Hadfield S, Bowden R. Sign language transformers: Joint end-to-end sign language recognition and translation. In Proc. the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2020, pp.10020–10030. DOI: 10.1109/CVPR42600.2020.01004.

[42] Niu Z, Mak B. Stochastic fine-grained labeling of multi-state sign glosses for continuous sign language recognition. In Proc. the 16th European Conference on Computer Vision, Aug. 2020, pp.172–186. DOI: 10.1007/978-3-030-58517-4_11.

[43] Cheng K L, Yang Z, Chen Q, Tai Y W. Fully convolutional networks for continuous sign language recognition. In Proc. the 16th European Conference on Computer Vision, Aug. 2020, pp.697–714. DOI: 10.1007/978-3-030-58586-0_41.

[44] Hao A, Min Y, Chen X. Self-mutual distillation learning for continuous sign language recognition. In Proc. the 2021 IEEE/CVF International Conference on Computer Vision, Oct. 2021, pp.11283–11292. DOI: 10.1109/ICCV48922.2021.01111.

[45] Zuo R, Mak B. C2SLR: Consistency-enhanced continuous sign language recognition. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.5121–5130. DOI: 10.1109/CVPR52688.2022.00507.

[46] Zhou H, Zhou W, Li H. Dynamic pseudo label decoding for continuous sign language recognition. In Proc. the 2019 IEEE International Conference on Multimedia and Expo, Jul. 2019, pp.1282–1287. DOI: 10.1109/ICME.2019.00223.

[47] Wei C, Zhao J, Zhou W, Li H. Semantic boundary detection with reinforcement learning for continuous sign language recognition. IEEE Trans. Circuits and Systems for Video Technology, 2021, 31(3):1138–1149. DOI: 10.1109/TCSVT.2020.2999384.

[48] Pu J, Zhou W, Hu H, Li H. Boosting continuous sign language recognition via cross modality augmentation. In Proc. the 28th ACM International Conference on Multimedia, Oct. 2020, pp.1497–1505. DOI: 10.1145/3394171.3413931.

[49] Min Y, Hao A, Chai X, Chen X. Visual alignment constraint for continuous sign language recognition. In Proc. the 2021 IEEE/CVF International Conference on Computer Vision, Oct. 2021, pp.11522–11531. DOI: 10.1109/ICCV48922.2021.01134.

[50] Venugopalan S, Rohrbach M, Donahue J, Mooney R, Darrell T, Saenko K. Sequence to sequence—Video to text. In Proc. the 2015 IEEE International Conference on Computer Vision, Dec. 2015, pp.4534–4542. DOI: 10.1109/ICCV.2015.515.

[51] Tang S, Guo D, Hong R, Wang M. Graph-based multimodal sequential embedding for sign language translation. IEEE Trans. Multimedia, 2022, 24: 4433–4445. DOI: 10.1109/TMM.2021.3117124.

[52] Zhou H, Zhou W, Qi W, Pu J, Li H. Improving sign language translation with monolingual data by sign back-translation. In Proc. the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2021, pp.1316–1325. DOI: 10.1109/CVPR46437.2021.00137.
