FaceCLIP: A Text-Based Facial Expression Generation Method


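Abstract: Background

Facial images carry rich information, including identity, gender, emotion, age, and race. Deep learning methods for face-related tasks usually need large amounts of annotated data to perform well, but collecting and manually annotating data at scale is costly. To overcome these limitations, methods that automatically generate facial expression images with desired emotions have attracted considerable attention. Text-based cross-modal facial expression generation uses a computer to automatically and quickly produce highly realistic face images whose facial features and expressions match a textual description, which greatly eases the acquisition of image data. However, facial structure is complex, and facial expressions arise from the coordinated movement of multiple facial muscles, which makes the mapping between texts and expression images difficult to model. Existing methods typically generate new expressions by manipulating source facial images according to textual prompts, so the number of generated expression images is limited by the size of the source face dataset, and the resolution of the generated images is relatively low.

Objective: This study aims to propose a convenient, privacy-conscious facial expression generation method that addresses the problems of existing cross-modal image generation methods and helps researchers build large collections of natural and vivid face and expression images.

Methods: This paper proposes a cross-modal facial expression generation method named FaceCLIP, which generates natural expression images from pure textual descriptions. The method combines a GAN-based multi-stage generative network with semantic priors from CLIP (contrastive language-image pre-training) to progressively generate high-resolution facial expression images that are highly consistent with the text. In addition, we build an expression-text pair dataset, Facial Expression and Text (FET), containing more than 30,000 images. Using multiple evaluation metrics, we compare FaceCLIP quantitatively and qualitatively with state-of-the-art methods on the FET dataset.

Results: In the quantitative comparison, FaceCLIP achieves the best performance on FID (35.52) and R-precision (70.15±0.53), indicating that it generates realistic facial expression images that best match the descriptions. On LPIPS, FaceCLIP outperforms the AttnGAN, DM-GAN, and UMDM models, demonstrating its ability to generate relatively diverse facial expression images. In the qualitative comparison, the visualized results show that images generated by FaceCLIP improve in both quality and semantic consistency. By exploiting strong semantic priors from multi-modal textual and visual cues, the model effectively disentangles facial attributes, enabling attribute editing and semantic reasoning.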


Keywords:

- text-based expression generation

- contrastive language-image pre-training (CLIP) model

- multi-stage

- generative adversarial network

Abstract:Facial expression generation from pure textual descriptions is widely applied in human-computer interaction, computer-aided design, assisted education, etc. However, this task is challenging due to the intricate facial structure and the complex mapping between texts and images. Existing methods face limitations in generating high-resolution images or capturing diverse facial expressions. In this study, we propose a novel generation approach, named FaceCLIP, to tackle these problems. The proposed method utilizes a CLIP-based multi-stage generative adversarial model to produce vivid facial expressions with high resolutions. With strong semantic priors from multi-modal textual and visual cues, the proposed method effectively disentangles facial attributes, enabling attribute editing and semantic reasoning. To facilitate text-to-expression generation, we build a new dataset called the FET dataset, which contains facial expression images and corresponding textual descriptions. Experiments on the dataset demonstrate improved image quality and semantic consistency compared with state-of-the-art methods.


1. Introduction

Facial expression analysis plays an important role in the field of human-computer interaction, as it enables computers to interpret and respond to human emotions. However, the performance of facial expression analysis is often influenced by various attributes, including identity, gender, age, and race. Although deep learning methods achieve promising results in this domain[1, 2], they require a substantial amount of annotated data for training. Unfortunately, existing facial expression datasets face challenges such as outdated low-resolution images or limited access due to privacy concerns[3–7], impeding further improvements in related studies. Moreover, manually collecting and annotating such large-scale datasets is a resource-intensive and expensive task. To overcome these limitations, there is growing attention to the methods that automatically generate facial expression images with desired emotions.

Generating lively and natural facial expressions is an important challenge, and a crucial aspect of this task is the ability to generate realistic faces. Recent research on face image generation focuses on using descriptive information from various modalities, including natural language, as conditions to guide the generation process. Natural language is simple and universal, but current text-to-face generation methods[8, 9] fall short in aspects such as facial texture and eye details, resulting in unrealistic facial images. Additionally, some methods show an incomplete understanding of the text, producing facial images that deviate from the intended meaning of the text on certain attributes[10].

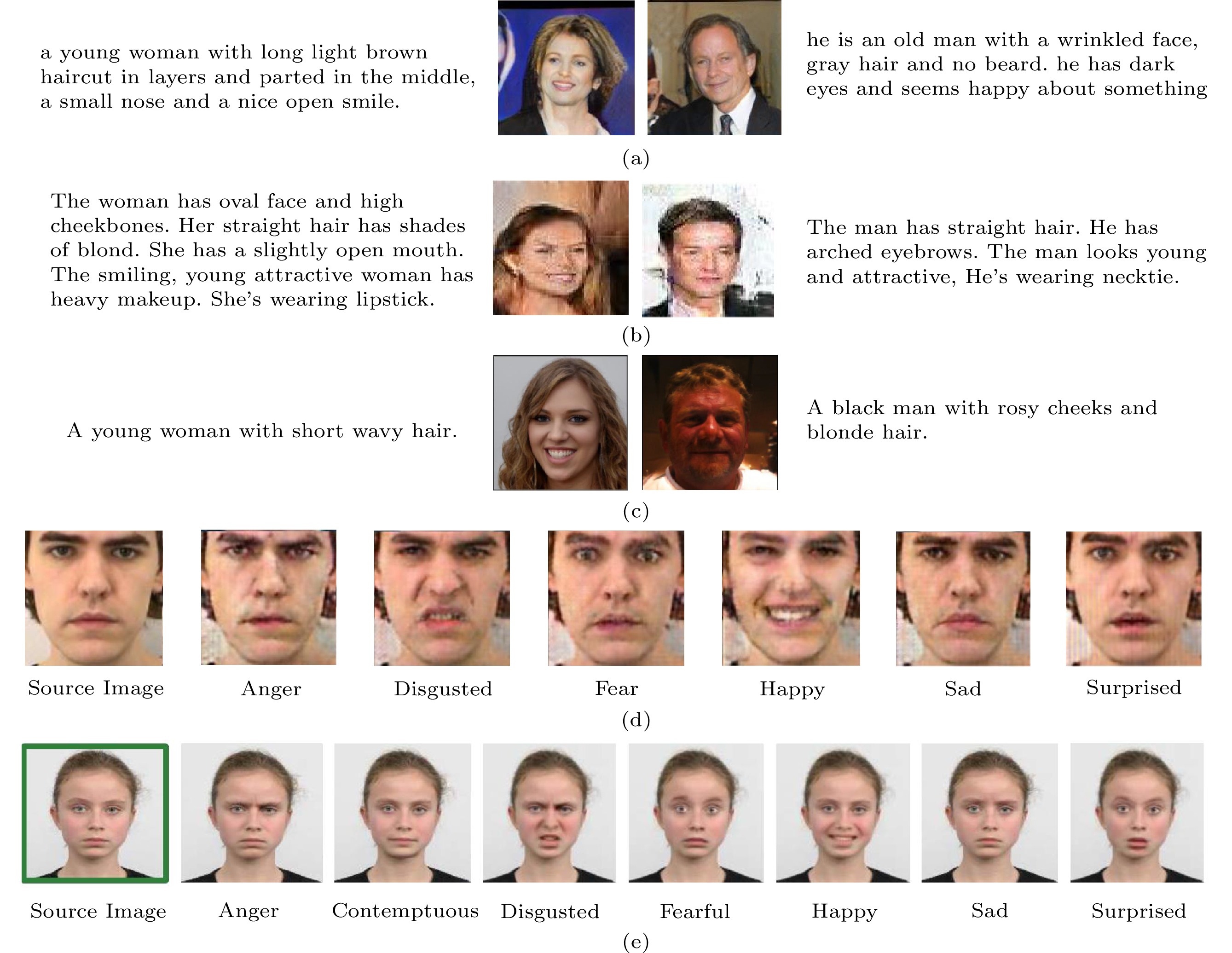

For the facial expression generation problem, existing methods typically rely on manipulating the source facial images based on textual prompts to generate new expressions, but they do have limitations. For example, the number of generated expression images is restricted by the size of source facial datasets, and the resolution of the generated images remains relatively low[11, 12]. Examples of the aforementioned face and expression generation challenges are visualized in Fig.1.

Figure 1. Generated images using the existing methods. (a) Low-quality facial images generated from text using T2F¹. (b) Low-quality facial images generated from text using Text2FaceGAN[9]. (c) Problematic facial images generated by StyleT2F[10]. (d) Six facial expressions derived from editing the source image using Emoreact[11]. (e) Seven facial expressions generated by editing the source image using GANimation[12].

Another critical issue in facial expression generation from text cues is the lack of text-face pairs dataset describing diverse expressions in natural language. Current face datasets with textual descriptions, such as the CelebTD-HQ dataset[13], only cover one type of emotion (i.e., smiling) instead of the seven common emotion labels used for expression recognition. Thus, these text-to-face methods[14, 15] have difficulties in comprehending expression labels and generating diverse expressions accurately due to the lack of training datasets.

Furthermore, for cross-modal tasks involving texts and images, handling and mapping intricate relationships between textual and visual elements poses a significant challenge. In particular, the relation between texts and expressions is especially complex, as texts describe various aspects of facial expressions, such as emotions, facial attributes, and gender, while expressions described by the same text may exhibit diverse forms and features. Thus, effectively capturing the relationship between texts and images with expressions is crucial to text-to-expression generation.

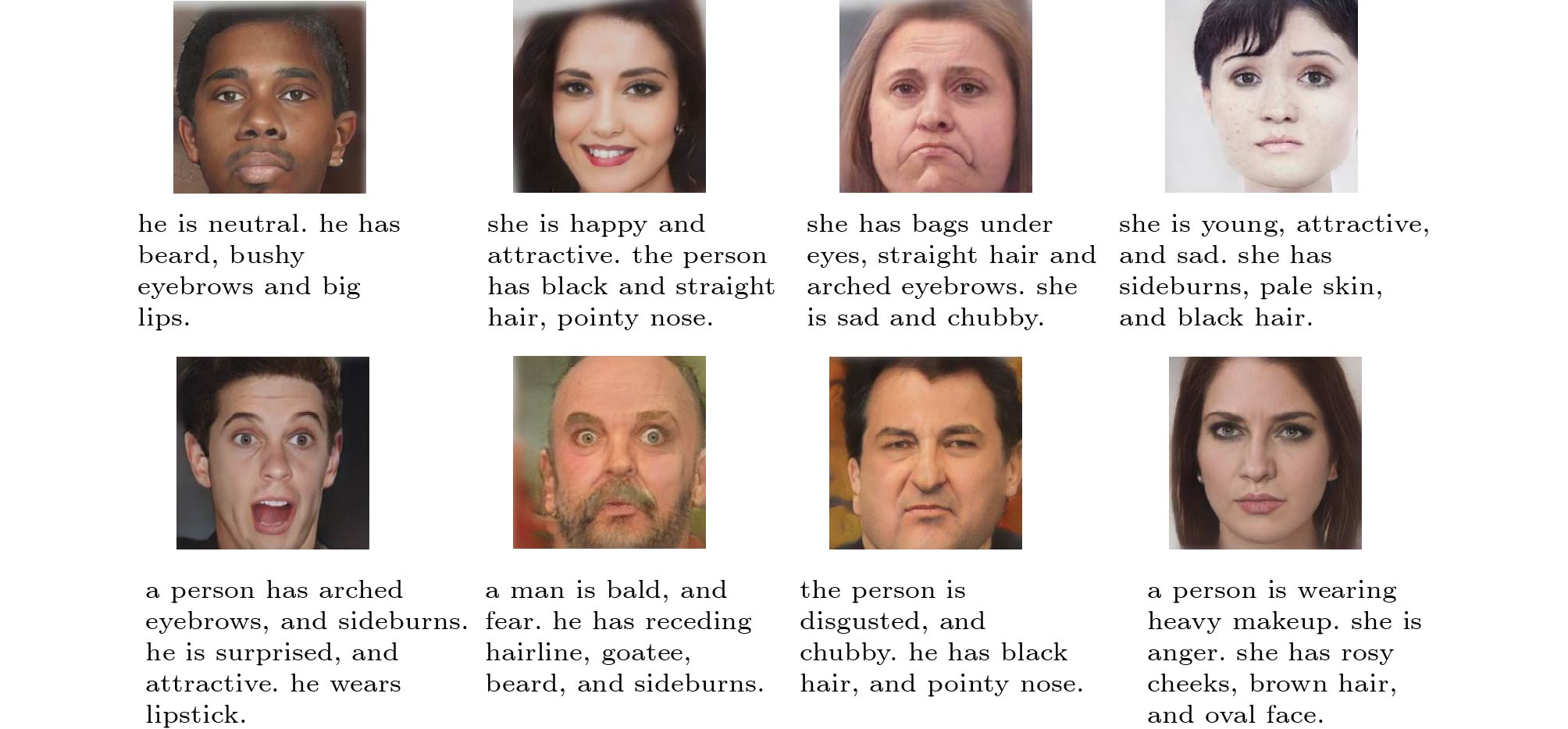

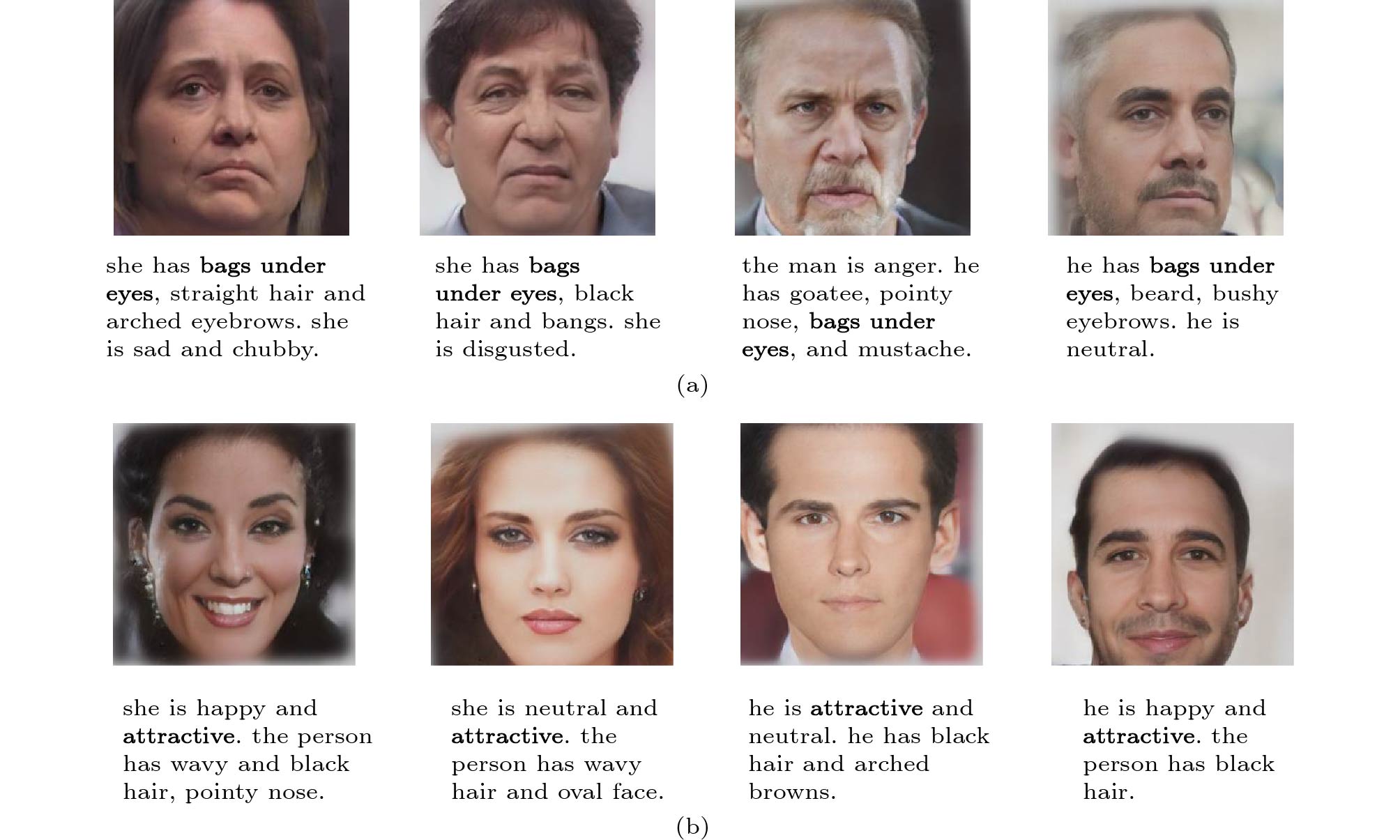

To address these issues, we propose a novel text-based facial expression generation method called FaceCLIP. The proposed method generates expression images of virtual faces that do not exist in the real world by leveraging natural language descriptions containing various facial attributes, such as expressions, makeup, bags under eyes, nose, and hair (see Fig.2). Additionally, the FaceCLIP method can efficiently synthesize large facial expression databases, which provides sufficient data support for face-related research without compromising facial privacy. Moreover, we build a middle-sized facial expressions and texts (FET) dataset with emotion descriptions based on two expression datasets: AffectNet[7] and RAF-DB[6]. For each image, we use a probabilistic context-free grammar (PCFG) algorithm to generate ten different descriptions based on manually annotated facial attributes. Our approach is trained on the FET dataset and achieves effective expression generation from texts.

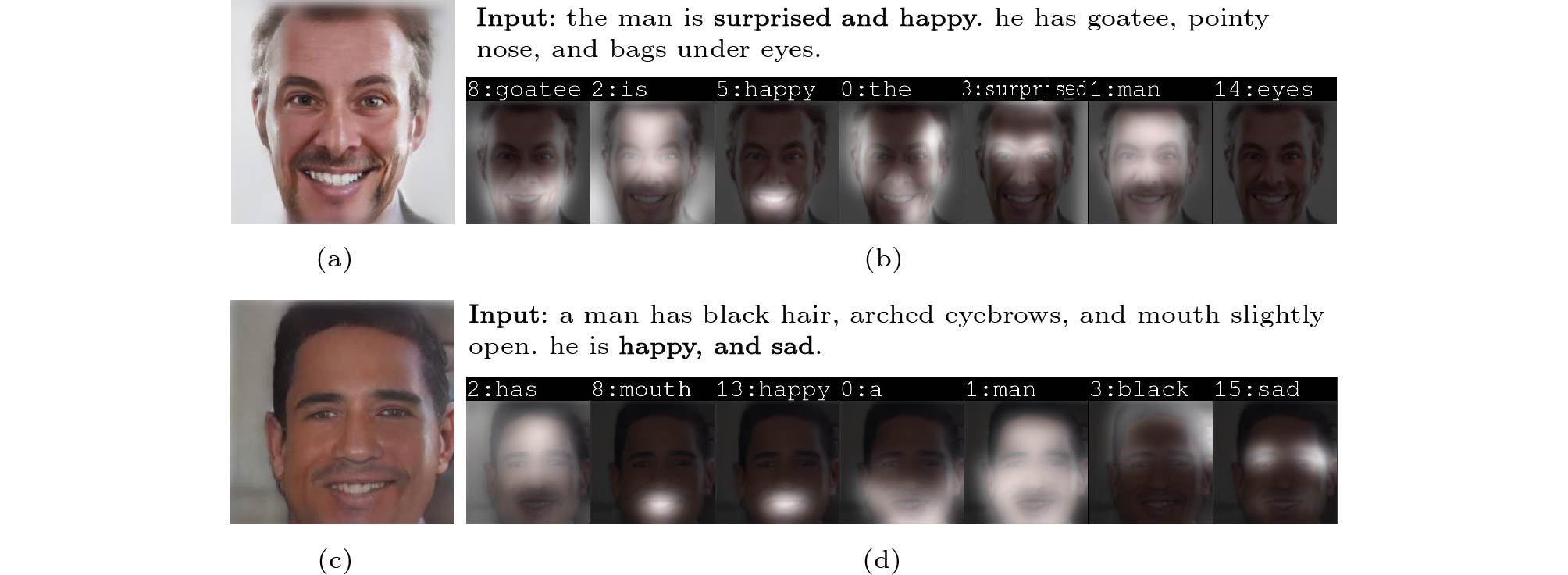

We also introduce contrastive language-image pre-training (CLIP) to mitigate the semantic gap between texts and facial expressions, aligning the generated images with the user's specified requirements on various attributes. The CLIP model[16] is trained on 400 million text-image pairs collected from various public sources. This model learns a multi-modal common embedding space that estimates semantic similarity between given texts and images. The CLIP model has been successfully applied to text-image and text-video tasks[17–21], which demonstrates its exceptional capabilities in learning textual and visual representations. With its natural language parsing ability, the proposed method is able to understand and disentangle the semantics of facial attributes, allowing the generated images to exhibit complex attribute combinations, e.g., “happy and surprised”. Examples are illustrated in Fig.3. Moreover, based on the semantics of the given attributes, the proposed approach is capable of inferring other facial attributes that contribute to visually harmonious and realistic face generation.

Figure 3. Examples of facial images depicting complex combinations of attributes. (a) Generated image of the compound expression “happy and surprised”. (b) Corresponding attention maps for the compound expression “happy and surprised”. (c) Generated image of the compound expression “happy and sad”. (d) Corresponding attention maps for the compound expression “happy and sad”.

The main contributions of this study are as follows.

• This study proposes a GAN-based method to generate facial expression images from pure texts. The proposed method introduces the semantic priors of the CLIP model to enhance its understanding of attribute semantics and ensure visually consistent image generation. With semantic disentanglement, the method achieves attribute operations at the semantic level. Additionally, the incorporation of the CLIP model introduces semantic reasoning abilities, enabling the resulting images to be visually realistic and credible.

• This study builds a facial expressions and texts dataset called FET, which contains approximately 30000 expression images from the AffectNet and RAF-DB datasets. We include seven common expressions and 38 attributes. Each image has 10 natural language descriptions generated based on the attribute list. The dataset is publicly available, providing data support for future research in the text-to-expression domain.

• This study evaluates the performance of our proposed method on the FET testing set using multiple metrics. Extensive experiments demonstrate that the proposed method outperforms the current state-of-the-art GAN-based models.

2. Related Work

Our work focuses on text-to-expression generation and the employment of the multi-modal prior in boosting the generation performance. In this section, we will discuss related work in three aspects: text-to-image generation, facial image and expression generation, and CLIP-based cross-domain relation modeling.

2.1 Text-To-Image Generation

Generative adversarial networks (GANs), first proposed by Goodfellow et al.[22], generate images through adversarial training. Based on this work, Mirza and Osindero[23] proposed conditional generative adversarial networks (CGANs), which incorporate label information as input into both the generator and discriminator networks. CGANs guide image generation through specific conditions, making it possible to generate more diverse and realistic content by leveraging the relationships between different modalities, especially between texts and images.

Generating high-resolution images that resemble real-world subjects has been a long-standing challenge. Many GAN-based variants have emerged to deal with the low-quality problem. Mansimov et al.[24] proposed alignDRAW, which combines the Deep Recurrent Attention Writer (DRAW) model and the soft attention mechanism with word alignment to address the lack of semantic correlation between linguistic and visual domains. However, alignDRAW sharpens generated images using a GAN only in the post-processing step, resulting in rough and blurry images with a resolution of only 36×36. Reed et al.[25] introduced the text-conditional convolutional GAN model, which is the first attempt to use the GAN as the backbone network for text-to-image generation. The model utilizes a recurrent neural network structure to learn discriminative text features, which are then used as constraints for the generator and discriminator to produce the low-resolution 64×64 images.

To overcome the inability of a single CGAN model in generating high-resolution images, the StackGAN model proposed by Zhang et al.[26] stacks two generative networks to produce realistic images with a resolution of 256×256. The model generates low-resolution outputs of 64×64 that meet specified text conditions in the first stage, and refines the images to a higher-resolution of 256×256 in the second stage. However, the model cannot be trained end-to-end and loses word-level cues by using global sentence vectors as conditions, leading to the loss of corresponding details about the images. To address these limitations, Xu et al.[27] proposed an attentional generative adversarial network (AttnGAN) that introduces attention mechanisms in multi-stage GANs. The AttnGAN model leverages multi-level conditions (e.g., word-level and sentence-level) to progressively generate fine-grained, high-quality images, starting from a resolution of 64×64, then 128×128, and finally 256×256. The model also proposes a deep attentional multi-modal similarity model (DAMSM) to calculate the matching similarity between the images generated in the final stage and the corresponding texts, enabling parallel training of generators and discriminators from the perspectives of image-text matching and image quality. Since then, attention and multi-stage methods have been widely adopted in text-to-image cross-modal generation to produce high-quality images[28, 29]. However, these methods only associate words with certain regions in the spatial domain, while the channel features also contain semantic connections between image parts and words. To address this, ControlGAN[30] suggests a multi-stage generator driven by word-level spatial- and channel-wise attention, allowing for the generation of images with progressive resolution similar to AttnGAN. 
This model can modify only the image attributes associated with the altered parts of the sentence, while keeping the other attributes and the background of the image unchanged.

In order to synthesize images with multiple objects and complex backgrounds from textual descriptions, Fang et al.[31] proposed a comprehensive pipeline method, which involves parsing foreground and background objects from the input text and then retrieving existing images of objects from the Microsoft COCO dataset and a background scene image dataset. This approach employs constrained MCMC to ensure the reasonableness of the object layout in the synthesized image. However, the diversity of image synthesis using this method is largely influenced by the underlying dataset used for retrieval.

2.2 Facial Image and Expression Generation

While the above methods have achieved high-quality image generation from textual descriptions, much work focuses on generating images of flowers, animals, and landscapes by learning from datasets such as Oxford-102[32], CUB[33], and COCO[34]. Efficient and high-quality facial image generation is essential in many domains, such as visual effects production, virtual reality, computer-aided design, assisted education, and security control. Generating facial images requires particular attention to facial structure and attributes, thus remaining a challenge.

To generate realistic faces, existing methods often rely on images of basic facial structures, such as sketches or semantic labels, to synthesize photo-quality facial images[35–40]. Alternatively, faces are generated based on source facial images, with text guiding changes for the style and facial attributes of subjects[17, 41, 42]. Specifically, TediGAN[14] is a method based on the StyleGAN[41] framework that integrates generation and manipulation into one structure and supports multiple modalities, including texts, source images, sketches, and labels. The model is capable of generating and manipulating high-resolution facial images (with a resolution of 1024×1024). Despite its capabilities, TediGAN requires training a separate model for each language input, and the results generated from the same sentence are highly random.

Vivid and natural facial expression generation is highly beneficial for expressive avatar creation and immersive human-computer interaction. Some facial expression generation methods also utilize single source images as conditions to produce realistic results, but the number of generated images is limited by the number of source images, and the resolutions also need to be improved. For example, GANimation[12] introduces a GAN-based model to edit source facial images to generate new expressions with text prompts. The model conditions on anatomical muscle movements and controls the activation level of each action unit (AU) to manipulate the continuous facial expressions on the sources. This method also employs an attention mechanism during the generation process, making the model robust to changing backgrounds and lighting conditions. While GANimation supports manipulation of different faces and expressions and has strong generality and scalability, its generation quality is unstable.

2.3 CLIP-Based Cross-Domain Relation Modeling

With the advancements of multi-modal deep learning, relation modeling between vision and language has become a prominent research direction. The CLIP model proposed by OpenAI is a significant milestone in the field as it establishes a shared subspace for both vision and language, achieving cross-modal comprehension between the two domains through contrastive learning. Based on the model, researchers are exploring the usage of the cross-domain semantic priors for various visual generation tasks.

In 2021, Patashnik et al.[17] developed the StyleCLIP model that combines the powerful image disentanglement capability of StyleGAN and the extraordinary linguistic and visual coding ability of CLIP. This approach allows for customized image generation by modifying the input latent vector based on users' textual prompts. However, the ambiguity and vagueness of texts may result in errors and inaccuracies for the generated images in some cases. Additionally, StyleCLIP relies on source images as the basis for operation, limiting its application. Wei et al.[43] introduced the HairCLIP model, which focuses on hair editing using a combination of the StyleGAN and CLIP models. Based on the shared latent space of the CLIP model, HairCLIP takes text cues or/and reference images as prompts for hair editing. This approach provides more flexibility and control over hair style and color modifications.

However, these approaches for cross-domain modeling between texts and images require a large number of text-image pairs for model training. To overcome the dependence on high-quality image-text pairs, Zhou et al.[21] introduced a method to train a text-to-image generation model without any text by employing the joint multi-modal feature space of the pre-trained CLIP model, reducing the demand for textual descriptions and training time. However, this approach has limitations in generating diverse and expressive facial expressions.

In addition to its successful applications in image generation from texts, the CLIP model has also been extended to text-to-action generation and audio tasks. MotionCLIP[44] is an interactive text-driven action video generation model that utilizes a transformer-based autoencoder and CLIP to inject rich semantic knowledge into the human motion manifold. By aligning the latent space of motions with those of texts and images in CLIP, the model achieves continuity and disentanglement of actions. Although the motion domain is unseen by CLIP, MotionCLIP is still capable of generating sophisticated and personalized actions from text prompts and responding correctly to words that require semantic understanding.

In the field of audio analysis, Guzhov et al.[45] proposed AUDIOCLIP, a CLIP-assisted audio classification and retrieval model that extends the CLIP model to the audio domain. Using an architecture similar to CLIP, the model maps audio, images, and texts into a shared subspace, achieving cross-domain understanding among multiple modalities. By learning the correspondence among texts, images, and audio in the shared subspace simultaneously, the model enhances its ability to utilize multiple modalities for audio classification and enables zero-shot inference on previously unseen datasets. These successful applications demonstrate the potential of the CLIP model in various tasks and domains.

3. Text to Facial Expression Generation Using CLIP-Based GAN

In this study, we aim to investigate the relations among linguistic descriptions, facial attributes and facial expressions, and to study high-quality facial expression generation from the input texts. To facilitate our research, we construct a new dataset named Facial Expressions and Text (FET), which consists of expression images and their corresponding textual descriptions. The proposed approach employs the multi-stage GANs as the backbone network and leverages the CLIP model to introduce the semantic priors of the images and texts relations, which enables the synthesis of highly realistic and diverse facial expressions from textual descriptions.

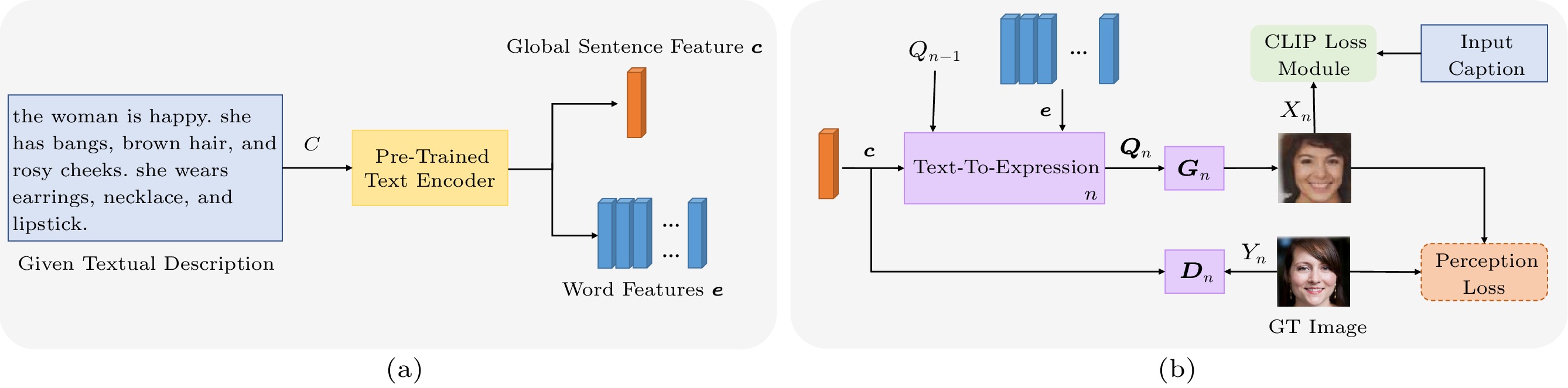

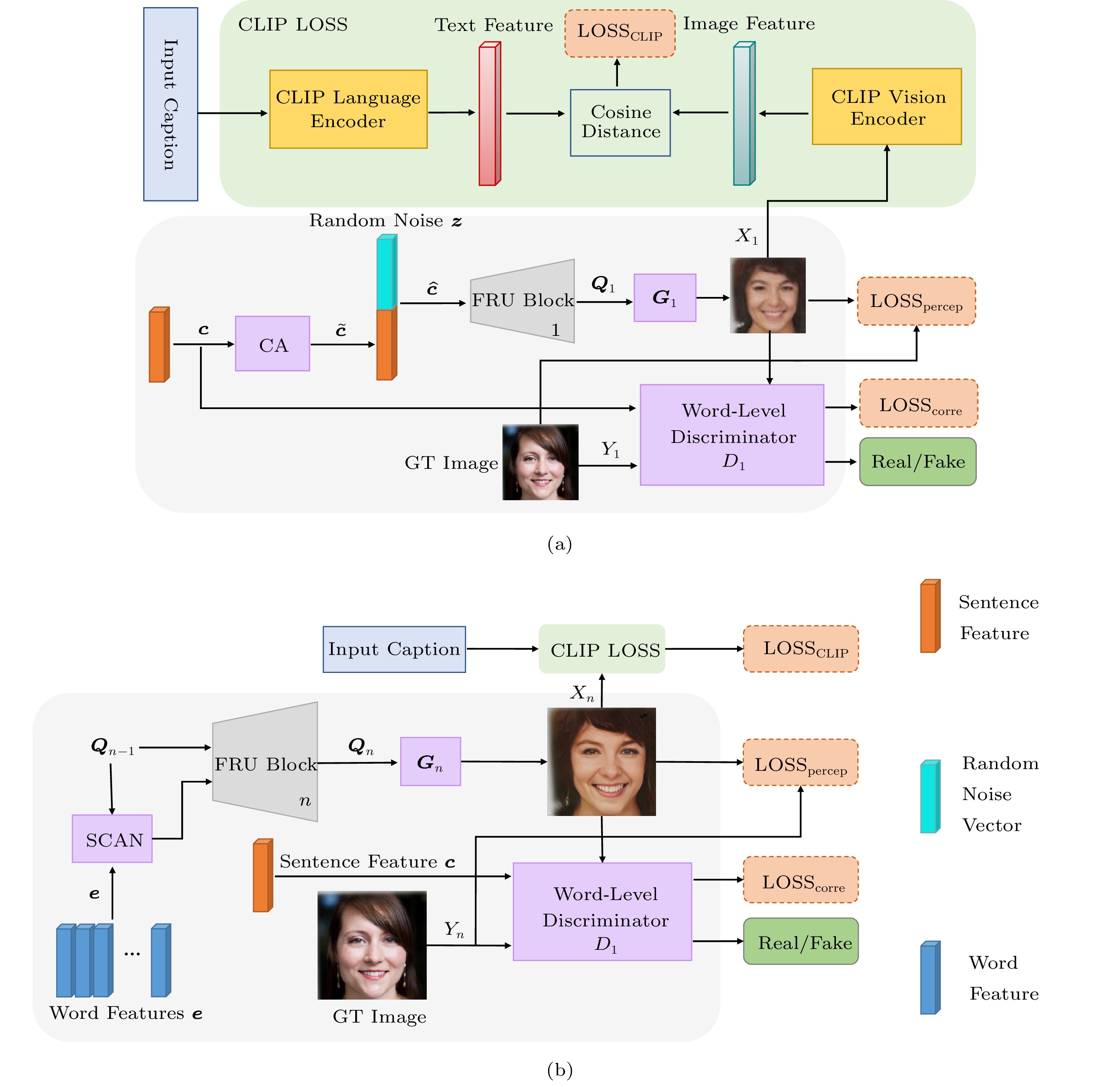

The proposed method consists of three generation stages, where the resolutions of the generated images increase stage by stage, from 64×64×3 to 128×128×3 to 256×256×3. In each generation stage, the text-to-expression module maps the features extracted from the input descriptions into latent features, which are further processed to generate images by the generator. The overall architecture of the method is illustrated in Fig.4.
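The resolution schedule can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the counts given in Section 3.2 (four up-sampling operations in FRU block 1, one in each later FRU block), and it assumes that each up-sampling doubles the spatial size and that the first stage starts from a 4×4 feature map. Both of these are assumptions, and `stage_resolutions` is a hypothetical helper name.

```python
def stage_resolutions(base_size=4, first_stage_upsamples=4, later_stages=2):
    """Return the output side length of each generation stage.

    Assumptions (not stated in the paper): the first stage starts from a
    base_size x base_size feature map, and every up-sampling operation
    doubles the spatial size.
    """
    size = base_size * 2 ** first_stage_upsamples  # FRU block 1
    sizes = [size]
    for _ in range(later_stages):
        size *= 2  # each later FRU block applies one up-sampling
        sizes.append(size)
    return sizes


resolutions = stage_resolutions()
for n, side in enumerate(resolutions, start=1):
    print(f"stage {n}: {side}x{side}x3")
```

Under these assumptions the schedule reproduces the 64, 128, and 256 pixel side lengths stated above.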

Figure 4. Overall architecture of the proposed FaceCLIP method. (a) Stage of feature extraction for textual descriptions. (b) The $n$-th ($1 \leqslant n \leqslant 3$) stage of expression generation. $\boldsymbol{c}$ and $\boldsymbol{e}$ represent the global sentence feature and the word features extracted from the given description $C$ of facial attributes using the pre-trained bi-LSTM based text encoder, respectively. The text-to-expression module transforms the input text features $\{\boldsymbol{c},\ \boldsymbol{e}\}$ and the latent feature $\boldsymbol{Q}_{n-1}$ from the previous stage into a new latent feature $\boldsymbol{Q}_n$ through the attention mechanism and convolution transitions, and then the feature $\boldsymbol{Q}_n$ is fed into generator $G_n$ to produce the output image $X_n$. $Y_n$ is the ground truth image, and $D_n$ is the $n$-th discriminator.

3.1 Facial Expressions with Texts Dataset

We analyze several facial expression datasets and build the FET dataset by combining two of them. The statistics of the five facial expression datasets are listed in Table 1. From the table, it is evident that the AffectNet dataset offers the largest number of training samples, therefore we select a subset of 32842 images from AffectNet[7]. To ease the unbalanced class problem, we choose 983 high-quality images from the RAF-DB dataset[6] to form a dataset with a similar scale to that of TediGAN[14]. The final FET dataset comprises a total of 33825 images, each of which is annotated with 38 attributes and seven facial expression labels.

Table 1. Statistics of Widely Used Facial Expression Datasets

| Dataset | Number of Images | Number of Basic Expressions | Resolution | Color | Published Year |
|---|---|---|---|---|---|
| JAFFE[3] | 213 | 7 | 256 × 256 | ✘ | 1998 |
| KDEF[4] | 4900 | 7 | 562 × 762 | ✔ | 1998 |
| FER_2013[5] | 35887 | 7 | 48 × 48 | ✘ | 2013 |
| RAF-DB[6] | 15339 | 7 | 33 × 49\* → 2147 × 3221† | ✔ | 2017 |
| AffectNet[7] | 318969 | 8 | 130 × 130\* → 4724 × 4724† | ✔ | 2017 |

Note: Marker \* means the minimum resolutions of the facial images. Marker † means the maximum resolutions of the facial images.

To enhance the quality of the dataset, we preprocess the images by re-aligning the faces using rotation and padding[46], and uniformly re-scale them to the resolution of $1024 \times 1024$. Then, we annotate these expression images with a label size of $L \times 46$, where $L$ represents the total number of images and 46 corresponds to the number of image attributes, including one column for image names, 38 columns for facial attributes, and seven columns for facial expressions. For each image in the FET dataset, the attribute is labeled as $1$ if it holds for the subject; otherwise, it is labeled as $-1$. Additionally, the facial expressions are labeled based on the expression labels provided by the two datasets.
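To make the $L \times 46$ label layout concrete, the following sketch builds one annotation row. It is a minimal illustration, not the authors' annotation tool: the attribute column names are placeholders, the file name is invented, and encoding the seven expression columns with the same $+1/-1$ convention is an assumption (the paper only says that expression labels come from the two source datasets).

```python
# Hypothetical column layout: 1 name + 38 attributes + 7 expressions = 46.
ATTRIBUTE_NAMES = [f"attr_{i:02d}" for i in range(38)]  # placeholder names
EXPRESSION_NAMES = ["neutral", "happy", "sad", "surprised",
                    "fear", "disgusted", "anger"]


def make_annotation_row(image_name, present_attributes, expression):
    """Build one row: the image name followed by +1/-1 flags per column."""
    if expression not in EXPRESSION_NAMES:
        raise ValueError(f"unknown expression: {expression}")
    # +1 if the attribute holds for the subject, -1 otherwise.
    attribute_flags = [1 if name in present_attributes else -1
                       for name in ATTRIBUTE_NAMES]
    # Assumption: expressions reuse the +1/-1 convention, one column each.
    expression_flags = [1 if name == expression else -1
                        for name in EXPRESSION_NAMES]
    return [image_name] + attribute_flags + expression_flags


row = make_annotation_row("img_000001.jpg", {"attr_03", "attr_17"}, "happy")
print(len(row))           # number of columns in the row
print(row[1:].count(1))   # number of positive flags
```

Stacking $L$ such rows gives the full annotation table.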

To facilitate automatic generation of text annotations, we classify facial characteristics into three sets of attributes according to the verbs they follow[13]. For example, attributes like “arched eyebrows”, “beard”, and “straight hair” serve as objects of the verb “has”, and thus they belong to the set “has_attrs”. Furthermore, a set “subject” is also included in the components for text annotations. Specifically, the attribute “gender” describes the subject in the text and belongs to the “subject” set. Further details about these annotated attributes can be found in Table 2. The column “component” denotes the members of textual descriptions according to the grammar rules of the PCFG algorithm. The column “attributes” presents all attribute options used for annotation. Using these categorized attribute sets, we then employ the PCFG algorithm to generate 10 different descriptions for each image by randomly sampling the attributes of the image. Examples of text-image pairs in the FET dataset are visualized in Fig.5.
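The description-generation step can be sketched as a toy probabilistic grammar. The rules, their probabilities, and the example image below are invented for illustration and are not the grammar used to build FET. Only the component names (`subject`, `has_attrs`, `wear_attrs`, `is_attrs`) and the target of ten distinct descriptions per image come from the text.

```python
import random

# Toy grammar (an assumption): non-terminal -> list of (probability, expansion).
GRAMMAR = {
    "S": [
        (0.4, ["SUBJ", "is", "IS", "and", "has", "HAS", "."]),
        (0.3, ["SUBJ", "has", "HAS", "and", "wears", "WEAR", "."]),
        (0.3, ["SUBJ", "wears", "WEAR", "and", "is", "IS", "."]),
    ],
    "SUBJ": [(0.5, ["The", "PERSON"]), (0.5, ["This", "PERSON"])],
}


def expand(symbol, bindings, rng):
    """Recursively expand a symbol into a list of words."""
    if symbol in bindings:          # slot filled by a sampled attribute
        return [bindings[symbol]]
    if symbol not in GRAMMAR:       # terminal word
        return [symbol]
    rules = GRAMMAR[symbol]
    weights = [p for p, _ in rules]
    _, expansion = rng.choices(rules, weights=weights, k=1)[0]
    words = []
    for child in expansion:
        words.extend(expand(child, bindings, rng))
    return words


def generate_descriptions(image_attrs, count=10, seed=0, max_attempts=1000):
    """Sample distinct descriptions by randomly picking the image's attributes."""
    rng = random.Random(seed)
    descriptions = []
    for _ in range(max_attempts):
        if len(descriptions) == count:
            break
        bindings = {
            "PERSON": image_attrs["subject"],
            "HAS": rng.choice(image_attrs["has_attrs"]),
            "WEAR": rng.choice(image_attrs["wear_attrs"]),
            "IS": rng.choice(image_attrs["is_attrs"]),
        }
        text = " ".join(expand("S", bindings, rng)).replace(" .", ".")
        if text not in descriptions:
            descriptions.append(text)
    return descriptions


example_image = {
    "subject": "woman",
    "has_attrs": ["wavy hair", "arched eyebrows", "high cheekbones"],
    "wear_attrs": ["lipstick", "earrings"],
    "is_attrs": ["happy", "young"],
}
descriptions = generate_descriptions(example_image)
for line in descriptions:
    print(line)
```

Sampling the attributes and the rule independently is what makes the ten descriptions of one image differ from each other while staying consistent with its annotation.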

Table 2. Attributes of Collected Facial Expressions and Text (FET) Dataset

| Component | Attribute |
|---|---|
| subject | Gender (male & female) |
| has_attrs | Pale skin, oval face, arched eyebrows, bushy eyebrows, narrow eyes, bags under eyes, high cheekbones, rosy cheeks, pointy nose, big nose, mouth slightly open, big lips, beard, mustache, goatee, double chin, receding hairline, bangs, sideburns, straight hair, wavy hair, blond hair, black hair, brown hair, gray hair |
| wear_attrs | Necklace, necktie, eyeglasses, heavy makeup, earrings, hat, lipstick |
| is_attrs\* | Facial attribute: attractive, young, chubby, bald, smiling. Facial expression: neutral, happy, sad, surprised, fear, disgusted, anger |

Note: Marker \* denotes that attributes corresponding to “is_attrs” encompass two categories, pertaining to facial attributes and facial expressions, respectively.

However, the AffectNet dataset contains unbalanced emotion classes because people are reluctant to display negative emotions publicly (e.g., disgust and fear have relatively fewer samples). To address this problem, we horizontally flip the images of these under-represented classes in the training set to balance the data distribution while preserving the original emotions of the faces. The detailed composition of the FET dataset is listed in Table 3.
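The flipping step is simple enough to sketch directly. The code below is an illustration rather than the authors' pipeline: images are represented as nested lists of pixel values, and `hflip` and `augment_with_flips` are hypothetical helper names. The per-class counts are the ones reported for fear and disgust in Table 3.

```python
def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def augment_with_flips(images, num_flips):
    """Append flipped copies of the first num_flips images."""
    flipped = [hflip(image) for image in images[:num_flips]]
    return images + flipped


tiny = [[1, 2, 3],
        [4, 5, 6]]
print(hflip(tiny))

# (original training images, flipped copies) as reported in Table 3.
TABLE3_MINORITY = {"fear": (2285, 2285), "disgust": (2420, 2410)}
totals = {name: originals + flips
          for name, (originals, flips) in TABLE3_MINORITY.items()}
print(totals)
```

A horizontal flip changes the pixel layout but not the expression, which is why it can add training samples without relabeling.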

Table 3. Detailed Composition of Image Quantities Across Expressions in the Collected FET Dataset

| Expression | Gender: Male | Gender: Female | Split: Training Set | Split: Testing Set |
|---|---|---|---|---|
| Neutral | 3030 | 2809 | 4600 | 1239 |
| Happy | 1981 | 3854 | 4600 | 1235 |
| Sad | 2913 | 2739 | 4600 | 1052 |
| Surprise | 2728 | 2614 | 4600 | 742 |
| Fear | 1248 | 1337 | 2285 + 2285† = 4570 | 300 |
| Disgust | 1425 | 1295 | 2420 + 2410† = 4830 | 300 |
| Anger | 4175 | 1677 | 4600 | 1252 |
| Total | 17500 | 16325 | 32400 | 6120 |

Note: The figures annotated with a dagger symbol (†) are images derived by horizontally flipping to compensate for an imbalance in the training dataset. The “Male” and “Female” columns specify the quantities of male and female images, respectively. The “Training Set” and “Testing Set” columns specify the total numbers of images in the training set and the testing set, respectively.

3.2 FaceCLIP

The proposed FaceCLIP method adopts the multi-stage generative adversarial network[27], which comprises three stages that progressively refine the quality and resolution of the generated images. In the first stage, we extract text features from the given input and use them, together with noise, as seeds to generate low-quality facial expression images through a GAN. To explore the relations between images and attributes, we pre-train an Inception_v3-based image encoder $E_{\mathrm{img}}$ and a bi-LSTM based text encoder $E_{\mathrm{txt}}$ on the FET dataset. The pre-trained text encoder extracts word features $\boldsymbol{e}$ and a global sentence feature $\boldsymbol{c}$ from the input description $C$, which specifies the facial attributes and emotions desired by the user. Conditioning augmentation (CA)[26] enhances training data by re-sampling input sentence vectors from independent Gaussian distributions to avoid over-fitting due to limited text data. In this study, we also apply CA to the sentence feature $\boldsymbol{c}$ to obtain $\widetilde{\boldsymbol{c}}$. Next, we concatenate $\widetilde{\boldsymbol{c}}$ with a random noise vector $\boldsymbol{z}$ to obtain the conditional feature vector $\widehat{\boldsymbol{c}}$. The feature $\widehat{\boldsymbol{c}}$ is further processed by FRU block 1, which consists of a fully connected layer and four up-sampling operations and produces a latent vector $\boldsymbol{Q}_1$. The feature $\boldsymbol{Q}_1$ is fed into the first generator $G_1$, which generates low-resolution expression images of $64\times64$ pixels. This process is presented in Fig.6(a) and can be denoted as follows:

Figure 6. Details of the proposed FaceCLIP model. (a) The first stage of facial expression generation. (b) The following $n$-th ($1 < n \leqslant 3$) stage of expression generation using an attention module. $\widetilde{\boldsymbol{c}}$ is the sentence vector processed through CA, and $\widehat{\boldsymbol{c}}$ is the conditional feature vector obtained by concatenating $\widetilde{\boldsymbol{c}}$ with a random noise vector $\boldsymbol{z}$. FRU blocks involve operations with fully connected layers, residual connections, and up-sampling. SCAN denotes attention networks. Each stage is optimized using adversarial losses, a CLIP-based loss, a perceptual loss, and a word-level relevant loss.

$$
\begin{array}{l}
\boldsymbol{e},\ \boldsymbol{c} = E_{\mathrm{txt}}(C), \\
X_1 = G_1(FRU_1([\boldsymbol{z},\ CA(\boldsymbol{c})])),
\end{array}
$$

where $E_{\mathrm{txt}}(\cdot)$ is the pre-trained bi-LSTM text encoder, $G_1$ is the first generator, $X_1$ is the low-resolution image generated in the first stage, $CA(\cdot)$ denotes the conditioning augmentation, $[\cdot]$ represents the vector concatenation operation, and $FRU_1(\cdot)$ denotes the operations of FRU block 1.
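The construction of the conditional feature $[\boldsymbol{z},\ CA(\boldsymbol{c})]$ can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: following the usual conditioning augmentation formulation, the augmented vector is sampled as mean plus standard deviation times Gaussian noise, and the `mu` and `log_var` inputs stand in for quantities that a learned layer would compute from $\boldsymbol{c}$. The function names and toy values are hypothetical.

```python
import math
import random


def conditioning_augmentation(mu, log_var, rng):
    """Sample c_tilde = mu + sigma * eps with eps ~ N(0, 1) per dimension.

    mu and log_var are assumed to come from a learned layer applied to the
    sentence feature c; here they are passed in directly.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def conditional_feature(mu, log_var, noise_dim, rng):
    """Build c_hat = [z, CA(c)] by concatenating noise with c_tilde."""
    c_tilde = conditioning_augmentation(mu, log_var, rng)
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + c_tilde  # same order as in the equation: z first


rng = random.Random(0)
mu = [0.2, -0.1, 0.5, 0.0]            # toy mean derived from c
log_var = [-2.0, -2.0, -2.0, -2.0]    # toy log-variance derived from c
c_hat = conditional_feature(mu, log_var, noise_dim=3, rng=rng)
print(len(c_hat))
```

Because a fresh `c_tilde` is drawn on every call, one description yields many slightly different conditioning vectors, which is how CA counters over-fitting on limited text data.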

To enhance the quality of generated images, we employ spatial-channel attention and up-sampling operations in the subsequent two generation stages. The spatial-channel attention network (SCAN)[30] highlights the detailed features of image patches that are relevant to the corresponding descriptions, and FRU block n consists of two residually connected layers and one up-sampling operation. In each of these stages, the word features {\boldsymbol{e}} and the visual feature {\boldsymbol{Q}}_{n-1} from the previous stage are input into the SCAN module to obtain attention features, which are then concatenated with the original visual features {\boldsymbol{Q}}_{n-1} . Subsequently, the concatenated feature is processed by FRU block n , which outputs the updated visual latent vector {\boldsymbol{Q}}_{n} . The vector {\boldsymbol{Q}}_{n} is then fed into the following generator G_n to generate higher-resolution facial expression images. The above process is shown in Fig.6(b) and can be represented as follows:

$$\begin{array}{l} {X}_{n-1} = G_{n-1}({\boldsymbol{Q}}_{n-1}),\\ {X}_n = {G_n}({FRU_n}([{\boldsymbol{Q}}_{n-1},\ SCAN({\boldsymbol{Q}}_{n-1},\ {\boldsymbol{e}})])), \end{array}$$

where X_n is the refined image generated by the n -th generator G_n , SCAN(\cdot) represents the spatial-channel attention operation, and {FRU_n}(\cdot) denotes the operations of FRU block n .
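The attend-then-concatenate pattern in the second equation can be sketched as follows. This is a deliberately simplified stand-in for SCAN: it implements only dot-product attention from image regions to words, omits the channel-wise attention and the FRU block, and assumes the word features have already been projected to the same dimension as the region features. All names and the toy values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


def word_attention(regions, words):
    """For each image region, build a word-context vector weighted by relevance."""
    dim = len(words[0])
    contexts = []
    for q in regions:
        # Relevance of every word to this region (dot product), then normalize.
        weights = softmax([sum(a * b for a, b in zip(q, w)) for w in words])
        contexts.append([sum(wt * w[k] for wt, w in zip(weights, words))
                         for k in range(dim)])
    return contexts


def attend_and_concat(regions, words):
    """Return [Q_{n-1}, attention(Q_{n-1}, e)] for every region."""
    return [q + ctx for q, ctx in zip(regions, word_attention(regions, words))]


# Two toy regions and two toy word features, both of dimension 2.
regions = [[1.0, 0.0], [0.0, 1.0]]
words = [[5.0, 0.0], [0.0, 5.0]]
fused = attend_and_concat(regions, words)
print(fused[0])
```

Each region keeps its own features in the first half of the fused vector and receives, in the second half, a mixture of the word features it responds to most strongly.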

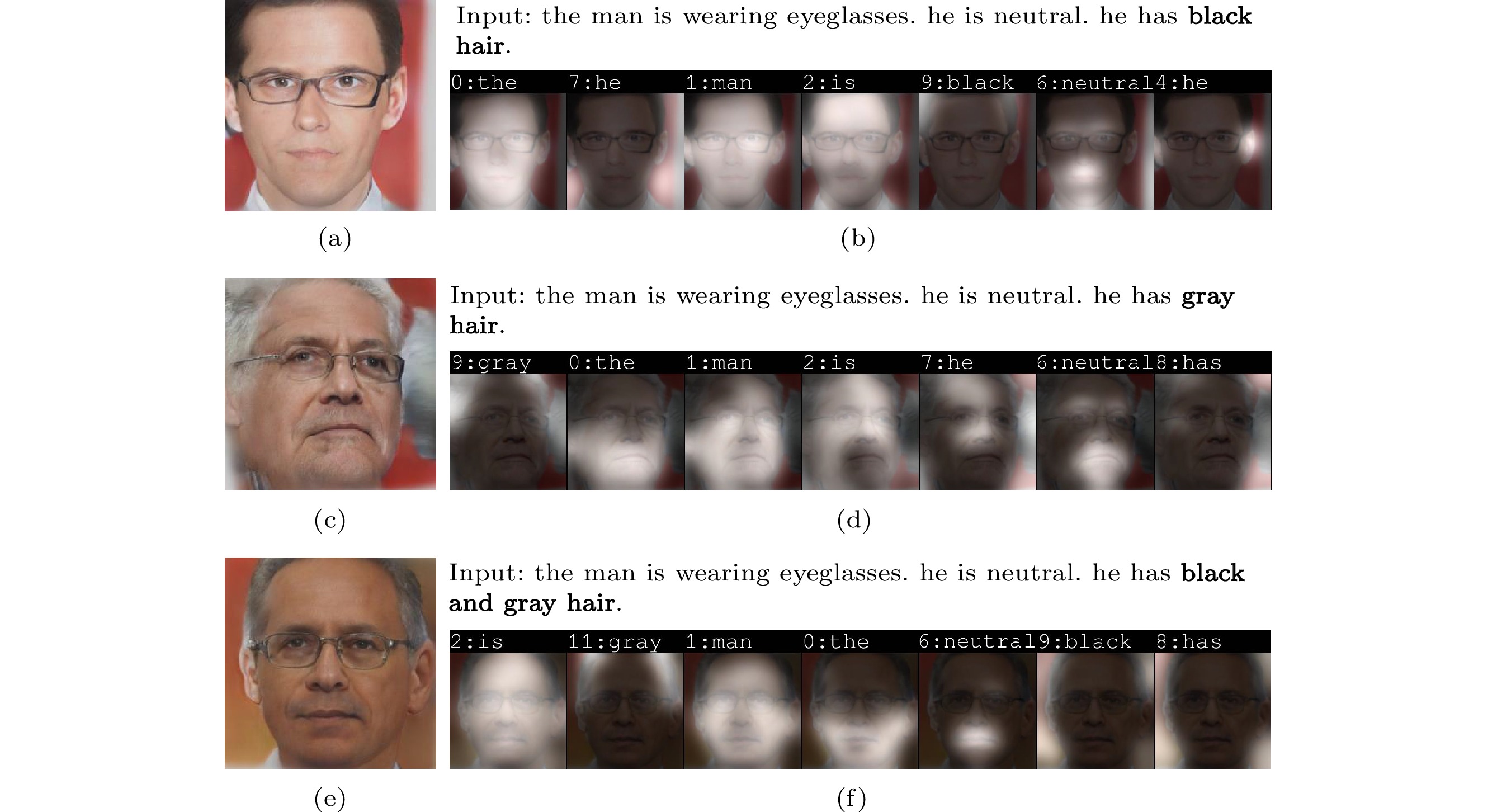

This study introduces priors on text-image correspondence into the text-to-expression task using the CLIP model. By leveraging the linguistic encoder and the visual encoder of the CLIP model, we map images and texts into a shared latent space, enhancing the semantic consistency between generated images and their corresponding descriptions. Moreover, we employ the powerful semantic disentanglement capability learned by the CLIP model to facilitate semantic editing of facial attributes, which enables the proposed FaceCLIP method to generate not only individual basic facial expressions but also composite facial expressions, such as the “happy and sad” expression visualized in Fig.3. In addition, the proposed FaceCLIP method is able to edit other facial attributes semantically, such as hair colors, as illustrated in Fig.7. With the effective semantic interpretation of the CLIP model, the proposed method is also capable of reasoning over facial characteristics associated with the specified attributes to enhance the authenticity of generated images, as shown in Fig.8.

![]() Figure 7. Examples of semantic hair color editing illustrating the interpretations and operations of the proposed FaceCLIP method. (a) Generated image of hair color “black”. (b) Corresponding attention maps for hair color “black”. (c) Generated image of hair color “gray”. (d) Corresponding attention maps for hair color “gray”. (e) Generated image of hair color “black and gray”. (f) Corresponding attention maps for hair color “black and gray”.

To introduce these priors, we incorporate a CLIP-based loss into the discriminator for each stage during the training. This involves extracting the embedded feature from the generated image using the visual encoder of the CLIP model and inputting the corresponding ground-truth textual descriptions into the text encoder of the CLIP model to obtain the text features. Subsequently, we calculate and minimize the cosine distance between these two feature vectors to ensure that the generated image aligns with its textual descriptions. The representation of this process is as follows:

$$\begin{aligned} {LOSS}_{\mathrm{CLIP}} = 1 - \mathrm{cos}({CLIP_{\mathrm{txt}}}(C),\ {CLIP_{\mathrm{vis}}}(X)), \end{aligned}$$

where CLIP_{\mathrm{txt}}(\cdot) represents the text encoder and CLIP_{\mathrm{vis}}(\cdot) denotes the visual encoder of the CLIP model, and \mathrm{cos}(\cdot) is the cosine similarity function. With the assistance of text-image priors in the multi-stage generation process, the proposed method captures and models the intricate semantic mapping between texts and images. This enables the generated images to exhibit a high level of fidelity to the attribute descriptions, showing the effectiveness of the proposed method in bridging the gap between textual descriptions and expression images.
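The CLIP-based loss is simply one minus a cosine similarity, which the following sketch computes on precomputed embeddings. The embedding vectors are hypothetical placeholders for the outputs of the CLIP text and visual encoders; the encoders themselves are not invoked here.

```python
import math


def cosine_similarity(u, v):
    """cos(u, v) = <u, v> / (||u|| * ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def clip_loss(text_embedding, image_embedding):
    """LOSS_CLIP = 1 - cos(CLIP_txt(C), CLIP_vis(X))."""
    return 1.0 - cosine_similarity(text_embedding, image_embedding)


# Hypothetical embeddings of a description and of a generated image.
text_embedding = [0.6, 0.8, 0.0]
image_embedding = [0.5, 0.7, 0.1]
print(clip_loss(text_embedding, image_embedding))
```

The loss is 0 when the two embeddings point in the same direction, 1 when they are orthogonal, and 2 when they are opposite, so minimizing it pulls the generated image toward its description in the shared latent space.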

In the proposed method, the discriminators are optimized independently and in parallel during training. The loss of each discriminator consists of four parts: the adversarial loss for the authenticity of the generated images, the conditional adversarial loss for distinguishing whether the generated image matches the input textual description, the word-level relevant loss for maintaining the correlation between words and image regions, and the CLIP-based loss aimed at generating images that align semantically with the given texts. To keep the generation process stable, we adopt the word-level discriminators[30], which focus on the image regions associated with the described facial attributes, and incorporate the word-level relevant loss. The objective function of each discriminator is formulated as the sum of these four losses:

$$\begin{aligned} {LOSS}_{D_n} = & \overbrace{-{\frac{1}{2}}\mathbb{E}_{Y_n\sim{P_{\rm data}}}[{\rm{log}}(D_n(Y_n))]-{\frac{1}{2}}\mathbb{E}_{X_n\sim{P_{G_n}}}[{\rm{log}}(1-D_n(X_n))]}^{{\,\rm{adversarial\; loss}}\,}+ \\&\overbrace{{\bigg(-\frac{1}{2}}\mathbb{E}_{Y_n\sim{P_{\rm data}}}[{\rm{log}}(D_n(Y_n,\ C))]-{\frac{1}{2}}\mathbb{E}_{X_n\sim{P_{G_n}}}[{\rm{log}}(1-D_n(X_n,\ C))]\bigg)}^{{\,\rm{conditional \; adversarial \; loss}}\,}+ \\& {k_1}\overbrace{\mathbb{E}_{Y_n\sim{P_{\rm data}}}[{\rm{log}}(1-{LOSS}_{\rm corre}(Y_n,\ C))+{\rm{log}}({LOSS}_{\rm corre}(Y_n,\ \widehat{C}))]}^{{\,{{\mathrm{word}}{\text{-}}{\mathrm{level}}\; {\mathrm{relevant}}\; {\mathrm{loss}}}}\,}+\\ & {k_2}\overbrace{\mathbb{E}_{X_n\sim{P_{G_n}}}{LOSS}_{\rm CLIP}(C,\ X_n)}^{{\,{{\mathrm{semantic}}{\text{-}}{\mathrm{similarity}} \;{\mathrm{loss}}}}\,}, \end{aligned}$$

where Y_n is the ground-truth image from the FET dataset that is compared with the generated image X_n in the n -th generation stage, \widehat{C} is a mismatched description that is randomly sampled from the collected dataset and differs from the source textual description C that matches Y_n , and k_i, i\in\{1,2\} are hyper-parameters that weight the corresponding losses.
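To make the structure of the discriminator objective concrete, the sketch below evaluates the four terms on small batches of precomputed scalars. It assumes that the discriminator outputs and the correlation scores are probabilities in the open interval (0, 1); the argument names, the batch values, and the weights `k1` and `k2` are hypothetical and are not the settings used in the paper.

```python
import math


def mean(values):
    return sum(values) / len(values)


def adversarial_pair(real_probs, fake_probs):
    """-1/2 E[log D(real)] - 1/2 E[log(1 - D(fake))]."""
    return (-0.5 * mean([math.log(p) for p in real_probs])
            - 0.5 * mean([math.log(1.0 - p) for p in fake_probs]))


def discriminator_loss(d_real, d_fake, d_real_cond, d_fake_cond,
                       corre_match, corre_mismatch, clip_losses, k1, k2):
    """Sum of the four discriminator terms, following the equation term by term."""
    adversarial = adversarial_pair(d_real, d_fake)
    conditional = adversarial_pair(d_real_cond, d_fake_cond)
    # Word-level relevant term on real images with matched and mismatched text.
    word_level = mean([math.log(1.0 - m) + math.log(mm)
                       for m, mm in zip(corre_match, corre_mismatch)])
    semantic = mean(clip_losses)
    return adversarial + conditional + k1 * word_level + k2 * semantic


loss = discriminator_loss(
    d_real=[0.9, 0.8], d_fake=[0.2, 0.1],
    d_real_cond=[0.85, 0.9], d_fake_cond=[0.3, 0.2],
    corre_match=[0.2, 0.3], corre_mismatch=[0.7, 0.8],
    clip_losses=[0.25, 0.35], k1=1.0, k2=1.0,
)
print(loss)
```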

To train generators, we incorporate generative adversarial loss and perceptual loss[47] to reduce the randomness in generated images and constrain them to be more realistic. Additionally, we integrate the DAMSM model[27], which leverages the text encoder E_{\mathrm{txt}} and the image encoder E_{\mathrm{img}} to compute similarity scores between image patches and words. This scoring mechanism is instrumental in gauging the semantic consistency between images and their corresponding textual descriptions. By combining these components, we optimize the generators to yield images that are not only realistic but also semantically aligned. The generator loss is formulated as below:

$$\begin{aligned} {LOSS}_{G} = & \sum_{n=1}^{N} (\overbrace{-{\frac{1}{2}}\mathbb{E}_{X_n\sim{P_{G_n}}}[{\rm{log}}(D_n(X_n))]-{\frac{1}{2}}\mathbb{E}_{X_n\sim{P_{G_n}}}[{\rm{log}}(1-D_n(X_n,\ C))]}^{{\,\rm{adversarial \;loss}}\,}+ \\&\quad \quad \overbrace{{(-\frac{1}{2}}\mathbb{E}_{Y_n\sim{P_{\rm data}}}[{\rm{log}}(D_n(Y_n,\ C))]-{\frac{1}{2}}\mathbb{E}_{X_n\sim{P_{G_n}}}[{\rm{log}}(1-D_n(X_n,\ C))])}^{{\,\rm{conditional\; adversarial\;loss}}\,}+ \\ &\quad \quad {k_3}\overbrace{\mathbb{E}_{X_n\sim{P_{G_n}}}({\frac{1}{{H_m}{W_m}{C_m}}}\left \| \sigma_m({X}_n)-\sigma_m(Y_n) \right \|_2^2) }^{{\,\rm{perceptual \;loss}}\,}+ \\ &\quad \quad {k_4}\overbrace{{\mathbb{E}_{X_n\sim{P_{G_n}}}}({\rm{log}}(1-LOSS_{\rm corre}(X_n,\ C))) }^{{\,{{\mathrm{word}}{\text{-}}{\mathrm{level}}\; {\mathrm{relevant}}\; {\mathrm{loss}}}}\,} )+ {k_5}\mathbb{E}_{X_n\sim{P_{G_n}}}{LOSS}_{{\mathrm{DAMSM}}}, \end{aligned}$$

where N is the total number of generators, \sigma_m in the perceptual loss refers to the m -th layer of the VGG-16 network[48], and H_m, W_m , and C_m represent the height, the width, and the channel dimensions of the feature map in the m -th layer, respectively.
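The perceptual term is a squared L2 distance between two feature maps, normalized by the number of feature entries. A minimal sketch follows, assuming the feature maps \sigma_m(X_n) and \sigma_m(Y_n) have already been extracted and are stored as nested lists indexed as [height][width][channel]; the toy maps below are hypothetical and no VGG-16 network is run.

```python
def perceptual_loss(feat_generated, feat_real):
    """(1 / (H * W * C)) * || sigma_m(X) - sigma_m(Y) ||_2^2 for one image pair."""
    height = len(feat_generated)
    width = len(feat_generated[0])
    channels = len(feat_generated[0][0])
    squared_error = sum(
        (feat_generated[i][j][k] - feat_real[i][j][k]) ** 2
        for i in range(height)
        for j in range(width)
        for k in range(channels)
    )
    return squared_error / (height * width * channels)


# Toy 2 x 2 feature maps with a single channel.
feat_generated = [[[1.0], [1.0]], [[1.0], [1.0]]]
feat_real = [[[0.0], [0.0]], [[0.0], [0.0]]]
print(perceptual_loss(feat_generated, feat_real))
```

Normalizing by H_m W_m C_m keeps the magnitude of the term comparable across layers with different feature-map sizes, so a single weight k_3 can be used.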

4. Experiments

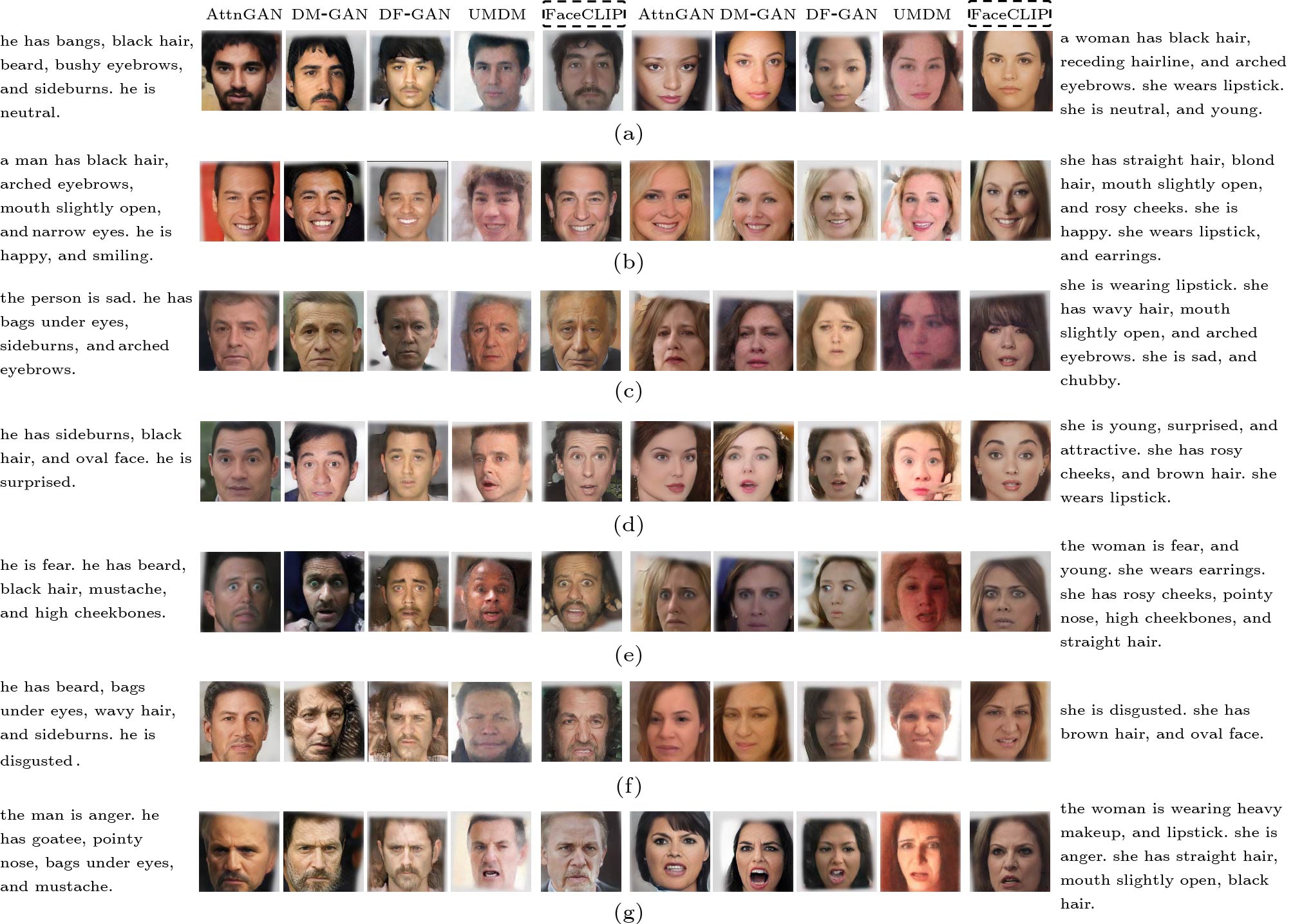

We perform extensive experiments on the collected FET dataset to evaluate the effectiveness of the proposed FaceCLIP method. The proposed method is compared with state-of-the-art text-to-image approaches, including AttnGAN[27], DM-GAN[29], DF-GAN[49], and the uni-modal diffusion model (UMDM)[50], using multiple evaluation metrics. For a fair comparison, all the methods are re-trained on the collected FET dataset and generate images at a resolution of 256\times256\times3 . The results show that the proposed method is effective in generating realistic facial expressions based on descriptions. By introducing the CLIP priors, the model incorporates reasoning and is capable of semantic operations over facial attributes.

To assess the authenticity and diversity of the generated images, we employ two metrics: the Fréchet inception distance (FID)[51] and the learned perceptual image patch similarity (LPIPS)[52]. FID measures the distance between the feature vectors of the generated images and those of the ground-truth images, both obtained by the Inception-v3 model. A lower FID value denotes that the generated images are closer to real images, indicating higher image generation quality. LPIPS simulates how the human visual system perceives images: it transforms images into feature representations composed of perceptual factors and evaluates the similarity between the generated images and the corresponding real images by calculating the perceptual differences between them. Similar to FID, the lower the LPIPS value, the higher the quality and diversity of the generated images.
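FID fits a Gaussian to each set of features and reports the Fréchet distance \|\mu_r-\mu_g\|^2 + \mathrm{Tr}(\Sigma_r+\Sigma_g-2(\Sigma_r\Sigma_g)^{1/2}) . The sketch below evaluates this expression under a simplifying assumption that is not part of the standard metric: both covariance matrices are treated as diagonal, so the trace term reduces to a sum of squared differences of per-dimension standard deviations. The toy feature vectors are hypothetical; a real evaluation would use Inception-v3 features and full covariance matrices.

```python
import math


def mean_and_variance(features):
    """Per-dimension mean and unbiased variance of a list of feature vectors."""
    count = len(features)
    dim = len(features[0])
    mu = [sum(f[k] for f in features) / count for k in range(dim)]
    var = [sum((f[k] - mu[k]) ** 2 for f in features) / (count - 1)
           for k in range(dim)]
    return mu, var


def frechet_distance_diagonal(real_features, generated_features):
    """Frechet distance between two Gaussians with diagonal covariances."""
    mu_r, var_r = mean_and_variance(real_features)
    mu_g, var_g = mean_and_variance(generated_features)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    # With diagonal covariances, Tr(S_r + S_g - 2 (S_r S_g)^(1/2))
    # equals the sum over dimensions of (std_r - std_g)^2.
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2
                   for a, b in zip(var_r, var_g))
    return mean_term + cov_term


real_features = [[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]]
generated_features = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
print(frechet_distance_diagonal(real_features, generated_features))
```

In this toy case the generated features are the real ones shifted by one unit along the first dimension, so only the mean term contributes to the distance.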

However, realism is not the only evaluation criterion for text-to-image generation. It is equally crucial to ensure that the generated images are semantically consistent with the input descriptions. To evaluate this, we use the R-precision[27] to measure the semantic similarity between the generated images and a set of texts. In our experiments, the text set consists of one ground-truth description and 99 randomly sampled descriptions. A generated image is considered to match a textual description if the ground-truth ranks within the top r candidates in the set. In our experiments, r is set to 1.
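The ranking procedure behind R-precision can be sketched as follows. The sketch assumes that image and text embeddings are compared with cosine similarity and that the ground-truth description is stored at index 0 of each candidate set; the embeddings are hypothetical, and the toy candidate sets hold 3 texts instead of the 100 used in the experiments.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def r_precision(image_embeddings, candidate_sets, r=1):
    """Fraction of images whose ground-truth text (index 0) ranks in the top r."""
    hits = 0
    for image, candidates in zip(image_embeddings, candidate_sets):
        similarities = [cosine_similarity(image, text) for text in candidates]
        ranking = sorted(range(len(candidates)),
                         key=lambda idx: similarities[idx], reverse=True)
        if 0 in ranking[:r]:
            hits += 1
    return hits / len(image_embeddings)


image_embeddings = [[1.0, 0.0], [1.0, 0.0]]
candidate_sets = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],  # ground truth is the best match
    [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]],  # ground truth is the second-best match
]
print(r_precision(image_embeddings, candidate_sets, r=1))
print(r_precision(image_embeddings, candidate_sets, r=2))
```

With r set to 1, as in the experiments, an image counts as correct only when its own description is the single most similar text in the set.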

4.1 Quantitative Comparison with the SOTA Methods

For quantitative comparison, each approach generates images based on 30000 randomly selected unseen texts from the test set of the FET dataset. The evaluation results based on the above-mentioned criteria are listed in Table 4. From the table, we observe that the FaceCLIP method achieves the best performance in terms of the FID and R-precision criteria, indicating that the proposed method generates realistic facial expression images that best align with the given descriptions. Regarding the LPIPS criterion, the FaceCLIP method outperforms the AttnGAN, DM-GAN, and UMDM models, demonstrating its ability to generate relatively more diverse facial expression images.

Table 4. Quantitative Comparison Between the State-of-the-Art Approaches and Proposed FaceCLIP on the FET Test Set

| Epoch | Model | FID ↓ | LPIPS ↓ | R-Precision (%) ↑ |
|---|---|---|---|---|
| 60 | AttnGAN[27] | 40.27 | 0.547 | 48.46±0.89 |
| 60 | DM-GAN[29] | 42.39 | 0.551 | 47.62±0.52 |
| 60 | DF-GAN[49] | 49.20 | 0.527 | 21.48±0.63 |
| 60 | FaceCLIP | 44.23 | 0.541 | 65.17±0.68 |
| 80 | AttnGAN | 37.28 | 0.550 | 50.03±0.77 |
| 80 | DM-GAN | 40.50 | 0.542 | 47.91±0.68 |
| 80 | DF-GAN | 51.37 | 0.494 | 25.75±0.61 |
| 80 | FaceCLIP | 36.89 | 0.544 | 66.52±0.94 |
| 100 | AttnGAN | 40.72 | 0.546 | 51.14±1.04 |
| 100 | DM-GAN | 37.48 | 0.543 | 48.02±0.87 |
| 100 | DF-GAN | 41.22 | 0.483 | 21.18±0.47 |
| 100 | FaceCLIP | 37.30 | 0.536 | 69.74±0.93 |
| 120 | AttnGAN | 35.61 | 0.542 | 53.95±0.73 |
| 120 | DM-GAN | 35.79 | 0.537 | 50.31±1.12 |
| 120 | DF-GAN | 41.89 | 0.468 | 27.34±0.75 |
| 120 | UMDM[50] | 59.38 | 0.545 | – |
| 120 | FaceCLIP | 35.52 | 0.534 | 70.15±0.53 |

Note: Marker “↑” indicates that a higher value corresponds to better performance, and marker “↓” indicates the opposite. Bold fonts indicate the best results.

4.2 Qualitative Comparison with the SOTA Methods

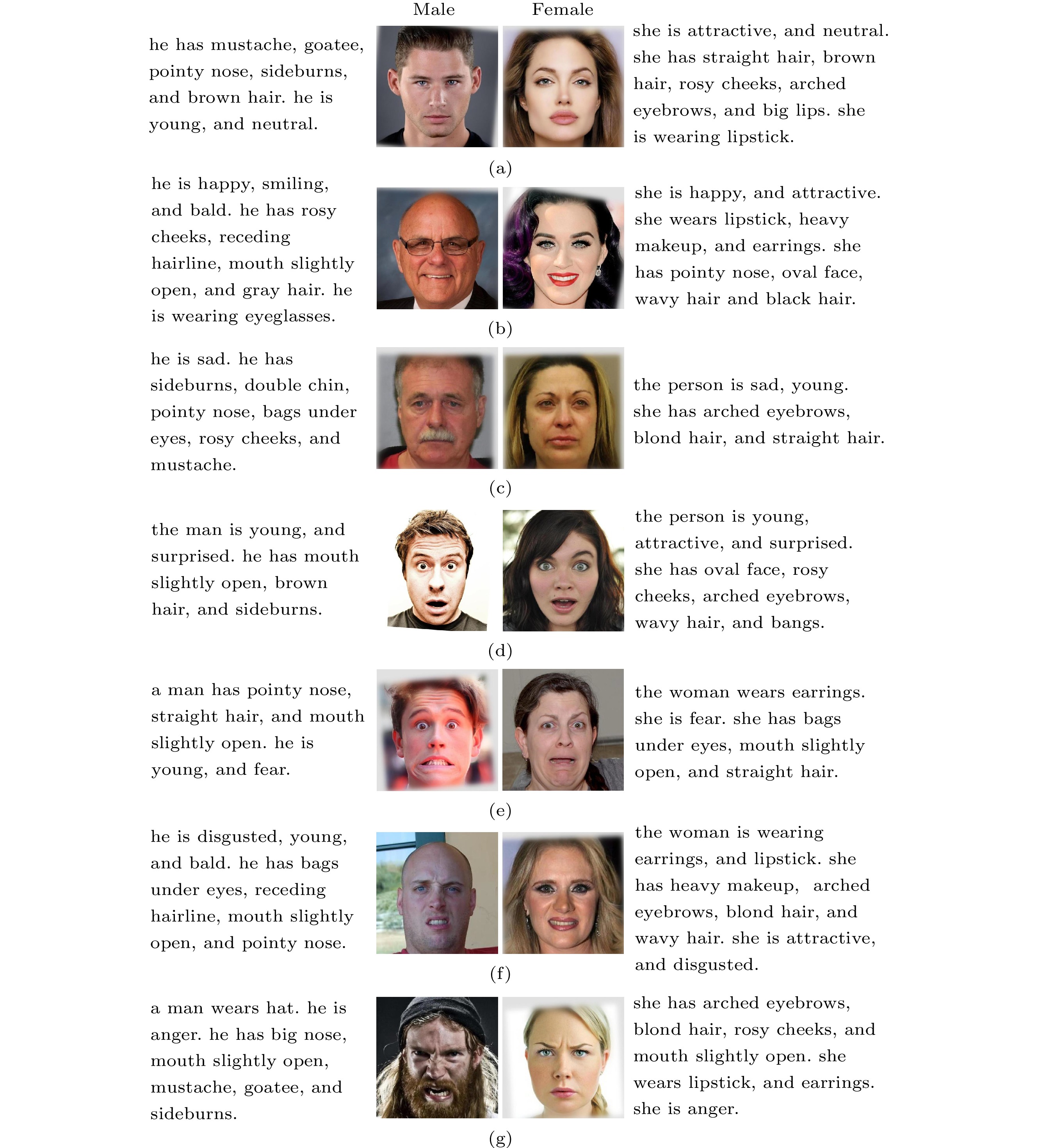

We visualize images generated by each approach from various facial attributes in Fig.9. As shown in the figure, the three compared approaches generate precise facial expressions for the “happy” and “neutral” categories but ambiguous facial expressions for the other categories, whereas the FaceCLIP method produces more realistic expressions with natural muscle movements and better facial structures. Moreover, the proposed method accurately captures facial attributes, such as eyebrows and cheeks, improving the overall quality of the generated images.

![]() Figure 9. Qualitative comparison between the proposed FaceCLIP and the state-of-the-art methods on the FET dataset. For the columns, each approach generates images of seven facial expressions with males on the left side and females on the right, and each row shows one facial expression. (a) Neutral. (b) Happy. (c) Sad. (d) Surprise. (e) Fear. (f) Disgust. (g) Anger.

In contrast, the compared methods exhibit certain shortcomings and fail to synthesize images of the same quality. For example, the AttnGAN and DM-GAN models tend to generate images with stripe noise, especially for the “neutral” and “happy” expressions, regardless of the gender. Moreover, the DM-GAN and DF-GAN models produce images with deformations and blurriness, which are especially noticeable in the “fear” and “disgust” expressions. For instance, the image generated by the DM-GAN model for the “fear” expression of a man exhibits facial deformation, as shown in Fig.9.

For the “surprise” expression, the AttnGAN and DM-GAN models are able to express the emotion, although the results are not as natural as those of the proposed method. However, the facial expressions generated by the DF-GAN model are not consistent with the descriptions, indicating its limitation in accurately representing the intended expression.

Although UMDM demonstrates a significantly accelerated training process compared with the GAN-based models, the generated images of the same resolution display conspicuous noise, leading to obscure details, such as facial textures. Additionally, this method yields relatively unnatural results for eyes and mouth, and is unsatisfactory in generating hair for female characters. Most importantly, UMDM encounters challenges in accurately generating certain negative expressions specified by textual descriptions, such as sadness, fear, and disgust.

4.3 Semantic Interpretation Evaluation

To demonstrate the semantic interpretation ability of the proposed method, we show examples of operations over several attributes along with their descriptions and attention maps in Fig.3, Fig.7, and Fig.8. Examples of compound expressions are visualized in these figures, such as the “happy and surprised” expression in Fig.3(a) and the “happy and sad” expression in Fig.3(c). The attention maps demonstrate that the proposed FaceCLIP method is able to focus on the corresponding regions that affect each expression.

The visualized attention maps of the proposed FaceCLIP method also demonstrate its interpretation capabilities. The attention regions of each facial expression coincide with their action units (AUs) in the facial action coding system (FACS)[53]. For example, the attention map of the “happy” expression focuses on the motion of smiling lips, corresponding to the “lip corner puller” AU. For the “surprise” expression, it concentrates on the motion of raised eyebrows, corresponding to the “inner brow raiser” and “outer brow raiser” AUs. For the “sad” expression, it emphasizes the motion of lowered eyebrows, corresponding to the “brow lowerer” AU. This indicates that the proposed method effectively extracts emotional cues from the textual descriptions and transforms them into image regions that comply with the facial movements. Furthermore, the FaceCLIP method exhibits the ability to disentangle the facial action units based on its semantic interpretation of basic expressions and performs semantic operations to express compound emotions.

In addition to expressions, the proposed method is also able to operate on other facial attributes, e.g., hair colors. The attribute of “black and gray hair” forms a new hair color between the “black hair” and the “gray hair” attributes, as illustrated in Fig.7. These results indicate that the FaceCLIP method is capable not only of operating across different facial regions (e.g., mouth and eyes) but also of performing semantic operations within the same region (e.g., hair). The corresponding attention maps also confirm this ability.

Moreover, the proposed method demonstrates semantic comprehension and inference of attributes. For instance, the “bags under eyes” attribute typically indicates an older subject, and when ages are not specified, the model generates facial images portraying characteristics associated with aging, such as wrinkles, gray hair, or white beards. Examples generated from texts with “bags under eyes” descriptions, visualized in Fig.9, support this observation. Similarly, the “attractive” attribute usually implies younger subjects adorned with lipstick or jewelry. An example is illustrated in Fig.9, in which the “attractive” woman generated by the proposed method wears earrings. For more examples of attribute reasoning, please refer to Fig.8. This figure also reveals distinctive gender-based representations in the semantic inference of attributes. Specifically, individuals described with the attribute “bags under eyes” are depicted as older with more wrinkles for both males and females, along with gray beards or gray hair for males. On the other hand, “attractive” individuals tend to be younger for both genders and commonly display makeup, with the potential inclusion of earrings for females.

These observations validate that by introducing the CLIP model's semantic priors into the generation process, the proposed FaceCLIP method is capable of achieving robust semantic comprehension of multiple attributes, implementing semantic operations on corresponding facial regions, and incorporating reasoning during interpretation.

5. Conclusions

In this study, we introduced FaceCLIP, a novel method for generating realistic and vivid facial expressions from textual descriptions using CLIP-based GANs. Experimental results demonstrated that FaceCLIP outperformed the state-of-the-art text-to-image generation methods in terms of image quality and semantic consistency between texts and generated images. The proposed method exhibits a strong ability to edit and disentangle multiple facial attributes, allowing for semantic operations over multiple attributes. In addition to generating basic facial expressions, FaceCLIP enables semantic editing to generate diverse and compound expressions. The incorporation of semantic priors also introduces reasoning capabilities into FaceCLIP.

Moreover, we constructed a novel dataset called FET, which describes facial expressions using both textual and visual information, providing a valuable resource for future research in this field. Furthermore, the FaceCLIP method's automatic generation of facial expression images from text cues facilitates research in facial expression analysis and opens up possibilities for applications in areas such as virtual reality, gaming, and human-computer interaction. By generating expressive and emotionally intelligent avatars, this method can enhance user experiences and enable more immersive and interactive communications between humans and computers.

Overall, the study demonstrated the potential of combining natural language and image processing to generate high-quality and semantically consistent facial expressions based on input descriptions, offering a new approach to address the challenge of facial expression generation. The dataset and models are available at https://github.com/ourpubliccodes/FaceCLIP.

-

Figure 1. Generated images using the existing methods. (a) Low-quality facial images generated from text using T2F (footnote 1). (b) Low-quality facial images generated from text using Text2FaceGAN[9]. (c) Problematic facial images generated by StyleT2F[10]. (d) Six facial expressions derived from editing the source image using Emoreact[11]. (e) Seven facial expressions generated by editing the source image using GANimation[12].

Figure 3. Examples of facial images depicting complex combinations of attributes. (a) Generated image of the compound expression “happy and surprised”. (b) Corresponding attention maps for the compound expression “happy and surprised”. (c) Generated image of the compound expression “happy and sad”. (d) Corresponding attention maps for the compound expression “happy and sad”.

Figure 4. Overall architecture of the proposed FaceCLIP method. (a) Stage of feature extraction for textual descriptions. (b) The n-th ( 1 \leqslant n \leqslant 3 ) stage of expression generation. {\boldsymbol{c}} and {\boldsymbol{e}} represent the global sentence feature and the word features extracted from the given description C of facial attributes using the pre-trained bi-LSTM based text encoder, respectively. The text-to-expression module transforms the input text features \{{\boldsymbol{c}},\;{\boldsymbol{e}}\} and the latent feature {\boldsymbol{Q}}_{n-1} from the previous stage into new latent feature {\boldsymbol{Q}}_n through the attention mechanism and convolution transitions, and then the feature {\boldsymbol{Q}}_n is fed into generator G_n to produce output image X_n . Y_n is the ground truth image, and D_n is the n -th discriminator.


Table 1 Statistics of Widely Used Facial Expression Datasets

| Dataset | Number of Images | Number of Basic Expressions | Resolution | Color | Published Year |
|---|---|---|---|---|---|
| JAFFE[3] | 213 | 7 | 256 × 256 | ✘ | 1998 |
| KDEF[4] | 4900 | 7 | 562 × 762 | ✔ | 1998 |
| FER_2013[5] | 35887 | 7 | 48 × 48 | ✘ | 2013 |
| RAF-DB[6] | 15339 | 7 | 33 × 49* → 2147 × 3221† | ✔ | 2017 |
| AffectNet[7] | 318969 | 8 | 130 × 130* → 4724 × 4724† | ✔ | 2017 |

Note: Marker * means the minimum resolutions of the facial images. Marker † means the maximum resolutions of the facial images.

Table 2 Attributes of Collected Facial Expressions and Text (FET) Dataset

| Component | Attribute |
|---|---|
| subject | Gender (male & female) |
| has_attrs | Pale skin, oval face, arched eyebrows, bushy eyebrows, narrow eyes, bags under eyes, high cheekbones, rosy cheeks, pointy nose, big nose, mouth slightly open, big lips, beard, mustache, goatee, double chin, receding line, bangs, sideburns, straight hair, wavy hair, blond hair, black hair, brown hair, gray hair |
| wear_attrs | Necklace, necktie, eyeglasses, heavy makeup, earrings, hat, lipstick |
| is_attrs* | Facial attribute: attractive, young, chubby, bald, smiling; Facial expression: neutral, happy, sad, surprised, fear, disgusted, anger |

Note: Marker * denotes that attributes corresponding to the “is_attrs” encompass two categories: pertaining to facial attributes and facial expressions, respectively.

Table 3 Detailed Composition of Image Quantities Across Expressions in the Collected FET Dataset

| Expression | Male | Female | Training Set | Testing Set |
|---|---|---|---|---|
| Neutral | 3030 | 2809 | 4600 | 1239 |
| Happy | 1981 | 3854 | 4600 | 1235 |
| Sad | 2913 | 2739 | 4600 | 1052 |
| Surprise | 2728 | 2614 | 4600 | 742 |
| Fear | 1248 | 1337 | 2285 + 2285† = 4570 | 300 |
| Disgust | 1425 | 1295 | 2420 + 2410† = 4830 | 300 |
| Anger | 4175 | 1677 | 4600 | 1252 |
| Total | 17500 | 16325 | 32400 | 6120 |

Note: The figures annotated with a dagger symbol (†) are images derived by horizontally flipping to compensate for an imbalance in the training dataset. The “Male” and “Female” columns specify the quantities of male and female images, respectively. The “Training Set” and “Testing Set” columns specify the total numbers of images in the training set and the testing set, respectively.

-

[1] Caroppo A, Leone A, Siciliano P. Comparison between deep learning models and traditional machine learning approaches for facial expression recognition in ageing adults. Journal of Computer Science and Technology, 2020, 35(5): 1127–1146. DOI: 10.1007/s11390-020-9665-4.

[2] Yao N M, Chen H, Guo Q P, Wang H A. Non-frontal facial expression recognition using a depth-patch based deep neural network. Journal of Computer Science and Technology, 2017, 32(6): 1172–1185. DOI: 10.1007/s11390-017-1792-1.

[3] Lyons M, Akamatsu S, Kamachi M, Gyoba J. Coding facial expressions with Gabor wavelets. In Proc. the 3rd IEEE International Conference on Automatic Face and Gesture Recognition, Apr. 1998, pp.200–205. DOI: 10.1109/AFGR.1998.670949.

[4] Lundqvist D, Flykt A, Öhman A. Karolinska directed emotional faces. Cognition and Emotion, 1998. DOI: 10.1037/t27732-000.

[5] Goodfellow I J, Erhan D, Carrier P L, Courville A, Mirza M, Hamner B, Cukierski W, Tang Y, Thaler D, Lee D H, Zhou Y, Ramaiah C, Feng F, Li R, Wang X, Athanasakis D, Shawe-Taylor J, Milakov M, Park J, Ionescu R, Popescu M, Grozea C, Bergstra J, Xie J, Romaszko L, Xu B, Chuang Z, Bengio Y. Challenges in representation learning: A report on three machine learning contests. In Proc. the 20th International Conference on Neural Information Processing (ICONIP), Nov. 2013, pp.117–124. DOI: 10.1007/978-3-642-42051-1_16.

[6] Li S, Deng W, Du J P. Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. In Proc. the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Jul. 2017, pp.2584–2593. DOI: 10.1109/CVPR.2017.277.

[7] Mollahosseini A, Hasani B, Mahoor M H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affective Computing, 2017, 10(1): 18–31. DOI: 10.1109/TAFFC.2017.2740923.

[8] Wang Y, Dantcheva A, Bremond F. From attribute-labels to faces: Face generation using a conditional generative adversarial network. In Proc. the Computer Vision — ECCV 2018 Workshops, Sept. 2018, pp.692–698. DOI: 10.1007/978-3-030-11018-5_59.

[9] Nasir O R, Jha S K, Grover M S, Yu Y, Kumar A, Shah R R. Text2FaceGAN: Face generation from fine grained textual descriptions. In Proc. the 5th IEEE International Conference on Multimedia Big Data, Sept. 2019, pp.58–67. DOI: 10.1109/BigMM.2019.00-42.

[10] Sabae M S, Dardir M A, Eskarous R T, Ebbed M R. StyleT2F: Generating human faces from textual description using StyleGAN2. arXiv: 2204.07924, 2022. https://arxiv.org/abs/2204.07924, Feb. 2025.

[11] Graziani L, Melacci S, Gori M. Generating facial expressions associated with text. In Proc. the 29th International Conference on Artificial Neural Networks, Sept. 2020, pp.621–632. DOI: 10.1007/978-3-030-61609-0_49.

[12] Pumarola A, Agudo A, Martinez A M, Sanfeliu A, Moreno-Noguer F. GANimation: Anatomically-aware facial animation from a single image. In Proc. the 15th European Conference, Sept. 2018, pp.835–851. DOI: 10.1007/978-3-030-01249-6_50.

[13] Stap D, Bleeker M, Ibrahimi S, Hoeve M T. Conditional image generation and manipulation for user-specified content. arXiv: 2005.04909, 2020. https://arxiv.org/abs/2005.04909, Feb. 2025.

[14] Xia W, Yang Y, Xue J H, Wu B. TediGAN: Text-guided diverse face image generation and manipulation. In Proc. the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2021, pp.2256–2265. DOI: 10.1109/CVPR46437.2021.00229.

[15] Sun J, Deng Q, Li Q, Sun M, Ren M, Sun Z. AnyFace: Free-style text-to-face synthesis and manipulation. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.18666–18675. DOI: 10.1109/CVPR52688.2022.01813.

[16] Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J, Krueger G, Sutskever I. Learning transferable visual models from natural language supervision. In Proc. the 38th International Conference on Machine Learning, Jul. 2021, pp.8748–8763.

[17] Patashnik O, Wu Z, Shechtman E, Cohen-Or D, Lischinski D. StyleCLIP: Text-driven manipulation of StyleGAN imagery. In Proc. the 2021 IEEE/CVF International Conference on Computer Vision, Oct. 2021, pp.2065–2074. DOI: 10.1109/ICCV48922.2021.00209.

[18] Sanghi A, Chu H, Lambourne J G, Wang Y, Cheng C Y, Fumero M, Malekshan K R. CLIP-Forge: Towards zero-shot text-to-shape generation. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.18582–18592. DOI: 10.1109/CVPR52688.2022.01805.

[19] Liu Y, Chen H, Huang L, Chen D, Wang B, Pan P, Wang L. Animating images to transfer CLIP for video-text retrieval. In Proc. the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Jul. 2022, pp.1906–1911. DOI: 10.1145/3477495.3531776.

[20] Luo H, Ji L, Zhong M, Chen Y, Lei W, Duan N, Li T. CLIP4Clip: An empirical study of CLIP for end to end video clip retrieval and captioning. Neurocomputing, 2022, 508: 293–304. DOI: 10.1016/j.neucom.2022.07.028.

[21] Zhou Y, Zhang R, Chen C, Li C, Tensmeyer C, Yu T, Gu J, Xu J, Sun T. Towards language-free training for text-to-image generation. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.17886–17896. DOI: 10.1109/CVPR52688.2022.01738.

[22] Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. In Proc. the 28th International Conference on Neural Information Processing Systems, Dec. 2014, pp.2672–2680. DOI: 10.5555/2969033.2969125.

[23] Mirza M, Osindero S. Conditional generative adversarial nets. arXiv: 1411.1784, 2014. https://arxiv.org/abs/1411.1784, Feb. 2025.

[24] Mansimov E, Parisotto E, Ba L J, Salakhutdinov R. Generating images from captions with attention. In Proc. the 4th International Conference on Learning Representations, May 2016.

[25] Reed S, Akata Z, Yan X, Logeswaran L, Schiele B, Lee H. Generative adversarial text to image synthesis. In Proc. the 33rd International Conference on Machine Learning, Jun. 2016, pp.1060–1069. DOI: 10.5555/3045390.3045503.

[26] Zhang H, Xu T, Li H, Zhang S, Wang X, Huang X, Metaxas D. StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks. In Proc. the 2017 IEEE International Conference on Computer Vision, Oct. 2017, pp.5908–5916. DOI: 10.1109/ICCV.2017.629.

[27] Xu T, Zhang P, Huang Q, Zhang H, Gan Z, Huang X, He X. AttnGAN: Fine-grained text to image generation with attentional generative adversarial networks. In Proc. the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2018, pp.1316–1324. DOI: 10.1109/CVPR.2018.00143.

[28] Huang W, Xu Y, Oppermann I. Realistic image generation using region-phrase attention. In Proc. the 11th Asian Conference on Machine Learning, Nov. 2019, pp.284–299.

[29] Zhu M, Pan P, Chen W, Yang Y. DM-GAN: Dynamic memory generative adversarial networks for text-to-image synthesis. In Proc. the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2019, pp.5795–5803. DOI: 10.1109/CVPR.2019.00595.

[30] Li B, Qi X, Lukasiewicz T, Torr P H S. Controllable text-to-image generation. In Proc. the 33rd International Conference on Neural Information Processing Systems, Dec. 2019, pp.2065–2075. DOI: 10.5555/3454287.3454472.

[31] Fang F, Luo F, Zhang H P, Zhou H J, Chow A L H, Xiao C X. A comprehensive pipeline for complex text-to-image synthesis. Journal of Computer Science and Technology, 2020, 35(3): 522–537. DOI: 10.1007/s11390-020-0305-9.

[32] Nilsback M E, Zisserman A. Automated flower classification over a large number of classes. In Proc. the 6th Indian Conference on Computer Vision, Graphics & Image Processing, Dec. 2008, pp.722–729. DOI: 10.1109/ICVGIP.2008.47.

[33] Welinder P, Branson S, Mita T, Wah C, Schroff F, Belongie S, Perona P. The Caltech-UCSD birds-200-2011 dataset. Technical Report, CNS-TR-2010-001, California Institute of Technology, 2011. https://authors.library.caltech.edu/records/cvm3y-5hh21, Feb. 2025.

[34] Lin T Y, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick C L. Microsoft COCO: Common objects in context. In Proc. the 13th European Conference on Computer Vision, Sept. 2014, pp.740–755. DOI: 10.1007/978-3-319-10602-1_48.

[35] Li Y, Chen X, Wu F, Zha Z J. LinesToFacePhoto: Face photo generation from lines with conditional self-attention generative adversarial networks. In Proc. the 27th ACM International Conference on Multimedia, Oct. 2019, pp.2323–2331. DOI: 10.1145/3343031.3350854.

[36] Chen S Y, Su W, Gao L, Xia S, Fu H. DeepFaceDrawing: Deep generation of face images from sketches. ACM Trans. Graphics, 2020, 39(4): Article No. 72. DOI: 10.1145/3386569.3392386.

[37] Xia W, Yang Y, Xue J H. Cali-sketch: Stroke calibration and completion for high-quality face image generation from human-like sketches. Neurocomputing, 2021, 460: 256–265. DOI: 10.1016/j.neucom.2021.07.029.

[38] Xie B, Jung C. Deep face generation from a rough sketch using multi-level generative adversarial networks. In Proc. the 26th International Conference on Pattern Recognition, Aug. 2022, pp.1200–1207. DOI: 10.1109/ICPR56361.2022.9956126.

[39] Ghosh A, Zhang R, Dokania P, Wang O, Efros A, Torr P, Shechtman E. Interactive sketch & fill: Multiclass sketch-to-image translation. In Proc. the 2019 IEEE/CVF International Conference on Computer Vision, Oct. 27–Nov. 2, 2019, pp.1171–1180. DOI: 10.1109/ICCV.2019.00126.

[40] Wang T C, Liu M Y, Zhu J Y, Tao A, Kautz J, Catanzaro B. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proc. the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2018, pp.8798–8807. DOI: 10.1109/CVPR.2018.00917.

[41] Karras T, Laine S, Aila T. A style-based generator architecture for generative adversarial networks. In Proc. the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2019, pp.4396–4405. DOI: 10.1109/CVPR.2019.00453.

[42] Li H Y, Dong W M, Hu B G. Facial image attributes transformation via conditional recycle generative adversarial networks. Journal of Computer Science and Technology, 2018, 33(3): 511–521. DOI: 10.1007/s11390-018-1835-2.

[43] Wei T, Chen D, Zhou W, Liao J, Tan Z, Yuan L, Zhang W, Yu N. HairCLIP: Design your hair by text and reference image. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.18051–18060. DOI: 10.1109/CVPR52688.2022.01754.

[44] Tevet G, Gordon B, Hertz A, Bermano A H, Cohen-Or D. MotionCLIP: Exposing human motion generation to CLIP space. In Proc. the 17th European Conference on Computer Vision, Oct. 2022, pp.358–374. DOI: 10.1007/978-3-031-20047-2_21.

[45] Guzhov A, Raue F, Hees J, Dengel A. AudioCLIP: Extending CLIP to image, text and audio. In Proc. the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing, May 2022, pp.976–980. DOI: 10.1109/ICASSP43922.2022.9747631.

[46] Karras T, Aila T, Laine S, Lehtinen J. Progressive growing of GANs for improved quality, stability, and variation. In Proc. the 6th International Conference on Learning Representations, Apr. 30–May 3, 2018.

[47] Johnson J, Alahi A, Fei-Fei L. Perceptual losses for real-time style transfer and super-resolution. In Proc. the 14th European Conference on Computer Vision, Oct. 2016, pp.694–711. DOI: 10.1007/978-3-319-46475-6_43.

[48] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In Proc. the 3rd International Conference on Learning Representations, May 2015.

[49] Tao M, Tang H, Wu F, Jing X, Bao B K, Xu C. DF-GAN: A simple and effective baseline for text-to-image synthesis. In Proc. the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2022, pp.16494–16504. DOI: 10.1109/CVPR52688.2022.01602.

[50] Huang Z, Chan K C K, Jiang Y, Liu Z. Collaborative diffusion for multi-modal face generation and editing. In Proc. the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2023, pp.6080–6090. DOI: 10.1109/CVPR52729.2023.00589.

[51] Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Proc. the 31st International Conference on Neural Information Processing Systems, Dec. 2017, pp.6629–6640. DOI: 10.5555/3295222.3295408.

[52] Zhang R, Isola P, Efros A A, Shechtman E, Wang O. The unreasonable effectiveness of deep features as a perceptual metric. In Proc. the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun. 2018, pp.586–595. DOI: 10.1109/CVPR.2018.00068.

[53] Ekman P, Friesen W V. Facial action coding system. Environmental Psychology & Nonverbal Behavior, 1978. DOI: 10.1037/t27734-000.
