Real-Time Underwater Image Enhancement Based on Adaptive Full-Scale Retinex


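Abstract: Background

In recent years, underwater imaging systems have been widely used in marine energy exploration, underwater environment monitoring, military applications, and other fields. However, water absorbs light selectively and the transmission medium scatters it. As a result, underwater images acquired by optical systems suffer from various degradations, such as color distortion and reduced contrast. Notably, truly clear reference images are difficult to obtain for underwater image enhancement. This makes end-to-end deep learning methods hard to apply to this type of image. Effective enhancement of underwater images is therefore an important and challenging task.

Objective: We study an enhancement method, tailored to the characteristics of degraded underwater images, that effectively improves image color and contrast without requiring high-quality reference images. The method should also require no manual parameter setting. Its computational complexity should be low enough to meet the real-time requirements of practical applications.

Method: We propose an adaptive full-scale Retinex (AFSR) method to enhance degraded underwater images or videos. Traditional methods use one or more fixed scales for illumination estimation. In contrast, we adopt a full-scale design guided by optical transmission, so the estimated illumination component is not limited by manually designed scale parameters. In addition, the method exploits color constancy to correct color directly, without any prior knowledge or post-processing. Meanwhile, a simple strategy based on linear approximation replaces the logarithmic function, which greatly reduces the computational complexity of the algorithm.

Results: Enhancement experiments on real degraded underwater data show that the method simultaneously corrects color casts and improves contrast. It also processes video sequences in real time (0.021 s/frame). Compared with eight state-of-the-art algorithms, the method performs better in both contrast enhancement and color correction.

Conclusion: In this paper, we propose a new adaptive full-scale Retinex (AFSR) method for underwater image enhancement that simultaneously addresses color deviation and contrast reduction. The proposed method adapts to scenes with different illumination and depths. This removes the reliance of traditional multi-scale Retinex methods on manually designed scale parameters. Other “cascaded” restoration methods correct color and contrast in separate steps. Unlike them, this method corrects color and contrast simultaneously without post-processing. Furthermore, the proposed quantitative method computes the reflection component directly with a linear mapping function instead of a logarithmic operation. This effectively reduces computational complexity and enables real-time video enhancement.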

Abstract:Current Retinex-based image enhancement methods with fixed scale filters cannot adapt to situations involving various depths of field and illuminations. In this paper, a simple but effective method based on adaptive full-scale Retinex (AFSR) is proposed to clarify underwater images or videos. First, we design an adaptive full-scale filter that is guided by the optical transmission rate to estimate illumination components. Then, to reduce the computational complexity, we develop a quantitative mapping method instead of non-linear log functions for directly calculating the reflection component. The proposed method is capable of real-time processing of underwater videos using temporal coherence and Fourier transformations. Compared with eight state-of-the-art clarification methods, our method yields comparable or better results for image contrast enhancement, color-cast correction and clarity.


Keywords:

- underwater

- image enhancement

- Retinex

- imaging through turbulent media


1. Introduction

In recent years, underwater imaging systems have been widely used in marine energy exploration, underwater environment monitoring, military applications, and other fields. However, underwater images[1, 2] suffer from significant visibility degradation, mainly caused by the optical transmission properties of water. Hou et al.[2] proposed an optimization approach to estimate underwater optical properties. A set of individual images obtained under different conditions or ranges showed that the degradation is extremely non-linear and is affected by many factors, such as turbidity, location, depth, and temperature. Consequently, the distance beyond which objects become indistinguishable varies accordingly. Moreover, Wells[3] applied small-angle approximations to derive an underwater modulation transfer model. He also provided a method for checking the consistency of measurements taken at different ranges. The contrast response of an underwater imaging system attenuates exponentially as the distance between the imaging device and the target increases. Recent experimental results in typical sea-water environments[4] have shown that objects at distances greater than 10 meters are almost indistinguishable. Therefore, enhancing underwater images is a significant but challenging task.

The existing methods for underwater image enhancement can be divided into four classes: restoration methods[5-8], enhancement methods[9-13], fusion methods[14-16], and deep learning methods[17-19]. Research on restoration mainly focuses on constructing physical models and exploiting prior information. For example, Chiang and Chen[6] proposed a wavelength-compensation method that estimates the transmittance by combining a dark channel prior[7] with an atmospheric scattering model. This method uses triangular bilateral filtering for noise removal and color correction. However, Chiang and Chen's wavelength-compensation model is sensitive to the light within the aqueous medium. Recently, Zhang and Peng[8] proposed an underwater image formation model in which the medium transmission is estimated from a joint prior. However, such prior information may fail in realistic scenarios, which degrades the robustness of the model.

Image enhancement methods, on the other hand, mainly focus on color correction and contrast enhancement. Examples include color and contrast enhancement with Retinex-based methods[9-11] and color correction with the white-balance method[12] and the shade-of-gray method[13]. Specifically, these Retinex-based methods[10, 11] rely on the assumption of color constancy[9]. That is, the color of an object is determined by the reflection characteristics of its surface and depends only weakly on the illumination component of the scene. Therefore, the Retinex algorithm[9-11] can achieve state-of-the-art results for contrast improvement and color correction simultaneously. For example, the multi-scale Retinex (MSR) algorithm[10] usually uses three fixed-scale filters to estimate the illumination component of the entire image. Moreover, a weighted variational Retinex model[14] was proposed to estimate the reflectance and illumination under regularization terms. This model can preserve more details and partially suppress noise. However, it does not consider the relationship between the imaging depth and the illumination component.

Among the fusion methods, Ancuti et al.[15] proposed a method to restore degraded underwater images. This method first derives the inputs and the weight measures from a degraded version of the image. Then, four weight maps are defined to increase the visibility of distant objects degraded by medium scattering and absorption. However, this method requires many parameters and considerable post-processing. Moreover, Zosso et al.[16] proposed a non-local Retinex framework using non-local versions of the sparseness and fidelity priors.

Recently, deep learning methods have been applied to underwater image enhancement. For instance, Han et al.[17] provided a systematic review of state-of-the-art intelligent methods for dehazing and color restoration of underwater images. However, underwater images distorted by color casts or other phenomena lack ground truth, which is a major obstacle to adopting deep learning approaches. Instead of collecting training data, Li et al.[18] proposed WaterGAN. It generates realistic underwater images from paired in-air images and depth maps in an unsupervised pipeline for the color correction of monocular underwater images. Unlike existing single underwater image enhancement techniques, Berman et al.[19] considered multiple spectral profiles of different water types and reduced the underwater enhancement problem to single image dehazing. However, because the water type is unknown, this method needs a prior evaluation of the parameters of different water types. Meanwhile, Li et al.[20] proposed a real-time visualization system for deep-sea surveying. Sun et al.[21] proposed a deep pixel-to-pixel network (DP2P) built on an encoding-decoding framework.

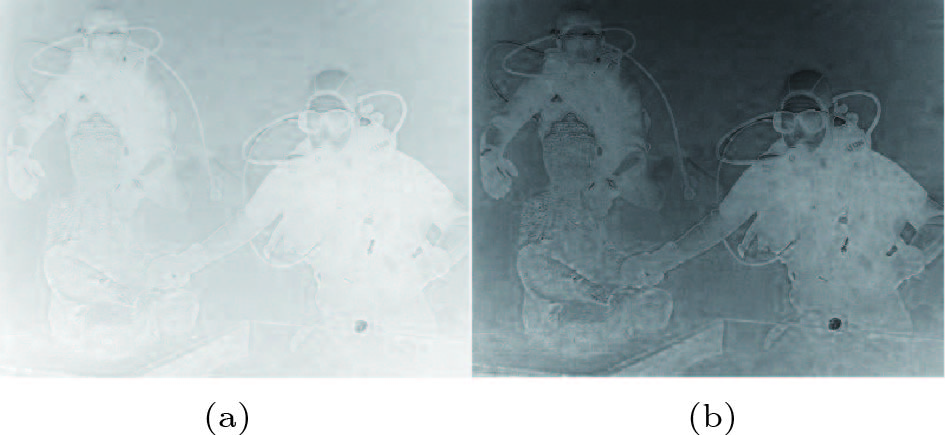

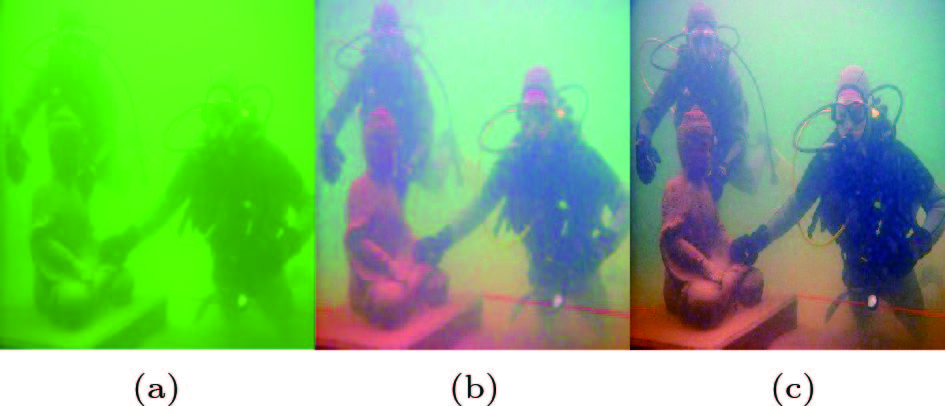

Most of the above-mentioned methods have made significant progress in improving the clarity of underwater images or videos. However, as described in [22, 23], the filter design in Retinex-based algorithms does not fully consider the degree of haze in the image or the image information at different depths of field. This applies to both single-scale Retinex (SSR) and multi-scale Retinex (MSR). Meanwhile, for the deep learning methods[24, 25], it is almost impossible to obtain a prior evaluation of the parameters of different water types. In the CLM[26] method, the wavelength-compensation strategy can hardly correct the color cast caused by water depth. Therefore, this wavelength-compensation method cannot guarantee color fidelity and the clarity of local details at the same time. In addition, the variational and fusion methods have very high computational complexity because they rely on iterative optimization.

Thus, it is particularly important to develop an adaptive-scale filter and to reduce the complexity of the method. This is the starting point of our research. As shown in Fig.1(b), the MSR method[10] can enhance the underwater image to a great extent. However, it obtains the illumination component with manually set multi-scale filters, so it neglects the effect of depth-of-field variation. The MSR result therefore suffers from different degrees of blur; for example, the texture information of the two frogmen at different depths of field is missing. In contrast, the proposed method (Fig.1(c)) can simultaneously correct the color cast and improve the contrast at different depths of field, such as for the two frogmen and the Buddha statue.

Figure 1. Typical underwater image processing results. (a) Original underwater image. (b) Result of the MSR method[10]. (c) Result of the proposed adaptive full-scale Retinex method.

The rest of this paper is organized as follows. Section 2 presents the proposed model for generating full-scale images and enhancing degraded images. The experimental results and performance evaluations are presented in Section 3. Finally, Section 4 concludes this paper.

2. Proposed Method

We aim for a simple and robust approach that can increase the visibility of a wide variety of underwater videos and images. To alleviate the aforementioned limitations, we propose an adaptive full-scale Retinex (AFSR) method to enhance degraded underwater images or videos.

Previous methods[9-12] use one or more fixed scales for illumination estimation. In contrast, we first adopt a full-scale design guided by optical transmission. Then, to reduce the computational complexity of the MSR algorithm[10], we develop a simple strategy based on linear approximation instead of logarithmic functions. Subsections 2.1-2.3 describe, respectively, the relationship between transmission and the Retinex model, the adaptive full-scale Retinex, and the framework of the proposed method. Subsection 2.4 then analyzes the sensitivity of the method and optimizes the algorithm.

2.1 Relevance Between Transmission and the Retinex Model

Underwater imaging is mainly affected by absorption and scattering. Absorption is related to the refractive index of the medium, while scattering is caused by suspended particles and water molecules. According to the empirical Lambert-Beer law[1], light propagating in a medium decays exponentially. Assuming that the medium is homogeneous, the optical path transfer function can be expressed as:

$$t(x) = e^{-\beta d(x)},$$

where $\beta$ is the attenuation coefficient and $d(x)$ is the scene depth. However, these parameter values are not easy to obtain directly in practice. This is a priori information closely tied to the imaging model. According to the optical imaging model[2], the light received by an underwater camera consists of direct, forward-scattering, and background-scattering components. The observed image $I_\lambda(x)$ can therefore be expressed as the sum of three components:

$$I_\lambda(x) = J_\lambda(x)t_\lambda(x) + J_\lambda(x)t_\lambda(x) \otimes g(x) + A_\lambda\bigl(1 - t_\lambda(x)\bigr), \tag{1}$$

where:

- $\lambda$ denotes the optical transmission wavelength;
- $\otimes$ represents the convolution operator;
- $A_\lambda$ is the illumination of the optical transmission medium (the background light);
- $J_\lambda(x)$ denotes the clear (undegraded) image.

The components $J_\lambda(x)t_\lambda(x) \otimes g(x)$ and $A_\lambda(1 - t_\lambda(x))$ produce blur and color casts. This happens because suspended particles scatter both the reflected light and the background light at small angles. If the distance between the scene and the camera is short, the blurring caused by the forward-scattering kernel $g(x)$ can be neglected, and (1) simplifies to:

$$I(x) = J(x)t(x) + A\bigl(1 - t(x)\bigr). \tag{2}$$

The underwater degradation model in (2) is essentially the same as the atmospheric scattering model[1]. Underwater scenes contain shadows and colored objects or surfaces, which satisfy the conditions of the dark channel prior[9]. Therefore, the background light $A$ and the transmission rate $t(x)$ can be obtained by local minimum filtering (i.e., the dark channel prior), and the enhanced image can then be restored. Unfortunately, unlike atmospheric media, water absorbs light selectively, so different wavelengths are attenuated to different degrees. For example, long red wavelengths are attenuated more than short blue wavelengths. As a result, restoring underwater images with the dark channel method causes serious color distortion. Moreover, the simplification from (1) to (2) means the restored image will retain some haze and color deviation, however accurate the transmission estimate is. In contrast to the transmission models[7, 9], our method incorporates the color-constancy assumption of Retinex-based methods. As shown in Fig.1(c), the proposed method can remove color casts while producing a natural-looking image.
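The local-minimum (dark channel) estimate of the transmission mentioned above can be illustrated with a minimal, pure-Python sketch of the standard dark-channel-prior form $t(x) = 1 - \omega\,\mathrm{dark}(I/A)(x)$. The weight `omega = 0.95`, the window radius, and the simplified choice of $A$ (the color at the brightest dark-channel pixel) are assumptions for illustration, not settings reported in this paper. Images are nested lists of rows of (R, G, B) floats in [0, 1].

```python
def dark_channel(img, radius):
    """Minimum over RGB, then over a (2*radius + 1) x (2*radius + 1) window."""
    h, w = len(img), len(img[0])
    min_rgb = [[min(px) for px in row] for row in img]
    return [
        [
            min(
                min_rgb[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def background_light(img, radius=1):
    """Simplified A (assumption): the color of the pixel with the largest dark-channel value."""
    dark = dark_channel(img, radius)
    coords = [(y, x) for y in range(len(img)) for x in range(len(img[0]))]
    y, x = max(coords, key=lambda p: dark[p[0]][p[1]])
    return img[y][x]


def transmission(img, A, radius=1, omega=0.95):
    """DCP transmission t(x) = 1 - omega * dark_channel(I / A)."""
    eps = 1e-6  # guards against a zero component in A
    normalized = [
        [tuple(c / max(a, eps) for c, a in zip(px, A)) for px in row] for row in img
    ]
    return [[1.0 - omega * d for d in row] for row in dark_channel(normalized, radius)]


# Usage: a uniform hazy patch with an assumed background light.
hazy = [[(0.1, 0.4, 0.6)] * 3 for _ in range(3)]
t = transmission(hazy, A=(0.2, 0.8, 0.9))
print(round(t[1][1], 3))  # 0.525
```

As the text warns, a single channel-independent $t$ like this ignores wavelength-selective absorption. It is only the transmission input, not a full restoration.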

The Retinex model[1] assumes color constancy: the color of an object is determined by the reflective characteristics of its surface. From a signal-processing viewpoint, the illumination component varies slowly and occupies the low frequencies, whereas the reflection component carries the high frequencies. Thus, the illumination component can be separated with a Gaussian low-pass filter. The most basic Retinex model is:

$$I_i^\lambda = L_i R_i^\lambda,$$

where $\lambda$ is one of the RGB channels, and $L_i$ and $R_i$ are the illumination and reflection components, respectively. Estimating these two components from the known degraded image is therefore essentially an inverse problem. As mentioned in Section 1, there are three ways (path, surround, and variation) to estimate them. For a reliable and valid illumination estimate, our method builds on a surround-function model. The conventional surround-function model is:

$$L_i = I_i \otimes F_i(x,y), \qquad F_i(x,y) = \frac{1}{2\pi\sigma_i^2}\, e^{-\frac{x^2+y^2}{2\sigma_i^2}},$$

where $F_i(x,y)$ is the surround function at scale $\sigma_i$. Given the illumination estimate, the Retinex model yields the reflection component $R(x,y)$ through logarithmic operations, i.e., $\log R_i = \log I_i - \log L_i$. Different values of $\sigma_i$ produce different effects, and traditional methods[12, 13] choose them empirically. To improve the model, we use the transmission rate to construct the scale parameters. Unlike the transmission model[7], our method considers color constancy. Unlike multi-scale Retinex, it considers the depth-dependent transmission rate. Subsection 2.2 derives the proposed method in detail.
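The surround-function model and the logarithmic reflection step above correspond to a conventional single-scale Retinex (SSR) and its multi-scale average (MSR). The minimal pure-Python sketch below processes one color channel. It assumes a separable Gaussian kernel truncated at $3\sigma$, replicate padding at the borders, and equal MSR weights. The scales (40, 80, 100) are borrowed from Fig.3(c) for illustration. This is the fixed-scale baseline that the paper improves on, not the AFSR method itself.

```python
import math


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian truncated at 3 sigma (the 2-D kernel is separable)."""
    radius = max(1, math.ceil(3 * sigma))
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def blur(channel, sigma):
    """L = I (*) F: separable Gaussian convolution with replicate padding."""
    h, w = len(channel), len(channel[0])
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    # Horizontal pass, then vertical pass.
    rows = [[sum(k[j] * row[min(w - 1, max(0, x + j - r))] for j in range(len(k)))
             for x in range(w)] for row in channel]
    return [[sum(k[j] * rows[min(h - 1, max(0, y + j - r))][x] for j in range(len(k)))
             for x in range(w)] for y in range(h)]


def ssr(channel, sigma, eps=1e-6):
    """Single-scale Retinex: log R = log I - log(I (*) F)."""
    L = blur(channel, sigma)
    return [[math.log(i + eps) - math.log(l + eps) for i, l in zip(ri, rl)]
            for ri, rl in zip(channel, L)]


def msr(channel, sigmas=(40, 80, 100)):
    """Multi-scale Retinex: equal-weight average of SSR outputs at fixed scales."""
    outs = [ssr(channel, s) for s in sigmas]
    h, w = len(channel), len(channel[0])
    return [[sum(o[y][x] for o in outs) / len(outs) for x in range(w)] for y in range(h)]
```

On a flat channel, the surround equals the input and the log reflectance is zero everywhere. An isolated bright pixel gets a positive log reflectance.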

2.2 Adaptive Full-Scale Retinex

We assume that the Retinex method and the transmission model achieve the same clarified result, that is, $J = R$. Since both degradation models describe the same observation, we have:

$$J(x)t(x) + A\bigl(1 - t(x)\bigr) = \bigl(I_i \otimes F_i(x,y)\bigr) \times R_i^\lambda. \tag{3}$$

Equation (3) shows that the optical transmission rate is related to the scale factor. From (3), the point-to-point mapping between $t(x)$ and $\sigma_i$ can be calculated. Guiding the scale parameters with the transmission rate yields a more accurate model scale. At the same time, the color-constancy prior is integrated, so the proposed model does not require a separate color-balance post-processing step. We find that the scale is not sensitive to channel attenuation, which indicates that the proposed method is more robust than the channel attenuation model[5].

To derive the mapping between $t(x)$ and $\sigma_i$ from (3), we divide both sides by $J$, using $J = R$ on the right-hand side. We also add a small constant $\varepsilon$ to the denominator to avoid division by zero. This gives:

$$t(x) + \frac{A\bigl(1 - t(x)\bigr)}{J + \varepsilon} = I_i \otimes F_i(x,y). \tag{4}$$

We denote the left-hand side of (4) as

$$G(t) = t(x) + \frac{A\bigl(1 - t(x)\bigr)}{J + \varepsilon},$$

and take the Fourier transform of both sides of (4):

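$$\mathcal{F}\bigl(G(t)\bigr) = \mathcal{F}(I) \times e^{-\frac{r^2}{2\sigma_i^2}},$$

where $\mathcal{F}(\cdot)$ denotes the Fourier transform and $r$ is the radial distance in the frequency domain. Solving for $\sigma_i$ gives the analytic relationship between the scale and the transmission rate: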

$$\sigma_i = \frac{r}{\sqrt{\left|-2\ln\bigl(\mathcal{F}(G(t)) / \mathcal{F}(I)\bigr)\right|}}. \tag{5}$$

Equation (5) is an analytic, frequency-domain expression in which $t$ acts as an implicit variable. To obtain the spatial-domain mapping between the transmission rate and the full scale directly, we use least-squares fitting. The final formula is:

$$\sigma_i = e^{\theta \tilde{t}_i},$$

where $\theta$ is an exponential coefficient. Empirically, $\theta$ lies between 4.30 and 4.95, and we use $\theta = 4.7$ in this paper. $\tilde{t}_i$ is the transmission rate, which can be obtained by restoration methods[7, 9]. Fig.2 shows the spatial-domain maps of the transmission rate $\tilde{t}_i$, obtained by the dark channel method[7], and of the scale parameter $\sigma_i$.
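Both scale formulas can be checked numerically. The sketch below evaluates the fitted mapping $\sigma_i = e^{\theta \tilde{t}_i}$ with the paper's $\theta = 4.7$ on a toy transmission map. It also inverts (5) for a single radial frequency $r$. The toy values and the function names are hypothetical.

```python
import math

THETA = 4.7  # value used in the paper (reported empirical range 4.30-4.95)


def scale_from_transmission(t, theta=THETA):
    """Fitted spatial-domain mapping: sigma_i = exp(theta * t_i)."""
    return math.exp(theta * t)


def scale_from_spectrum_ratio(r, ratio):
    """Eq. (5): sigma = r / sqrt(|-2 ln(F(G)/F(I))|); ratio must not equal 1."""
    return r / math.sqrt(abs(-2.0 * math.log(ratio)))


# Full-scale map: one sigma per pixel of a toy transmission map.
t_map = [[0.2, 0.5], [0.8, 1.0]]
sigma_map = [[scale_from_transmission(t) for t in row] for row in t_map]
```

With $\theta = 4.7$, the scale grows from about 2.6 at $\tilde{t} = 0.2$ to about 110 at $\tilde{t} = 1.0$. The exponential mapping therefore spans a wide range of surround sizes across one image.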

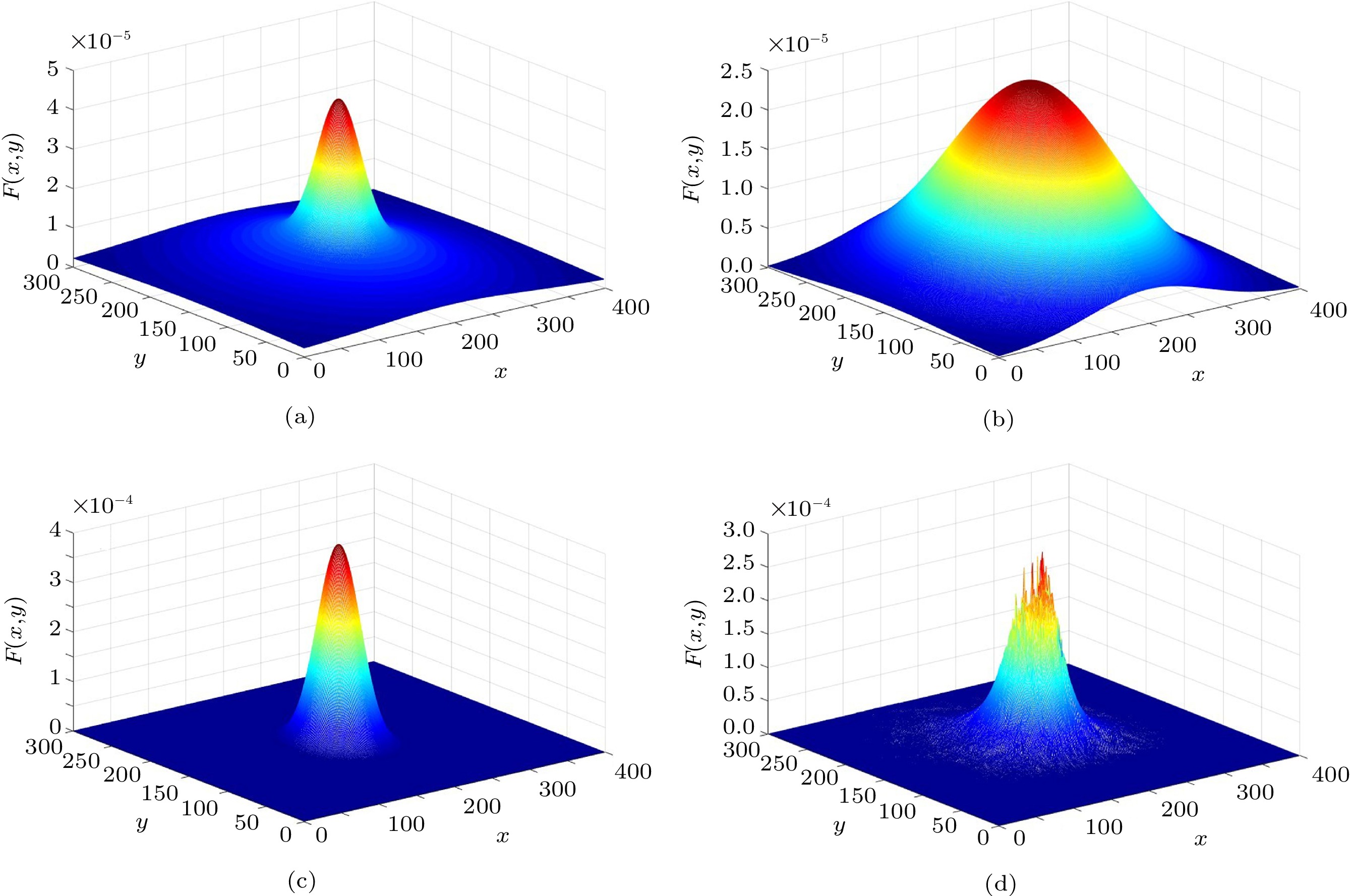

As Fig.2 shows, the scale parameter at each pixel position (Fig.2(b)) is adaptively guided by the transmission rate (Fig.2(a)). Hence, we call this method “adaptive full-scale Retinex”. Our method has two advantages. First, it exploits color constancy. Second, the exponential transformation relaxes the requirement for an accurate transmission estimate. The proposed model is therefore much more robust for underwater image enhancement. Once the values of $\sigma_i$ are obtained, the surround function shown in Fig.3 can be derived.
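A spatially varying surround of this kind can be sketched directly in the spatial domain. Each output pixel is a Gaussian-weighted average whose width is that pixel's own $\sigma_i$. This brute-force sketch rests on assumptions about how the per-pixel scales are applied. Weights are renormalized at the image border, and an optional `max_radius` cap bounds the window. It is also far slower than the Fourier-based implementation the paper uses for real-time processing. The function name is hypothetical.

```python
import math


def adaptive_illumination(channel, sigma_map, max_radius=None):
    """L(x): Gaussian-weighted local mean using the scale sigma_map[y][x] at each pixel."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = sigma_map[y][x]
            r = math.ceil(3 * s)  # truncate this pixel's kernel at 3 sigma
            if max_radius is not None:
                r = min(r, max_radius)
            num = den = 0.0
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    wgt = math.exp(-((yy - y) ** 2 + (xx - x) ** 2) / (2.0 * s * s))
                    num += wgt * channel[yy][xx]
                    den += wgt
            out[y][x] = num / den  # renormalize so the weights sum to 1
    return out


# Usage (toy values): pixels with a small sigma stay close to their own value,
# pixels with a large sigma are pulled toward the surrounding mean.
channel = [[0.2, 0.8, 0.2], [0.8, 0.2, 0.8], [0.2, 0.8, 0.2]]
sigmas = [[0.5, 3.0, 0.5], [3.0, 0.5, 3.0], [0.5, 3.0, 0.5]]
L = adaptive_illumination(channel, sigmas)
```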

Figure 3. Scale surround functions under different scale settings. (a) and (b) Spatial surround functions with single scales σ=60 and σ=90, respectively. (c) Spatial surround function for the multi-scale setting σ=40, 80, 100. (d) Proposed full-scale $\sigma_i$, adaptively guided by optical transmission rates.

As shown in Fig.3, normalized surround functions with different scale parameters have different shapes. Compared with SSR (Fig.3(a) and Fig.3(b)) and MSR (Fig.3(c)), the proposed method (Fig.3(d)) takes full account of depth information. It can therefore adapt to scenes with uneven illumination and inhomogeneous optical-transmission media, because its full-scale $\sigma_i$ is adaptively guided by the optical transmission rate. The image enhancement results in Fig.1 also demonstrate the effectiveness of our method.

2.3 Algorithm Framework

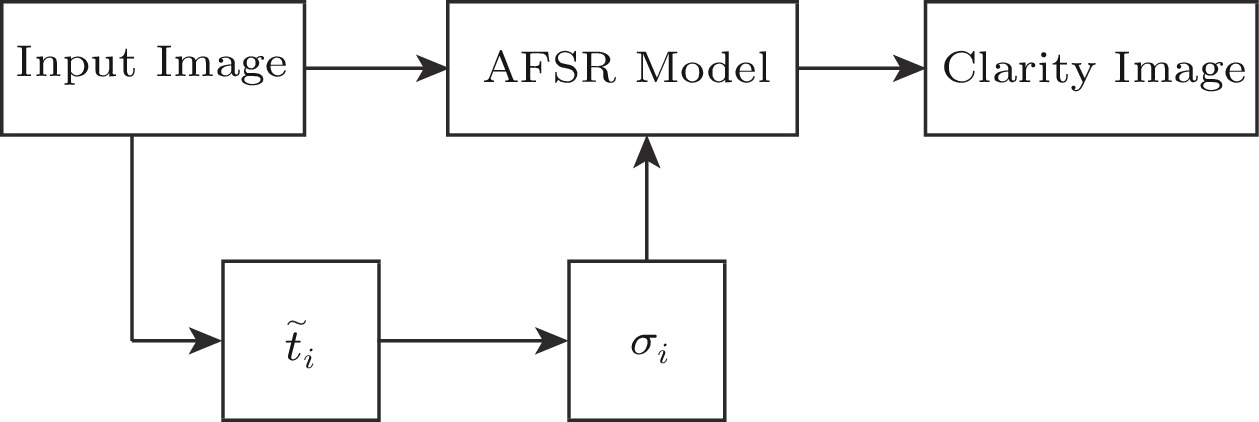

Our method extends the conventional Retinex-based enhancement methods[9-11], and the proposed framework incorporates adaptive full-scale filters guided by the transmission model. Retinex-based methods assume color constancy in both natural and artificial illumination scenes. As a result, the influence of optical absorption and back-scattering can be easily reduced. Fig.4 shows the block diagram of the proposed method.

The method proceeds in four steps:

1. Obtain the transmittance values $\tilde{t}_i$ with a restoration model.
2. Construct the full-scale surround function with the scale parameters $\sigma_i$.
3. Obtain the illumination and reflection components with the Retinex model.
4. Use the reflection component to clarify the underwater image, for each of the various underwater scenes.

Subsection 2.4 further refines the model.
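The four steps can be strung together in a compact end-to-end sketch. Apart from the paper's $\theta = 4.7$ and $\mu = 3.0$, every choice here is an assumption:

- dark-channel transmission with `OMEGA = 0.95`;
- reflectance taken as $R = I/L$ (the Retinex model without the logarithm);
- $R_{\rm var}$ read as a standard deviation;
- clipping to [0, 255];
- a `MAX_RADIUS` window cap that keeps the brute-force filter tractable.

The function `afsr_sketch` is hypothetical and illustrates the data flow only. It does not reproduce the authors' implementation or its timing.

```python
import math

THETA, MU = 4.7, 3.0     # values reported in the paper
OMEGA, EPS = 0.95, 1e-6  # assumed DCP weight and numerical guard
MAX_RADIUS = 15          # assumed cap on the surround window


def local_min(m, r):
    """Minimum over a (2r+1) x (2r+1) window."""
    h, w = len(m), len(m[0])
    return [[min(m[yy][xx]
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)] for y in range(h)]


def afsr_sketch(img, A, radius=1):
    h, w = len(img), len(img[0])
    # Step 1: transmission from a dark-channel prior (assumed restoration model).
    norm = [[min(c / max(a, EPS) for c, a in zip(px, A)) for px in row] for row in img]
    t = [[1.0 - OMEGA * d for d in row] for row in local_min(norm, radius)]
    # Step 2: per-pixel scale sigma_i = exp(theta * t_i).
    sigma = [[math.exp(THETA * v) for v in row] for row in t]
    channels = []
    for c in range(3):
        ch = [[px[c] for px in row] for row in img]
        refl = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                # Step 3: illumination from the adaptive surround, then R = I / L.
                s = sigma[y][x]
                r = min(math.ceil(3 * s), MAX_RADIUS)
                num = den = 0.0
                for yy in range(max(0, y - r), min(h, y + r + 1)):
                    for xx in range(max(0, x - r), min(w, x + r + 1)):
                        wgt = math.exp(-((yy - y) ** 2 + (xx - x) ** 2) / (2.0 * s * s))
                        num += wgt * ch[yy][xx]
                        den += wgt
                refl[y][x] = ch[y][x] / (num / den + EPS)
        # Step 4: linear mapping [mean - MU*std, mean + MU*std] -> [0, 255].
        vals = [v for row in refl for v in row]
        mean = sum(vals) / len(vals)
        std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
        lo, hi = mean - MU * std, mean + MU * std
        span = hi - lo if hi > lo else 1.0  # constant channel: avoid 0/0
        channels.append([[min(255.0, max(0.0, (v - lo) / span * 255.0)) for v in row]
                         for row in refl])
    return [[tuple(channels[c][y][x] for c in range(3)) for x in range(w)] for y in range(h)]
```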

2.4 Retinex Algorithm Improvement

We theoretically and experimentally analyze the robustness of the proposed framework and optimize the computational time complexity.

2.4.1 Sensitivity Analysis

We obtain the scale parameter $\sigma_i$ under the guidance of the transmission rate $t_i$. In theory, the scale parameters are therefore not sensitive to differences in transmission rate among the channels. Moreover, the scale parameter determines the pixel weights at each spatial position. An error in the estimated transmission rate affects only the boundary values in scale space, i.e., only the spatial edges with the smallest weights. Therefore, the scale parameter is theoretically insensitive to the transmission rate. To test this experimentally, we use the dark channel method[9] to calculate the scale parameters and compare the result with the ARC[5] method. Because the ARC method accounts for different channel transmission rates in underwater images, we first use it to obtain $J$. This model is:

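$$J^{\rm C}(x) = \frac{I^{\rm C}(x) - A^{\rm C}}{\max\bigl(t^{\rm C}(x), t_0\bigr)} + A^{\rm C},$$

where $t_0$ is a small lower bound on the transmission rate, and the channel transmission rates are given by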

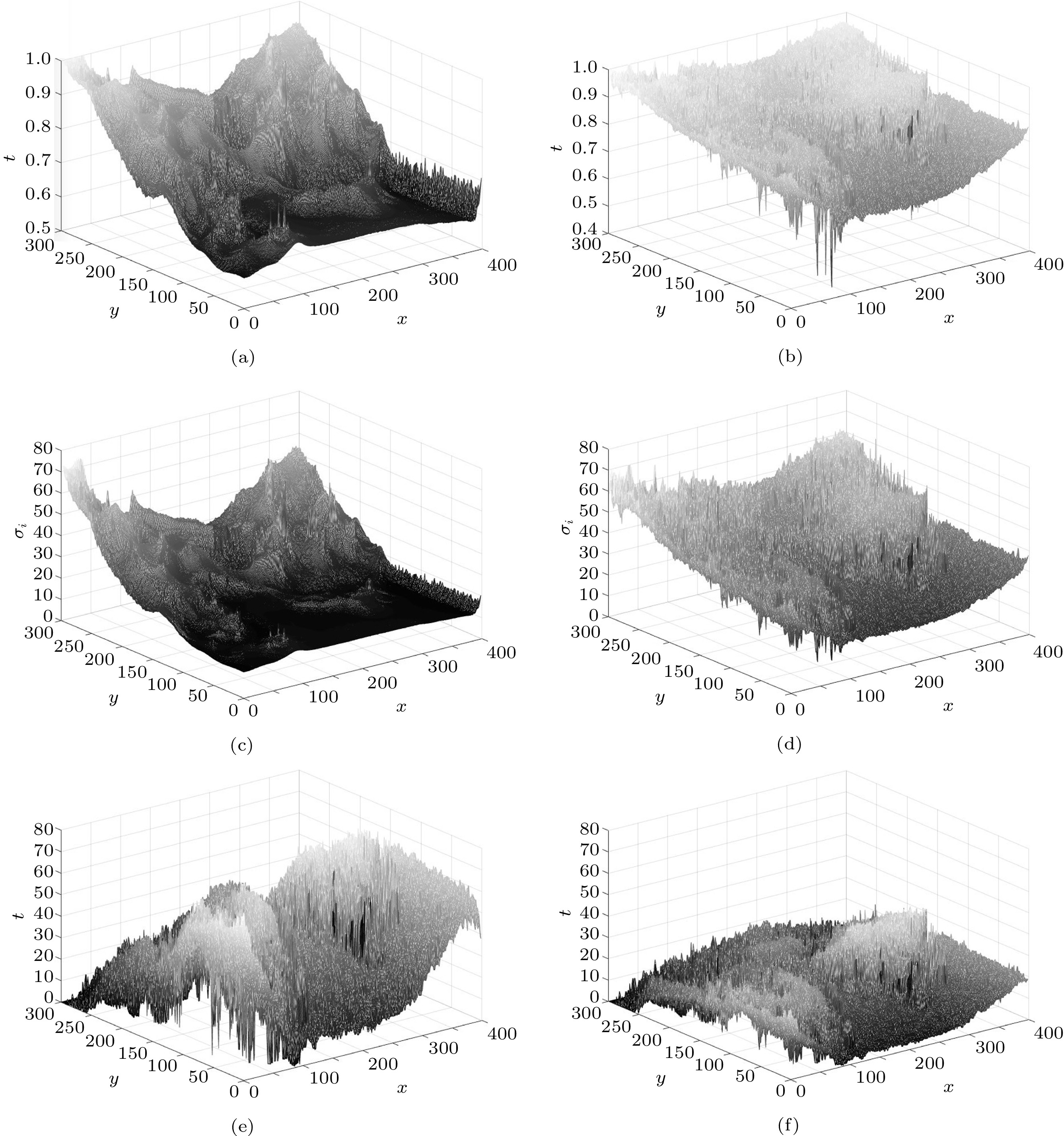

$$\left\{\begin{aligned} t^{\rm R}(x) &= e^{-\beta^{\rm R} d(x)}, \\ t^{\rm G}(x) &= \left(e^{-\beta^{\rm R} d(x)}\right)^{\beta^{\rm G}/\beta^{\rm R}} = \left(t^{\rm R}(x)\right)^{\lambda_{\rm G}}, \\ t^{\rm B}(x) &= \left(e^{-\beta^{\rm R} d(x)}\right)^{\beta^{\rm B}/\beta^{\rm R}} = \left(t^{\rm R}(x)\right)^{\lambda_{\rm B}}, \end{aligned}\right.$$

with $\lambda_{\rm G} = \beta^{\rm G}/\beta^{\rm R}$ and $\lambda_{\rm B} = \beta^{\rm B}/\beta^{\rm R}$. Interestingly, when the three transmission rates are equal, the ARC[5] method degenerates to the dark channel method[9]. Fig.5 shows the transmission rates, the scale values, and the corresponding residual maps.
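The channel relationship and the recovery formula above can be sketched as follows. The attenuation coefficients, the depth, and the lower bound `t0 = 0.1` are hypothetical illustration values. The sketch also reproduces the degenerate case noted in the text: with equal coefficients, all three channel transmissions coincide.

```python
import math


def channel_transmissions(t_red, beta_r, beta_g, beta_b):
    """t^G = (t^R)^(beta_G/beta_R) and t^B = (t^R)^(beta_B/beta_R)."""
    lam_g, lam_b = beta_g / beta_r, beta_b / beta_r
    return t_red, t_red ** lam_g, t_red ** lam_b


def recover(i_c, a_c, t_c, t0=0.1):
    """J^C = (I^C - A^C) / max(t^C, t0) + A^C for one channel value."""
    return (i_c - a_c) / max(t_c, t0) + a_c


d = 2.0                 # hypothetical scene depth
beta = (0.8, 0.3, 0.2)  # hypothetical (R, G, B) attenuation coefficients
t_r = math.exp(-beta[0] * d)
t_rgb = channel_transmissions(t_r, *beta)
```

Raising $t^{\rm R}$ to $\beta^{\rm G}/\beta^{\rm R}$ gives exactly $e^{-\beta^{\rm G} d}$, so the whole channel set follows from one red-channel estimate. Inverting (2) with `recover` returns the clear value.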

Figure 5. Analysis results of our method. (a) and (b) are the transmission rates (t) of the ARC method[5] and our method, respectively, while (c)(d) and (e)(f) correspond to the scale values and the transmission rate residuals of these two methods, respectively.

Because the ARC method uses different proportions of the channel transmission rates, its transmission rates and scales are much smoother than those of our method, as shown in Figs.5(a)–5(d). This smoothing discards some details. Furthermore, the normalized residual maps in Figs.5(e)–5(f) show that the transmission-rate error fluctuates much more than the mapped scale of the proposed method does. In other words, the estimated scale parameters depend only weakly on each channel. Thus, unlike the ARC method, the proposed method does not need to process the transmission rate of each channel separately. The ARC method requires highly precise transmission estimates, whereas our method recovers well even when this estimate is not very accurate. Our method therefore increases the robustness of the red-channel algorithm[5] while supporting parallel computation. Next, we further optimize the algorithm.

2.4.2 Algorithm Optimization

After the illumination component is obtained, the reflection component can be computed with the traditional logarithmic operation. However, logarithmic operations are non-linear and computationally expensive. To address this problem, we develop a quantitative method that calculates the reflection component directly. It uses a linear mapping function based on the channel mean and mean square error.

$R^{\rm C}$ is defined as the reflection component without the logarithmic operation. $R_{\rm mean}^{\rm C}$ and $R_{\rm var}^{\rm C}$ are its mean value and mean square error (MSE) in each of the R, G, B channels, respectively. The maximum and minimum of each channel are then calculated as:

$$\left\{\begin{aligned} R_{\max}^{\rm C} &= R_{\rm mean}^{\rm C} + \mu R_{\rm var}^{\rm C}, \\ R_{\min}^{\rm C} &= R_{\rm mean}^{\rm C} - \mu R_{\rm var}^{\rm C}, \end{aligned}\right.$$

where $\mu$ is an empirical parameter that controls the dynamic range adjustment of the image; we set it to 3.0. The quantitative mapping function that replaces the logarithmic operation is then:

$$R_{\rm opt}^{\rm C} = \frac{R^{\rm C} - R_{\min}^{\rm C}}{R_{\max}^{\rm C} - R_{\min}^{\rm C}} \times 255,$$

where $R_{\rm opt}^{\rm C}$ is the final reflection component, i.e., the enhanced image. Once the AFSR filter of Subsection 2.2 has produced the illumination component, this quantitative function yields the reflection component.
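The linear mapping that replaces the logarithm can be sketched per channel as follows. Three details are assumptions rather than statements from the paper:

- $R_{\rm var}^{\rm C}$ is read as the standard deviation (the root of the mean squared deviation).
- Values outside $[R_{\min}^{\rm C}, R_{\max}^{\rm C}]$ are clipped to [0, 255], because the formula itself is unbounded for outliers.
- A constant channel, for which the formula is 0/0, is mapped to mid-gray.

```python
import math


def quantitative_mapping(channel, mu=3.0):
    """Map a reflectance channel linearly from [mean - mu*std, mean + mu*std] to [0, 255]."""
    vals = [v for row in channel for v in row]
    mean = sum(vals) / len(vals)
    std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
    r_min, r_max = mean - mu * std, mean + mu * std
    if r_max == r_min:  # constant channel: map everything to mid-gray (assumed convention)
        return [[127.5 for _ in row] for row in channel]
    scale = 255.0 / (r_max - r_min)
    # Linear stretch with clipping to the 8-bit range (clipping is an assumption).
    return [[min(255.0, max(0.0, (v - r_min) * scale)) for v in row] for row in channel]


out = quantitative_mapping([[0.0, 1.0], [2.0, 3.0]])
```

The channel mean always maps to 127.5, so values placed symmetrically about the mean map symmetrically about mid-gray. The cost is one pass for the statistics and one for the mapping, with no per-pixel logarithm.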

3. Experimental Results and Analysis

Experiments are performed in MATLAB R2014b on a PC with an Intel Core i7 CPU (2.23 GHz) and 8 GB of RAM. In our method, only the empirical parameter $\mu$ is set (to 3.0). As described in Section 1, we compare the proposed model with eight other state-of-the-art underwater image clarification methods. Their parameters are set to the optimal values given in the original works. Images captured in different underwater environments show various degrees of attenuation and degradation. A method may therefore perform well under some underwater conditions but poorly under others. Following previous methods[7, 15, 22], the experimental datasets are drawn from four representative real scenarios that cover different depths and varying degrees of color cast. Both quantitative and qualitative metrics are used to compare the restoration performance of these methods.

3.1 Evaluation Metrics

We employ several basic image-quality metrics to evaluate and analyze the performance of the underwater imaging methods: entropy (EN), standard deviation (SD), and edge intensity (EI). The standard deviation of an image measures the spread of its intensity values and is related to image contrast. A higher standard deviation generally indicates higher contrast and richer color information. The entropy represents the amount of information. The edge intensity describes the level of edge detail in the image and is calculated with the fast Sobel operator[27]. However, these basic metrics cannot provide a comprehensive evaluation, because underwater images suffer from various degradations, each with its own features. Thus, we introduce the underwater image quality measure (UIQM)[28, 29] for a comprehensive evaluation.
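The three basic metrics can be sketched for an 8-bit grayscale image as follows. The paper does not spell out its exact definitions, so the sketch assumes common ones:

- EN: Shannon entropy of the 256-bin histogram, in bits;
- SD: the population standard deviation;
- EI: the mean Sobel gradient magnitude over interior pixels, using a plain Sobel operator rather than the fast variant of [27].

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def entropy(gray):
    """EN: Shannon entropy (bits) of the histogram of integer levels 0..255."""
    counts = [0] * 256
    for row in gray:
        for v in row:
            counts[v] += 1
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def std_dev(gray):
    """SD: population standard deviation of the pixel values."""
    vals = [v for row in gray for v in row]
    mean = sum(vals) / len(vals)
    return math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))


def edge_intensity(gray):
    """EI: mean Sobel gradient magnitude over interior pixels."""
    h, w = len(gray), len(gray[0])
    total = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * gray[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * gray[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            total += math.hypot(gx, gy)
    return total / ((h - 2) * (w - 2))
```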

The UIQM is composed of three independent measures: the underwater image colorfulness measure (UICM), the underwater image sharpness measure (UISM), and the underwater image contrast measure (UIConM)[29]. The UICM has been used to evaluate underwater image enhancement algorithms[13, 15, 19] and is based on the red-green (RG) and yellow-blue (YB) color components. The overall colorfulness metric for underwater images can be expressed as:

$$\mathrm{UICM} = -0.0268 \times \sqrt{\mu_{\rm RG}^2 + \mu_{\rm YB}^2} + 0.1586 \times \sqrt{\sigma_{\rm RG}^2 + \sigma_{\rm YB}^2},$$

where RG = R − G and YB = (R + G)/2 − B, and $\mu$ and $\sigma^2$ denote the mean and variance of each component. To measure sharpness, the Sobel operator is first applied to each color component to generate edge maps. These edge maps are then multiplied by the original color component to obtain grayscale edge maps. Finally, the enhancement measure estimation (EME)[17] measures the sharpness:

$$\mathrm{EME} = \frac{2}{mn}\sum_{k=1}^{m}\sum_{l=1}^{n} \log\left(\frac{I_{\max,k,l}}{I_{\min,k,l}}\right),$$

where the image is divided into $m \times n$ blocks, and $I_{\max,k,l}$ and $I_{\min,k,l}$ are the maximum and minimum pixel values in block $(k,l)$. The argument of the logarithm is the relative contrast ratio within each block. The UISM can then be written as:

$$\mathrm{UISM} = \sum_{c=1}^{3} \gamma_c\, \mathrm{EME}_c,$$

where $\mathrm{EME}_c$ is the EME of the grayscale edge map of color channel $c$. The weight coefficient $\gamma_c$ of each channel is normally set to 0.299, 0.587, and 0.114 for the R, G, and B channels, respectively.
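The sharpness computation described above has four stages: Sobel edge map, multiplication by the channel, block-wise EME, and a weighted sum over channels. It can be sketched as follows. Several details are assumptions, and the original UISM definition may differ in them:

- the block grid;
- the natural logarithm;
- an `eps` guard for blocks of zeros;
- dropping rows or columns that do not fill a whole block.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(ch):
    """Sobel gradient magnitude; border pixels are left at 0."""
    h, w = len(ch), len(ch[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * ch[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * ch[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out


def eme(ch, blocks=(2, 2), eps=1e-6):
    """EME = 2/(m n) * sum over the m x n blocks of log(I_max / I_min)."""
    m, n = blocks
    bh, bw = len(ch) // m, len(ch[0]) // n
    total = 0.0
    for bi in range(m):
        for bj in range(n):
            block = [ch[y][x] for y in range(bi * bh, (bi + 1) * bh)
                     for x in range(bj * bw, (bj + 1) * bw)]
            total += math.log((max(block) + eps) / (min(block) + eps))
    return 2.0 / (m * n) * total


def uism(img, blocks=(2, 2)):
    """UISM = sum_c gamma_c * EME(grayscale edge map of channel c)."""
    gammas = (0.299, 0.587, 0.114)  # R, G, B weights from the text
    score = 0.0
    for c, gamma in enumerate(gammas):
        ch = [[px[c] for px in row] for row in img]
        edges = sobel_magnitude(ch)
        gray_edge = [[e * v for e, v in zip(er, vr)] for er, vr in zip(edges, ch)]
        score += gamma * eme(gray_edge, blocks)
    return score
```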

Contrast is measured with the logarithmic AMEE measure:

$$\mathrm{UIConM} = \log\mathrm{AMEE} = \frac{1}{mn}\sum_{k=1}^{m}\sum_{l=1}^{n} \frac{I_{\max,k,l} - I_{\min,k,l}}{I_{\max,k,l} + I_{\min,k,l}} \log\left(\frac{I_{\max,k,l} - I_{\min,k,l}}{I_{\max,k,l} + I_{\min,k,l}}\right).$$

It has been demonstrated that underwater images can be modeled as linear superpositions of absorbed and scattered components[29].

Meanwhile, water absorption and backscatter by dust-like particles may cause color casts, sharpness attenuation, and contrast degradation. It is therefore reasonable to use a linear model to generate the overall underwater image quality measure. The UIQM is defined as:

$$\mathrm{UIQM} = \alpha \times \mathrm{UICM} + \beta \times \mathrm{UISM} + \eta \times \mathrm{UIConM},$$

where the colorfulness, sharpness, and contrast measures are combined linearly. The weight coefficients $\alpha$, $\beta$, and $\eta$ control the importance of each measure and balance their values. Generally, these parameters are set to $\alpha = 0.0282$, $\beta = 0.2953$, and $\eta = 3.5753$.
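The colorfulness and contrast measures, and their linear combination with the weights given above, can be sketched as follows. This sketch simplifies the original definitions in several ways:

- The UICM uses plain means and variances, as in the formula above. Panetta et al.'s original definition uses alpha-trimmed statistics, although the coefficients used here (−0.0268 and 0.1586) follow that original definition.
- The UIConM uses ordinary arithmetic instead of the PLIP operations of the original logAMEE.
- Blocks with zero contrast contribute 0 to the UIConM.

These simplifications and the block grid are assumptions.

```python
import math


def uicm(img):
    """Colorfulness from the RG = R - G and YB = (R + G)/2 - B opponent components."""
    rg = [r - g for row in img for (r, g, b) in row]
    yb = [(r + g) / 2.0 - b for row in img for (r, g, b) in row]

    def mean_var(v):
        m = sum(v) / len(v)
        return m, sum((x - m) ** 2 for x in v) / len(v)

    mu_rg, var_rg = mean_var(rg)
    mu_yb, var_yb = mean_var(yb)
    return -0.0268 * math.sqrt(mu_rg ** 2 + mu_yb ** 2) + 0.1586 * math.sqrt(var_rg + var_yb)


def uiconm(gray, blocks=(2, 2)):
    """logAMEE-style contrast: mean over blocks of c * log(c), c = (max - min)/(max + min)."""
    m, n = blocks
    bh, bw = len(gray) // m, len(gray[0]) // n
    total = 0.0
    for bi in range(m):
        for bj in range(n):
            block = [gray[y][x] for y in range(bi * bh, (bi + 1) * bh)
                     for x in range(bj * bw, (bj + 1) * bw)]
            hi, lo = max(block), min(block)
            c = (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0
            if c > 0:  # c * log(c) -> 0 as c -> 0, so flat blocks add nothing
                total += c * math.log(c)
    return total / (m * n)


def uiqm(uicm_v, uism_v, uiconm_v, alpha=0.0282, beta=0.2953, eta=3.5753):
    """UIQM = alpha * UICM + beta * UISM + eta * UIConM."""
    return alpha * uicm_v + beta * uism_v + eta * uiconm_v
```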

3.2 Performance Evaluation

We perform experiments on four scenarios with different color casts and compare our method with eight state-of-the-art methods. The experimental results in each scenario are analyzed both subjectively and objectively.

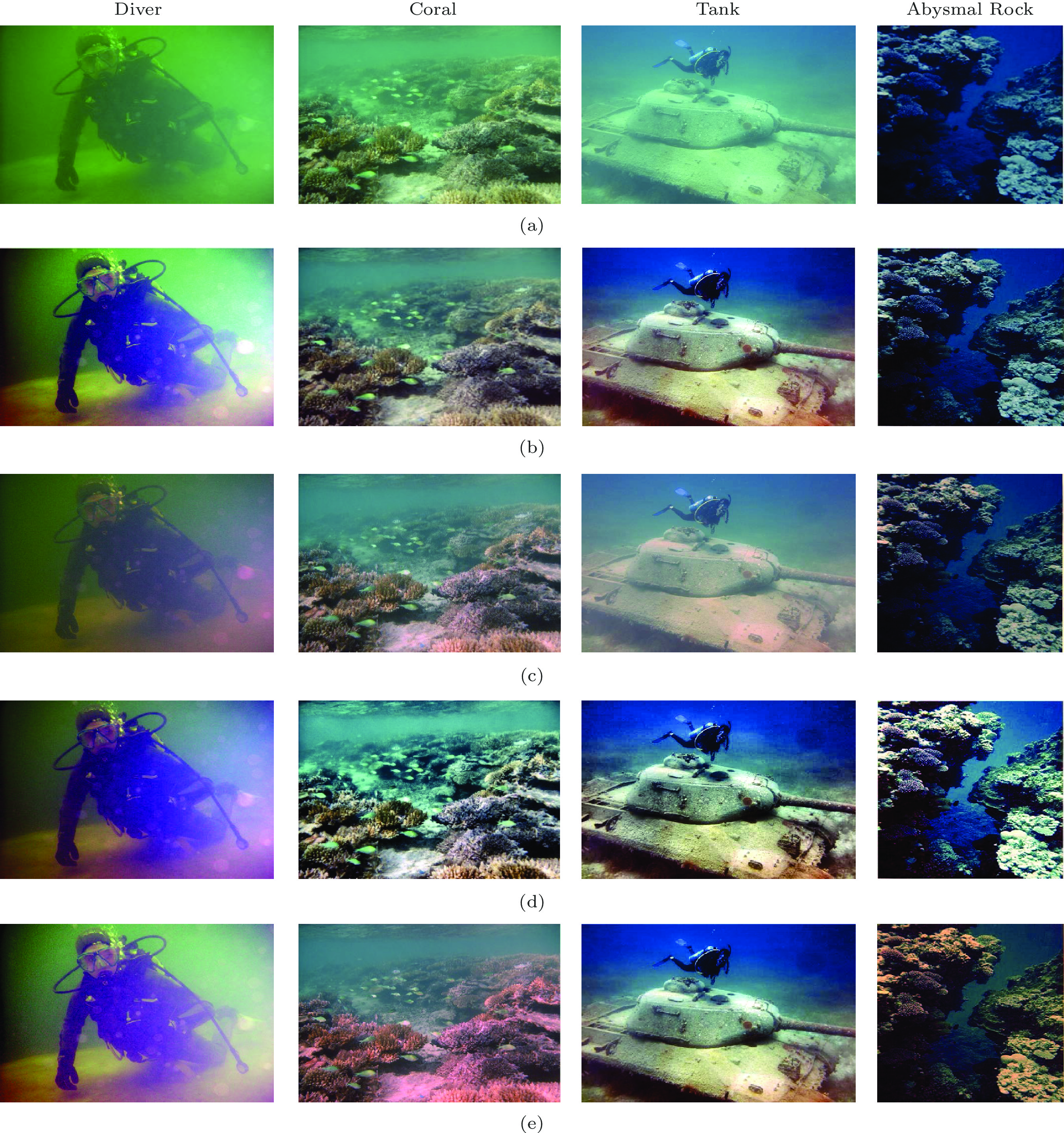

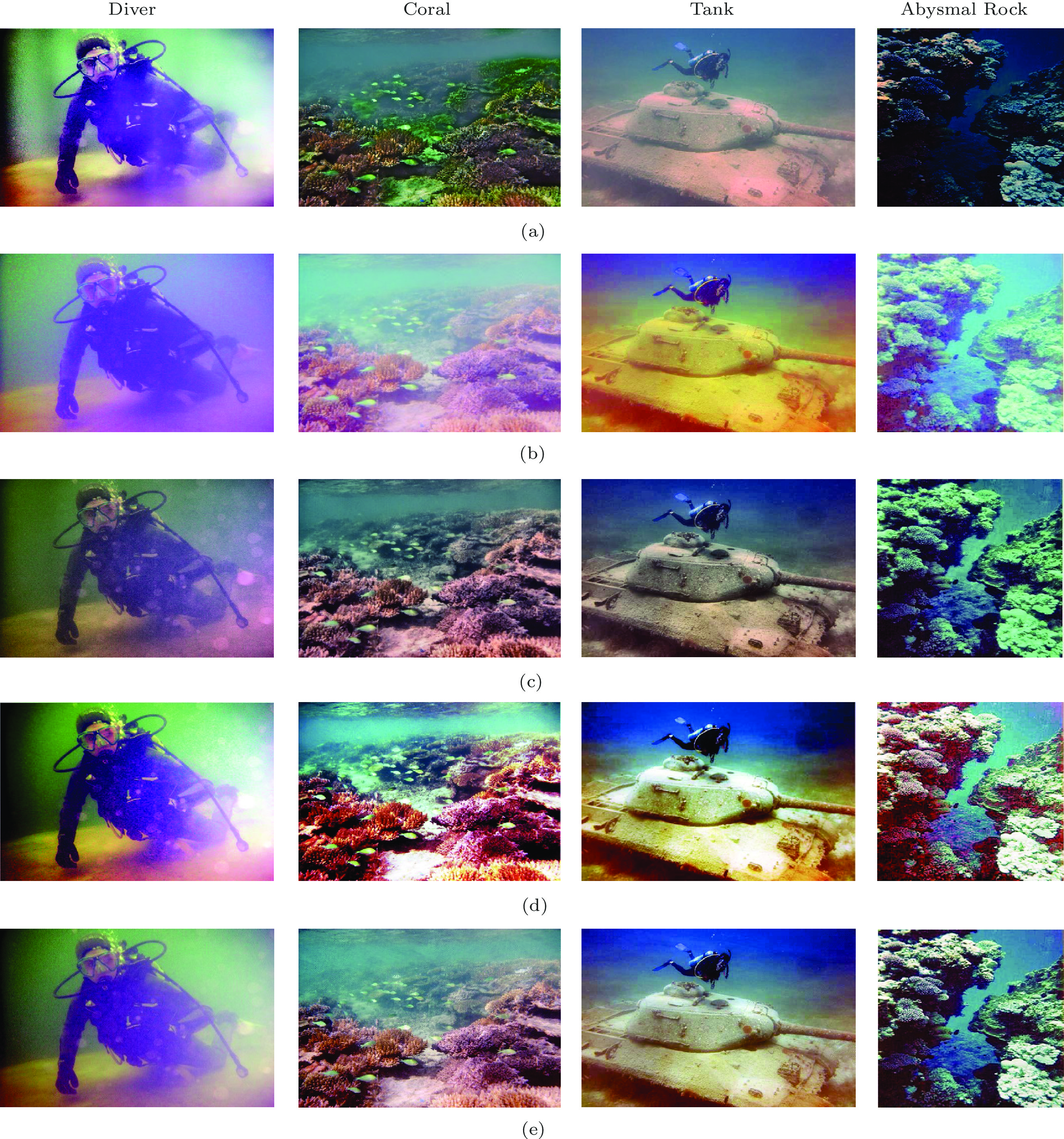

In the diver scene, the DP2P[21] method fails to correct the color cast, and the Shade[13] method shows poor contrast enhancement. The results of the other five methods achieve proper color correction and contrast enhancement. The WaterGAN[18] and HL[19] methods show more consistent color, with reduced vignetting and attenuation effects compared with the other methods. However, their results could be improved by a parameter that adjusts the center position of the vignetting pattern over the image, which reveals a limitation of augmented generators. For the diver scene, our method achieves the best overall performance, although some information is lost. Our method performs excellently on heavily color-cast images, such as the diver image in Fig.6(a). It also performs well on images captured in other underwater environments.

Figure 6. Image clarity results of four different underwater scenes using nine methods (part 1: four methods). (a) Original images of four different scenes: diver, coral, tank, and abyssal rock. (b)–(e) Image clarity results of four state-of-the-art methods: (b) ARC[5], (c) Shade[13], (d) Fusion[15], and (e) WaterGAN[18]. By visual comparison, our AFSR method yields comparable or better results for image contrast enhancement, color-cast correction, and clarity.

The coral-scene results in Fig.6 and Fig.7 show that DP2P[21] and CLM[26] fail to correct the color and enhance the visibility of the degraded image. The other six methods[5, 13, 15, 18, 19, 22] show various degrees of improvement in color correction and contrast enhancement. As shown in Fig.6(d), the Fusion[15] method obtains the best visual performance in this mildly degraded scene. Deep learning methods are limited by the choice of augmentation functions, so augmented generators may not fully capture all aspects of a complex nonlinear model[18]. Our method obtains better results than all the other methods except Fusion[15]. However, unlike the Fusion method, which relies on white balancing and multi-scale line filters, the proposed method does not need any post-processing.

Figure 7. Image clarity results of four different underwater scenes using nine methods (part 2: the other methods). (a)–(d) Results of four state-of-the-art methods: (a) HL[19], (b) DP2P[21], (c) VRM[22], and (d) CLM[26]; (e) result of our AFSR method. By visual comparison, our AFSR method yields comparable or better results in contrast enhancement, color-cast correction, and clarity.

In the tank scene, the DP2P[21] and CLM[26] methods over-enhance the distorted image while the Shade[13] method under-enhances it, causing severe color distortion and abnormal contrast. The other five methods[5, 15, 18, 19, 22] perform well overall. ARC[5] partially corrects the contrast degradation, but its result still tends to be bluish, while the Fusion[15] method obtains satisfactory color correction but relatively low contrast. By comparison, our method and the WaterGAN[18] method achieve both contrast enhancement and color correction. The HL[19] and VRM[22] methods, however, over-enhance the edge region of the tank and leave the background bluish.

In the deep-sea abysmal-rock scene, WaterGAN[18], HL[19], and the other competing methods, with the exception of Fusion[15], fail to remove the color cast of the bluish image. Although the Fusion[15] method outperforms our method in mean value, our method still performs best overall among all these methods. Our result shows excellent visual quality, good RGB color-space mapping, and high contrast, although some edge information is degraded owing to information loss.

In addition, we use quantitative indices to compare these methods. The no-reference evaluation indices reported in Table 1 and Table 2 (EN, SD, and EI; UICM, UISM, UIConM, and UIQM) are all scalar values, and a larger value indicates better image quality. According to the comprehensive assessment index and the visual comparison, the results obtained by our method are better than or comparable to those of the other methods. The processing time of each competing method is listed in Table 3. For a frame of 300 × 399 × 3, our method takes about 0.017 s to calculate the full-scale parameters, while the enhancement itself requires only 0.021 s. Because the full-scale parameters need to be calculated only once (for the first frame) and can then be reused for the subsequent frames of a video sequence, the per-frame cost meets the real-time requirement for video processing (less than 0.04 s per frame).
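A worked version of the timing argument follows. It assumes, since the text does not say so explicitly, that parameter estimation and enhancement run sequentially and that the full-scale parameters are estimated only once, on the first frame of a 300 × 399 × 3 sequence. The first-frame cost $t_1$ and the average per-frame cost $\bar{t}_N$ over $N$ frames are then

$$
t_1 = 0.017 + 0.021 = 0.038\ \text{s}, \qquad
\bar{t}_N = \frac{0.017 + 0.021\,N}{N} = 0.021 + \frac{0.017}{N}\ \text{s}.
$$

Under these assumptions $\bar{t}_N$ stays below the 0.04 s budget for every $N \ge 1$ and tends to 0.021 s, roughly 47.6 frame/s, for long sequences.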

Table 1. Comparison of Enhancement Results Using General Metrics

| Method | EN (Diver) | EN (Coral) | EN (Tank) | EN (Abysmal rock) | SD (Diver) | SD (Coral) | SD (Tank) | SD (Abysmal rock) | EI (Diver) | EI (Coral) | EI (Tank) | EI (Abysmal rock) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original image | 2.0413 | 3.0562 | 4.0213 | 3.0201 | 0.0094 | 0.1320 | 0.1422 | 0.0975 | 0.0441 | 0.0845 | 0.0703 | 0.0746 |
| ARC[5] | 6.4221 | 8.3010 | 8.9254 | 5.9874 | 0.0200 | 0.2501 | 0.3521 | 0.0201 | 0.0998 | 0.1907 | 0.1433 | 0.1558 |
| Shade[13] | 4.0132 | 6.5408 | 5.9021 | 5.0231 | 0.0115 | 0.1695 | 0.2465 | 0.1540 | 0.0641 | 0.0988 | 1.0200 | 0.1602 |
| Fusion[15] | 6.7021 | 9.0103 | 10.0040 | 6.9421 | 0.0221 | 0.3524 | 0.4020 | 0.0247 | 0.1091 | 0.2201 | 0.1635 | 0.1878 |
| WaterGAN[18] | 6.7002 | 8.4013 | 10.1236 | 6.8741 | 0.0204 | 0.3435 | 0.4010 | 0.2460 | 0.1204 | 0.2194 | 0.1647 | 0.1705 |
| HL[19] | 5.8413 | 8.7956 | 9.4647 | 5.4620 | 0.0136 | 0.2714 | 0.3017 | 0.1731 | 0.1078 | 0.1795 | 0.1421 | 0.1493 |
| DP2P[21] | 3.5761 | 6.3410 | 6.3102 | 4.5822 | 0.0101 | 0.1708 | 0.2011 | 0.1044 | 0.0560 | 0.0987 | 0.0945 | 0.1432 |
| VRM[22] | 6.5210 | 8.2514 | 10.1250 | 6.9500 | 0.0175 | 0.2921 | 0.3470 | 0.0195 | 0.1123 | 0.2010 | 0.1547 | 0.1694 |
| CLM[26] | 4.1210 | 6.5231 | 6.4203 | 5.0011 | 0.0191 | 0.1802 | 0.2854 | 0.1721 | 0.0757 | 0.1030 | 1.2321 | 0.1567 |
| Our method | 6.7817 | 8.9241 | 10.1201 | 7.0320 | 0.0223 | 0.3412 | 0.4021 | 0.2540 | 0.1256 | 0.2168 | 0.1747 | 0.1920 |

Note: Bold numbers represent the maximum value in each column.

Table 2. Comparison of the Enhancement Results Using Special Underwater Metrics

| Method | UICM (Diver) | UICM (Coral) | UICM (Tank) | UICM (A.R*) | UISM (Diver) | UISM (Coral) | UISM (Tank) | UISM (A.R*) | UIConM (Diver) | UIConM (Coral) | UIConM (Tank) | UIConM (A.R*) | UIQM (Diver) | UIQM (Coral) | UIQM (Tank) | UIQM (A.R*) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original image | 0.28 | 0.36 | 0.42 | 0.30 | 5.03 | 7.54 | 8.36 | 6.44 | 0.04 | 0.10 | 0.21 | 0.09 | 1.64 | 2.59 | 3.23 | 2.23 |
| ARC[5] | 0.40 | 0.44 | 0.67 | 0.34 | 9.04 | 9.34 | 12.65 | 7.18 | 0.12 | 0.18 | 0.42 | 0.10 | 3.11 | 3.41 | 5.26 | 2.49 |
| Shade[13] | 0.33 | 0.41 | 0.55 | 0.38 | 7.21 | 9.02 | 10.77 | 7.15 | 0.06 | 0.19 | 0.37 | 0.11 | 2.35 | 3.35 | 4.52 | 2.52 |
| Fusion[15] | 0.39 | 0.53 | 0.71 | 0.52 | 9.03 | 11.81 | 13.16 | 9.01 | 0.09 | 0.24 | 0.45 | 0.18 | 3.00 | 4.28 | 5.52 | 3.32 |
| WaterGAN[18] | 0.46 | 0.50 | 0.70 | 0.53 | 9.10 | 11.02 | 13.02 | 8.95 | 0.10 | 0.20 | 0.48 | 0.17 | 3.12 | 4.17 | 5.48 | 3.28 |
| HL[19] | 0.40 | 0.45 | 0.62 | 0.37 | 8.75 | 9.98 | 10.97 | 8.34 | 0.13 | 0.22 | 0.39 | 0.14 | 3.04 | 4.07 | 5.35 | 3.04 |
| DP2P[21] | 0.31 | 0.40 | 0.54 | 0.36 | 7.70 | 11.33 | 10.98 | 8.49 | 0.07 | 0.16 | 0.31 | 0.13 | 2.53 | 3.93 | 4.37 | 2.98 |
| VRM[22] | 0.46 | 0.51 | 0.70 | 0.50 | 9.01 | 10.98 | 13.00 | 9.04 | 0.10 | 0.22 | 0.46 | 0.18 | 3.03 | 4.04 | 5.50 | 3.33 |
| CLM[26] | 0.37 | 0.49 | 0.57 | 0.48 | 8.97 | 10.90 | 12.78 | 8.07 | 0.08 | 0.20 | 0.44 | 0.17 | 2.95 | 3.95 | 5.36 | 3.00 |
| Our method | 0.47 | 0.53 | 0.69 | 0.55 | 9.13 | 11.69 | 13.01 | 9.08 | 0.12 | 0.21 | 0.47 | 0.19 | 3.14 | 4.22 | 5.54 | 3.38 |

Note: A.R* stands for abysmal rock.

Table 3. Processing Time (s/frame) of Competing Methods with Different Frame Sizes

From the above enhancement results for the four scenes, we conclude that ARC[5] and Shade[13] are not suitable for dense color-cast and heavily hazy conditions. Meanwhile, the methods of [15, 18, 19, 22] perform well on both the tank and coral images, but their performance degrades for heavily color-cast scenes, such as strongly bluish or greenish images. To further increase its robustness and generalize to more application scenarios, the WaterGAN[18] method would need to train its GAN on more datasets covering a wider variety of environmental conditions. However, underwater images have no ground truth, which makes training data difficult to acquire.
Both our method and the Fusion method[15] achieve contrast enhancement and color correction. However, the proposed method is insensitive to illumination and shows no over- or under-enhancement, unlike CLM[26] and Shade[13]. In addition, its low computational overhead is a prominent advantage.
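The UIQM column in Table 2 is not an independent measurement. In the underwater image quality measure of Panetta et al. (2016), UIQM is a weighted sum of the other three columns, $\mathrm{UIQM} = c_1\,\mathrm{UICM} + c_2\,\mathrm{UISM} + c_3\,\mathrm{UIConM}$, with published weights $c_1 = 0.0282$, $c_2 = 0.2953$, and $c_3 = 3.5753$. The text does not state which weights were used, so the sketch below is only a consistency check under the assumption that these standard weights apply. It recomputes UIQM from the reported component scores for the original images and for our method; the names `uiqm`, `TABLE2`, and `max_deviation` are illustrative.

```python
# Hedged consistency check for the UIQM column of Table 2.
# Assumption: UIQM follows Panetta et al. (2016), i.e. a linear combination
# of the colorfulness (UICM), sharpness (UISM) and contrast (UIConM) measures.
C1, C2, C3 = 0.0282, 0.2953, 3.5753


def uiqm(uicm: float, uism: float, uiconm: float) -> float:
    """Return the UIQM score for the given component measures."""
    return C1 * uicm + C2 * uism + C3 * uiconm


# Values copied from Table 2:
# method -> scene -> (UICM, UISM, UIConM, reported UIQM).
TABLE2 = {
    "Original image": {
        "Diver": (0.28, 5.03, 0.04, 1.64),
        "Coral": (0.36, 7.54, 0.10, 2.59),
        "Tank": (0.42, 8.36, 0.21, 3.23),
        "Abysmal rock": (0.30, 6.44, 0.09, 2.23),
    },
    "Our method": {
        "Diver": (0.47, 9.13, 0.12, 3.14),
        "Coral": (0.53, 11.69, 0.21, 4.22),
        "Tank": (0.69, 13.01, 0.47, 5.54),
        "Abysmal rock": (0.55, 9.08, 0.19, 3.38),
    },
}


def max_deviation(rows: dict) -> float:
    """Largest |recomputed - reported| UIQM over all scenes in `rows`."""
    return max(abs(uiqm(cm, sm, conm) - reported)
               for cm, sm, conm, reported in rows.values())


if __name__ == "__main__":
    for method, rows in TABLE2.items():
        for scene, (cm, sm, conm, reported) in rows.items():
            print(f"{method:>14} | {scene:<12} recomputed {uiqm(cm, sm, conm):.3f}"
                  f"  reported {reported:.2f}")
        print(f"{method:>14} | max deviation {max_deviation(rows):.4f}")
```

For these two rows the recomputed values agree with the table to within the two-decimal rounding of the component scores, which is consistent with the assumed weights.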

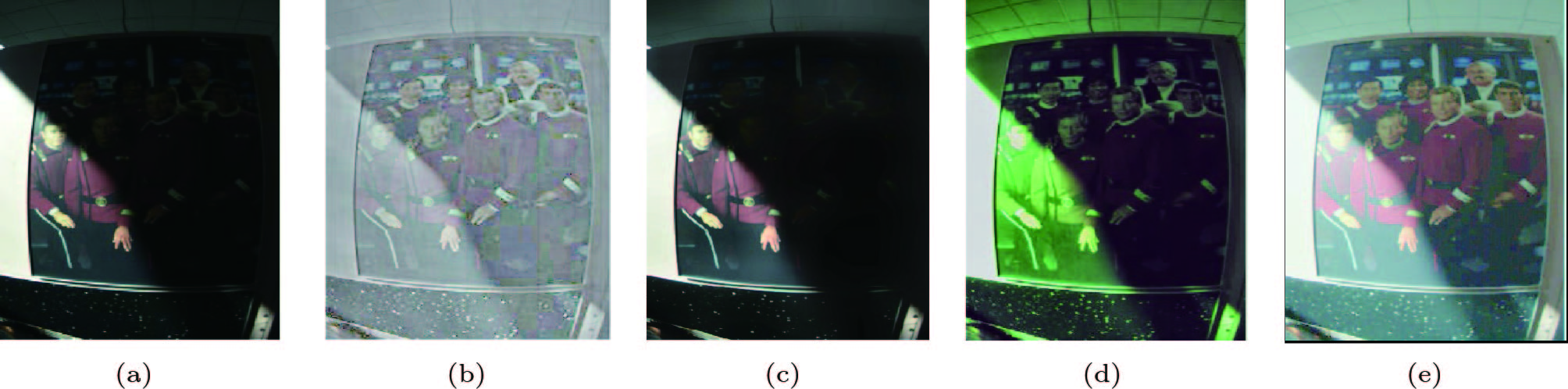

3.3 Extensible Application

In addition, the proposed approach can enhance other kinds of degraded images, including those with color distortion or resolution degradation, such as images captured in dense fog or under backlighting. Fig.8 shows our experimental results on a backlit image. Compared with typical state-of-the-art methods[10, 19, 22], our method again performs best in contrast enhancement, color correction, detail, and visibility. This example indicates that the method can be applied beyond underwater imaging.

4. Conclusions

In this work, we proposed a novel adaptive full-scale Retinex (AFSR) method for underwater image enhancement that tackles both color cast and visibility loss. Our work extends the multi-scale Retinex method[10] so that it performs well in scenes with varying illumination and depth, and it avoids the color artifacts that can arise from incorrect depth estimation. Experiments on images captured under different underwater imaging conditions demonstrated that our approach performs comparably to or better than eight other state-of-the-art methods in contrast enhancement and color-cast correction. Unlike other methods that “cascade” restoration (individual steps for correcting color and contrast issues), the proposed method simultaneously corrects the color cast and contrast without post-processing. Moreover, the proposed quantitative method, which directly calculates the reflection component using a linear mapping function instead of logarithmic operations, effectively reduces computational complexity. By exploiting the temporal correlation of video sequences, the proposed method achieves real-time enhancement.
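For reference, the classic multi-scale Retinex (MSR) of [10], which AFSR extends, can be sketched as follows. This is a minimal NumPy sketch of that baseline only, not of AFSR: each channel's reflectance is the equally weighted mean of $\log I - \log(G_\sigma * I)$ over a fixed set of Gaussian surround scales, which are the hand-set scales and logarithms that AFSR replaces with transmission-guided full scales and a linear mapping. The scales (40, 80, 100) are borrowed from the multi-scale example of Fig. 3(c). The FFT-based circular convolution, the `eps` offset, and the omission of MSR's color-restoration and gain/offset stages are simplifying assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np


def gaussian_surround(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Convolve one channel with a unit-area Gaussian of std `sigma` pixels.

    The convolution is done in the frequency domain (circular boundary),
    using the Gaussian's Fourier transform exp(-2 * pi^2 * sigma^2 * f^2).
    """
    h, w = channel.shape
    fy = np.fft.fftfreq(h)[:, None]  # vertical frequencies, cycles/pixel
    fx = np.fft.fftfreq(w)[None, :]  # horizontal frequencies, cycles/pixel
    transfer = np.exp(-2.0 * np.pi**2 * sigma**2 * (fx**2 + fy**2))
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * transfer))


def multi_scale_retinex(image: np.ndarray,
                        sigmas=(40.0, 80.0, 100.0),
                        eps: float = 1e-6) -> np.ndarray:
    """Classic MSR baseline: mean over scales of log(I) - log(G_sigma * I).

    `image` is an H x W x C array of non-negative intensities. Returns the
    (unscaled) log-domain reflectance estimate with the same shape.
    """
    img = image.astype(np.float64) + eps  # keep logarithms finite
    reflectance = np.zeros_like(img)
    for c in range(img.shape[2]):
        log_channel = np.log(img[..., c])
        for sigma in sigmas:
            # Illumination estimate for this scale: the blurred channel.
            illumination = gaussian_surround(img[..., c], sigma)
            reflectance[..., c] += log_channel - np.log(np.maximum(illumination, eps))
    return reflectance / len(sigmas)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = rng.uniform(0.0, 1.0, size=(64, 80, 3))  # synthetic test frame
    r = multi_scale_retinex(frame)
    print(r.shape, float(r.min()), float(r.max()))
```

The per-pixel logarithms in the inner loop are the operations that, according to the text, AFSR replaces with a linear mapping to reduce computational cost.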

In the future, we will combine the proposed image clarification technique with other applications, such as post-processing for membrane diffractive imaging systems and object recognition and detection.

-

Figure 1. Typical underwater image processing results. (a) Original underwater image. (b) Result of the MSR method[10]. (c) Result of the proposed adaptive full-scale Retinex method.

Figure 3. Scale surround functions under different scale settings. (a) and (b) are spatial surround functions with the single scales $\sigma = 60$ and $\sigma = 90$, respectively. (c) The spatial surround function corresponding to the multi-scale setting $\sigma = 40, 80, 100$. (d) The proposed full-scale $\sigma_i$, adaptively guided by optical transmission rates.

Figure 5. Analysis results of our method. (a) and (b) are the transmission rate (t) of the ARC method[5] and our method, respectively, while (c)(d) and (e)(f) correspond to the scale values and the transmission rate residuals of these two methods, respectively.


-

[1] Jaffe J S. Underwater optical imaging: The past, the present, and the prospects. IEEE Journal of Oceanic Engineering, 2015, 40(3): 683–700. DOI: 10.1109/JOE.2014.2350751.

[2] Hou W L, Gray D J, Weidemann A D, Fournier G R, Forand J L. Automated underwater image restoration and retrieval of related optical properties. In Proc. the 2007 IEEE International Geoscience and Remote Sensing Symposium, Jul. 2007, pp.1889–1892. DOI: 10.1109/IGARSS.2007.4423193.

[3] Wells W H. Loss of resolution in water as a result of multiple small-angle scattering. Journal of the Optical Society of America, 1969, 59(6): 686–691. DOI: 10.1364/JOSA.59.000686.

[4] Xiang W D, Yang P, Wang S, Xu B, Liu H. Underwater image enhancement based on red channel weighted compensation and gamma correction model. Opto-Electronic Advances, 2018, 1(10): 9. DOI: 10.29026/oea.2018.180024.

[5] Galdran A, Pardo D, Picón A, Alvarez-Gila A. Automatic red-channel underwater image restoration. Journal of Visual Communication and Image Representation, 2015, 26: 132–145. DOI: 10.1016/j.jvcir.2014.11.006.

[6] Chiang J Y, Chen Y C. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Processing, 2012, 21(4): 1756–1769. DOI: 10.1109/TIP.2011.2179666.

[7] He K M, Sun J, Tang X O. Single image haze removal using dark channel prior. IEEE Trans. Pattern Analysis and Machine Intelligence, 2011, 33(12): 2341–2353. DOI: 10.1109/TPAMI.2010.168.

[8] Zhang M H, Peng J H. Underwater image restoration based on a new underwater image formation model. IEEE Access, 2018, 6: 58634–58644. DOI: 10.1109/ACCESS.2018.2875344.

[9] Rahman Z, Jobson D J, Woodell G A. Multi-scale retinex for color image enhancement. In Proc. the 3rd IEEE International Conference on Image Processing, Sept. 1996, pp.1003–1006. DOI: 10.1109/ICIP.1996.560995.

[10] Jobson D J, Rahman Z, Woodell G A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Processing, 1997, 6(7): 965–976. DOI: 10.1109/83.597272.

[11] Rahman Z U, Jobson D J, Woodell G A. Retinex processing for automatic image enhancement. Journal of Electronic Imaging, 2004, 13(1): 100–110. DOI: 10.1117/1.1636183.

[12] Hsu E, Mertens T, Paris S, Avidan S, Durand F. Light mixture estimation for spatially varying white balance. ACM Trans. Graphics, 2008, 27(3): 1–7. DOI: 10.1145/1360612.1360669.

[13] Finlayson G, Trezzi E. Shades of gray and colour constancy. In Proc. the 12th Color Imaging Conference: Color Science and Engineering Systems, Technologies, Applications, Nov. 2004, pp.37–41. DOI: 10.2352/CIC.2004.12.1.art00008.

[14] Fu X Y, Sun Y, Liwang M H, Huang Y, Zhang X P, Ding X H. A novel retinex based approach for image enhancement with illumination adjustment. In Proc. the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing, May 2014, pp.1190–1194. DOI: 10.1109/ICASSP.2014.6853785.

[15] Ancuti C, Ancuti C O, Haber T, Bekaert P. Enhancing underwater images and videos by fusion. In Proc. the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2012, pp.81–88. DOI: 10.1109/CVPR.2012.6247661.

[16] Zosso D, Tran G, Osher S. A unifying retinex model based on non-local differential operators. In Proc. the 2013 SPIE 8657, Computational Imaging XI, Feb. 2013, Article No. 865702. DOI: 10.1117/12.2008839.

[17] Han M, Lyu Z, Qiu T, Xu M L. A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Systems, Man, and Cybernetics: Systems, 2020, 50(5): 1820–1832. DOI: 10.1109/TSMC.2017.2788902.

[18] Li J, Skinner K A, Eustice R M, Johnson-Roberson M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robotics and Automation Letters, 2018, 3(1): 387–394. DOI: 10.1109/LRA.2017.2730363.

[19] Berman D, Levy D, Avidan S, Treibitz T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Trans. Pattern Analysis and Machine Intelligence, 2021, 43(8): 2822–2837. DOI: 10.1109/TPAMI.2020.2977624.

[20] Li Y J, Lu H M, Zhang L F, Li J R, Serikawa S. Real-time visualization system for deep-sea surveying. Mathematical Problems in Engineering, 2014, 2014: 437071. DOI: 10.1155/2014/437071.

[21] Sun X, Liu L P, Li Q, Dong J Y, Lima E, Yin R Y. Deep pixel-to-pixel network for underwater image enhancement and restoration. IET Image Processing, 2019, 13(3): 469–474. DOI: 10.1049/iet-ipr.2018.5237.

[22] Fu X Y, Zhuang P X, Huang Y, Liao Y H, Zhang X P, Ding X H. A retinex-based enhancing approach for single underwater image. In Proc. the 2014 IEEE International Conference on Image Processing, Oct. 2014, pp.4572–4576. DOI: 10.1109/ICIP.2014.7025927.

[23] Tarel J P, Hautière N. Fast visibility restoration from a single color or gray level image. In Proc. the 12th IEEE International Conference on Computer Vision, Sept. 29–Oct. 2, 2009, pp.2201–2208. DOI: 10.1109/ICCV.2009.5459251.

[24] Kaplan S, Zhu Y M. Full-dose PET image estimation from low-dose PET image using deep learning: A pilot study. Journal of Digital Imaging, 2019, 32(5): 773–778. DOI: 10.1007/s10278-018-0150-3.

[25] Liu Y F, Jaw D W, Huang S C, Hwang J N. DesnowNet: Context-aware deep network for snow removal. IEEE Trans. Image Processing, 2018, 27(6): 3064–3073. DOI: 10.1109/TIP.2018.2806202.

[26] Zhou Y, Wu Q, Yan K M, Feng L Y, Xiang W. Underwater image restoration using color-line model. IEEE Trans. Circuits and Systems for Video Technology, 2019, 29(3): 907–911. DOI: 10.1109/TCSVT.2018.2884615.

[27] Zhang J Y, Chen Y, Huang X X. Edge detection of images based on improved Sobel operator and genetic algorithms. In Proc. the 2009 International Conference on Image Analysis and Signal Processing, Apr. 2009, pp.31–35. DOI: 10.1109/IASP.2009.5054605.

[28] Shahid M, Rossholm A, Lövström B, Zepernick H J. No-reference image and video quality assessment: A classification and review of recent approaches. EURASIP Journal on Image and Video Processing, 2014, 2014: Article No. 40. DOI: 10.1186/1687-5281-2014-40.

[29] Rahman Z U, Jobson D J, Woodell G A, Hines G D. Image enhancement, image quality, and noise. In Proc. the 2005 SPIE 5907, Photonic Devices and Algorithms for Computing VII, Sept. 2005, Article No. 59070N. DOI: 10.1117/12.619460.
