
Information Superbahn: Towards a Planet-Scale, Low-Entropy and High-Goodput Computing Utility


Abstract: In a 1961 lecture celebrating MIT’s centennial, John McCarthy proposed the vision of utility computing, built on three key concepts: pay-per-use service, large computer, and private computer. Six decades later, despite advances in grid computing, services computing, and cloud computing, McCarthy’s computing utility vision has not yet been fully realized. Building on recent advances in cloud computing, this paper presents a perspective of computing utility called Information Superbahn, and argues that a planet-scale computing utility is about to become a mainstream reality and that its technical challenges are solvable. The Information Superbahn perspective retains McCarthy’s vision as much as possible, while making essential modern requirements more explicit in the new context of a networked world of billions of users, trillions of devices, and zettabytes of data. Computing utility offers pay-per-use computing services through a 1) planet-scale, 2) low-entropy, and 3) high-goodput utility. The paper elaborates these three salient characteristics and provides initial evidence to support this viewpoint: preliminary experimental data indicate that Information Superbahn can improve goodput and utilization simultaneously.


Keywords: cloud computing / computing utility / utilization / low-entropy system / high-goodput computing


1. Introduction

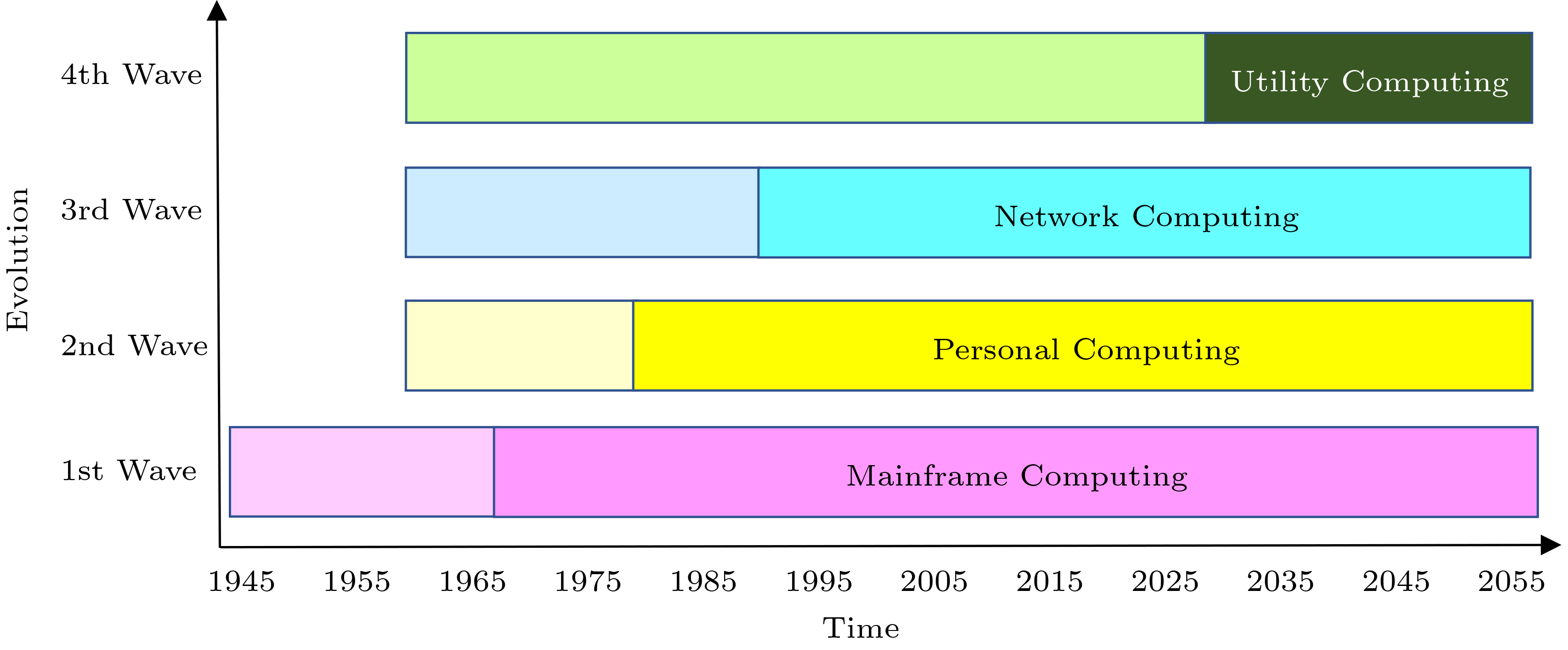

Many ideas of existing computing paradigms were originally proposed in the 1960s. A mega-wave forms when multiple paradigms are incorporated together. Fig.1 lists four important mega-waves, where the dashed boxes show initial ideas and the solid boxes show mainstream adoption.

Mainframe computing enables multiple users to share a centralized computer via batch processing. Personal computing provides a dedicated computer to each user and supports interactive tasks.

Network computing is the dominant mega-wave today. It incorporates paradigms such as Internet, World Wide Web, services computing, cloud computing, big data, and mobile Internet, to provide the advantages of both mainframe computing and personal computing, but in a networked world of billions of users and computers, as well as zettabytes of data.

The next mega-wave is utility computing, an idea proposed over 60 years ago by John McCarthy in a lecture on “Time-Sharing Computer Systems” celebrating MIT’s centennial[1]. McCarthy envisioned “Computing as a Public Utility”, or CaaPU if we follow today’s cloud computing parlance of IaaS, PaaS, and SaaS. McCarthy regarded time-sharing as being as important as the stored-program concept, and CaaPU as a future direction of time-sharing. McCarthy’s CaaPU vision has the following salient features.

● Pay-per-Use Services. Users subscribe to computing services of the utility instead of buying computer devices. A user pays only for capacity actually used.

● Large Computer. A user has access to all “programming language characteristics”, or “computer culture”, of a very large system.

● Private Computer. A subscriber sees a private computer dedicated to his/her use as long as needed.

Today’s cloud computing systems have only partially realized the three features.

This perspective paper revisits McCarthy’s vision by giving a modern definition of computing utility and outlining a research agenda called Information Superbahn[2], which is one approach to realizing computing utility.

2. Defining Computing Utility

Over 60 years have passed since McCarthy spoke of computing utility. The academic community and industry have accumulated a wealth of knowledge and experience related to the computing utility idea. The most recent development is cloud computing[3-5]. Leveraging these historical assets, we give a modern definition of computing utility from the Information Superbahn perspective.

Definition 1. Computing utility (Information Superbahn) is a telephone-like public utility providing computing services to worldwide subscribers.

It has the following four essential characteristics.

● Pay-per-Use Services. Users subscribe to computing services of the utility and pay only for resources actually used.

● Planet-Scale Culture. Users have ready access to all computer culture of one worldwide utility from anywhere at any time.

● Low-Entropy Systems. Each of the billions of worldwide subscribers sees a private computer, largely free from disorders such as workload interferences and system jitters.

● High-Goodput Utility. The utility is an efficient system in which most executed tasks deliver good-enough user experience.

The above computing utility definition tries to retain McCarthy’s vision as much as possible, while making some essential modern requirements more explicit. This is done by retaining McCarthy’s pay-per-use services concept, extending his large and private computer ideas, and introducing a new concept of high-goodput utility. Table 1 summarizes the three stages of computing utility development, i.e., McCarthy’s vision, current cloud computing systems, and Information Superbahn.

Table 1. Three Stages of Computing Utility Development

| McCarthy’s Vision | Cloud Computing System | Information Superbahn |
| --- | --- | --- |
| Pay-per-use services | Subscribed IaaS, PaaS, SaaS | Pay-per-use services |
| Large computer | Intra-cloud culture + inter-cloud APIs | Planet-scale culture |
| Private computer | Various isolation schemes | Low-entropy systems |
| – | – | High-goodput utility |

Cloud computing has already realized the service computing idea: users subscribe to various IaaS, PaaS, and SaaS systems instead of buying devices. However, McCarthy’s pay-per-use idea has not yet been fully realized in current cloud computing systems, which charge for subscribed physical machines, virtual machines, container images, and functions over given time periods, regardless of whether they are actually used. This leads to stranding and low utilization[6-8].

The planet-scale culture concept extends the large computer idea to the whole computer culture (hardware, data, and programming resources) of one worldwide utility, which subscribers can access from anywhere at any time. In contrast, a user today sees multiple clouds. Resources from these clouds do not form one pool but separate silos with different access interfaces; services from other clouds can be accessed only via predefined APIs.

The low-entropy systems concept extends McCarthy’s private computer idea to the new context of a connected world of billions of simultaneous users and trillions of diverse devices, all of which may interfere with one another[8, 9].

The high-goodput utility concept emphasizes the efficiency of the utility as experienced by users. Most executed tasks are completed to the users’ satisfaction, and the utility does not waste a large portion of its resources on non-payload tasks or ill-behaved tasks.

3. Explaining Computing Utility

Utility computing is a useful metaphor. However, to guide research on utility computing technology, we need to go beyond metaphorical thinking and give qualitative and quantitative descriptions. In Definition 1, the planet-scale culture concept emphasizes the global utility model, the low-entropy systems concept emphasizes the quality of computing services, and the high-goodput utility concept emphasizes efficiency.

3.1 Planet-Scale Computing Culture

Information Superbahn advocates a computing utility that is a global and rich pool of pay-per-use computing services.

The pay-per-use service idea is clear: 1) users subscribe to desired services from the pool of computing services of the utility; 2) users pay only for services and resources actually used, similar to how the electric utility is used and charged.

Extending the UGC (user-generated contents) concept of Web 2.0, computing utility facilitates UGS (user-generated services), i.e., some services of the utility are generated by users.

Global. Computing utility is one planet-scale pool of computing resources and services. It should not be constrained by silos and boundaries of datacenters, clouds, vendors, or countries. Technology should facilitate one global utility, like the telephone. In our world today, telephony has evolved into a single, planet-scale utility, but electricity has not.

Similar ideas have been proposed under the names of cloud federation, multi-cloud, and sky computing[10-12]. Ideally, a user should be able to access any service from the global (worldwide) utility, subject only to access control constraints. The user should have a universal computing account (UCA) to access resources and be charged for the actual use of such resources[13]. The UCA should not be tied to any cloud or any vendor. Telephone numbers and email addresses are mechanisms that go halfway toward meeting this requirement. Decentralized Identifiers (DIDs) from W3C are an encouraging recent development[14].

Global also means that everyone can participate as a stakeholder, similar to and learning from the Internet, the World Wide Web, and the open-source community¹. In particular, technology needs to be developed to make it easy for resource providers and developers to participate. The core technology should be simple and minimal, enabling users and resource providers to join the computing utility with minimal or no permission from any central entity, similar to how a webpage can join the World Wide Web.

Rich. The pool of services provided by the utility represents a rich diversity of resources, including hardware, system software, application software, data, and even humans. McCarthy called this rich ecosystem of worldwide resources “computer culture” or “programming culture”. He envisioned, for each user, that “Ideally, the whole programming culture should be present and readily accessible[1].”

This is why McCarthy wanted each user to have a large computer, i.e., one large enough to hold the whole computer culture the user needs. In McCarthy’s original paper, by “a large computer” he also meant “one with very large primary memory and very large secondary memory”. In today’s networked world, resources should be readily accessible via networking (not just from secondary storage) and stay active in primary memory whenever needed.

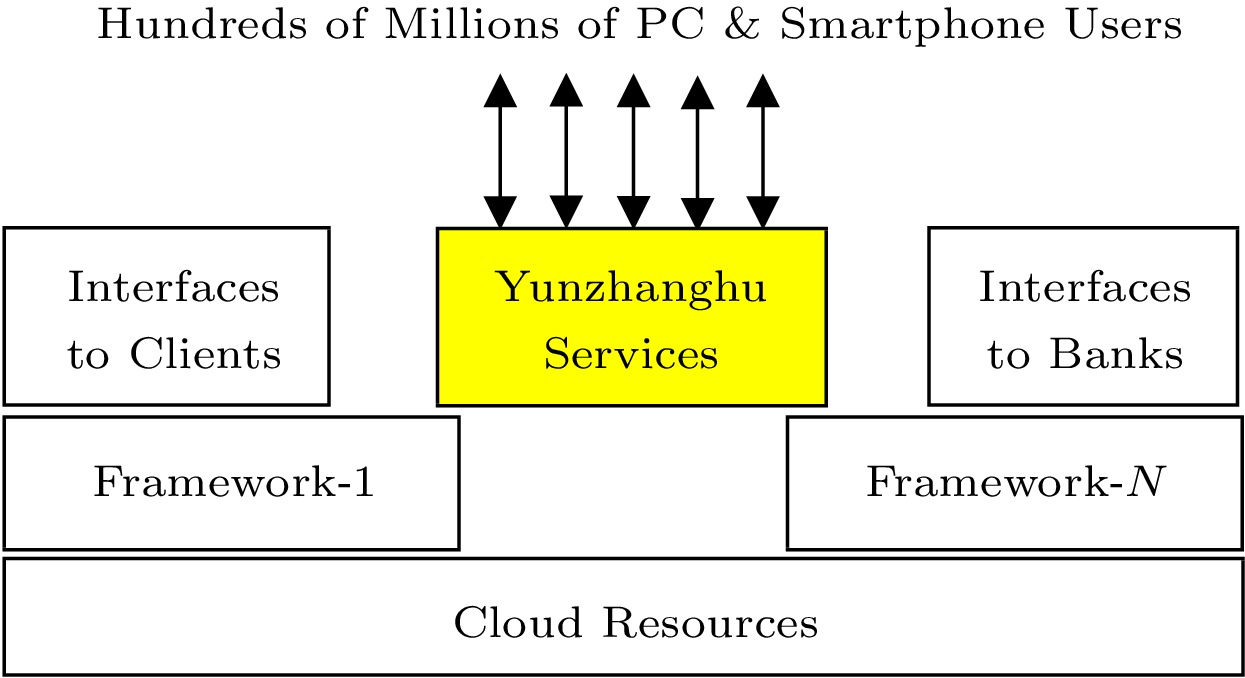

McCarthy’s vision of “readily accessible computer culture” has already been partially realized, which has become a key enabler of many economic and societal innovations. It is an enabler not only for Internet giants and big organizations, but also for individuals and small-and-medium enterprises (SMEs). A case in point is the startup company Yunzhanghu (云账户 in Chinese).

Yunzhanghu was established in 2016 to provide financial services to the 200 million self-employed workers in China. In only six years, Yunzhanghu’s monthly active users grew to over 70 million. The Yunzhanghu services created tangible societal change by increasing the share of China’s population paying income tax from 5% to 10%. All this was done with fewer than 200 engineers.

The Yunzhanghu case was made possible only by cloud computing. As shown in Fig.2, Yunzhanghu has ready access to cloud infrastructure resources, application frameworks, and interfaces to other cloud services, so that it can focus on developing and delivering its business services to hundreds of millions of registered users. Computing utility should enable millions of SMEs in the same way.

3.2 Low-Entropy Systems

For the past five decades, worldwide electricity consumption has continued to grow, and its portion of total energy consumption grew from 9.5% in 1973 to 19.7% in 2019, according to International Energy Agency[15].

Why do people like electricity? A key reason is that modern electricity has evolved into a form of low-entropy energy[16]: users see and experience little uncertainty and only controllable noise. Computing utility needs to learn from the electricity and electric grid industry.

Low-entropy systems emphasize the quality of computing services. From the viewpoint of billions of worldwide subscribers, each user should see a private computer, largely free from the negative impact of the following three types of disorders[8].

Disorders. Workload interference happens when task Y’s execution interferes with task X’s execution by invading task X’s resource phase space. A common example is batch jobs taking resources away from interactive tasks, drastically increasing the latter’s tail latency[17]. System jitters are noises from hardware, system software, and application frameworks that may interfere with the execution of payload tasks. For example, garbage collection in a virtual machine can slow down all applications running on it. Resource mismatches occur when system resources do not fit applications; examples include poor system configurations and mismatched data layouts.

Oversubscription. The cloud computing industry practices oversubscription to cope with the above high-entropy problems. Applications oversubscribe cloud resources, sometimes by several times what is actually needed, to provide enough resource leeway that disorders do not degrade application performance. An extreme case of oversubscription is for each subscriber to use dedicated resources. However, oversubscription leads to stranded resources.

Stranding. Stranded resources are resources that are allocated or reserved but not used, and that cannot be allocated to other applications. Newcomers to cloud computing may wonder why a cloud computing system with low utilization cannot accept more load. A main reason is stranding[6-8].

The cloud computing community has worked hard to reduce stranding and increase utilization. According to a recent study, reported average CPU utilizations have improved from 7% to 20% in Google and Amazon cloud datacenters of 2011, to 38% in Alibaba cloud datacenters of 2018[7, 18].

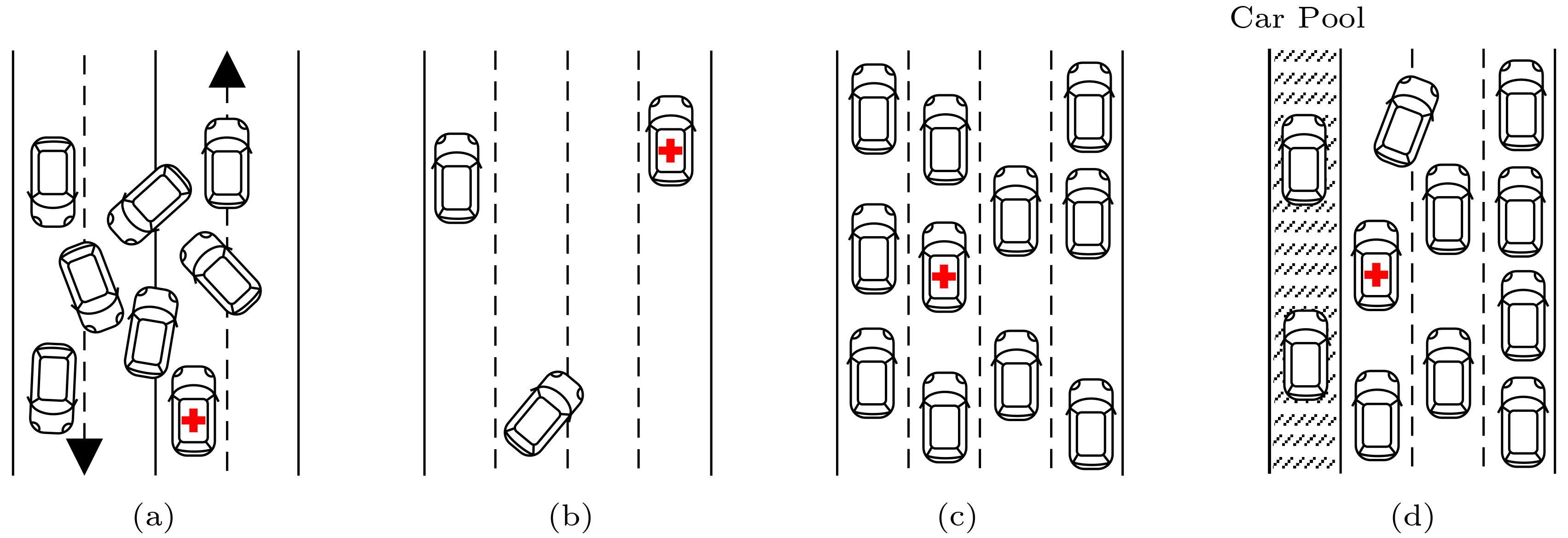

Three-Phase Traffic Theory. In addition to utility industries such as telephone and electricity, we can also learn from the transportation field. The three-phase traffic theory[19] is illustrated by the four types of highway traffic flows in Fig.3 and Table 2.

Table 2. Illustration of Four Types of Traffic Flows

| Flow Type | Velocity | Throughput | User Experience | Utilization |
| --- | --- | --- | --- | --- |
| Traffic jam | Low (6 mph) | Low (1.2 cars/s) | Bad (not on time) | “High” |
| Free flow | High (60 mph) | Low (4.0 cars/s) | Good (on time) | Low |
| Synchronous flow | High (60 mph) | High (14.7 cars/s) | Good (on time) | High |
| Prioritized synchronous flow | High (70, 60, 50 mph) | High (15.2 cars/s) | Good (on time) | High |

Cloud computing tasks want to avoid the traffic jam type of Fig.3(a), even though it comes with the “advantage” of chaotic flexibility: the cars can make various moves, such as speeding up, slowing down, changing lanes, overtaking, and making U-turns. The highway infrastructure is heavily occupied, which gives the illusion of “high” utilization.

However, what really happens in the traffic jam type of traffic flow is that cars end up traveling at a low speed. The cars do not arrive at destinations on time. Passengers do not enjoy satisfactory user experience. The system throughput is low.

Cloud applications adopt oversubscription and dedicated use of cloud resources to cope with the uncertainties and disorders present in the traffic jam type of traffic flow. They roughly correspond to the free flow type of Fig.3(b), which shows good speed and user experience at light load. User experience and velocity are improved at the expense of system throughput and utilization.

Computing utility wants to avoid these sacrifices. In fact, Information Superbahn aims at the seemingly impossible goal of obtaining high velocity, high throughput, high utilization, and good user experience all at once. There is a precedent in transportation: the high-speed railway system, which roughly corresponds to the synchronous flow type of Fig.3(c). Information Superbahn aims even higher, at the prioritized synchronous flow type of Fig.3(d), by allowing prioritized execution of special tasks. Two classes of special tasks are illustrated: the ambulance and the cars in the car pool lane. Both enjoy prioritized treatment and can travel faster (70 miles per hour) than the normal speed (60 miles per hour). Other cars yield resources to the special cars, resulting in a slightly reduced speed (50 miles per hour).

A key to achieving the above goal is to bound uncertainty (entropy) by constraining chaotic flexibility. The cars are not allowed to arbitrarily speed up, slow down, change lanes, or make U-turns. They are normally required to stay in their lanes and maintain a relatively steady high speed. Some disciplined flexibility, i.e., clearly specified exceptions to the normal rules, is allowed. For instance, prioritized special cars can overtake other cars, and normal cars may change lanes to yield to special cars.

DIP Thesis. Some current cloud applications have already achieved low latency and high utilization through engineering efforts that optimize particular applications. Information Superbahn aims to achieve satisfactory user experience and high utilization at the system level, by providing low-entropy infrastructure support that helps all applications. We make an observation called the DIP thesis[8]: to have low entropy and to realize the prioritized synchronous flow of application tasks, Information Superbahn, as an infrastructure, must support three properties.

● Differentiation. Different tasks must have different task labels to differentiate themselves.

● Isolation. Resources used by one task must be isolated from other tasks.

● Prioritization. Tasks can have different levels of priorities, which are enforced.
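The three DIP properties can be illustrated with a toy single-node dispatcher. This is a minimal Python sketch under our own assumptions, not the ISB implementation: `DipScheduler`, the three integer priority levels, and exclusive per-task core reservations are hypothetical stand-ins for differentiation, prioritization, and isolation.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass(order=True)
class Task:
    priority: int  # Prioritization: 0 is highest (hypothetical levels 0, 1, 2)
    seq: int       # submission order; breaks ties so equal priorities run FIFO
    label: str = field(compare=False)  # Differentiation: unique task label
    cores: int = field(compare=False)  # cores the task will hold exclusively


class DipScheduler:
    """Toy single-node dispatcher illustrating the DIP thesis (hypothetical)."""

    def __init__(self, total_cores: int):
        self.free_cores = total_cores
        self.allocations: Dict[str, int] = {}  # Isolation: label -> reserved cores
        self._queue: List[Task] = []
        self._seq = itertools.count()
        self._seen_labels: Set[str] = set()

    def submit(self, label: str, priority: int, cores: int) -> None:
        if label in self._seen_labels:
            raise ValueError(f"duplicate task label: {label}")
        if priority not in (0, 1, 2):
            raise ValueError("priority must be 0, 1, or 2")
        self._seen_labels.add(label)
        heapq.heappush(self._queue, Task(priority, next(self._seq), label, cores))

    def dispatch(self) -> List[str]:
        """Start tasks strictly in priority order. A lower-priority task never
        overtakes a waiting higher-priority one, so priorities are enforced."""
        started = []
        while self._queue and self._queue[0].cores <= self.free_cores:
            task = heapq.heappop(self._queue)
            self.free_cores -= task.cores
            self.allocations[task.label] = task.cores
            started.append(task.label)
        return started

    def finish(self, label: str) -> None:
        """Release the cores exclusively reserved by a finished task."""
        self.free_cores += self.allocations.pop(label)
```

For example, on an 8-core node, submitting a batch task (priority 2, 4 cores), an ambulance-like urgent task (priority 0, 2 cores), and a web task (priority 1, 4 cores) starts the urgent and web tasks first; the batch task waits until `finish` releases cores. Strict head-of-queue ordering is a deliberate simplification: it trades some packing efficiency for enforced priorities.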

Isolation has multiple definitions[20]. Here, isolation means one of three types. Semantic isolation means the business logic of a task is not affected by other tasks. Fault isolation means the execution of a task is not adversely affected by failures of other tasks. Performance isolation means the execution time of a task is not affected by the execution of other tasks. A convenient check of the isolation property is to see whether a task’s behavior changes from that in a dedicated environment, which deploys and executes the task in an optimized dedicated computing system, free from workload interference and system jitters.
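The dedicated-environment check above can be phrased as a comparison of latency distributions. The Python sketch below is one hypothetical way to do this for performance isolation: it compares a task’s 99th-percentile latency in the shared system against the same task in a dedicated system, with an assumed 10% tolerance. The percentile, tolerance, and function names are our choices, not part of the source.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], q: int) -> float:
    """Nearest-rank percentile for an integer q in 1..100."""
    if not samples or not 1 <= q <= 100:
        raise ValueError("need samples and 1 <= q <= 100")
    ordered = sorted(samples)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def performance_isolated(shared: Sequence[float],
                         dedicated: Sequence[float],
                         q: int = 99,
                         tolerance: float = 0.10) -> bool:
    """True if the task's tail latency in the shared system stays within
    `tolerance` of its tail latency in a dedicated system."""
    return percentile(shared, q) <= (1 + tolerance) * percentile(dedicated, q)
```

Comparing tail latency rather than the mean reflects the text’s concern with interference that shows up mainly in the tail; other statistics would serve equally well for a sketch.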

The three properties are all required: no one of them can be derived from the other two. It is obvious that prioritization cannot be derived from differentiation and isolation. In fact, the synchronous flow type in Fig.3(c) has differentiation and isolation, but not prioritization: the ambulance has no priority over other cars. To see that isolation and prioritization do not lead to differentiation, consider the car pool case in the prioritized synchronous flow of Fig.3(d). The cars in the isolated car pool lane can travel faster than the other cars, not because they have different labels, but because each carries at least two passengers. To see that differentiation and prioritization do not lead to isolation, we need only look at process management in Linux, which is still the dominant operating system in most datacenters. Linux systems have process IDs and process priorities, but do not guarantee performance isolation.

3.3 High-Goodput Utility

The high-goodput utility concept emphasizes the efficiency of computing utility. Experiences from successful public utilities (telephone, electricity and water utility) have shown that a public utility has to be efficient to be sustainable in the long term for masses of subscribers.

We use a number of definitions to clarify these concepts. Suppose an objective outsider observes the computing utility for a time period $[0, T]$, during which the utility has executed a total of $n$ computational tasks, of which only $N$ are good tasks.

Definition 2. A task executed by the computing utility is a good task, if it is a payload task that completes on time (i.e., shows good user experience).

Definition 3. Each of the $n$ tasks has a user-expected latency threshold $t_{\mathrm{TH}}$. If a task’s execution time is $t$ and $t \leqslant t_{\mathrm{TH}}$, the task is said to complete on time and show good user experience.

A payload task must be an application task; a systems task does not count. The phrase “completes on time” means that the task finishes within a user-expected latency threshold $t_{\mathrm{TH}}$. The task is then said to show good user experience.

The set of good tasks is equal to the set of total tasks excluding the following types of tasks:

● non-payload tasks, e.g., systems tasks;

● incomplete tasks, aborting before completion;

● repeated tasks (multiple redundant payload tasks for speed or fault tolerance should be counted as one payload task);

● tasks with bad user experience.
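The exclusion rules above can be applied mechanically to an execution log. The following Python sketch assumes a hypothetical log record in which redundant copies of one payload task share a `request_id`; the record fields and the function name are illustrative, not from the source.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class TaskRecord:
    task_id: str
    request_id: str           # hypothetical: redundant copies share one request_id
    is_payload: bool          # False for systems tasks
    latency: Optional[float]  # execution time t in seconds; None if aborted
    threshold: float          # user-expected latency threshold t_TH in seconds


def good_tasks(records: Iterable[TaskRecord]) -> Set[str]:
    """Return the request_ids of good tasks (Definitions 2 and 3)."""
    good: Set[str] = set()
    for r in records:
        if not r.is_payload:         # exclude non-payload (systems) tasks
            continue
        if r.latency is None:        # exclude incomplete tasks
            continue
        if r.latency > r.threshold:  # exclude tasks with bad user experience
            continue
        good.add(r.request_id)       # repeated copies collapse to one good task
    return good
```

Here $N$ is `len(good_tasks(log))`. Counting a request as good when any one of its redundant copies finishes on time is our assumption; the source only says that redundant copies count as one payload task.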

Table 3 contrasts good tasks in Information Superbahn to on-time passengers traveling on high-speed railway trains. This analogy may help better understand the concepts of tasks, payload, good tasks, and user experience.

Table 3. Good Tasks vs On-Time Passengers

| Information Superbahn | High-Speed Railway |
| --- | --- |
| Payload task | Passenger |
| Good task | On-time passenger |
| Non-payload task, e.g., systems tasks | Non-passenger, e.g., engineer or attendant |
| Incomplete task | Passenger aborting the journey |
| Repeated task | Passenger having taken the wrong train & having to redo |
| Task with bad user experience | Delayed passenger, i.e., not arriving on time |

Definition 4. For period $[0, T]$, the computing utility’s throughput $\lambda$ is the total number of tasks $n$ divided by the total time $T$, i.e., $\lambda = n/T$; its yield $Y$ is the number of good tasks $N$ divided by the total number of tasks, i.e., $Y = N/n$; and its goodput $G$ is the number of good tasks divided by the total time $T$, i.e., $G = N/T$.

Definition 5. Assume that at time $t \in [0, T]$ the computing utility has a total of $p(t)$ processor cores, of which only $A(t)$ cores are allocated to executing tasks, only $P(t)$ cores are used by tasks, and only $Q(t)$ cores are used by good tasks. The computing utility’s utilization $U$ and good utilization $U_G$ are, respectively,

$$U = \frac{\int_{t=0}^{T} P(t)\,\mathrm{d}t}{\int_{t=0}^{T} A(t)\,\mathrm{d}t}, \qquad U_G = \frac{\int_{t=0}^{T} Q(t)\,\mathrm{d}t}{\int_{t=0}^{T} A(t)\,\mathrm{d}t}.$$

All five values of throughput, yield, goodput, utilization, and good utilization are observed quantities[21]. Note that $G = N/T = (n/T)(N/n) = \lambda \times Y$, and since $Q(t) \leqslant P(t) \leqslant A(t)$, we have $U_G \leqslant U \leqslant 1$.
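As a worked check of Definitions 4 and 5, the Python sketch below computes the five observed quantities from hypothetical task counts and from core counts sampled at a fixed interval, approximating each integral by a rectangle-rule sum (the sampling interval cancels in the ratios). All input numbers are made up for illustration.

```python
import math
from typing import Dict, Sequence


def utility_metrics(n: int, N: int, T: float,
                    A: Sequence[int], P: Sequence[int], Q: Sequence[int],
                    dt: float) -> Dict[str, float]:
    """Throughput, yield, goodput, utilization, and good utilization.

    A, P, Q are allocated, used, and good-task core counts sampled every
    dt seconds over [0, T]; each integral is approximated by sum(x) * dt.
    """
    if n <= 0 or not 0 <= N <= n or T <= 0:
        raise ValueError("need n > 0, 0 <= N <= n, and T > 0")
    if not len(A) == len(P) == len(Q):
        raise ValueError("A, P, Q must have the same number of samples")
    if not all(q <= p <= a for a, p, q in zip(A, P, Q)):
        raise ValueError("expected Q(t) <= P(t) <= A(t) at every sample")
    allocated = sum(A) * dt
    if allocated <= 0:
        raise ValueError("no cores were allocated")
    return {
        "throughput": n / T,             # lambda = n / T
        "yield": N / n,                  # Y = N / n
        "goodput": N / T,                # G = N / T
        "U": sum(P) * dt / allocated,    # utilization
        "U_G": sum(Q) * dt / allocated,  # good utilization
    }


m = utility_metrics(1000, 900, 50.0,
                    A=[80, 80, 80, 80], P=[72, 76, 80, 68], Q=[60, 64, 70, 50],
                    dt=1.0)
assert math.isclose(m["goodput"], m["throughput"] * m["yield"])  # G = lambda * Y
```

With these hypothetical inputs, $\lambda = 20$ tasks/s, $Y = 0.9$, $G = 18$ tasks/s, $U = 296/320 = 0.925$, and $U_G = 244/320 = 0.7625$.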

Information Superbahn advocates high-goodput computing, which differs from high-throughput computing² in that the former emphasizes goodput $G$ while the latter emphasizes throughput $\lambda$. When there is enough load in the Information Superbahn, our goal is for most executed tasks to be payload tasks with good user experience, such that the system’s good utilization $U_G$ is high.

Cloud computing systems show utilization numbers in the range of 10%–70%[7, 18]. However, the utilization reported there is often raw CPU utilization, which is not a satisfactory metric: what the CPU cores execute may be systems tasks or unsatisfactory payload tasks. A traffic jam exhibits high utilization numbers, although few payload tasks enjoy good user experience. Definition 5 and Subsection 4.2 show that Information Superbahn emphasizes another type of utilization metric: the portion of resources used by good tasks.

Unlike the electric utility, where electric energy is difficult to store, computing utility can store portions of its load and execute them at a later time. New policies for allocating and charging resource use should exploit this difference, to realize pay-per-use and to increase the utilization of the computing utility.
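One simple way to exploit storable load is greedy valley filling: deferrable work is placed into idle capacity left over by non-deferrable load. The Python sketch below illustrates this under assumed inputs (per-slot core capacity, per-slot interactive demand, and a pool of deferrable core-slots with no deadlines). It is a generic technique chosen for illustration, not a policy proposed in the source.

```python
from typing import List, Sequence, Tuple


def fill_with_deferred(capacity: Sequence[int],
                       interactive: Sequence[int],
                       deferred_work: int) -> Tuple[List[int], int]:
    """Greedily place deferrable core-slots into idle capacity, earliest slot first.

    Returns the deferrable work placed in each slot and the work left over.
    Interactive (non-deferrable) load is never displaced.
    """
    placed: List[int] = []
    remaining = deferred_work
    for cap, load in zip(capacity, interactive):
        idle = max(cap - load, 0)
        take = min(idle, remaining)
        placed.append(take)
        remaining -= take
    return placed, remaining
```

For example, with 10 cores in each of four slots, interactive demand `[9, 3, 8, 2]`, and 12 deferrable core-slots, the work is placed as `[1, 7, 2, 2]`, and utilization of the 40 core-slots rises from 22/40 = 55% to 34/40 = 85% without delaying any interactive load.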

In the high-performance computing sector, users are often allocated CPU-hour quotas and charged for the CPU-hours actually used, as long as the amount used does not exceed the quota. In cloud computing, Amazon EC2 offers spot instances, which do not guarantee QoS but can be up to 10 times cheaper than normal instances. These practices should be exploited and enhanced.
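The HPC-style quota practice can be sketched as a small account object. In this hypothetical Python sketch, usage is metered in CPU-hours and billed at an assumed integer rate in cents; the source does not say what happens when a job would exceed the quota, so rejecting the job is our assumption.

```python
class QuotaAccount:
    """Hypothetical pay-per-use account with a CPU-hour quota."""

    def __init__(self, quota_cpu_hours: float, cents_per_cpu_hour: int):
        self.quota = quota_cpu_hours
        self.rate = cents_per_cpu_hour
        self.used = 0.0  # CPU-hours actually consumed so far

    def charge_job(self, cores: int, hours: float) -> float:
        """Charge a job's CPU-hours and return the cost in cents.

        Assumed policy: a job that would push usage past the quota is rejected.
        """
        cpu_hours = cores * hours
        if self.used + cpu_hours > self.quota:
            raise RuntimeError("job would exceed the CPU-hour quota")
        self.used += cpu_hours
        return float(cpu_hours * self.rate)

    def bill(self) -> float:
        """Total charge in cents: only CPU-hours actually used are billed."""
        return self.used * self.rate
```

In contrast to reservation-style billing, an account with a 100 CPU-hour quota that runs only 68 CPU-hours of jobs is billed for 68, not 100.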

4. Prototype of Information Superbahn

This section provides initial evidence to support the Information Superbahn perspective on computing utility. We first discuss the architecture of a research prototype of Information Superbahn, and then present experimental results for yield, goodput, and utilization from a cloud-edge experiment. A new style of application specification and programming is also briefly discussed.

4.1 Runtime Architecture

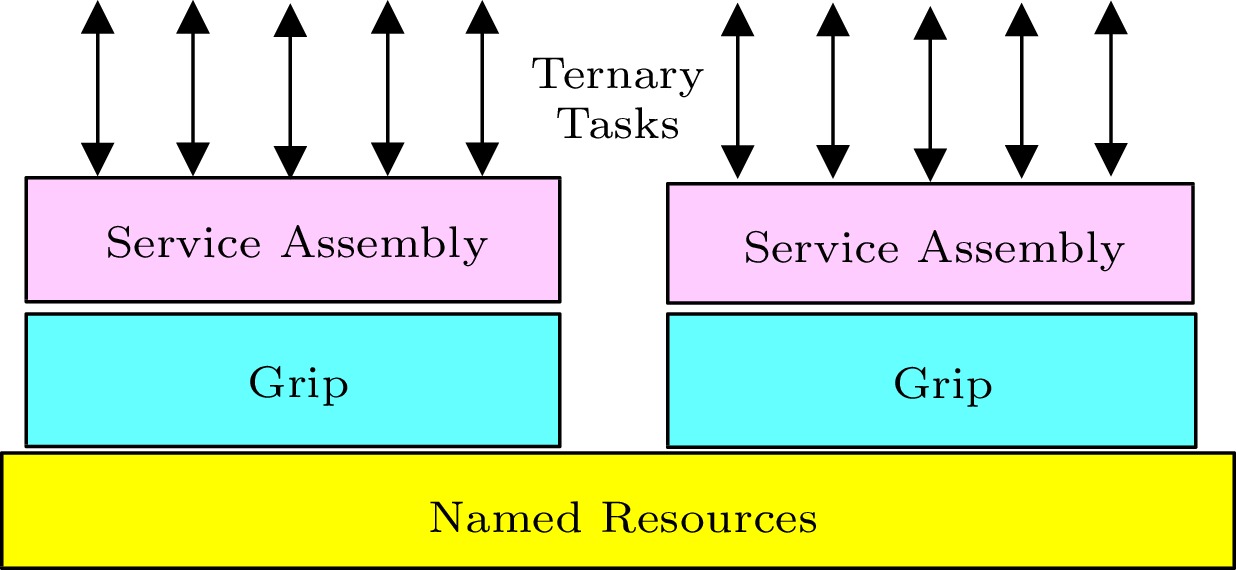

Fig.4 shows an architecture of a research prototype of Information Superbahn. Besides tasks, it has three layers of abstractions: named resources provide accessibility, grips enable orderly use of resources, and service assemblies highlight application business logic.

Computational tasks can be initiated by computers, humans, or physical things. They are human-cyber-physical tasks, or ternary tasks.

4.1.1 Named Resources

In the World Wide Web, any hypertext resource is accessible by any Web client anywhere in the world, subject to access control constraints. Similarly, named resources provide accessibility, supporting planet-scale culture. All resources in Information Superbahn form a set accessible by any application. Resources may or may not be pooled. It should make sense to say that Information Superbahn has a total of 100 million CPU cores at time $t_1$, and 100,001,024 CPU cores at time $t_2$. One can even name and access the 105th CPU core or the 99th edge device, if the granularity of the access control policy allows.

Resource types are broad, including users, hardware, system software, programming languages, frameworks, application software, data, models, knowledge, business processes, and even physical resources such as natural phenomena. Different resources may expose different types of interfaces, such as hardware, services, libraries, or application frameworks.

However, every resource in the resource set is named. This is the foundation of accessibility, controllability, accountability and charging. Resource names are not necessarily visible to users. Developers and users may see named, aliased, or anonymous resources. A resource can be pooled (one-in-many) or individualized (one of a kind).
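The naming requirement can be illustrated with a toy resource registry. This Python sketch is hypothetical: the `isb://` name scheme, the `ResourceSet` class, and its methods are our own illustration of named, aliased, pooled, and individualized resources, not the prototype’s interface.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Resource:
    name: str     # every resource has a unique name (hypothetical isb:// scheme)
    kind: str     # e.g., "cpu", "edge-device", "dataset"
    pooled: bool  # True: one-in-many; False: one of a kind


class ResourceSet:
    """Toy registry: all resources are named; users may see aliases instead."""

    def __init__(self) -> None:
        self._by_name: Dict[str, Resource] = {}
        self._aliases: Dict[str, str] = {}

    def add(self, resource: Resource) -> None:
        if resource.name in self._by_name:
            raise ValueError(f"name already taken: {resource.name}")
        self._by_name[resource.name] = resource

    def alias(self, alias: str, name: str) -> None:
        if name not in self._by_name:
            raise KeyError(name)
        self._aliases[alias] = name

    def resolve(self, name_or_alias: str) -> Resource:
        name = self._aliases.get(name_or_alias, name_or_alias)
        return self._by_name[name]

    def count(self, kind: str) -> int:
        return sum(1 for r in self._by_name.values() if r.kind == kind)

    def pool(self, kind: str) -> List[Resource]:
        """Interchangeable (pooled) resources of one kind, in registration order."""
        return [r for r in self._by_name.values() if r.kind == kind and r.pooled]
```

Growing the set from one count to another is just a matter of registering more names, and any single resource can still be addressed individually by name or alias.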

4.1.2 Service Assembly

An application in Information Superbahn takes the form of a service assembly, or simply an assembly. Besides its main component, each assembly can access one or more services on the same computing node or anywhere in the world. The simplest service assembly is a running program capable of responding to task requests. One assembly may serve as a component of another assembly.

Service assemblies are orderly application programs, in that they have explicit structure and policy constraints. An analogy is a railway train formed by a series of cars.

Such constraints are visible to professional developers and users so that assemblies can highlight application business logic. Their detailed code, data and metadata may be completed by the environment, artificial intelligence and other means. New techniques of compilation and interpretation are needed to convert programs, data, context and environment into an executable service assembly.

4.1.3 Grip

A running service assembly becomes a grip, a systems runtime construct that can be monitored and managed as one entity. The grip concept is similar to the process concept in conventional operating systems, where a process is a program in execution. However, a grip is designed for distributed systems.

A grip is also an orderly container of all resources used by the service assembly, just as a process has text, data, stack, and heap segments. While all named resources in Information Superbahn form a set, the resources in a grip constitute an orderly subset of this resource set. Uses of these resources follow the same consistent naming, scoping, and policy constraints, including how a resource should be accessed, used, and charged.
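The grip abstraction can be sketched as a runtime object that holds a subset of the global named-resource set and applies one policy to every use. The Python code below is a hypothetical illustration: the `Grip` class, per-resource usage metering, and the price table are assumptions, not the prototype’s API.

```python
from typing import Dict, Iterable


class Grip:
    """Hypothetical runtime entity for one running service assembly."""

    def __init__(self, grip_id: str, universe: Iterable[str], granted: Iterable[str]):
        universe_set, granted_set = set(universe), set(granted)
        unknown = granted_set - universe_set
        if unknown:
            raise KeyError(f"not in the global resource set: {sorted(unknown)}")
        self.grip_id = grip_id
        self.resources = frozenset(granted_set)  # subset of the global resource set
        self.usage: Dict[str, float] = {name: 0.0 for name in sorted(granted_set)}

    def use(self, name: str, amount: float) -> None:
        """Every access goes through the grip, so it is scoped and metered."""
        if name not in self.resources:
            raise PermissionError(f"{name} is outside grip {self.grip_id}")
        self.usage[name] += amount

    def charge(self, prices: Dict[str, float]) -> float:
        """Charge only for resources actually used (pay-per-use)."""
        return sum(amount * prices[name]
                   for name, amount in self.usage.items() if amount > 0)
```

Because access, scoping, and charging all pass through one object, the grip can be monitored and managed as a single entity, in the same way a process is in a conventional operating system.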

4.2 Red Packet Experimental Results

An experiment is conducted to see whether Information Superbahn has the potential to simultaneously achieve the objectives of high goodput and high utilization. For comparison, we execute the same workload on the same bare-metal resources, using an Information Superbahn prototype (ISB) and a Kubernetes platform (K8s)[22].

ISB Design Rationales. The ISB prototype is designed for the high-goodput utility goal and partially supports the low-entropy and planet-scale concepts. The prototype builds on the DIP thesis to achieve low entropy, providing unique ID-based differentiation, per-task hardware resource isolation, and three-level prioritization. It supports planet scale by enabling global scheduling, which allows tasks launched in Beijing to be executed in Nanjing. To achieve a high-goodput utility, the prototype adopts techniques such as fine-grained allocation and fast scaling to obtain a high yield under high load.

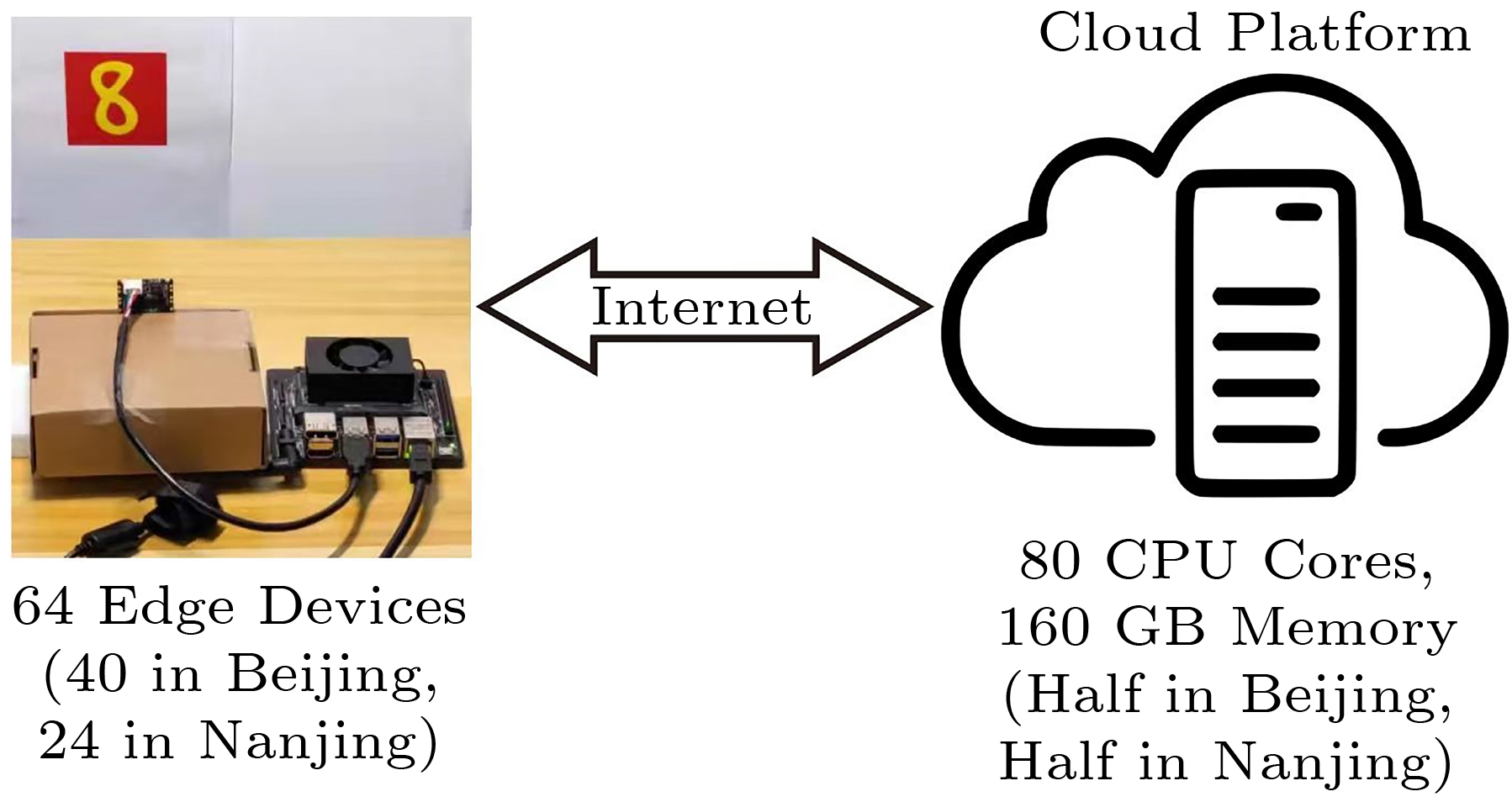

Experimental Setup. As shown in Fig.5, 10 real edge devices (NVIDIA Jetson) and 14 virtual devices are deployed in Nanjing, and 40 virtual devices are deployed in Beijing. We deploy two types of cloud platforms, ISB and K8s, to compare their performance. ISB and K8s are deployed across Beijing and Nanjing with the same hardware resources: 80 CPU cores and 160 GB of memory in four servers, distributed equally between Beijing and Nanjing. Edge devices and computing platforms are connected via the Internet.

We build an application named Red Packet for the experiment. The application is a service assembly and Fig.5 presents the named resources it uses. Its workloads are generated by the edge devices with cameras attached. A task execution process consists of the following three steps.

First, the camera on an edge device takes a photo of a handwritten number (“8” in Fig.5) and stores it as an image file.

Second, the edge device sends a task with a latency requirement to the cloud platform for handwriting character recognition. For K8s, the device only sends the image file by HTTP. For ISB, the device sends the image file together with a recognition program by a self-defined protocol.

Third, using a DNN model, the cloud platform computes the recognized number, which is the amount of money in the red packet sent to the one and only red packet receiver.

The experiment consists of 51 test cases. In each test case, every device sends multiple tasks evenly over 40 seconds. Following the definitions in Subsection 3.3, let $n$ be the total number of tasks sent by all devices. We vary $n$ across test cases: 6400, 12800, …, up to 365696. During each test case run, only one type of cloud platform (ISB or K8s) is used. For the system observation period $[0, T]$, we observe the system from the time the first task is sent ($0$) to the time the last task finishes ($T$).

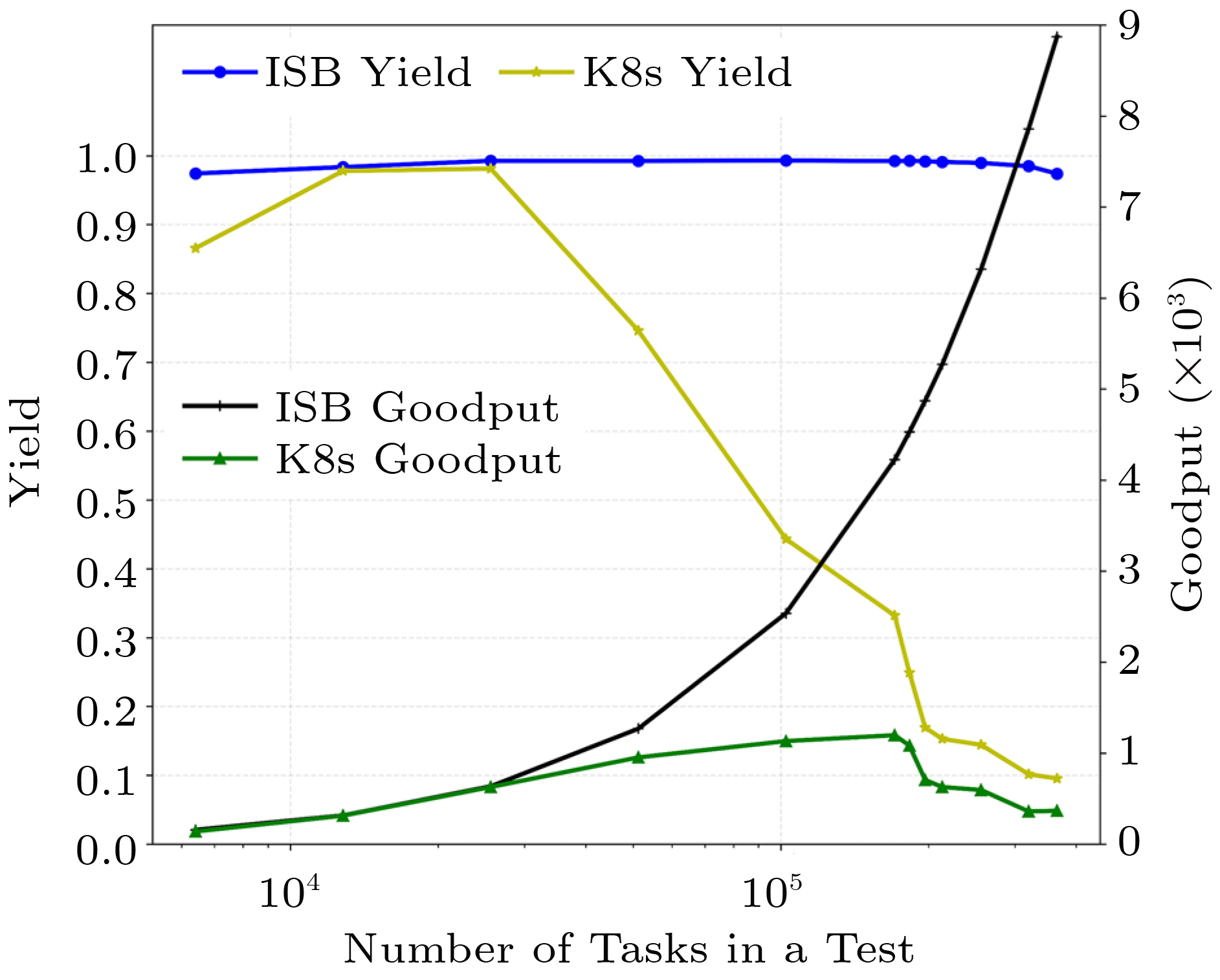

Yield and Goodput. Our experiment shows that, compared with K8s in a common setting, ISB can improve yield and goodput by more than an order of magnitude under heavy load.

As shown in Fig.6, ISB has higher yield and goodput in every test case. In the cases of high load, ISB achieves 1.3x–10.2x in yield and 1.3x–24.2x in goodput, compared with K8s. In all cases, ISB maintains the yield close to 100%, while the yield of K8s decreases dramatically when the number of tasks exceeds 51200.

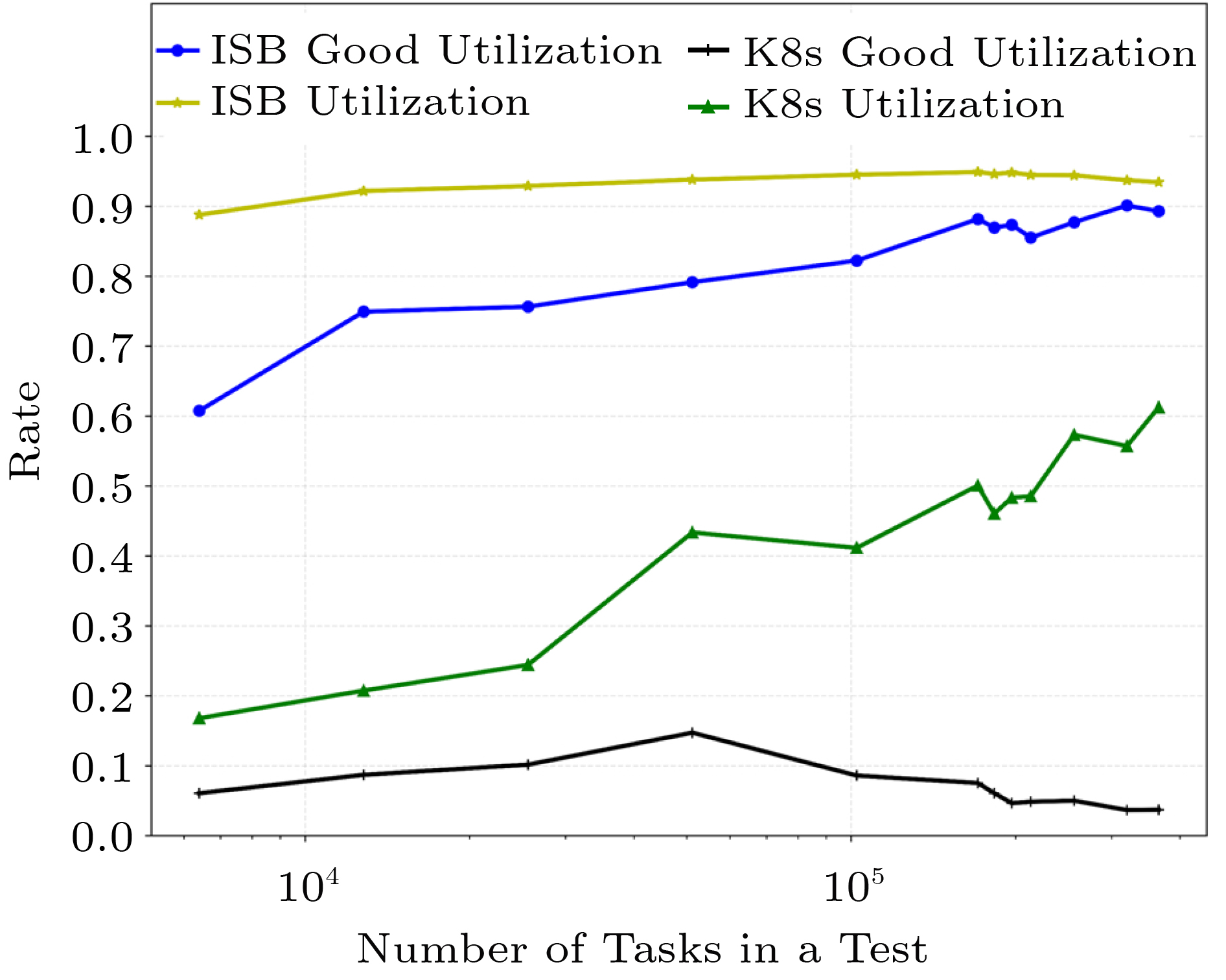

Utilization. The experiment also shows that ISB has the potential to simultaneously achieve high CPU utilization and high goodput.

As shown in Fig.7, ISB achieves better than 90% utilization ($U$), which is 1.5x–5.29x the utilization of the K8s system. ISB’s good utilization ($U_G$) is 5.38x–24.74x that of K8s. In the high-load cases (when the number of tasks exceeds 51200), the good utilization of ISB increases as the load increases, while the good utilization of K8s decreases.

4.3 Profile Programming

How can a correct service assembly like the one in Fig.4 be developed quickly? Three requirements should be satisfied.

● Utilizing Existing Ecosystem. Developers can use existing programming languages and environments of cloud computing.

● Enabling Business-Level Integration. The service assembly should include business-level integration code to assemble one or more services into an orderly application program.

● Providing Verifiable Partial-Specification. Existing cloud applications are often written entirely by developers, which may lead to over-specification. A service assembly avoids over-specification through successive partial-specifications, allowing business logic and policies to be completed automatically into detailed code by compilation or artificial intelligence.

To help satisfy these requirements, we propose a profile-oriented programming (PoP) model based on TLA+ [23] for the agile development of service assemblies that are verifiable and concise.

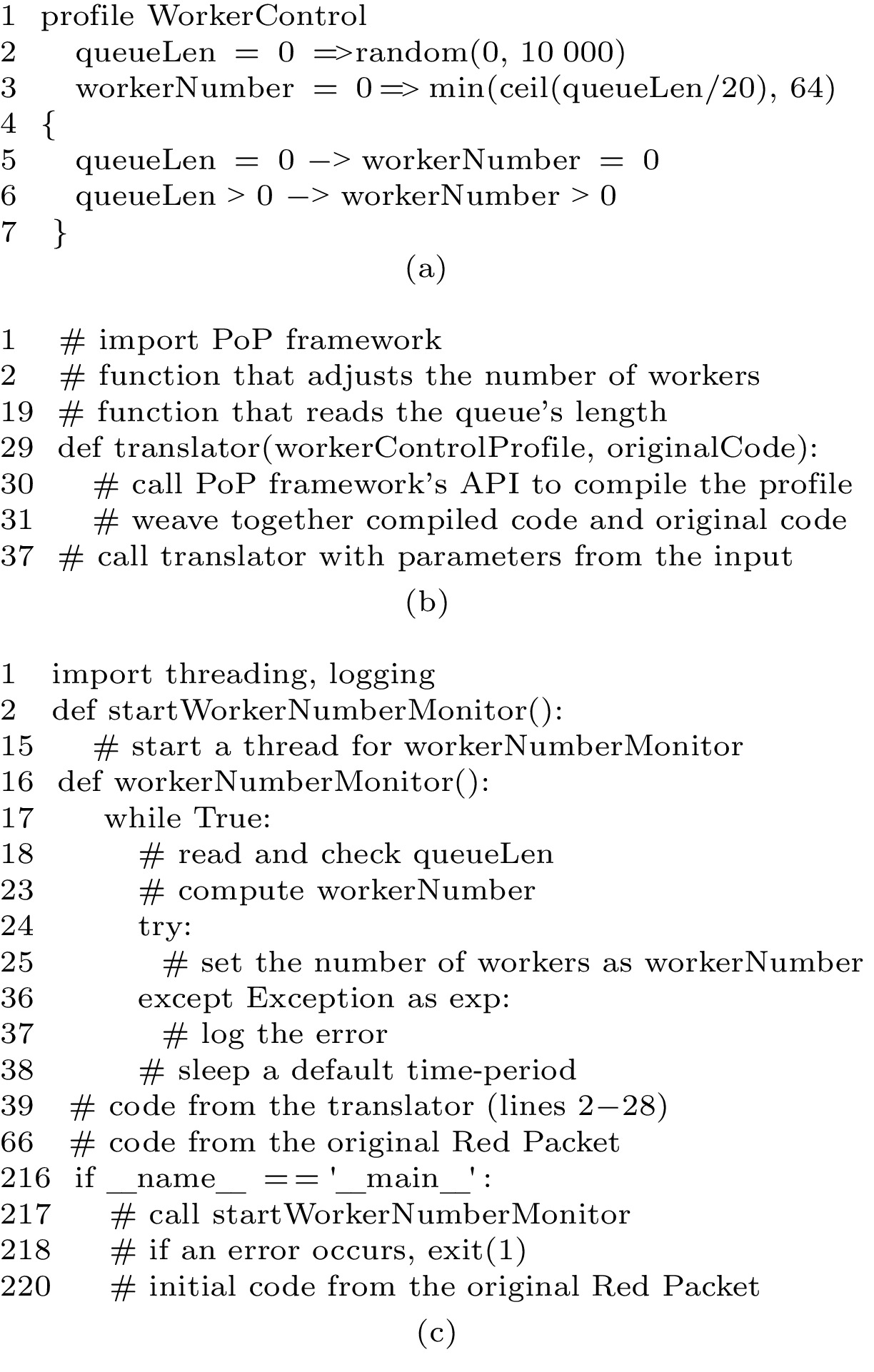

Example. We illustrate the PoP concept with part of the “Red Packet” experiment discussed in Subsection 4.2. The original service assembly has a fixed number of recognition workers. Suppose we want to refactor the assembly to adjust the number of workers dynamically according to the length of the request queue.

The additional new logic is: “the service assembly maintains workerNumber to be 1/20 of the queue’s length, up to the maximum value of 64”.

The above English description is concise but imprecise; for example, it does not say whether 1/20 of the queue’s length is rounded up or down. It cannot be automatically verified or translated into executable code.

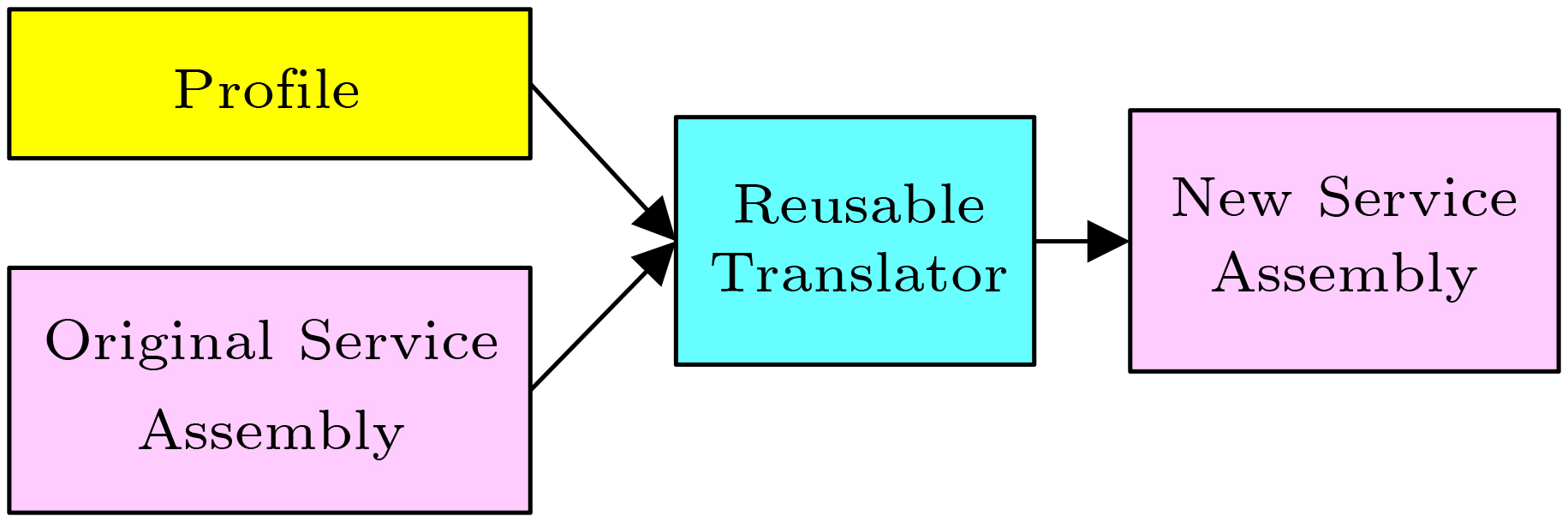

Fig.8 shows a general refactoring process in PoP. The developer writes a profile that specifies the new logic. A reusable translator compiles the profile into executable code and weaves the compiled code into the original service assembly, producing a refactored service assembly. The translator is developed by a professional developer and is reusable, like a library.

Fig.9 shows the PoP programs with the new logic, where a line starting with “#” describes a folded code block. Fig.9(a) is a 7-line profile that specifies the new policy about the number of workers. Fig.9(b) is a translator developed by a professional developer, which is reusable for other service assemblies. For example, it can also be used to refactor a service assembly that does speech recognition from a fixed number of recognition workers to an optimal number of workers. Fig.9(c) is the generated Python program of the refactored Red Packet service assembly.

Profile. A profile is an abstraction that specifies a system or an algorithm and highlights its business logic and policy. It can be successively refined into more and more detailed specifications, and finally into executable code, such as a Python script. A profile has the following structure.

profile profileName

variablesSection

{

propertiesSection

}

A profile has a keyword profile followed by a profile name, a variables section and a properties section. The variables section defines one variable per line, and the properties section defines one predicate logic expression per line, specifying the properties that the variables should satisfy. The properties section is equivalent to a set of properties to be verified in TLA+.

Integration. A profile is compiled into executable code and integrated with other components. In the Red Packet example, the compiled code is integrated with the code of the original service assembly, which has a fixed number of recognition workers. The executable code that a profile is translated to may include three things: 1) business logic, such as using the DNN model and the algorithm to recognize images; 2) system optimization logic, such as finding the optimal workerNumber; and 3) implementation details, such as starting a new worker by calling the Python thread library. A profile highlights the business logic by leaving the common code to a reusable translator, which automatically generates implementation details.

In Fig.9(a), the variables section of the profile specifies variables in a TLA-like syntax: an initial expression before the arrow and a next expression after the arrow. For queueLen, the initial value is 0, and the next value is randomly chosen from {0, 1, …, 10000}. For workerNumber, the initial value is 0, and the next value is 1/20 of queueLen with a maximum value of 64. Common mathematical functions, such as random(m, n), min(m, n), ceil(x), floor(x), and int(x), are automatically imported from the standard libraries of the PoP framework.
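To make the init/next reading of the variables section concrete, the sketch below simulates a few steps of the two-variable system in Python. Both variables are updated simultaneously, as in TLA+, so the next workerNumber is computed from the current queueLen. Because the exact text of Fig.9(a) is not reproduced here, the rounding in the workerNumber expression is an assumption: we use ceil, the reading consistent with the two mis-specifications discussed under Verification.

```python
import math
import random
from typing import List, Tuple

State = Tuple[int, int]  # (queueLen, workerNumber)

QUEUE_MAX = 10000   # next queueLen is drawn from {0, 1, ..., 10000}
MAX_WORKERS = 64    # cap on workerNumber

def init() -> State:
    return (0, 0)   # both variables start at 0

def next_state(state: State, rng: random.Random) -> State:
    queue_len, _ = state
    new_queue = rng.randint(0, QUEUE_MAX)                      # like random(0, 10000), inclusive
    new_workers = min(math.ceil(queue_len / 20), MAX_WORKERS)  # assumed rounding: ceil
    return (new_queue, new_workers)

def simulate(steps: int, seed: int = 0) -> List[State]:
    """Generate one behavior (a sequence of steps + 1 states)."""
    rng = random.Random(seed)
    states = [init()]
    for _ in range(steps):
        states.append(next_state(states[-1], rng))
    return states
```

A simulation only samples behaviors; the model checking described next explores the reachable states systematically.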

Verification. PoP uses TLA+ to find functional bugs and performance issues automatically. Suppose the next expression of workerNumber (line 3 in Fig.9(a)) is mis-specified as min(floor(queueLen/20), 64). Then the second property (line 6 in Fig.9(a)) will be violated. Suppose the value of queueLen is 10. The value of workerNumber will be 0 because min(floor(10/20), 64) = min(0, 64) = 0. The model checker will report the error and print out an error trace of (queueLen, workerNumber) as $(0, 0) \to (10, 0) \to (10, 0) \to \dots$.

PoP can also reveal performance issues. Suppose the next expression of workerNumber (line 3 in Fig.9(a)) is mis-specified as min(max(queueLen/20, 1), 64). Then the first property (line 5 in Fig.9(a)) will be violated. Suppose the value of queueLen is 0. The value of workerNumber will be 1 (not in the initial state) because min(max(0/20, 1), 64) = min(1, 64) = 1. This indicates that a worker may keep running even if no requests are in the queue, resulting in unnecessary resource consumption. The model checker will report the error and print out an error trace of (queueLen, workerNumber) as $(0, 0) \to (0, 1) \to (0, 1) \to \dots$.
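Both mis-specifications can be reproduced without TLA+ by a brute-force check. The sketch below is not TLC: it evaluates each candidate next expression for every queue length in {0, 1, …, 10000} and tests two properties that we assume correspond to lines 5 and 6 of Fig.9(a) (no worker when the queue is empty; at least one worker when it is not). It also rebuilds the short error traces quoted above, assuming queueLen jumps to the counterexample value and then stays there. All names are hypothetical.

```python
import math
from typing import Callable, List, Optional, Tuple

Spec = Callable[[int], float]  # next workerNumber as a function of queueLen

def correct_spec(q: int) -> float:        # assumed intended policy
    return min(math.ceil(q / 20), 64)

def floor_spec(q: int) -> float:          # first mis-specification in the text
    return min(math.floor(q / 20), 64)

def at_least_one_spec(q: int) -> float:   # second mis-specification in the text
    return min(max(q / 20, 1), 64)

PROPERTIES = {
    # Assumed stand-ins for lines 5 and 6 of Fig.9(a).
    "no idle worker": lambda q, w: q != 0 or w == 0,
    "queue is served": lambda q, w: q == 0 or w > 0,
}

def check(spec: Spec, queue_max: int = 10000) -> Optional[Tuple[str, int]]:
    """Return (violated property, queueLen) for the first counterexample, or None."""
    for q in range(queue_max + 1):
        w = spec(q)
        for name, holds in PROPERTIES.items():
            if not holds(q, w):
                return name, q
    return None

def error_trace(spec: Spec, q: int, steps: int = 3) -> List[Tuple[int, float]]:
    """States (queueLen, workerNumber) if queueLen jumps from 0 to q and stays there."""
    trace: List[Tuple[int, float]] = [(0, 0)]
    for _ in range(steps - 1):
        prev_q = trace[-1][0]
        trace.append((q, spec(prev_q)))  # next workerNumber uses the current queueLen
    return trace
```

The loop finds the floor bug at queueLen = 1, the smallest failing value, whereas the text illustrates it with queueLen = 10; both witness the same violation. A real TLC run also handles temporal properties and the one-step lag between queueLen and workerNumber, which this pointwise check deliberately ignores.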

Partial-Specification. PoP achieves partial-specification by automatically completing implementation details, such as error handling and logging, and by reusing the translator’s code. The generated Python program in Fig.9(c) has three parts: the code compiled from the profile (lines 1–38 and lines 217–219), the code copied from the reusable translator (lines 39–65), and the code copied from the original service assembly (lines 66–216 and line 220). With PoP, the developer only needs to write the 7-line profile (Fig.9(a)). Without PoP, the developer needs to write all 41 lines of the compiled code (lines 1–38 and lines 217–219 in Fig.9(c)).

Low-level system errors are hidden from profile developers. For example, a failure to start a worker may crash the system if not handled correctly. The translator, developed by a professional developer, hides such errors from profile developers: it starts workers in a try-except block to avoid crashes when an error occurs and logs any abnormal behavior (lines 24–37 in Fig.9(c)).
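The error-hiding pattern described above can be sketched with Python's standard `threading` and `logging` modules. The functions below are hypothetical stand-ins for what the translator generates (the actual lines 24–37 of Fig.9(c) are not reproduced here): a failure to start a worker is caught and logged instead of crashing the service assembly. The `thread_factory` parameter is our addition so that the failure path can be exercised.

```python
import logging
import threading
from typing import Callable, List, Optional

logger = logging.getLogger("service_assembly")  # hypothetical logger name

def start_worker(run: Callable[[], None], name: str,
                 thread_factory: Callable[..., threading.Thread] = threading.Thread,
                 ) -> Optional[threading.Thread]:
    """Start one worker thread; log and return None instead of raising on failure."""
    try:
        thread = thread_factory(target=run, name=name, daemon=True)
        thread.start()  # may raise RuntimeError, e.g. "can't start new thread"
    except Exception:
        logger.exception("failed to start worker %s", name)
        return None
    logger.info("started worker %s", name)
    return thread

def start_workers(run: Callable[[], None], count: int) -> List[threading.Thread]:
    """Try to start `count` workers; keep whichever ones actually started."""
    started = []
    for i in range(count):
        thread = start_worker(run, f"worker-{i}")
        if thread is not None:
            started.append(thread)
    return started
```

Returning `None` lets the caller continue with fewer workers rather than crash; whether the real translator retries or simply degrades is not stated in the source.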

5. Conclusions

Six decades ago, John McCarthy proposed the vision of utility computing, which is now poised to become a reality. Leveraging recent advances such as cloud computing, this paper presented a perspective of computing utility called Information Superbahn. It retains McCarthy’s vision of pay-per-use computing services, and extends his “large computer” concept to planet-scale culture and “private computer” concept to low-entropy systems. In addition, Information Superbahn introduces a new concept of high-goodput computing utility.

Initial evidence was provided to support the Information Superbahn perspective of computing utility. Experimental data from a cloud-edge computational experiment suggested that Information Superbahn has the potential to simultaneously improve both goodput and utilization.


Table 1 Three Stages of Computing Utility Development

| McCarthy’s Vision | Cloud Computing System | Information Superbahn |
|---|---|---|
| Pay-per-use services | Subscribed IaaS, PaaS, SaaS | Pay-per-use services |
| Large computer | Intra-cloud culture + inter-cloud APIs | Planet-scale culture |
| Private computer | Various isolation schemes | Low-entropy systems |
| – | – | High-goodput utility |

Table 2 Illustration of Four Types of Traffic Flows

| Flow Type | Velocity | Throughput | User Experience | Utilization |
|---|---|---|---|---|
| Traffic jam | Low (6 m/h) | Low (1.2 cars/s) | Bad (not on time) | “High” |
| Free flow | High (60 m/h) | Low (4.0 cars/s) | Good (on time) | Low |
| Synchronous flow | High (60 m/h) | High (14.7 cars/s) | Good (on time) | High |
| Prioritized synchronous flow | High (70, 60, 50 m/h) | High (15.2 cars/s) | Good (on time) | High |

Table 3 Good Tasks vs On-Time Passengers

| Information Superbahn | High-Speed Railway |
|---|---|
| Payload task | Passenger |
| Good task | On-time passenger |
| Non-payload task, e.g., system tasks | Non-passenger, e.g., engineer or attendant |
| Incomplete task | Passenger aborting the journey |
| Repeated task | Passenger having taken the wrong train and having to redo the journey |
| Task with bad user experience | Delayed passenger, i.e., not arriving on time |

[1] McCarthy J. Time-sharing computer systems. In Management and the Computer of the Future, Greenberger M (ed.), New York: MIT Press, 1962, pp.220-248.

[2] Xu Z, Li G, Sun N. Information Superbahn: Towards new type of cyberinfrastructure. Bulletin of Chinese Academy of Sciences, 2022, 37(1): 46-52. (in Chinese)

[3] Mell P, Grance T. The NIST definition of cloud computing. Technical Report, NIST Special Publication, 2011. https://doi.org/10.6028/NIST.SP.800-145, Oct. 2022.

[4] Hwang K. Cloud Computing for Machine Learning and Cognitive Applications. MIT Press, 2017.

[5] Jonas E, Schleier-Smith J, Sreekanti V et al. Cloud programming simplified: A Berkeley view on serverless computing. arXiv:1902.03383, 2019. https://arxiv.org/abs/1902.03383, Oct. 2022.

[6] Vahdat A. Networking challenges for the next decade. In the Google Networking Research Summit Keynote Talks, Apr. 2017. http://events17.linuxfoundation.org/sites/events/files/slides/ONS%20Keynote%20Vahdat%202017.pdf, Oct. 2022.

[7] Guo J, Chang Z, Wang S, Ding H, Feng Y, Mao L, Bao Y. Who limits the resource efficiency of my datacenter: An analysis of Alibaba datacenter traces. In Proc. the International Symposium on Quality of Service, Jun. 2019. DOI: 10.1145/3326285.3329074.

[8] Xu Z, Li C. Low-entropy cloud computing systems. Scientia Sinica Informationis, 2017, 47(9): 1149-1163. DOI: 10.1360/N112017-00069. (in Chinese)

[9] Perry T S. The trillion-device world. IEEE Spectrum, 2019, 56(1): 6.

[10] Ritter D. Cost-aware process modeling in multiclouds. Information Systems, 2022, 108: 101969. DOI: 10.1016/j.is.2021.101969.

[11] Lee C A, Bohn R B, Michel M. The NIST cloud federation reference architecture. Technical Report, NIST Special Publication, 2020. https://doi.org/10.6028/NIST.SP.500-332, Oct. 2022.

[12] Chasins S, Cheung A, Crooks N et al. The sky above the clouds. arXiv:2205.07147, 2022. https://arxiv.org/abs/2205.07147, Oct. 2022.

[13] Xu Z, Li G. Computing for the masses. Communications of the ACM, 2011, 54(10): 129-137. DOI: 10.1145/2001269.2001298.

[14] Reed D, Sporny M, Longley D et al. Decentralized identifiers (DIDs) v1.0: Core architecture, data model, and representations. Technical Report, W3C Working Draft, https://www.w3.org/TR/did-core/, Oct. 2022.

[15] IEA. Key world energy statistics 2021. Technical Report, IEA, 2021. https://www.iea.org/reports/key-world-energy-statistics-2021/final-consumption, Oct. 2022.

[16] Thollander P, Karlsson M, Rohdin P et al. Introduction to Industrial Energy Efficiency: Energy Auditing, Energy Management, and Policy Issues. Academic Press, 2020.

[17] Dean J, Barroso L A. The tail at scale. Communications of the ACM, 2013, 56(2): 74-80. DOI: 10.1145/2408776.2408794.

[18] Barroso L A, Hölzle U, Ranganathan P. The Datacenter as a Computer: Designing Warehouse-Scale Machines. Springer Cham, 2019. DOI: 10.2200/S00874ED3V01Y201809CAC046.

[19] Kerner B S. Introduction to Modern Traffic Flow Theory and Control. Berlin: Springer, 2009.

[20] Crooks N, Pu Y, Alvisi L et al. Seeing is believing: A client-centric specification of database isolation. In Proc. the ACM Symposium on Principles of Distributed Computing, Jul. 2017, pp.73-82. DOI: 10.1145/3087801.3087802.

[21] Little J D C. Little’s Law as viewed on its 50th anniversary. Operations Research, 2011, 59(3): 536-549. DOI: 10.1287/opre.1110.0940.

[22] Burns B, Grant B, Oppenheimer D et al. Borg, Omega, and Kubernetes: Lessons learned from three container-management systems over a decade. ACM Queue, 2016, 14(1): 70-93. DOI: 10.1145/2898442.2898444.

[23] Kuppe M A, Lamport L, Ricketts D. The TLA+ toolbox. arXiv:1912.10633, 2019. https://arxiv.org/abs/1912.10633, Oct. 2022.
