基于WiFi和声波信号的泛在感知:原理、技术与应用

-

摘要:背景

随着手机、音响和可穿戴设备等智能终端的日益普及,基于各种感知媒介的智能感知技术引起了研究者的广泛关注。由于不同类型的传感介质中WiFi信号具有普适性突出、硬件成本近零、对光照、温度、湿度等环境条件鲁棒性强,声信号具有对环境变化更强的敏感性和适应性的优点。越来越多的研究人员开始关注基于WiFi和声学信号的新型传感技术,也涌现出了许多优秀的研究工作。

目的为了让读者全面了解WiFi和声波感知的相关原理、技术和应用,增进读者对于该研究领域的兴趣与了解,本文对相关领域的研究工作进行了充分细致的调研和总结。

方法本文按照WiFi和声波感知的背景、技术、应用、以及现有技术的局限和讨论可能的开放性课题四个方面对相关领域进行综述。在介绍WiFi和声学感知的各项应用时,本文围绕了数百项相关工作进行讨论。本文选择这些相关工作的标准是,它们是该领域近十年来最具代表性的论文。

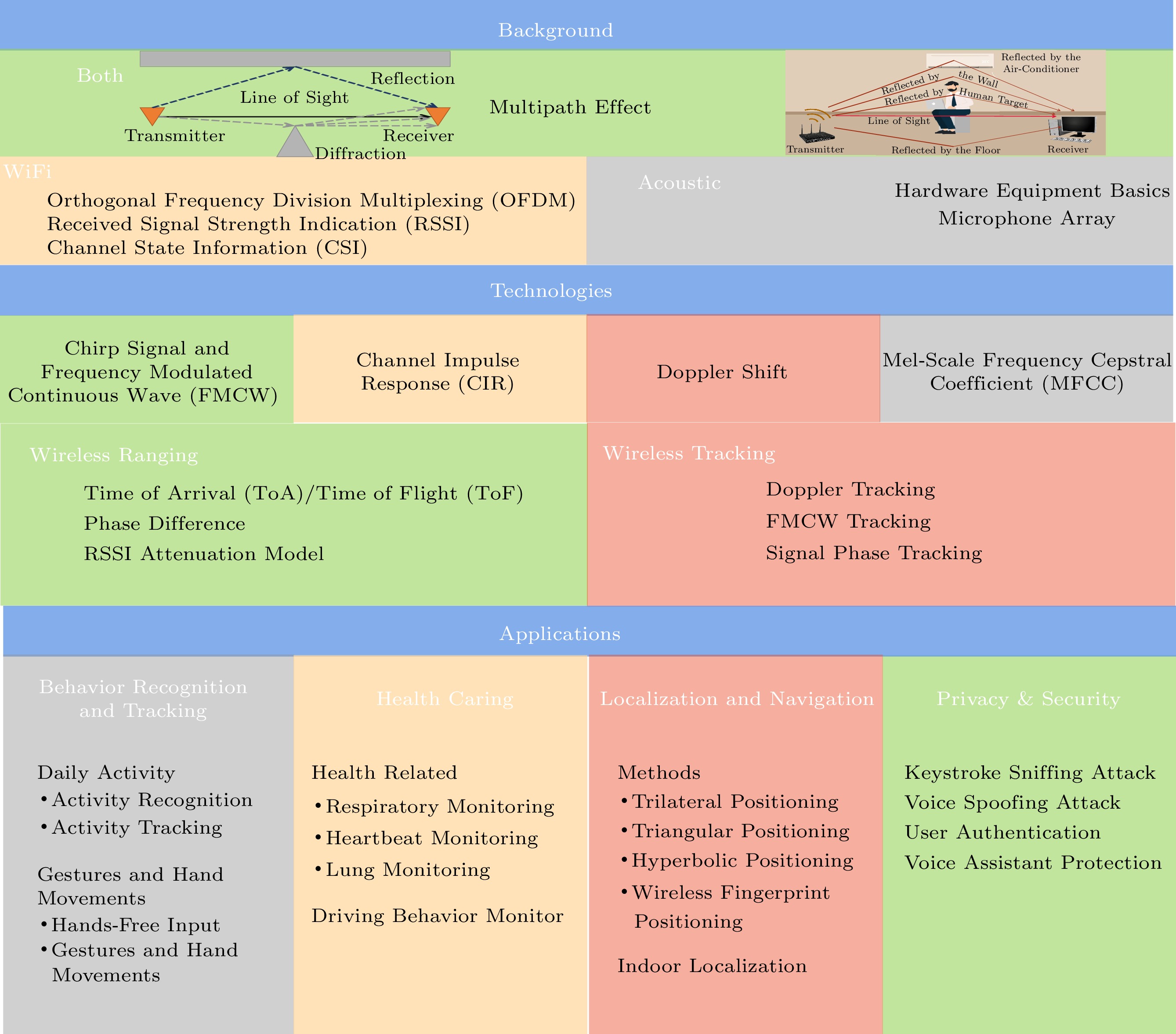

结果本文综合介绍了基于WiFi感知和声波感知的背景与相关技术如OFDM、RSI、CSSI、FMCW、CIR、Doppler Shift、MFCC、ranging、tracking等;并从行为识别与追踪、健康相关、定位、隐私安全四个应用方面分别展示了相关的工作,以及主要工作的核心内容、创新、性能及表现。

结论随着无线信号(如WiFi、声学等)的普及,无线感知技术在人类行为识别等诸多领域有着广泛的应用。为了全面了解无线传感技术,本文仔细回顾了围绕WiFi和声信号感知的数百项相关工作。本文首先介绍了多径效应、OFDM、CSI等无线感知技术的基本原理,然后介绍了这些工作中用到的基本技术,最后展示了这些技术在不同应用领域中的应用。本文最后讨论了现有研究工作的局限性,并提出了一些有待进一步研究的问题。通过此综述,我们希望读者能对无线传感有一个整体的认识。

Abstract:With the increasing pervasiveness of mobile devices such as smartphones, smart TVs, and wearables, smart sensing, transforming the physical world into digital information based on various sensing medias, has drawn researchers’ great attention. Among different sensing medias, WiFi and acoustic signals stand out due to their ubiquity and zero hardware cost. Based on different basic principles, researchers have proposed different technologies for sensing applications with WiFi and acoustic signals covering human activity recognition, motion tracking, indoor localization, health monitoring, and the like. To enable readers to get a comprehensive understanding of ubiquitous wireless sensing, we conduct a survey of existing work to introduce their underlying principles, proposed technologies, and practical applications. Besides we also discuss some open issues of this research area. Our survey reals that as a promising research direction, WiFi and acoustic sensing technologies can bring about fancy applications, but still have limitations in hardware restriction, robustness, and applicability.

-

1. Introduction

In recent years, a new round of scientific and technological revolution has been booming around the world. Human beings have stepped into the era of Internet of Things (IoT). With increasing pervasiveness of wireless and acoustic hardware, researchers have begun to pay more attention to developing novel sensing technologies based on WiFi and acoustic signals. Numerous studies have demonstrated the technical feasibility and effectiveness of sensing applications using these two types of signals, such as in the human activity recognition, health caring, positioning and navigation, and many other aspects of human life.

Among different types of sensing media, WiFi signals have the advantages of prominent pervasiveness, nearly zero hardware cost, and robustness to environmental conditions, such as light, temperature, and humidity. Bahl and Padmanabhan[1] first proposed a WiFi-based sensing application—indoor localization based on the received signal strength indication (RSSI). RSSI is usually computed by using the energy of signals as a reference to 1 mW, which is expressed in a logarithmic form.

The CSI tool was developed in [2] for extracting the channel state information (CSI) from commercial network cards, which greatly facilitated the acquisition of the CSI from commercial WiFi devices and made the use of more fine-grained CSI for sensing a new trend. The sensing of the CSI has become a new trend. Subsequently, the human behavior sensing technology based on WiFi signal was developed rapidly. The emergence of the CSI read interface makes CSI widely used in the WiFi sensing and sleep monitoring[3-8], fall detection[9-17], gesture detection[18-32], lip language recognition[33, 34], crowd detection[35, 36], daily behavior detection[9, 37-48], respiration and heartbeat detection[7, 32, 49-51], gait recognition[52-55], indoor localization[56-69] and a series of other applications[70-72].

The WiFi signal has long-range and good penetration characteristics, and WiFi-based sensing can capture a large range of human activities and even detect people moving behind obstacles. However, a long sensing range also makes it vulnerable to the surrounding environment. In contrast, although the acoustic signal has limited coverage for surrounding sensing due to its fast decay, it is more sensitive and resilient to changes in the environment. This makes acoustic-based sensing and WiFi-based sensing a valuable complement to each other. Based on the sound wave sensing, the hardware base mainly includes two components: microphone and speaker. With the popularity of mobile devices and wearable devices, widespread deployment of microphones and speakers, and the continuous progress of audio chips and technologies, the acoustic signals have become very easy to acquire and handle with high-quality and extensive sensing and communication capabilities. After obtaining the WiFi or acoustic signal, the next step is to characterize it using various sensing techniques. Similar to WiFi sensing, typical applications based on acoustic sensing include daily actions monitoring[73-78], gesture and hand movements recognition[79-87], health caring[88-92], localization and navigation[93-98] and privacy and security[99-111]. In the following, we will also introduce the basic content of signals and other characterization methods.

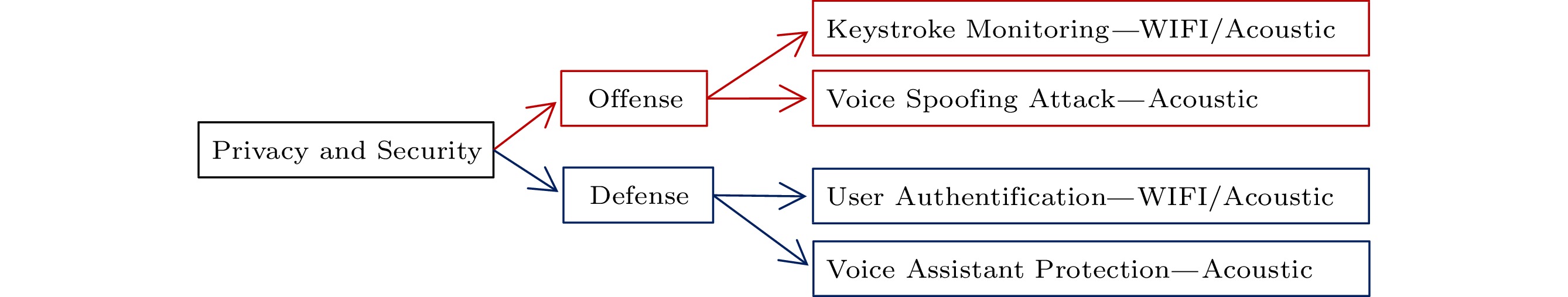

Through these basic contents and technologies, a wide range of applications can improve the quality of our daily life and work efficiency, and bring great influence and changes to the human life. These applications include: daily behavior recognition, gesture and hand motion recognition, and tracking in behavior recognition; health-related applications, such as breathing monitoring, heartbeat monitoring, lung monitoring, sleep quality detection, fall detection, and abnormal sleep detection in abnormal events; various positioning and navigation applications based on WiFi and sound waves; and the user in privacy and security authentication, keystroke snooping, voice assistant attacks, and voice assistant protection touch in every aspect of human life. In Section 4, we will introduce applications based on WiFi sensing and acoustic sensing from four aspects: behavior recognition and tracking, health caring, positioning and navigation, and privacy and security. Fig.1 shows the overall structure frame of this paper.

The rest of the paper is organized as follows. Section 2 introduces the background knowledge of WiFi and acoustic signals. Section 3 demonstrates some important key technologies that enable WiFi and acoustic sensing. Section 4 presents WiFi and acoustic sensing applications from four aspects including behavior recognition and tracking, health caring, localization, and privacy and security. Section 5 discusses the limitations of existing work and highlights future research directions. Section 6 summarizes this survey paper.

2. Background

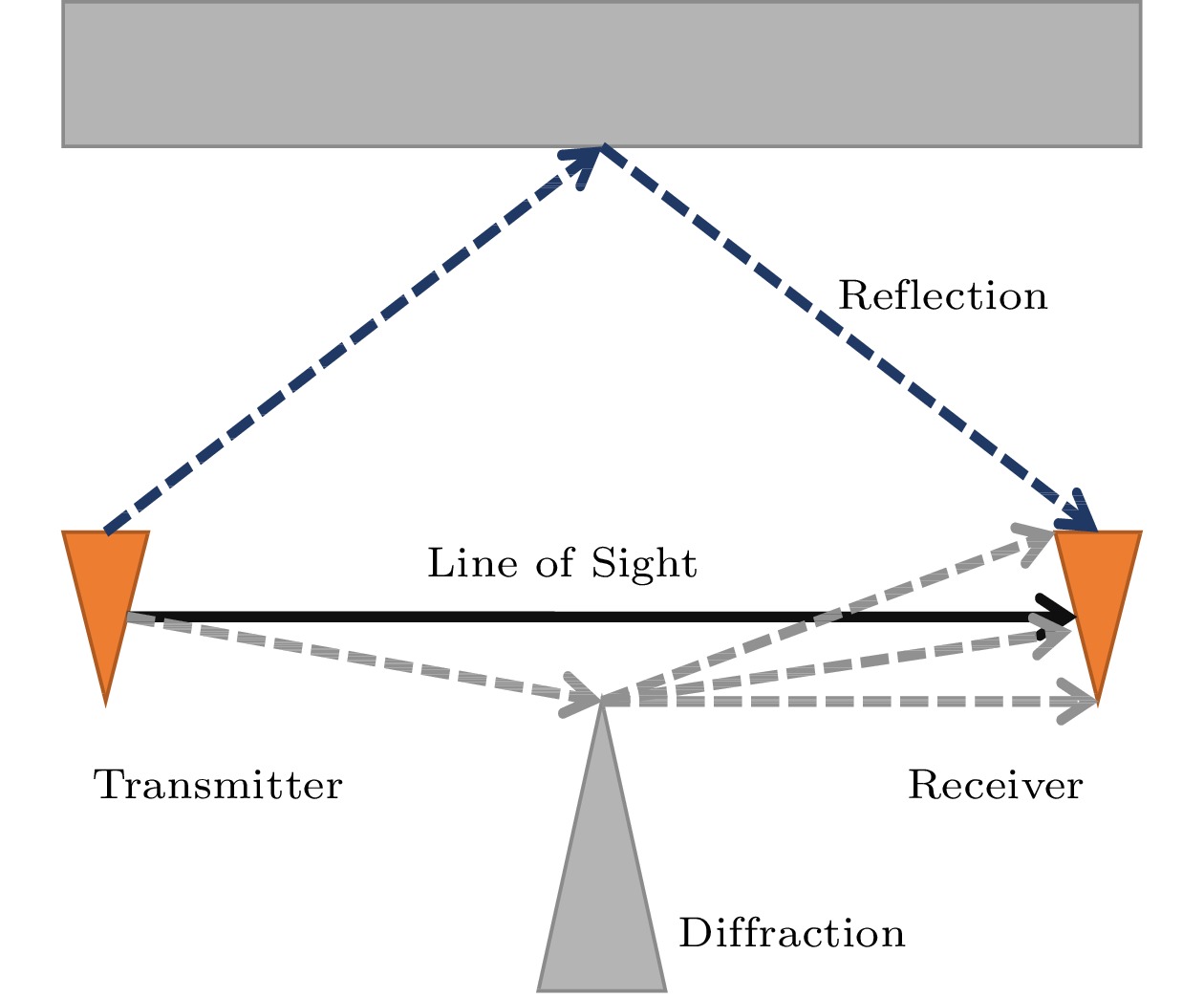

Both WiFi and acoustic signals are wireless signals. They share a common characteristic, namely the multipath effect. It describes the phenomenon that the signal reaches the receiver through different propagation paths, which can affect the performance of many sensing systems.

The wave in the process of transmission inevitably encounters many obstacles due to different material obstructions. The wave incidence angles cause wave refraction and reflection, and make the same waves through different paths to receive node multipath signals in time and to overlap, which causes direct signal distortion and affects the receiving end of signal recognition. The phenomenon of phase inconsistency, caused by the multipath effect and resulting in the fading state of the received signal, is called the multipath fading, which has a great impact on communication, detection, etc.

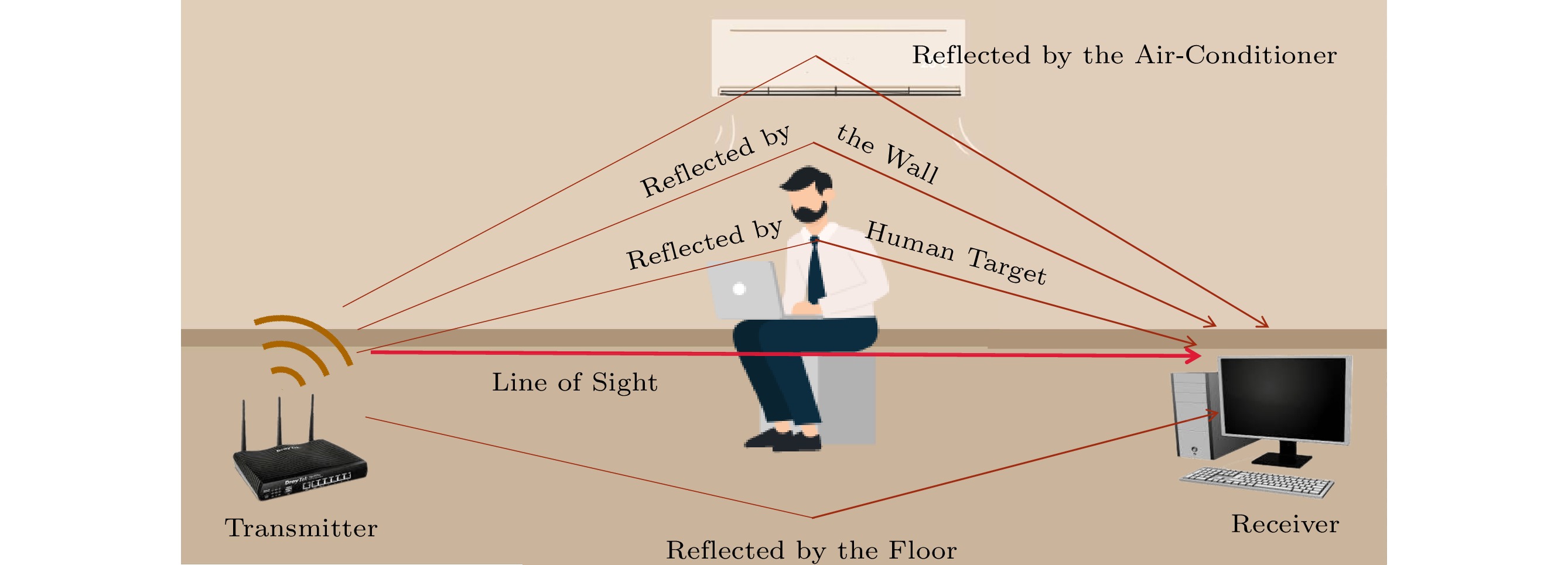

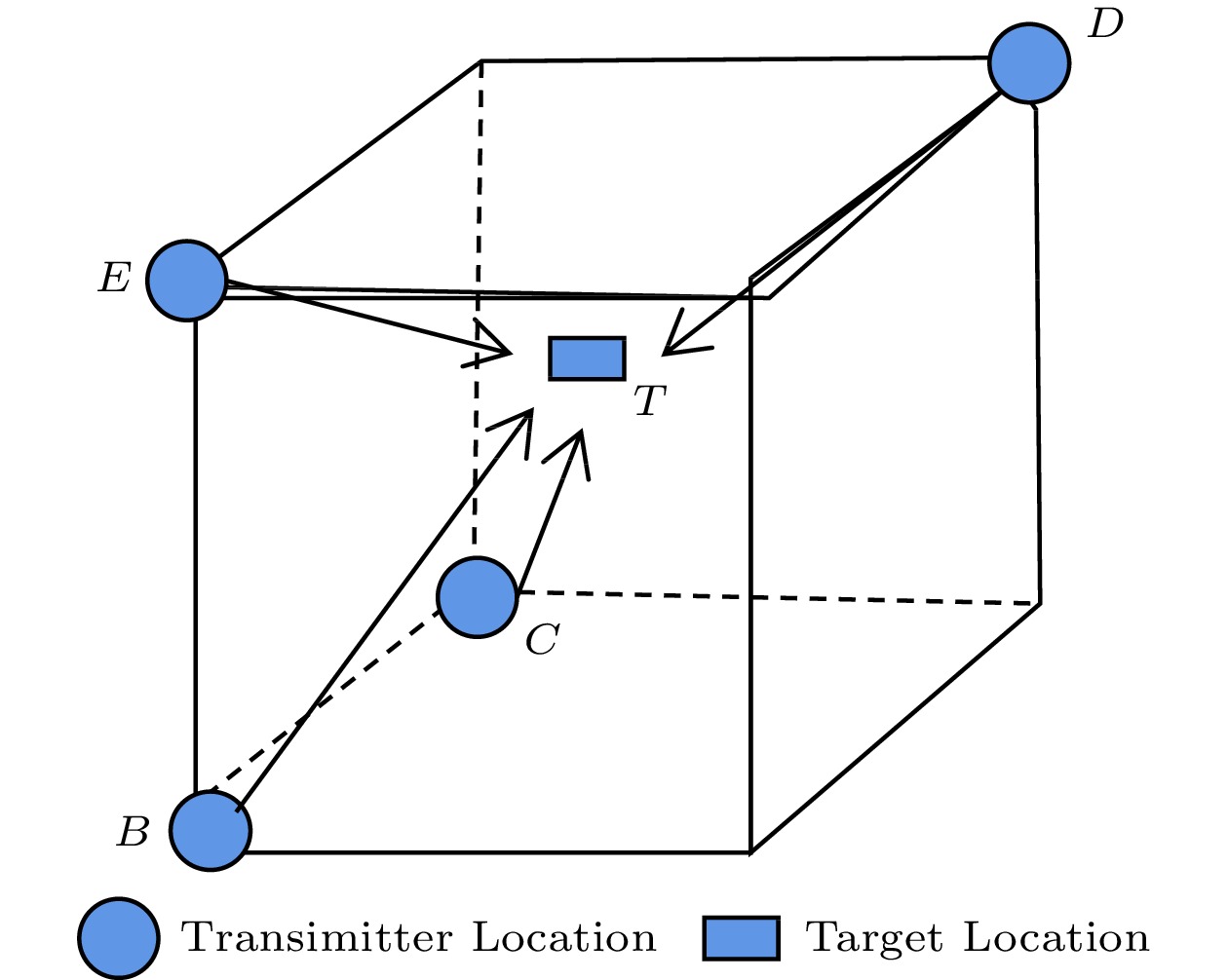

In the HAR (human action recognition) scenario of WiFi, the WiFi channel includes signals reflected by static objects in some environments, such as furniture or others. CSI represents the signal change from the transmitter to the receiver. These additional reflections caused by different activities can be observed in Fig.2.

For sound waves, the air is a multipath and time-varying attenuation channel. The propagation of sound waves in air can be regarded as the superposition of multiple signals with different delays and phases like Fig.3.

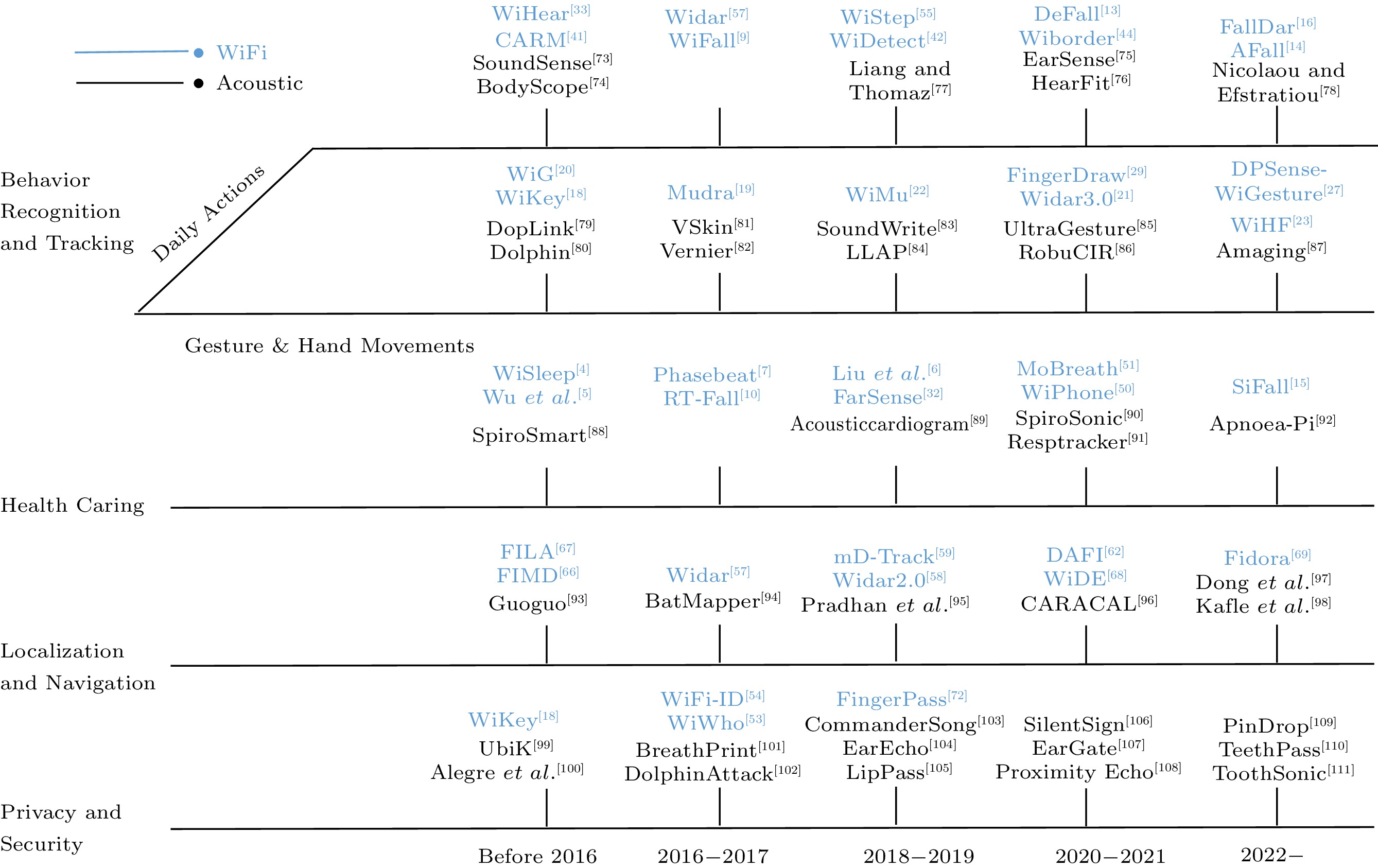

Fig.4 is a roadmap of perception by application, using WiFi and acoustic signals that have evolved over time. In addition to their common features, they also have some unique features, which we will be introduced in two parts.

2.1 WiFi

In this subsection, we mainly introduce Orthogonal Frequency Division Multiplexing (OFDM), as well as channel state information (CSI) and received signal strength indication (RSSI) in the WiFi sensing field. This information will help us understand the WiFi sensing behind it. The physical quantities used by researchers for WiFi sensing are described below.

2.1.1 Orthogonal Frequency Division Multiplexing (OFDM)

There are some applications of acoustic sensing which use this technology, except in FingerIO, where the OFDM technology is used to recognize gestures. However, OFDM is mainly manifested in the CSI signal as introduced in Subsection 2.1.3. Compared with continuous wave (CW) signals, OFDM signals have stronger ability to resist environmental interference. Its mathematical formula is:

xk=N−1∑n=0Xne2πkniN,k=0,...,N−1, where N represents the number of parts into which the bandwidth is cut. The data bits Xn are transmitted to the n-th channel.

The main idea of OFDM is to divide the channel into several orthogonal sub-channels. A high-speed data signal is converted into a parallel low-speed sub-data stream, and then it is modulated to transmit to each sub-channel. The signal bandwidth on each sub-channel is less than the correlation bandwidth of the channel.

Therefore, it can be regarded as flat fading on each sub-channel, and the inter-symbol interference can be eliminated. Moreover, since the bandwidth of each sub-channel is only a small part of the bandwidth of the original channel, the channel equalization becomes relatively easy.

2.1.2 Received Signal Strength Indication (RSSI)

WiFi signals are widely deployed. Since no sensor needs to be carried, human senseless sensing as well as NLOS (none line of sight) sensing, are not affected by external conditions, such as light, humidity, and temperature. WiFi signals were used for sensing for the first time in 2000 when Bahl and Padmanabhan[1] proposed RADAR as a system for indoor positioning based on WiFi signal strength information (RSS). RSS is an important indicator for the wireless transmission layer to determine link quality. The transmission layer uses RSS to determine whether it is necessary to increase the sending intensity of the sender. Normally, RSS is represented by power in watts (W). However, the power of the wireless signal is weak, usually at the milliwatt (mW) level. Therefore, the signal energy is expressed in logarithmic form on the basis of 1 mw, i.e., RSSI. This is a common practice.

RSS information can be read directly through the program interface on universal devices, such as mobile phones and computers, without requiring any equipment or program modification. It is quick and convenient to obtain. It is also compatible with the advantages of universal devices. But RSSI value sensing accuracy is low and fine-grained sensing cannot be achieved as the RSS information obtained from universal devices is not a real signal strength. Meanwhile, the RSS values are updated slowly, but they cannot be updated in real time. In addition, RSS is susceptible to the environmental interference.

2.1.3 Channel State Information (CSI)

In 2011, Halperin et al.[2] released the CSI tool to extract CSI from commercial network cards, which greatly facilitates the acquisition of CSI on commercial WiFi devices, making it a new trend to use finer-grained CSI for sensing. Subsequently, the human behavior sensing technology based on WiFi signals was developed rapidly.

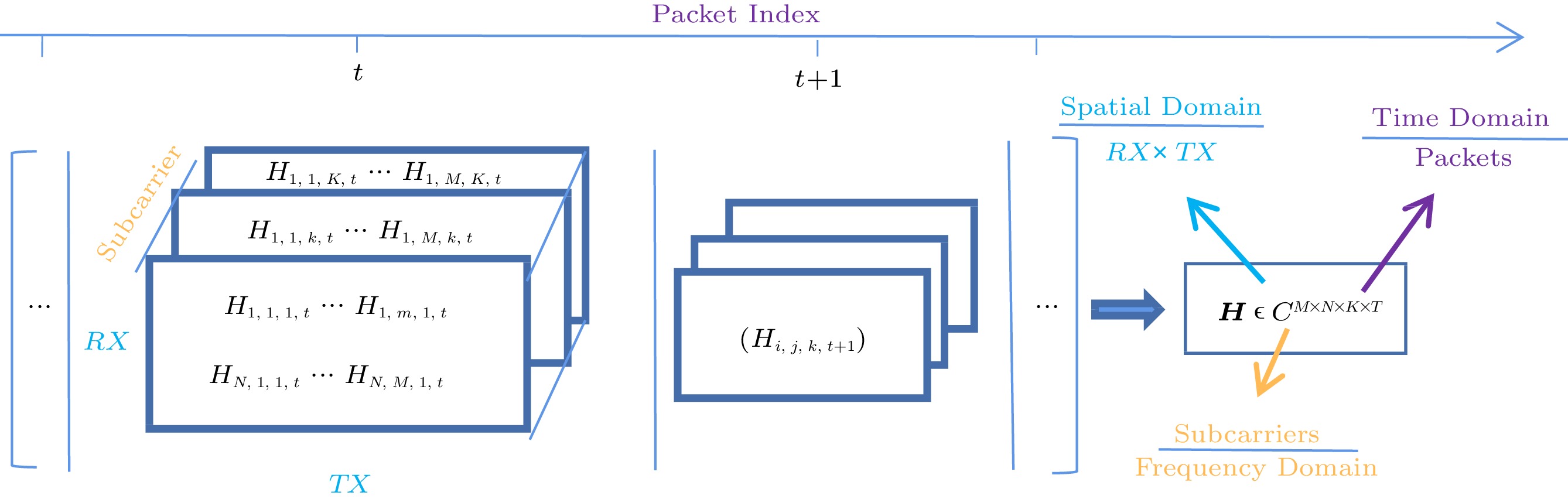

CSI provides information to each transmitter and receiver antenna pair at each carrier frequency based on multiple input multiple output (MIMO) and OFDM. Mathematically a CSI can be expressed as[32]:

{\boldsymbol{H(}}f,t) = \sum_{i=1}^L A_i {\rm{e}}^{(j2\pi \tfrac{ d_i(t) }{ \lambda })}, where L is the number of paths, A_i is the complex attenuation and d_i(t) is the propagation length of the i-th path.

CSI estimates each subcarrier for each transmission link. Therefore, compared with RSS, it has finer granularity and sensitivity, and can sense more subtle changes in the channel. As shown in Fig.5, the time series of the CSI matrix characterizes the MIMO channel variation in different domains[112] (time, frequency, and space). The OFDM technology divides the WiFi channel with MIMO into multiple subcarriers.

The 3D CSI matrix is similar to a digital image with a spatial resolution of N × M and K color channels. Therefore, this also enables CSI-based WiFi sensing combined with the field of computer vision.

CSI can be obtained in three ways, namely beacon frame, injection frame, and data frame. The beacon frame is transmitted periodically and it has the effect of announcing the existence of WLAN. The injection frames are created in the monitor mode to detect network failures. The data frames appear when data is communicated. Since the injection frame monopolizes a sensing channel, the CSI measurement is more controllable, while the data frame can coexist with data communication and CSI acquisition. Therefore, it becomes the two most commonly-used frames for CSI acquisition in most sensing applications. However, most sensing applications do not use the beacon frame as the sampling rate of CSI measurements in the beacon frame is too low for most sensing applications.

In addition, after the release of the CSI tool in 2011[2], Xie et al.[113] released another CSI acquisition tool in 2015, namely the Atheros-CSI-Tool, based on Ath9k, a Linux open source network card driver. In 2020, Hernandez and Bulut[31] developed the ESP32 CSI toolkit, which allows researchers to access CSI directly from the ESP32 microcontroller. ESP32 with this toolkit can provide online CSI processing from any computer, smartphone, or even stand-alone device. In 2021, Jiang et al.[114] developed the PicoScene platform, which is a versatile and powerful middleware for CSI-based WiFi sensor research. It helps researchers to overcome two barriers in the WiFi sensor research: inadequate hardware functionality and inadequate measurement software functionality. These new developments have made it possible to obtain CSI on more devices and have greatly expanded the range of WiFi-aware applications.

2.2 Acoustic

2.2.1 Hardware Basics

The hardware base of the acoustic sensing technology mainly includes microphone and loudspeaker. The microphone is a kind of transducers that can convert the physical sound into analog electrical signals. Most microphones are capacitive in nature, which mainly include two types: electret (ECM) microphones and micro electromechanical (MEMS) microphones. The capacitive microphones are air-gap capacitors with removable membranes and fixed electrodes.

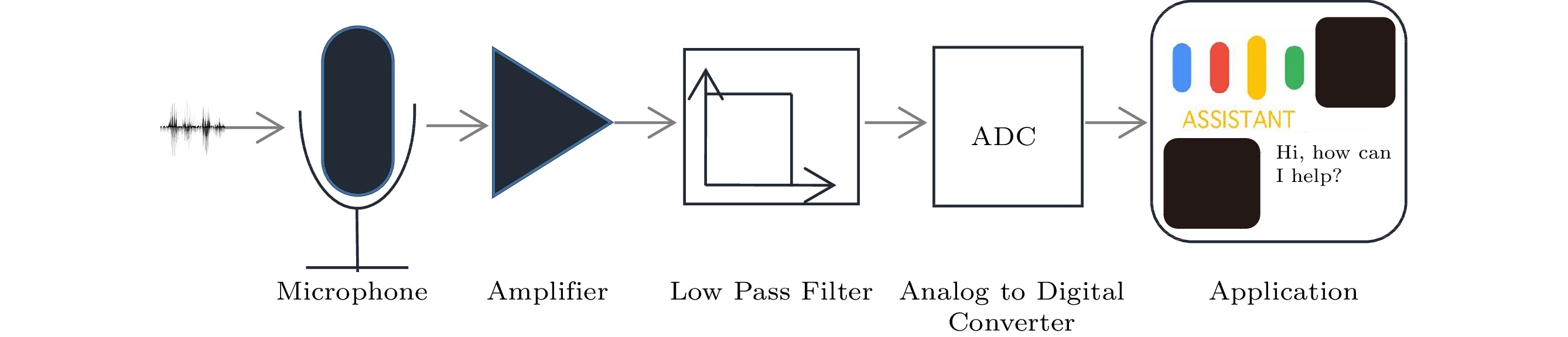

Air pressure due to sound waves can cause the diaphragm to get bent with changes in air pressure. Since the other electrode remains stationary, the movement of the membrane can cause a change in the capacitance value between the membrane and the fixed electrode. Due to their miniature size, low power consumption and excellent temperature characteristics, microphones of micro electromechanical systems have been widely used in mobile devices, including smart phones and wearable devices. Fig.6 shows the schematic diagram of sound signal transmission pathway.

The speaker is a transducer device that converts electrical signals into acoustic signals. It is a sound transmission device that can transmit sound to the mobile phone system. It has the characteristic of causing vibration when it receives current data. At present, many mobile phones are accommodating dual speakers. When a mobile phone is playing sound, the top of the body will also emit sound, and there will be a feeling of surrounding sound. Currently, there are some models whose mainstream configuration is for dual speakers, such as iPhone, Samsung, and so on. In addition, the purpose of the human body with two ears is to hear the sound in three dimensions and identify the position of the sound source. A single speaker cannot transmit three-dimensional sound effects, but dual speakers can do it. The widespread use of microphones and speakers offers great opportunities for acoustic-based sensing, and better-quality hardware can significantly improve the sensing accuracy.

2.2.2 Microphone Array

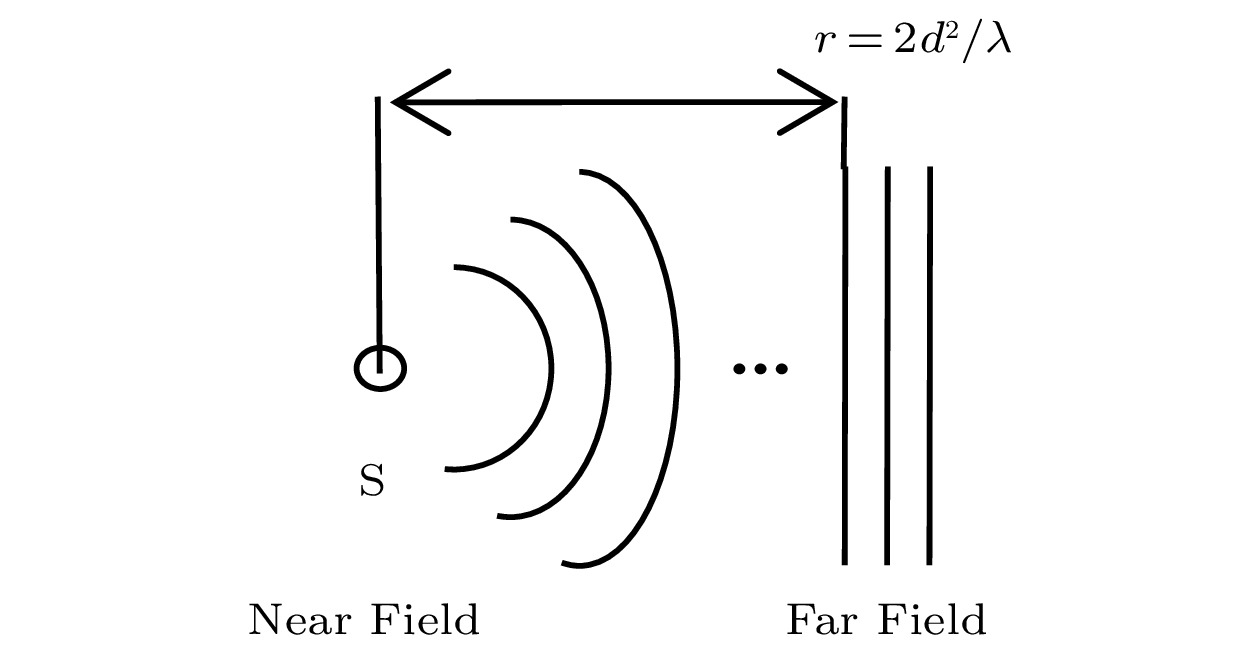

Microphone array is an array formed with a group of omnidirectional microphones, located at different positions in space, according to certain shape rules. It is a device for spatial sampling of spatially propagated sound signals. According to the distance between the sound source and the microphone array, the array can be divided into the near-field model and the far-field model. According to the topology of the microphone array, it can be divided into linear array, cross array, plane array, spiral array, and so on. After the microphones are arranged according to the specified requirements, the corresponding algorithm (arrangement and algorithm) can be used to solve many acoustic problems, such as sound source localization, abnormal sound detection, sound recognition, speech enhancement, whistle capture, and so on.

According to the distance between the sound source and the microphone array, the sound field model can be divided into two groups: the near field model and the far field model. The near-field model regards the sound wave as a spherical wave, which considers the amplitude difference between the received signals of the microphone elements. The far-field model regards the sound wave as a plane wave, which ignores the amplitude difference between the received signals of any array element, and approximately considers the difference between the received signals. Obviously, the far-field model is a simplification of the actual model, which greatly simplifies the processing difficulty. The general speech enhancement method is based on the far-field model. There is no absolute standard for classifying the near-field model and the far-field model. It is generally considered to be a far-field model when the distance between the sound source and the reference point of the center of the microphone array is much greater than the signal wavelength; otherwise, it is a near-field model. Let the distance between the adjacent array elements of the uniform linear array (also known as the array aperture) be d , and the wavelength of the highest frequency speech of the sound source (that is, the minimum wavelength of the sound source) is \lambda_{{\rm{min}}} . If the distance from the sound source to the center of the array is greater than \frac{2d^2}{\lambda_{\rm min}} , then it is a far-field model; otherwise it is a near-field model, as shown in Fig.7.

3. Technologies

3.1 Chirp Signal and FMCW

3.1.1 Chirp Signal

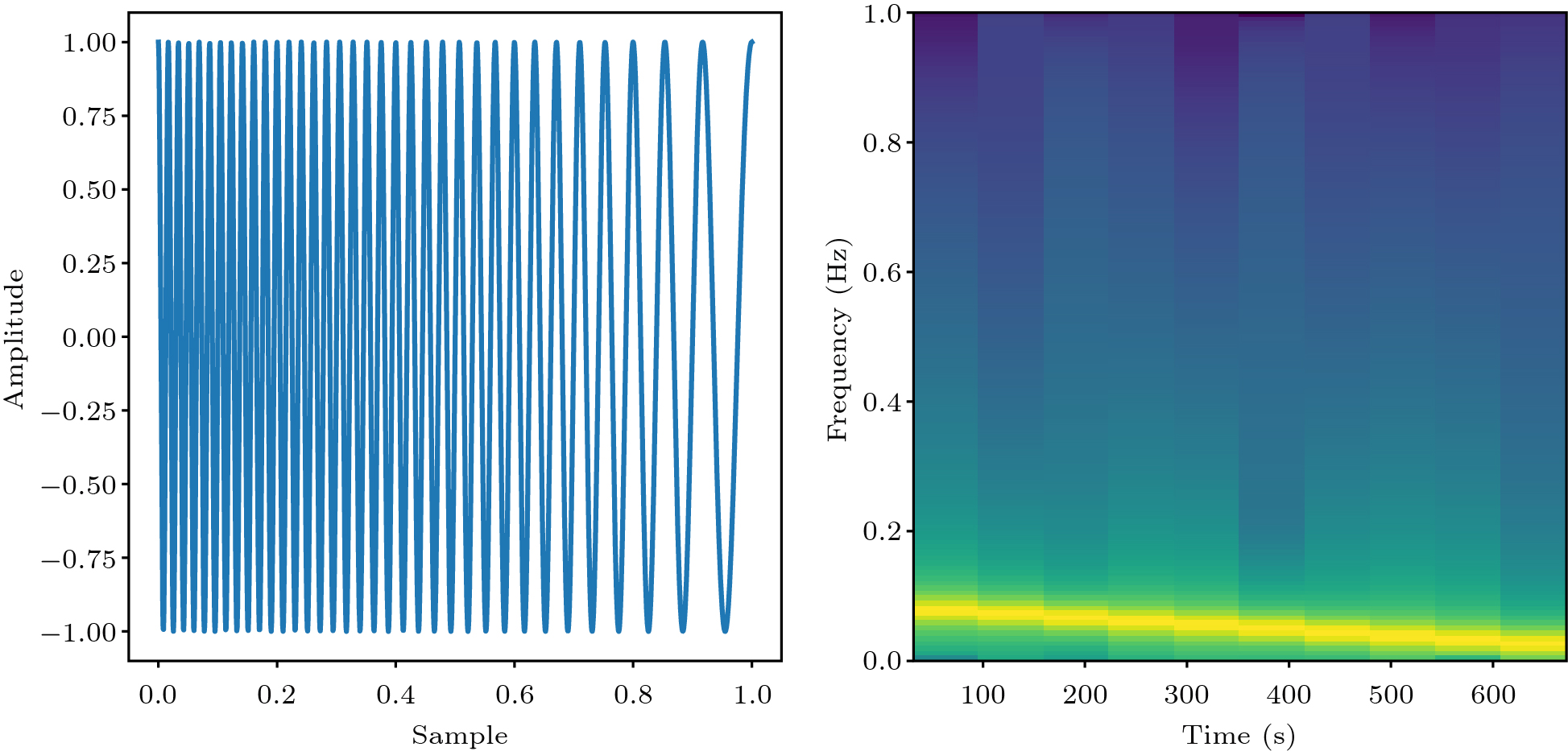

A chirp signal is a signal whose frequency increases (up-chirp) or decreases (down-chirp) as the signal changes. A linear chirp signal is expressed as

s(t)=A\cos\left(2{\text{π}}\left(\frac{f_{{\rm{min}}} t + kt^2}{2}\right)+\varphi\right) , where f_{{\rm{min}}} is the initial frequency, A is the maximum amplitude, \varphi is the initial phase, and k is the modulation coefficient or chirp tweet rate. During the sensing, the chirp signal is transmitted repeatedly, for which it is also called as the frequency modulated continuous wave (FMCW). The time and frequency domains of a chirp signal are shown in Fig.8. An auto-correlated chirp signal produces sharp and narrow peaks whose temporal bandwidth is inversely proportional to the signal bandwidth, a property also known as the pulse compression. Since the energy of the signal does not change during the pulse compression, the concentration of the signal power in a narrower time interval results in a peak signal-to-noise gain proportional to the product of the signal bandwidth and duration. Therefore, acoustic sensing systems using chirp signals are robust to dynamic channel conditions, such as Doppler effects, insensitive to Doppler effects, resistant to strong background noise or interference, and resilient to multipath fading.

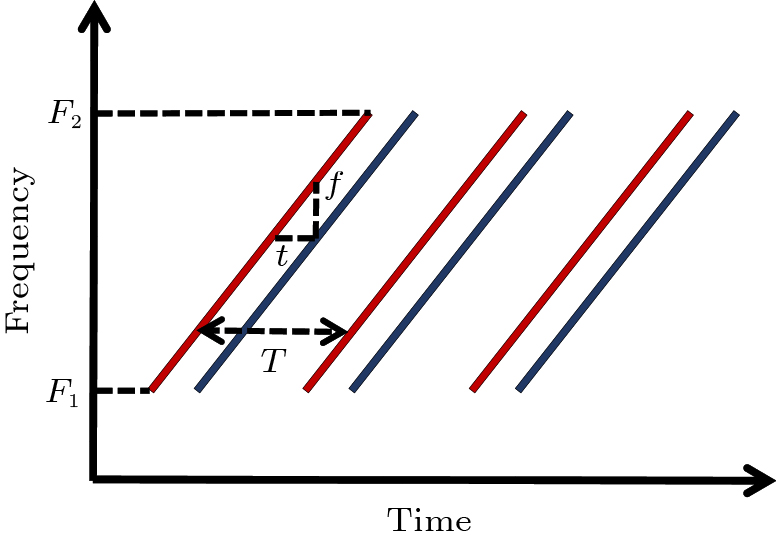

3.1.2 FMCW

FMCW is a frequency-modulated continuous wave. It transmits a chirp signal. The frequency of the signal increases linearly within a predetermined period. The signal touches the reflector of the environment and returns to the receiving end after a period of delay, so that the delay can be determined by comparing the frequencies of the received and transmitted signals. As shown in Fig.9, if f is the frequency difference, the time delay can be obtained as t = {f}/{k} , where k represents the slope of the line that can be obtained as k = ({F_2 - F_1})/{T} . F_2 and F_1 represent the upper and lower limits of the wave frequency, respectively, and T is the time of one cycle of the wave signal. Accordingly, the displacement of the target unit can be obtained from s = {v_t}/{2} and v represents the propagation speed of the wave in air. In the time domain, the FMCW can be formulated as follows:

b = A \cos 2{\text{π}} \left(\frac{F_{1}+F_{2}}{2} t + \frac{(F_{2}-F_{1})(t-N\times T)^2}{2T}\right), where A represents the amplitude of the wave and N means the number of cycles.

3.2 CIR

In WiFi, CSI is CFR (Channel Frequency Response) in the frequency domain. CIR (Channel Impulse Response) is obtained by IFFT (inverse fast Fourier transform).

In acoustics, CIR represents the propagation of acoustic signals under the combined effects of scattering, fading, and power attenuation of the transmitted signals. When the sound signal S(t) is transmitted through the loudspeaker, it propagates into the microphone through multipath and is accepted as R(t) . If h(t) is the CIR of the sound signal propagation channel, then R(t)=S(t)\times{}h(t) , where × is the convolution operator. Since the received acoustic signal is represented discretely, in the actual application scenario, the formula is R[n]=S[n]\times{}h[n] . In order to solve h[n] , the least squares channel estimation method is usually used. It is assumed that the speaker transmits the known signal {\boldsymbol{m}} =(m_1,m_2, ..., m_N), and the microphone receives the signal {\boldsymbol{y}}=(y_1, y_2, ..., y_N). For the length of the transmitted signal, a cyclic training matrix M is generated from the vector {\boldsymbol{m}} , where the dimension of M is P\times L , i.e., the vector {\boldsymbol{m}} is repeated P times to form the P rows of M, and the CIR is estimated as h=({\boldsymbol{M}}^{H}\;{\boldsymbol{M}}) ^{-1}{\boldsymbol{M}}^{H}\;{\boldsymbol{y_L}} (H means conjugate transpose), where {\boldsymbol{y}}_{L} = (y_{L+1}, y_{L+2},...,y_{L+P}),N = L+P. In order to satisfy the constraints, the lengths of L and P of the CIR need to be determined manually. Increasing L can estimate more channel states, but it may reduce the reliability of the estimation. For some practical applications of CIR, UltraGesture[85] provides a resolution of 7 mm, which is sufficient to identify slight finger movements. It encapsulates the CIR measurements into images with better accuracy than Doppler-based schemes, and it can run on commercial speakers and microphones that have already existed in most mobile devices without requiring any hardware modification. RobuCIR[86] uses a frequency hopping mechanism to mitigate frequency of the selective fading to avoid any signal interference. This high-precision CIR can recognize 15 gestures.

3.3 Doppler Shift

When there is a relative motion between the wave source and the observer, the frequency of the wave received by the observer is not the same as the frequency emitted by the wave source. The wave here can be a mechanical wave or an electromagnetic wave. As the wavelength is compressed, the frequency increases. Otherwise, the frequency decreases. Suppose that the observed frequency of the observer is f , the frequency of the wave source is f_s , the propagation velocity of the wave in the medium is c , the relative velocity of the wave source is v_r , and the velocity of the wave source is v_s . The formula for computing frequency f of the wave source is (in the following formula, “/” stands for “or”):

f = \left(\frac{c+ / -v_r}{c- / +v_s}\right). In specific applications, taking the gesture recognition based on the Doppler effect as an example, both microphone and the speaker are integrated in a smart device, where the speaker acts as a wave source to emit ultrasonic waves. Assume that the velocity of the gesture movement relative to the wave source is v_h , the propagation rate of the sound wave in the air medium is v , and the frequency of the sound wave emitted by the speaker wave source is f_s . When the hand is the receiver of the sound wave, the received sound wave frequency f_h can be expressed as follows (in the following formula, “/” stands for “or”):

f_h = \left(\frac{v+ / -v_h}{v}\right) \times f_s. The sound waves reflected by the movement of the hand and received by the microphone can be regarded as emitted by the hand. The hand is used here as a new wave source, and the microphone is used as the receiver. At this time, the receiving frequency difference \Delta f generated by the hand movement can be expressed as (in the following formula, “/” stands for “or”):

\Delta f = |f - f_s| = \frac{2v_h}{v - / + v_h} \times f_s. 3.4 MFCC

The Mel-Scale Frequency Cepstral Coefficient (MFCC) is one of the most commonly-used audio feature parameters[115]. For audio recognition, the most basic operation is to extract feature parameters from speech information. In other words, it is to extract the identifiable components from the audio information that can characterize the entire audio signal, and then to discard other information, such as background noise, which is the most basic audio feature extraction. The mel scale is a nonlinear feature that can be used to characterize the human ear's sensory judgment to sound changes at equal distances. Since the human ear's sensing of audio signals has nonlinear correlation characteristics, the relationship between the auditory sensing ability and its frequency satisfies the logarithmic expression when the speech signal exceeds 1 kHz. The corresponding relationship can be expressed as:

m = 2\;595\log_m\left(1 + \frac{f}{700}\right), where m represents the mel frequency of human auditory sensing, and f is the frequency (in Hz) of the audio signal. If the distribution of the mel scale is uniform, the gap between the actual frequencies becomes larger and larger. In the mel frequency domain, the human auditory sensing ability is obviously linearly related, indicating that the two audio frequencies are in the mel frequency domain. The audio feature parameter extracted according to the auditory sensing ability of the human ear has an excellent effect on distinguishing different key audios. The mel spectrum can be obtained by preprocessing the signal, framing, windowing, and Fourier transform, passing through the mel filter, and then performing logarithmic operations and discrete cosine transform (DCT).

3.5 Wireless Ranging

In HCI, it is often necessary to use a wireless signal to measure the distance between two objects or devices. The location algorithms for wireless sensor networks depend on a variety of distance measurement techniques. There are many factors that affect the accuracy of a location algorithm. Therefore, the selection of the infinite distance algorithm should be based on various applications, such as network structure, sensor density in the area, number of anchors, and geometry of the measurement area. However, the type of measurements and the corresponding accuracy fundamentally determine the accuracy of the location algorithm. Common wireless ranging technologies are time of arrival (ToA)/time of flight (ToF), time difference of arrival (TDOA), angle of arrival (AOA), phase difference, and RSSI attenuation models.

3.5.1 Time of Arrival (ToA)/Time of Flight (ToF)

ToA is the time at which a signal travels between the transmitter and the receiver.

Combining with the propagation speed v of the signal, the propagation distance d= v \times ToA can be calculated. In acoustics, v=344\;{\rm{ m/s}} . This method requires precise synchronization between the transmitter and the receiver to avoid measurement errors.

Due to the large environmental impact, the ToF range selected based on signal strength is unstable. In practice, when the speed of signal propagation in the medium is known, the signal propagation time (also called the flight time) is often used to measure the signal distance. There are three common ways to measure ToF, which are time synchronous measurement, signal reflection, and waves velocity difference.

Time Synchronous Measurement. Assuming precise time synchronization between the sender and the receiver, when the sender sends a packet to the receiver, the receiver records the arrival time of the signal after receiving the message, and obtains the ToF by subtracting the sending time stamp from the receiving time stamp. The pass distance is derived as d= c \times t , where d is the distance from the sender to the receiver, c is the speed at which the signal travels, and t is the measured flight time.

Signal Reflection. Since it is difficult to directly use ToF measurements, it has been proposed to use signal reflection to calculate the flight time in order to avoid the time synchronization between the transceiver and the receiver. This is usually done in two ways. The first method is the direct reflection of the transmitted signal, which uses the range finder as a reflector. For example, to measure ToF using FMCW as the transmission signal, this method requires that the transceiver can operate in full duplex mode and the ranging object has a certain volume. The second method is to use two devices as the sender and the receiver respectively, where the sender transmits the signal, the receiver receives it and waits for a period of time to return the same wave, and finally the sender records the time of reply to calculate the distance. The formula for the same is as follows:

d = (v \times (t_1 - t_0 - \Delta{t}))/2, where t_1 is the moment when the sender receives the reply from the receiver, t_0 is the time when the sender sends the signal and \Delta{t} is the time when the receiver returns the same wave. This method requires bidirectional communication between the sender and the receiver, which is divided into two parts—one-sided bidirectional ranging, which measures the distance from a single-sided round-trip communication, and two-sided bidirectional ranging, which reduces the one-sided bidirectional error. However, this method is still affected by the clock drift of both devices.

Waves Velocity Difference. This method uses the wave velocity difference between the two signals to measure the distance. The sender sends two different wireless signals at the same time, and then records the arrival time of the two signals at the receiver. Based on the different arrival time, the distance between the sender and the receiver can be calculated as follows:

d = \frac{v_r v_s (t_s - t_r)}{v_r - v_s}. In the above formula, r and s are two types of signals, t_r and t_s are the time when the two signals reach the receiving end respectively, and v_r and v_s are the corresponding speeds at which the two signals propagate respectively. The formula for calculating the distance can be simplified if the propagation speed of one signal is much smaller than that of the other. Assuming that the s-signal is much smaller than the r-signal, it can be simplified.

3.5.2 Time Difference of Arrival (TDOA)

In fact, TDOA is a modification of the ToA algorithm, which uses the time difference between the signals reflected by the object to be measured and the different receivers to analyze the distance difference.

Assuming that the time difference between the arrival of the two objects to be measured at different transmitting (receiving) sections is \Delta t , multiplying by the speed of signal transmission v , the travel distance difference \Delta d of the wireless signal to different base stations can be found. The TDOA algorithm is mainly divided into two cases: the first case is that to send data from the object to the receiver, the receiver receives the data, gets the time stamp, and then can calculate the distance difference between the two objects to be measured to each receiver. The other case is that all the transmitters send data to the object to be measured at the same time, and the time difference of the signal arrival is recorded by the object to be measured.

3.5.3 Angle of Arrival (AOA)

The core idea of AOA is to calculate the relative orientation of the receiving and transmitting nodes through a hardware device. It usually relies on multiple antenna arrays. In a multi-antenna array, for signals arriving at the antenna array at different angles, there will be a time difference between the individual antennas, which corresponds to the angle of arrival (AOA). There are three common methods to obtain the angle of arrival: the method using signal time delay estimation; the method using Multiple Signal Classification (MUSIC), and the method using beamforming (Beamforming).

Method Using Signal Delay. The time delay of the received signal of the array is determined, and the information of the angle of arrival is obtained by combining the propagation speed of the signal and the geometric distribution of the array. This method has more applications in acoustics, such as working[116, 117].

Method Using Multiple Signals. It is an algorithm based on subspace decomposition, which uses the orthogonality of the signal and noise subspaces to construct a spatial spectral function and estimate the parameters of the signal by spectral peak search. The basic idea is to decompose the covariance matrix of the output data of an arbitrary array into features, so as to obtain the signal subspace corresponding to the signal component and the noise subspace orthogonal to the signal component, and then to use the orthogonality of these two subspaces to estimate the parameters of the signal, such as the direction of incidence, polarization information and signal strength, and so on. An example is the Music method. This method has high directional accuracy, high resolution for the lateral direction of the signal within the antenna beam, is suitable for short data cases, and can be processed in real time using high-speed processing techniques, but when the wavelength is less than twice the high-frequency component of the array element spacing, the array element cannot receive the signal; and in radar systems, with the increasing requirements of anti-stealth and resolution of the target, the assumption of narrow-band signals is no longer in line with the actual situation.

Beamforming. This method uses antenna arrays to enhance signals in different directions, and detects signal strength information in different directions to determine the arrival angle. There are two common beam shaping methods, one is based on delay sum and the other is based on SRP (Steered-Response Power). After the arrival angle is obtained by the three methods mentioned above, the position of the point to be measured can be obtained by locating it with the theorem of triangle. For example, Gallo and Magone[118] suggested to associate the measured WiFi RSSI with the electronic compass data on a smartphone to calculate the angle of arrival of the smartphone signal and the movement of the smartphone.

3.5.4 Phase Difference

The received signal phase method uses the carrier phase (or phase difference) to estimate the distance[119]. This method is also known as the phase of arrival (POA)[120]. Assume that all transmitters emit a sinusoidal signal with a frequency of F and a phase offset of zero. In order to determine the phase of the signal received at the target point, the signal sent from each transmitter to the receiver requires a limited transmission delay. As shown in Fig.10, transmitter stations B to E are placed in a specific location in a fictional cubic building and T is the target location. The delay is expressed as a fraction of the wavelength of the signal as S_{i}(t) = {\rm{sin}} (2 {\text{π}} ft + \phi_{i}) , where I_{\phi_{i}} = {(2{\text{π}} f D_i)}/ {c} , i \in (B,C,D,E) and c is the velocity of light. As long as the wavelength of the transmitted signal is greater than the diagonal of the cubic building, i.e., 0 \leqslant \phi_{i} \leqslant 2{\text{π}}, the distance can be estimated as D_i = {c \phi_i}/({2\pi f}) .

For indoor positioning systems, the signal phase method can be used in combination with ToA/TDOA or the RSS method to fine-tune positioning. However, the receiving signal phase method needs to overcome the ambiguity of the carrier phase measurement. It requires LOS signaling; otherwise it will cause more errors in the indoor environment.

3.5.5 RSSI Attenuation Model

This ranging method is mainly used in WiFi. Path loss refers to the loss of radio signals in transmission. Generally, RSSI is affected by four factors: transmission power, path attenuation, reception gain and system processing gain. Therefore, the RSSI can be expressed as:

RSSI = Tx_{\rm Power}+Path_{\rm Loss}+Rx_{\rm Gain}+System_{\rm Gain}, where Tx_{\rm Power}, Path_{\rm Loss}, Rx_{\rm Gain}, and System_{\rm Gain} represent the four influencing factors of transmission power, path attenuation, reception gain, and system processing gain, respectively.

Due to the multipath effect, signals from different propagation paths have different delays and energy decay. Intuitively, the farther the distance, the lower the signal strength (it can also be seen in the free-propagation-based path consumption model in theory). The distance measurement using the signal strength is based on a free-space propagation path consumption model

1 , which is used to predict the strength of the received signal in a distance-of-sight environment without any obstacle between the receiver and the transmitter.For the propagation of wireless signals in free space, the path consumption has the same receiving and transmitting distance, which will cause power change loss in the distance of 100 m to 1000 m. In this scenario, the formula for calculating the received power in logarithmic terms is:

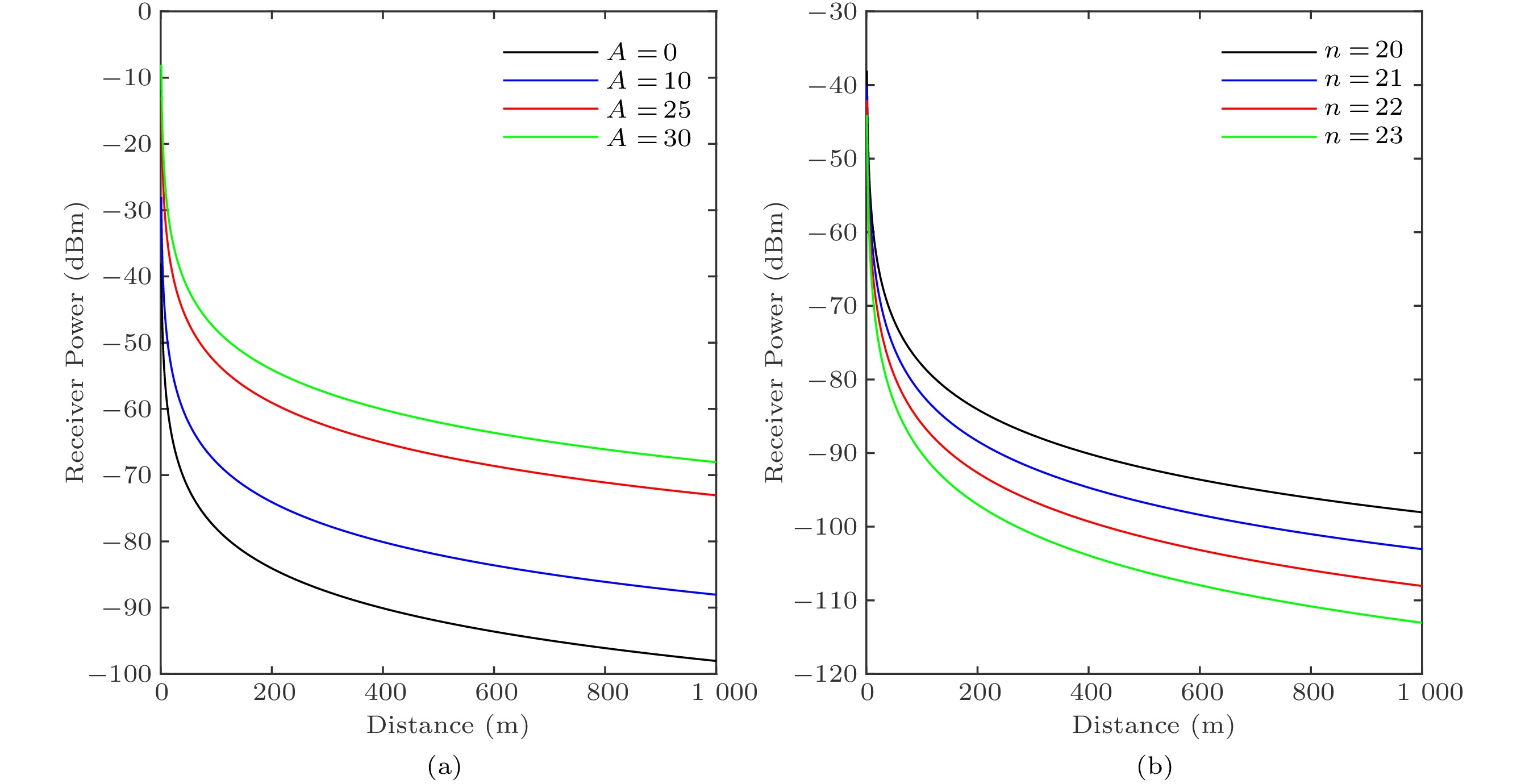

10\lg(P_r) = 10\lg(c_{0} P_t) - 10n\lg(r) = A - 10n\lg(r), where P_r and P_t are the receiving power and the transmitting power of the wireless signal respectively, r is the distance between transceivers, c_0 is a constant related to antenna parameters and signal frequency, and n is the propagation factor whose value depends on the environment in which the wireless signal is propagated. Since the transmission power is known, A-10n\lg(r) can be viewed as the power to transmit 1 m long-time received signal. From the above formula, the values of constants A and n can be obtained, which determine the relationship between the received signal strength and the signal transmission distance.

As shown in Fig.11, if the signal propagation factor n is fixed, the intensity of the wireless signal decreases rapidly when it travels near the field, and slowly and linearly when it travels long distances. When A is fixed, the smaller the attenuation of the signal in the propagation process, the longer the signal can travel①. The RSSI measurement uses the theoretical or empirical loss of the signal propagation model, calculates the distance between the receiver and the receiver through the path distance formula, and calculates the signal loss during the transmission.

To sum up, if we know n and A received when the wireless transceiver nodes are together for 1 m, we can calculate the distance. Generally, these two values are empirical, which are closely related to the used hardware nodes and the environment in which wireless signals are propagated. Therefore, before ranging, these two empirical values must be calibrated well in the application environment. The accuracy of the calibration is directly related to the accuracy of ranging and positioning. Therefore, measuring distances using RSSI can encounter the instability of RSSI, which can be smoothened by designing filters, e.g., the mean filter and the weighted filter.

3.6 Wireless Tracking

Visually, tracking can achieve the goal of tracking a target by constantly calculating its location. However, in practice, we can achieve the effect of tracking without calculating the specific location. As long as the initial position of the target is known, tracking and positioning of the target can be achieved continuously. The tracking technology can use the signal characteristics of frequency, phase, etc. of the object to be measured to know the change in its spatial position, so as to achieve the purpose of tracking. In target tracking, often the object to be measured is tracked based on the change in distance. In the field of acoustics and WiFi, three tracking methods are commonly used: Doppler tracking, FMCW (Frequency Modulated Continuous Wave) tracking, and signal phase tracking.

3.6.1 Doppler Tracking

Based on the Doppler effect, the velocity of motion of the receiver can be calculated as follows:

v = \frac{f-f_0}{f_0} c = \frac{\Delta f}{f_0} c, where f_0 denotes the frequency at which the source emits sound and c denotes the speed of sound. \Delta f denotes the frequency change produced by the Doppler effect. In the time window, the frequency domain information of the signal can be obtained by SSFT (short time Fourier transform), which can then be used to obtain the frequency of the signal. If the original signal is fixed, then it can be obtained as \Delta f .

The variation in the distance of the receiver's motion can be obtained by integrating the velocity with time, that is d = \int_{0}^{T} v {\rm{d}}t , where T represents the time from the beginning to the end of the receiver motion. If the initial location of the recipient is known, the final location of the target can be calculated to track the target.

3.6.2 FMCW Tracking

The basic principle of FMCW (Frequency Modulated Continuous Wave) is to emit a frequency wave continuously whose frequency increases or decreases periodically. The frequency of FMCW periodically increases (from f_{{\rm{min}}} to f_{{\rm{max}}} ) or decreases (from f_{{\rm{max}}} down to f_{{\rm{min}}} ). FMCW tracking can be conducted in two ways. The first way is to measure ToF by using the frequency offset from the mixing of the reflected and transmitted signals, and then to measure the distance between the source and the reflected object. The second way is to measure the change in distance by the frequency difference between the received and the transmitted signals.

If the first method is used for distance measurement, an equipment is required to eliminate the emitted strong signal when receiving the reflected signal, so as to avoid its interference to the latter. The distance measurement by using the second method requires precise clock synchronization between the transmitting and receiving devices. These two methods are difficult to implement in actual scenarios. We can avoid the precise time synchronization and analyze the change in distance by using the FMCW signal received by the receiving device and virtually sending the signal to track the movement.

3.6.3 Signal Phase Tracking

Phase location tracking is a common method in the positioning and tracking of the Internet of Things. Especially in recent years, a series of phase-based positioning and tracking methods have emerged in many fields of research. The phase-based tracking methods will be improved continuously, and its basic principle is to measure the phase change of a signal. Assume that the fixed frequency signal sent by the signal source is R(t)=A\cos(2{\text{π}} f t) , the signal propagates through path p , and the length of the propagation path changes with time to d_p(t) . Then, the received sound signal via path p can be expressed as:

R_p(t) = A_p(t)\cos\left(2{\text{π}} ft - \frac{2{\text{π}} f d_p(t)}{c}-\theta_{p}\right), where A_p(t) is the amplitude of the received signal, {2\pi fd_p(t)}/{c} is the phase offset caused due to the propagation, and c is the sound speed. \theta_{p} is the phase offset caused by hardware delay, half-wave loss due to the reflection, etc., which can be considered constant and does not change with time. If the phase information can be obtained from the received signal R_p(t) , the change of the propagation path length d_p(t) can be obtained, and the receiver's motion path can be tracked.

If multiple sound sources with different frequencies are used to send sound waves at different locations, the spatial position of the device can be calculated based on the distance between the device and different sound sources over a period of time when the starting location is known, so as to achieve high-precision positioning and tracking.

4. Application

4.1 Behavior Recognition and Tracking

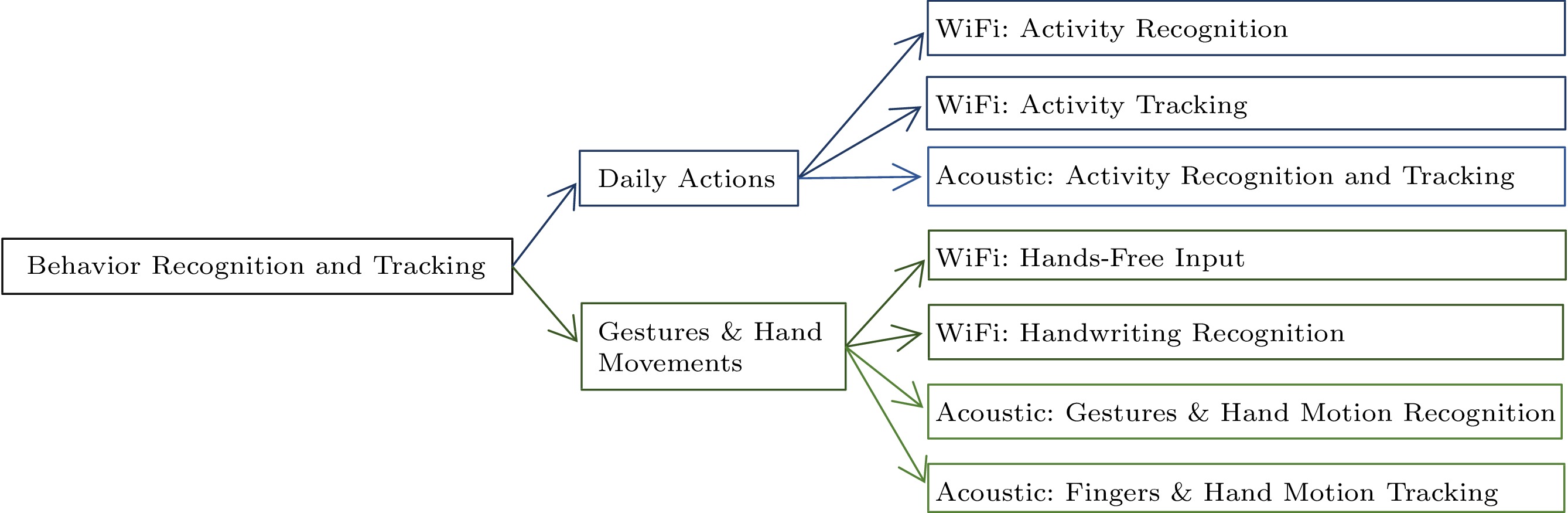

The framework for Subsection 4.1 is shown in Fig.12.

4.1.1 Daily Activity

Human action recognition is one of the important research contents in intelligent application. Daily behavior detection consists of activity recognition and tracking. Activity recognition refers to the classification and recognition of human behaviors according to certain algorithms, such as the deep learning methods by measuring certain signal data generated by human while performing various actions. Tracking is more about tracking the activity process and trajectory through physical methods. By accurately identifying and tracking the human behavior, the quality of human-computer interaction can be improved and the scope of the intelligent application can be expanded. It is the future development trend of intelligent life, which will have great application prospects and economic value for the research of smart home, intelligent teaching, and medical assistance. According to Pedretti and Early[116], daily behaviors mainly include eating, bathing, dressing, going to the bathroom, walking and such other behaviors. Nowadays, there is a growing trend towards intelligent home, in which WiFi and acoustic play a crucial role, which makes many applications to realize human behavior perception emerge. The action recognition and tracking based on WiFi and acoustic sensing are introduced below respectively.

1) Activity Recognition

In the last decade, activity recognition was one of the hottest research topics in wireless sensing. Focusing on large human movements, activity recognition also facilitates exciting applications, such as motion detection, fall detection, and multi-activity classification where multiple activities are combined for detection. Different activities identify different targets with different emphasis. Next are three common types of applications.

Some applications aim to detect the presence of moving objects. Traditional methods, like [43], use a predetermined threshold to distinguish such motion-induced burst signals. However, since wireless signals are sensitive to the environment and environmental changes, they require different calibrations for different environments. WiDetect[42] uses statistical theory to model the signal state in a scattering-rich environment. For example, WiBorder[44] analyzes CSI conjugate multiplication in detail and uses a sensing boundary determination method. Therefore, it can accurately determine whether a person has entered into a given place.

Some applications aim to detect specific actions of everyday activities. Unlike their predecessors, these applications need a distinction that clearly distinguishes between specific actions and others. A typical example of such an application is the fall detection. Falling is a major threat to the life and health of elderly people. Timely detection and rescue of falling can greatly reduce the consequences of falling. Han et al.[9] proposed WiFall, which was the first to propose a WiFi-based fall detection mechanism. In this system, the Local Outlier Factor (LOF) algorithm is used to detect the outlier data in the CSI stream and the one-class Support Vector Machine (SVM) is used to identify the descent action. After that, more and more researchers began to pay attention to this problem. For example, RT-Fall[10], FallDeFi[12] and DeFall[13] have made use of more powerful data processing methods and achieved better detection performance. More recently, researchers[14-17] have proposed new solutions to solve the three major challenges of fall detection: user-related, environment-dependent, and motion interference. For example, since the AOA (angle of arrival) reflected by the human body does not depend on the environment and the subject, AFall[14] proposes to use the Dynamic-Music algorithm[56] to model the relationship between human falls and changes in the AOA of WiFi signals reflected by the human body. Therefore, when the environment changes slightly, the performance of falling can remain stable.

There are also applications that detect multiple activities in daily life. This type of applications is related to the category of activities. In general, the extracted features are different for different scenarios with different activity contents. TW-See[48] is a human activity recognition method based on wall-penetrating passive CSI. The method tracks six human activities: walking, sitting, standing, falling, arm swinging, and boxing. The method uses two key techniques for tracking. First, the inverse Robust PCA (OR-PCA) method is applied to obtain the correlation between human activities and CSI value changes, and then a normalized variance sliding window method is applied to segment the OR-PCA waveform of human actions. On the other hand, Wi-Motion[45] divides a human motion into macroscopic and microscopic motions. It uses a human motion detection method based on CSI amplitude and phase information, which minimizes the random phase offset and uses different signal processing methods to obtain clean datasets.

In many previous studies, machine learning models were used to classify signals by directly comparing them. For example, WiSee[46] and CARM[41] convert the wireless signal into the frequency domain, then get environment-independent DFS (Doppler Frequency Shift) (representing motion speed information), and finally use the model to classify. However, more and more recent studies have been done to improve the robustness of the environment. EI[38] and DeepMV[39] combine DNN with domain discriminator to extract the general characteristics of activities. Alternatively, in-depth learning models are also combined to identify activities. For example, DeepSense[37] proposes a HAR method based on CSI and in-depth learning. The proposed activities (walking, standing, lying, running and empty running) were classified by using a long-term recursive convolution network of self-encoders with an accuracy of 97.4%. Chen et al.[40] proposed a CSI macro activity recognition method based on deep learning. This method divides macro-activities into six categories: fall, walk, sit, lie down, stand up and run, and uses two-way long and short memories (ABLSTM) to learn the representative characteristics of the original CSI from two directions.

2) Activity Tracking

In WiFi, both the large motion and the small motion tracking take place. The large motion tracking mainly includes the calculation of steps, human position tracking, the number of people derived from human position calculation and other activity tracking applications. The tracking of minor movements mainly involves lip reading. Location-aware studies focus on the coordinates of the target people, population counts, and the number of people in a specific area.

In the application of step counting, the corresponding signals from legs or feet may be covered by stronger signals reflected from the trunk. Therefore, it is difficult to track steps directly. WiStep[55] identifies walking modes through time-frequency analysis, separates the leg-induced signals from the trunk-induced signals using wavelet transform, and calculates steps using a peak detection method. WiStep's step count accuracy in the two-dimensional space is higher than 87.6%.

Since WiFi sensing enables non-inductive localization and tracking, human localization has attracted a lot of researchers' attention in the field of WiFi human sensing. In the application of human position tracking, many studies have proposed the use of CSI for indoor localization and activity recognition (see Subsection 4.3.2 for details). As human location has attracted more and more attention, WiFi wireless sensing has also emerged into applications derived from human locations. Examples include crowd counts that focus on the exact number of people in a particular place. Since CSI provides more fine-grained channel information (i.e., amplitude and phase information) through multiple subcarriers, it can be known from the work [35] that the number of subjects has different impact on the amplitudes of CSI data on different subcarriers. More people can induce higher CSI variance through WiFi links. An integrated crowd management system was proposed in [35]. Unlike previous studies, the proposed system uses existing WiFi traffic and uses robust semi-supervised learning to estimate population density, population count, walking speed and direction. The method can be easily extended to new environments. The human intrusion described in Subsection 4.4.3 is also a derived application of human localization.

In addition, human micro-motion tracking is also used. For example, the Smokey[47] system uses CSI to detect smoking by monitoring different smoke-related actions, such as holding, lifting, sucking, dropping, inhaling and exhaling. It is also evaluated in an indoor environment with multiple users and achieves good performance. Human tiny motion tracking based on CSI can be extended to sense lip movements. WiHear[33] uses a directional antenna to send WiFi signals in the direction of the target user's face and recognizes 14 syllables and 32 words with an accuracy of 91%. WiTalk[34] obtains mouth movement information by measuring DFS based on the scene of lip reading while making a phone call, and uses DTW to classify the 12 syllables. The relative position between the phone and the mouth is constant where the user is or which direction he/she is facing. The accuracy of WiTalk is higher than 82.5%.

Compared with acoustic, the current research on WiFi in daily behavior recognition is more in-depth and extensive. But the recognition and tracking of daily behavior based on sound wave sensing also involves some aspects. Early work SoundSense[73] is the first general-purpose sound sensing system specifically designed for mobile phones by modeling sound events on mobile phones and monitoring people's daily activities, such as walking, driving, riding in cars, and so on, demonstrating its ability to identify meaningful sound events that occur in the user's daily life. The extracted acoustic features are the processed phase, signal strength, and frequency and bandwidth, which are then classified into specific categories using decision trees and Markov models. The feature extraction relies on human knowledge and experience. BodyScope[74] uses a wearable activity recognizer based on commercial headphones, extracts MFCC of the captured sound and then uses SVM to classify and monitor oral movements, such as eating, laughing, talking, and so on. In EarSense[75], it was found that the actions of the teeth, i.e., tapping and sliding, create vibrations in the jaw and skull, and these lineups are strong enough to propagate to the face and create a vibrational signal at the earphones. Six different tooth movements can be detected in real time by analyzing the two headphone signals. HearFit[76] turns smart speakers into active sonar, designs a fitness detection method based on Doppler frequency shift, and uses short-term energy to segment fitness actions as fitness quality guidance, which can assist to improve fitness and prevent injuries. It can detect 10 movements with and without dumbbells and give accurate statistics in various environments. In a recent study, Liang and Thomaz[77] proposed an audio-based activity recognition framework that can leverage millions of embedded features from public online video sound clips. Based on the combination of oversampling and deep learning methods, this framework does not require further feature processing or outlier filtering like previous work. Fifteen daily activities of 14 participants achieved an average recognition accuracy of 64.2% and 83.6% in Top-1 and Top-3, respectively. The work[78] performs unsupervised tracking of daily household activities through acoustic sensing, a system that uses captured sound to identify shifts in typical activities without the need for activity tags. It relies on sound embedding, through pre-trained models and a new dimensionality reduction algorithm, and applies dynamic time warping for pattern matching. The accuracy reported in this paper reached a precision of 0.99 and a recall of 0.95.

4.1.2 Gestures and Hand Movements

Gestures and hand movements are the most important ways for human interaction. From the technical route, gesture recognition is mainly based on machine learning or deep learning after extracting the wireless signal characteristics. Such applications are data-driven and focus on identifying the contents of hand movements. For finger-hand motion tracking, physical modeling is the key to its motion process tracking, and the focus also includes the content of the action at each moment in the process itself, which provides more flexible functions to support a variety of human-computer interaction programs. The following first describes the practical applications of WiFi-based and sonic-based sensing in the areas of gestures and hand movements. Gesture/hand movement recognition in WiFi work is mainly divided into two parts: hands-free input and handwriting recognition.

1) WiFi: Hands-Free Input

The gesture recognition technology is an important means of human-computer interaction, which is crucial to the development of human-computer computing. Traditional gesture recognition methods include computer vision, infrared recognition and dedicated sensors. However, computer vision methods are easily limited by light conditions, and infrared and sensor recognition are complex to deploy, inconvenient to carry, and expensive also. Therefore, they cannot well meet the application scene of smart home. As WiFi-based motion sensing is gradually maturing, WiFi-based hand motion recognition has attracted widespread attention.

In the application of recognizing freely changing gestures, the wireless signal changes are much smaller than other behavior recognition signals as the hand and finger gestures are smaller than the body movements. Such small signal changes can easily be masked by strong signal changes caused by other parts of the person's body or moving objects. Extracting these changes is not a simple task. Therefore, researchers often limit the interaction range to a small space to amplify the hand and finger signals. WiG[20] is an early work on WiFi gesture perception, which extracts four features from CSI amplitude to train an SVM classifier to distinguish four common gestures (i.e., right, left, push and pull). The authors of WiKey[18] observed that each keystroke has a unique CSI pattern due to different gestures. Therefore, the keystroke recognition accuracy rate reaches 93.47%. However, the system is very sensitive to the relative position changes between the user and the device. Then, Mudra[19] implements accurate detection of finger-level gesture signals independent of the position. Mudra performs this with the help of interference cancellation techniques based on the differences in received signals between antennas at different positions. In the past two years, researchers[21-29] also proposed various solutions to the challenges of environment, user or motion interference in gesture recognition in the WiFi field. For example, WiHF[23] improves the seam carving algorithm to extract motion change patterns in real time to provide a solution for motion changes. [25] establishes a WiFi frequency theoretical model to demonstrate that the commonly-used motion velocity and motion direction features are position-dependent, and address the environment dependence by extracting two position-independent features.

2) WiFi: Handwriting Recognition

Existing work on handwriting recognition mainly uses wireless tracking in wireless signals. WiDraw[30] uses an over-the-air handwriting recognition method. This method utilizes the AOA of the wireless signal at the receiver and has an average accuracy of 91% for multiple writing.

There are some problems in gesture recognition. The most important requirement is to distinguish gestures accurately, where a major challenge is that hand-induced signals are much lower than those in other parts of the body. Existing systems often deploy sensors near their hands. However, application scenarios are limited. With this in mind, researchers can use the directional antenna and beamforming technology to enlarge the hand space like mmASL[117], or to explore more robust signal models, such as CSI-quotient[32]. The second challenge is to extract distinguishable features. Since gesture modeling is relatively difficult, we expect to use more data-driven models in future.

3) Acoustic: Gesture and Hand Motion Recognition Based on Acoustic

Since sound wave sensing is more fine-grained than WiFi and other sensing methods, it is more suitable for more complex and accurate fine-grained action recognition and tracking, such as gesture recognition and hand motion tracking. It has been widely investigated and applied. The application and related work are introduced below in detail from the two aspects of gesture (mainly finger motion) recognition and hand motion tracking. Human gestures and hand movements are the main ways for interaction. The frequency shift caused by the Doppler effect is the most common and direct method in the recognition technology based on sound wave sensing. Consider a person writing in air next to a smart device, such as a mobile phone as an example. The speaker of the mobile phone emits modulated ultrasonic waves. Due to the Doppler frequency shifting effect caused by the human writing, the frequency of the sound wave received by the mobile phone microphone will change. Such systems usually include steps, such as data acquisition and preprocessing, short-time Fourier transform, feature extraction, and classification and recognition. In addition to the Doppler frequency offset, there are other methods based on FMCW and CIR combining the distance between the target and the sound source. DopLink[79], AirLink[121], etc. are through the connection or interaction between device and device, relying on the user to hold the device and wave it to complete the pairing, information transfer and action recognition between devices. Compared with relatively distant and coarse-grained hand motion recognition, tighter and finer-grained finger gesture motion recognition is increasingly important in human-computer interaction. The Dolphin system designed by Yang et al.[80] uses the built-in speaker and microphone to transmit and receive continuous 21 kHz ultrasonic signals, extract the frequency-domain features related to the Doppler effect, and use machine learning models to achieve up to the recognition of 17 volley gestures? SoundWrite[122] and SoundWrite II[83] describe handwritten features using amplitude spectral density and some other acoustic features, such as MFCC, and use KNN to match the captured features with labeled features in the database. Using the ZC sequence, a periodic pulse signal, as the acoustic signal for sensing gestures, VSkin[81] enables touch gesture sensing on all surfaces of the mobile device, not just the touch screen area, by measuring the amplitude and phase, which use structure-borne sound (that is, the sound that travels through the structure of the device) and air-borne sound (that is, the sound that travels through air) to sense finger taps and movements, enabling fine-grained gestures on the back of the mobile devices based on induced acoustic signals. Wang et al.[123] proposed a dynamic speed warping (DSW) algorithm, based on the observation that the gesture type is determined by the trajectory of the hand component rather than the movement speed, by dynamically scaling the velocity distribution and tracking the movement distance of the trajectory. It can match gesture signals from different domains with ten-fold speed differences, achieving 97% accuracy using only one training sample for each gesture type from four trained users.

Regarding some recent research advances, the gesture recognition pursues more fine-grained, higher accuracy, smaller training set size, and more goals. With the development of techniques, such as the transfer learning[124], few-shot learning[125], and generative adversarial networks[126], these techniques have also been applied to the field of gesture recognition. In [127] a transfer learning based convolutional neural network was used for gesture recognition, whose accuracy is better than those of the existing work on sign language digits and Thomas Moeslund's gesture recognition datasets. In [128], EMG was used for the recognition of learning gesture of small samples. Although it is different from sound-based sensing, its method is easy to reuse on the spectrogram obtained by sound-wave sensing. In [129], a generative adversarial network GAN was applied, and a scene transfer network was developed, which not only uses the real samples of the scene, but also uses real samples from another available scene to generate virtual samples to train and test a small sample dataset on the mmWave-based data and test platform. Although the carriers and methods are different, the above methods also have some inspirations for gesture recognition based on sound wave sensing. Furthermore, Ultragesture[85] is based on the channel impulse response (CIR). CIR measurements can provide a resolution of 7 mm, which is sufficient to identify slight finger movements. It encapsulates CIR measurements into images with better accuracy than Doppler-based schemes, and it can run on commercial speakers and microphones that have already existed in most mobile devices without requiring any hardware modification. RobuCIR[86] uses a frequency-hopping mechanism to avoid signal interference by mitigating frequency-selective fading. This high-accuracy CIR work can recognize 15 gestures. AMT[130] defines a new concept of primary echo to better represent the target motion by using multiple speaker-microphone pairs, which perform multi-point localization of actions, detect primary echoes and filter out secondary echoes, eliminate target bulge multipath effects instead of assuming them as particles, improve tracking accuracy, and achieve multi-target tracking at the centimeter level. Aimed at the challenge of adaptively responding to expected movements instead of unexpected ones in real-time tracking movements systems of gesture recognition, Amaging[87] gives an independent sensing dimension of acoustic two-dimensional hand forming images. Amaging has multiplicative expansion of sensing capabilities and two-dimensional parallel hand shape and gesture-trajectory recognition, and its hand shape imaging performance and immunity to mobile interference have been verified through experiments and simulations.

4) Acoustic: Finger and Hand Motion Tracking Based on Acoustic

The next issue is about the finger and hand motion tracking. Physical modeling is the key to tracking its motion process. Motion tracking provides more flexible functions to support various human-computer interaction programs. The system designed by Yun et al.[131] transforms signals into an inaudible frequency band at different frequencies, and uses Doppler shift to estimate the speed and distance of hand movement. CAT[132] analyzes FMCW of the acoustic signal and converts the time difference mapping into frequency shifts for further improving the tracking accuracy without requiring any precise synchronization. EchoTrack[133] measures the distance from the hand to the speaker array embedded in a smart phone via the chirp's Time of Flight (ToF). A unique triangular geometry is generated from the speaker array and hand to localize the gesture. Doppler shift compensation and trajectory correction are used to improve the trajectory accuracy. LLAP[84] uses a commercial smartphone to achieve motion tracking at the millimeter level by measuring the phase change in the sound signal caused by the gesture movement, and converting the phase change into the distance of movement. FingerIO[134] also uses commercial smartphones only. Using the orthogonal frequency division multiplexing in wireless frequency division multiplexing, the OFDM technology enables finer-grained finger tracking, and finally prototypes a smartwatch-shaped finger I/O device to demonstrate that it can extend the interaction space to 0.5\; {\rm{m}} \times 0.25\; {\rm{m}} on both sides of the device area of 0.25\; {\rm{m}}^2 . It works well even when fingers are completely occluded. Strata[135] estimates the channel impulse effect (CIR) in order to explicitly account for multipath propagation, and to select well-behaved channels and extract the phase change in the selected channel signal to accurately estimate the distance change of the finger, and uses a new optimization framework to estimate the absolute distance of the finger according to the change in CIR. The core work of Vernier[82] is calculated with a small signal window phase transition, whose number of local maxima corresponds to the number of cycles of the phase transition, removes complex frequency analysis and long windows of signal accumulation, and significantly reduces tracking delay and overhead. The evaluated results show that its tracking error is less than 4 mm, and the speed is also faster. The phase-based approach is improved by a factor of 3. Lu et al.[136] designed a tracking system for a conventional computer without a touch screen, emitting inaudible acoustic signals from the two speakers of the laptop, and then analyzed the energy of the acoustic signal received by the microphone features and Doppler shifts to track the hand motion trajectories. For more complex indoor environments, it is sometimes difficult for acoustic-based methods to achieve accurate motion tracking due to the multipath fading and limited sampling rates of the mobile devices. PAMT[137] defines a new parameter, namely the multipath effect ratio (MER), to represent the effect of the multipath fading on the received signals at different frequencies, and develops a new multipath effect mitigation technique based on MER and the phase-based acoustic motion tracking method PAMT, by using multiple speakers to calculate the phase change in the acoustic signal and track the corresponding moving distance. The measurement errors of one-dimensional and two-dimensional scenes on an Android smartphone are less than 2 mm and 4 mm, respectively.

4.2 Health Caring

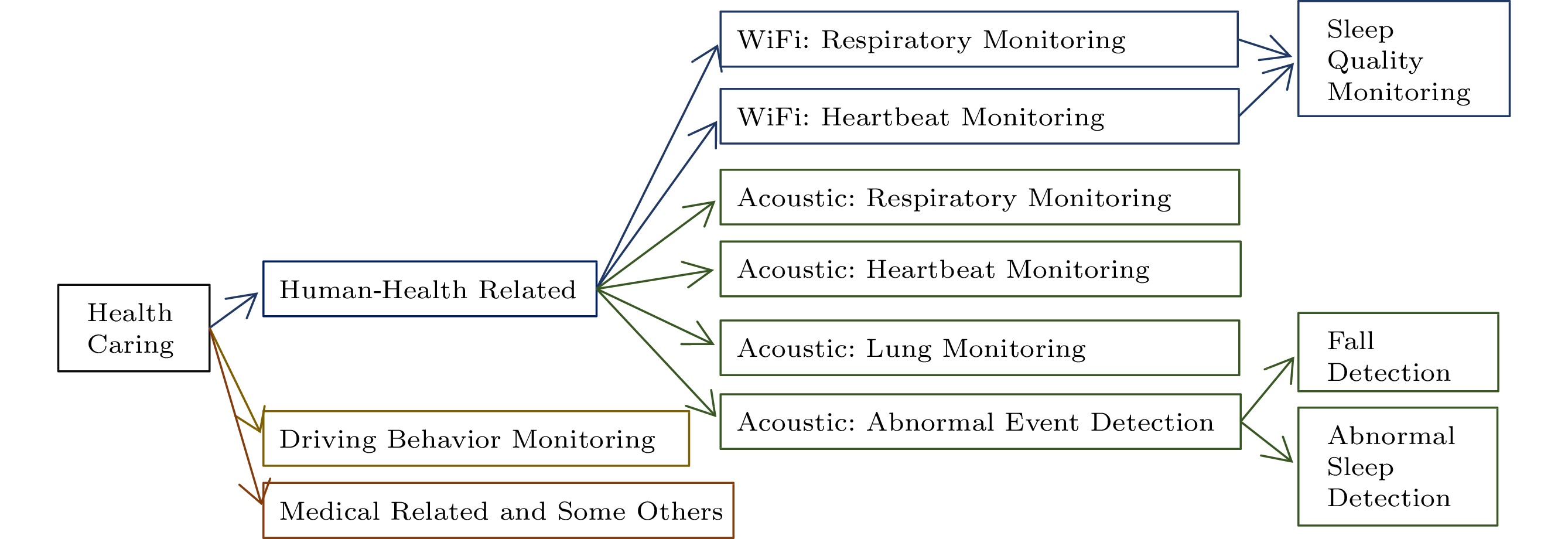

The framework of Subsection 4.2 is shown in Fig.13.

Health concerns mainly include attention to human physiological indicators and detection of driving behaviors. Human physiological indicators include hundreds of items, such as heart beat, breathing, and blood pressure, which can reflect the health of the human body. Traditional methods, such as camera-based methods (e.g., distance PPG[138]) and sensor-based methods (e.g., geophone[139, 140]), can accurately track vital signs. However, these methods require either bright lighting or complex installation and maintenance. In contrast, new sensing methods based on wireless signals have become more attractive due to their low cost, no contact and easy deployment. Next, we will introduce the related work and applications of WiFi-based sensing and sound-based sensing for relative health, driving behavior monitor, and other medical aspects.

4.2.1 Health Related

In the monitoring of life signs of human physiological indicators, their accurate monitoring is conducive to timely understanding of physical conditions, especially for elderly people. Since the detection of breathing and heartbeat in wireless sensing is the detection of subtle chest movements, the work based on WiFi sensing mainly includes the followings.

Respiratory monitoring systems usually use peak detection to calculate respiratory rates based on repetitive patterns of chest movements. Wang et al.[49] designed a Fresnel zone based model for monitoring human breathing without training, which is robust to different locations and directions. Zeng et al.[32] extended the monitoring range to 8 meters using the CSI quotient model. In the last few years, there has been some work on breath detection on smartphones using Nexmon firmware such as WiPhone[50], MoBreath[51]. On the other hand, with the emergence of the COVID-19 disease, there are some jobs that are also focusing on it. For example, Wi-COVID[141] monitors COVID-19 patient respiration rate (RR) via WiFi and tracks RR for medical providers. Due to the high rate of transmission of the COVID-19 disease, healthcare systems around the world are potentially under-resourced to help large numbers of patients at once; non-critical patients are suggested to self-isolate at home. Wi-COVID offers novel, rapid and safe solution to detect and rapidly report patient symptoms to healthcare providers.

Wu et al.[5] extended the breath detection from sleep to standing position for still body detection. WiSleep[4] is the first CSI-based method to monitor the respiratory rate of a person during sleep. Liu et al.[6] proposed a system to detect vital signs and posture during sleep by tracking CSI fluctuations caused by small human movements, and to detect the respiratory rate of one or two people in bed. Similarly, PhaseBeat[8] uses DWT for exercise separation and simultaneously monitors breathing and heartbeat with accuracy of 0.5 bpm and 1 bpm, respectively.

Health-related applications based on acoustic mainly rely on the detection of physiological conditions, such as the capture of human breathing, heartbeat, and so on, or some event monitoring, such as the fall detection, abnormal sleep behaviors, and so on, as well as medical-related assistance control to assist body recovery, etc. Larson et al.[88-91, 142] captured physiological signals, such as human respiration, heartbeat, vital capacity, chest wall motion, and some other non-voice sounds, such as swallowing during eating. Based on these physiological signals, Nahdakumar et al.[142] turned a cell phone into an active sonar system that emits modulated sound signals and detects breathing-induced minute movements of the chest and abdomen from reflexes, and developed the identification of various types of sleeps from sonar reflexes algorithms for apnea events, including obstructive apneas, central apneas, and hypopneas. In [89] inaudible acoustic signals were used to accurately monitor heartbeats, by using only common commercially available microphones and speakers, through transmission sound signals and their reflections on the human body to identify the heart beat rate and heartbeat rhythm, and generate an acoustic electrocardiogram (ACG). Based on this, many subsequent studies also emphasized on capturing heartbeat features by using machine learning techniques to classify the required research. The work[91], continuous multi-person respiration tracking using an acoustic-based COTS device, employing a two-stage algorithm to separate and recombine respiration signals from multiple paths in a short period of time, can distinguish the respiration of at least four subjects within a distance of three meters. SpiroSmart[88] uses a built-in microphone for spirometry. SpiroSonic[90] measures the human chest wall motion through acoustic sensing and interprets it as an index of the lung function based on clinically validated correlations.

Audio equipment can also identify events and perform event detection through inaudible sound signals, such as fall detection[143]. Modulated ultrasonic waves are emitted through speakers, reflected signals are recorded by microphones to detect Doppler shifts caused by events, and features are extracted from the spectrogram to represent fall patterns that are distinguishable from normal activities. Afterwards, SVD (singular value) and K-means algorithms are used to reduce the data feature dimensions and cluster data. The final detection and recognition are accomplished by different methods, such as the Hidden Markov Model for training classification, or modeling each fall through the Gaussian mixture model. In addition, the speakers and microphones on smart devices, such as smartphones, can also collect specific, but distinguishable, body movements and sound signals that accompany each sleep stage of a person. Using the data, it is possible to construct the sleep and wake state, and daily sleep quality. A model that can detect abnormal sleep behaviors (see [142]), and some more detailed sleep events, including snoring, coughing, rolling over, getting up, etc. (see [144]). The recent work Apnoea-Pi[92] presents an open-source surface acoustic wave (SAW) platform to monitor and recognize apnoea in patients. The authors[92] argued that Thin-film SAW devices outperform standard and off-the-shelf capacitive electronic sensors in response and accuracy for human respiration tracking purposes. Combined with embedded electronics, it provides a suitable platform for human respiratory monitoring and sleep disorder identification.

4.2.2 Driving Behavior Monitor

Driver fatigue is a major cause of road accidents, which lead to serious injury, and even to death. The existing work on fatigue detection mainly focuses on the visual and electroencephalogram (EEG)-based detection methods. However, vision-based methods suffer from visual blocking or visual distortion problems, while EEG-based systems are intrusive that inherently bring uncomfortable driving sensations, which may further worsen the driver fatigue. In addition, these systems are expensive to install.