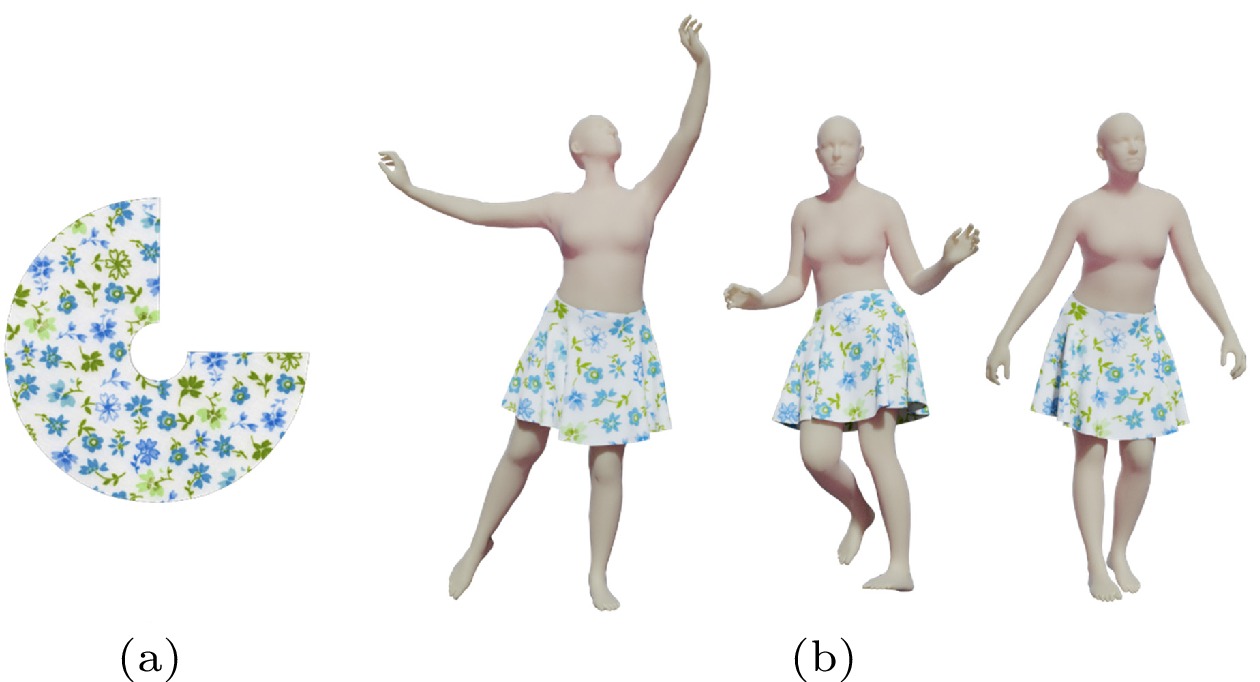

Template-based representation that shares the same texture information, yielding a consistent texture for the composed clothing animation. (a) Garment template with texture information. (b) Texture-consistent garment animation.

Figures of the Article

Learning-based garment animation workflow that translates the input body motion into clothing dynamics. The workflow is efficient, spatially and temporally consistent, easy to control, and able to handle garments whose topology differs from that of the body.

Overview of our method. (a) Data generation. (b) Garment synthesis network. (c) Runtime stage.

Detailed architecture of our garment synthesis network as discussed in Subsection 3.3.

We employ a refinement procedure to remove penetrations between the garment and the body. (a) Penetrations between the data-driven clothing deformations and the underlying body. (b) Results of our collision removal process. (c) Collision removal results from [5]. Details are enlarged to show the differences.
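
The refinement procedure itself is described in the paper body, not in this caption. As a rough illustration of what such a step can look like, the sketch below implements a common generic post-process, not necessarily the one used in this work. Each garment vertex is compared with its nearest body vertex, and if it lies behind that vertex's outward normal (closer than a margin `eps`), it is pushed out along the normal. The function name `remove_penetrations`, the margin value, and the brute-force nearest-neighbour search are all assumptions made for illustration.

```python
import numpy as np

def remove_penetrations(garment_v, body_v, body_n, eps=0.002):
    """Generic penetration fix (hypothetical helper, not the paper's method).

    garment_v: (G, 3) garment vertex positions
    body_v:    (B, 3) body vertex positions
    body_n:    (B, 3) unit outward body vertex normals
    eps:       assumed safety margin in scene units
    """
    garment_v = np.asarray(garment_v, dtype=float).copy()  # never modify the input
    body_v = np.asarray(body_v, dtype=float)
    body_n = np.asarray(body_n, dtype=float)

    # Brute-force nearest body vertex for every garment vertex (G x B distances).
    d2 = ((garment_v[:, None, :] - body_v[None, :, :]) ** 2).sum(axis=-1)
    nearest = d2.argmin(axis=1)
    b = body_v[nearest]
    n = body_n[nearest]

    # Signed distance of each garment vertex along the nearest body normal.
    signed = ((garment_v - b) * n).sum(axis=-1)
    inside = signed < eps

    # Move offending vertices along the normal so their signed distance becomes eps.
    garment_v[inside] += (eps - signed[inside])[:, None] * n[inside]
    return garment_v
```

With a single body vertex at the origin whose normal is +z, a garment vertex at z = -0.01 is moved to z = 0.002, while a vertex at z = 0.5 is left unchanged. A production version would replace the O(G·B) search with a spatial index and test against body triangles rather than vertices.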

Template-based representation that shares the same texture information, yielding a consistent texture for the composed clothing animation. (a) Garment template with texture information. (b) Texture-consistent garment animation.
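
Because every synthesized frame keeps the template's vertex count and ordering, the template's texture coordinates and faces can be reused unchanged for every frame. The sketch below shows this idea by writing one frame as an OBJ string. It assumes one UV coordinate per vertex, which is natural for a mesh built from flat sewing-pattern panels. The helper name `frame_to_obj` and the OBJ export are illustrative choices, not part of the described workflow.

```python
def frame_to_obj(frame_vertices, template_uvs, template_faces):
    """Serialize one frame as OBJ text, reusing the template's UVs and faces.

    frame_vertices: list of (x, y, z) positions predicted for this frame
    template_uvs:   list of (u, v) per-vertex texture coordinates (assumed per-vertex)
    template_faces: list of 0-based vertex-index triples from the template
    """
    if len(frame_vertices) != len(template_uvs):
        raise ValueError("frame must have the same vertex count and order as the template")
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in frame_vertices]
    lines += [f"vt {u:.6f} {v:.6f}" for u, v in template_uvs]
    # OBJ indices are 1-based; position and UV share an index because UVs are per-vertex.
    lines += ["f " + " ".join(f"{i + 1}/{i + 1}" for i in face) for face in template_faces]
    return "\n".join(lines) + "\n"
```

Calling it once per frame with the same `template_uvs` and `template_faces` produces a sequence in which the texture stays attached to the same garment points throughout the animation.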

Garment template used in our experiments. (a) Garment pattern connected by sewing lines. (b) Simulated garment on the body mesh.

Evaluation of the network's hyper-parameters, using RMSE (root mean squared error) as the metric. Different settings yield different reconstruction errors. We train the network with different values of (a) seq_len and (b) emb_size, where emb_size is expressed as $2^n$. The network performs best with seq_len = 6 and emb_size = $2^8 = 256$.
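
The caption does not define the exact RMSE formula. The sketch below assumes a common variant: the root of the mean squared Euclidean distance between predicted and simulated vertex positions, taken over all vertices and frames. It also lists candidate embedding sizes written as powers of two, as in the sweep. The name `garment_rmse` and the range of `n` are assumptions chosen for illustration.

```python
import numpy as np

def garment_rmse(pred, gt):
    """RMSE between predicted and simulated garments (assumed definition).

    pred, gt: arrays of shape (frames, vertices, 3).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    if pred.shape != gt.shape:
        raise ValueError("prediction and ground truth must have identical shapes")
    sq_dist = ((pred - gt) ** 2).sum(axis=-1)  # squared Euclidean distance per vertex
    return float(np.sqrt(sq_dist.mean()))      # mean over frames and vertices, then root

# Candidate embedding sizes of the form 2**n (illustrative range: 32 ... 1024).
emb_sizes = [2 ** n for n in range(5, 11)]
```

If every vertex is offset by (3, 4, 0) from the ground truth, `garment_rmse` returns 5.0. Training one model per entry of `emb_sizes` and comparing these values reproduces the kind of sweep shown in panel (b).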

Reconstruction performance of different methods. (a) Ground truth. (b) Ours. (c) Wang et al. [2]. (d) RNN [9]. (e) GRU [7]. (f) LSTM [10]. Details are enlarged to show the differences.

Visualization of reconstruction errors using a difference map. Per-vertex errors of the predicted garments are color-coded, with hot colors indicating large errors and cold colors indicating small errors. (a) Ground truth (simulated garments). (b)–(f) Predictions by our method, Wang et al. [2], RNN [9], GRU [7], and LSTM [10], respectively.
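
A difference map of this kind can be produced by computing each vertex's distance to its simulated counterpart and mapping that distance onto a cold-to-hot color ramp. The sketch below uses a simple linear blue-to-red blend. The helper name `error_colors`, the color ramp, and the normalization are assumptions; the figure's actual colormap is not specified.

```python
import numpy as np

def error_colors(pred, gt, max_err=None):
    """Per-vertex error colors from blue (small error) to red (large error).

    pred, gt: (V, 3) vertex positions of one predicted and one simulated frame.
    max_err:  error mapped to pure red; defaults to the largest error in this frame.
    Returns (errors, rgb): errors has shape (V,), rgb has shape (V, 3) in [0, 1].
    """
    errors = np.linalg.norm(np.asarray(pred, float) - np.asarray(gt, float), axis=1)
    scale = errors.max() if max_err is None else max_err
    # Normalize errors to [0, 1]; an all-zero error frame stays fully "cold".
    t = np.clip(errors / scale, 0.0, 1.0) if scale > 0 else np.zeros_like(errors)
    cold = np.array([0.0, 0.0, 1.0])  # blue
    hot = np.array([1.0, 0.0, 0.0])   # red
    rgb = (1.0 - t)[:, None] * cold + t[:, None] * hot
    return errors, rgb
```

To compare methods side by side, as in panels (b)–(f), the same `max_err` should be passed for every method. Otherwise each map would be normalized to its own worst vertex and the colors would not be comparable.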

Garment animation synthesized by our workflow for a motion sequence not used in training. The workflow infers how the garment deforms in response to the body motion.

Synthesized garments on unseen poses.

Others

External link to attachment: https://rdcu.be/dxI8E
